By Fredrik Dahlgren

Many engineers choose Rust for implementing cryptographic protocols because of its robust security guarantees. Although Rust makes safe cryptographic engineering easier, there are still some challenges to be aware of. Among them is the need to preserve constant-time properties, which ensure that, regardless of the input, code will always take the same amount of time to run. These properties are important in preventing timing attacks, but they can be compromised by compiler optimizations.

Recently, a client asked us how packaging a library as an npm module using wasm-pack and then running it using node would affect constant-time cryptographic code implemented in Rust. Writing constant-time code is always a fight against the optimizer, but in this case, the code would be optimized twice before being executed, first by LLVM and then by the Turbofan JIT compiler. How would this affect the constant-time cryptographic code used by the library?

We ran a number of small test cases to explore how optimizing the codebase twice would affect the constant-time properties of the code. This post will focus on the challenges of implementing constant-time Rust code and show that LLVM may introduce a new side-channel when compiling constant-time code to WebAssembly (Wasm). In part 2, we look at whether it is possible to selectively disable optimizations for security-critical code that needs to execute in constant time.

Constant-time what?

Cryptography is difficult to implement correctly. This is true whether you are implementing high-level protocols or low-level cryptographic primitives. Apart from worrying about overall correctness and about edge cases that could expose secrets in unexpected ways, you also have to worry about potential side-channel leakage and timing attacks.

A timing attack is an attempt to exploit the fact that the application’s execution time may subtly depend on the input. If the application makes control flow-related decisions based on secret data, like the seed for a random number generator or a private key, this could ever so slightly affect the execution time of the application. Likewise, if secret data is used to determine which location in memory to read from, this could cause cache misses, which in turn would affect the execution time of the application. In both cases, information about the secret data is leaked through timing differences during the execution of the program.
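Here is a simple illustration of the control-flow case: checking a secret authentication tag. The functions below are our own example, not code from the library discussed in this post. `leaky_eq` returns at the first mismatching byte, so its running time reveals how long the matching prefix is. `ct_eq` visits every byte and folds the differences into one accumulator. Lengths are assumed to be public. As the rest of this post shows, `ct_eq` only stays branch-free if the compiler cooperates.

```rust
/// Hypothetical example: early-exit comparison. The loop stops at the first
/// mismatch, so execution time depends on how many leading bytes match.
pub fn leaky_eq(a: &[u8], b: &[u8]) -> bool {
    if a.len() != b.len() {
        return false; // lengths are treated as public
    }
    for (x, y) in a.iter().zip(b.iter()) {
        if x != y {
            return false; // secret-dependent early exit
        }
    }
    true
}

/// Hypothetical example: branch-free comparison of equal-length inputs.
/// Every byte is visited and the differences are OR-ed together, so the
/// loop does not depend on the contents of the inputs.
pub fn ct_eq(a: &[u8], b: &[u8]) -> bool {
    if a.len() != b.len() {
        return false; // lengths are treated as public
    }
    let mut diff: u8 = 0;
    for (x, y) in a.iter().zip(b.iter()) {
        diff |= x ^ y; // accumulate differences without branching
    }
    diff == 0
}
```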

To prevent such timing differences, cryptography engineers typically avoid implementing decisions based on secret data. However, in situations in which code needs to make decisions based on secret data, there are clever ways to implement them in constant time, that is, in a way that always executes in the same amount of time regardless of the input. For example, consider the following function, which performs a conditional selection between variables a and b in Rust.

```rust
#[inline]
fn conditional_select(a: u32, b: u32, choice: bool) -> u32 {
    if choice { a } else { b }
}
```

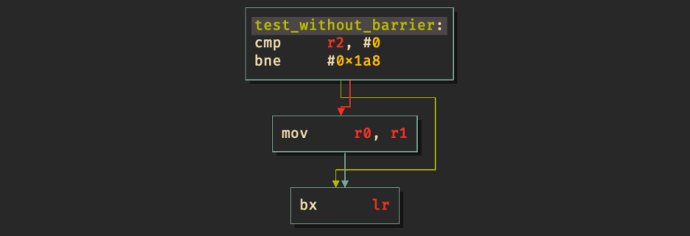

The function returns a if choice is true and b otherwise. Depending on the compiler toolchain and the targeted instruction set, the compiler could choose to implement the conditional selection using a branching instruction like jne on x86 or bne on ARM. This would introduce a timing difference in the execution of the function, which could leak information about the choice variable. The following Rust implementation uses a clever trick to perform the same conditional selection in constant time.

```rust
#[inline]
fn conditional_select(a: u32, b: u32, choice: u8) -> u32 {
    // if choice = 0, mask = (-0) = 0000...0000
    // if choice = 1, mask = (-1) = 1111...1111
    let mask = -(choice as i32) as u32;
    b ^ (mask & (a ^ b))
}
```

Here, we make no decisions based on the secret value choice, which means that there is only one path through the function. Consequently, the execution time will always be the same.
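The sketch below checks that the branch-free version computes the same result as the branching one. It compares them for both values of choice and makes the mask explicit. `select_branching`, `select_masked`, and `mask_for` are our own illustrative names. The sketch also shows that the trick assumes choice is exactly 0 or 1: any other value yields a mask that mixes bits from a and b.

```rust
/// Branching version from the text (renamed so both fit in one file).
#[inline]
fn select_branching(a: u32, b: u32, choice: bool) -> u32 {
    if choice { a } else { b }
}

/// Branch-free version from the text; `choice` must be 0 or 1.
#[inline]
fn select_masked(a: u32, b: u32, choice: u8) -> u32 {
    // choice = 0 -> mask = 0x0000_0000, choice = 1 -> mask = 0xFFFF_FFFF
    let mask = -(choice as i32) as u32;
    // mask = 0: b ^ 0 = b; mask = all ones: b ^ (a ^ b) = a
    b ^ (mask & (a ^ b))
}

/// Hypothetical helper exposing the mask derived from `choice`.
fn mask_for(choice: u8) -> u32 {
    -(choice as i32) as u32
}
```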

Fighting the compiler

Ideally, this should be the end of the story, but in practice, there are risks inherent to this approach. Since the compiler has no concept of time, it doesn’t view timing differences as observable behavior. This means that it is free to rewrite and optimize constant-time code, which could introduce new timing leaks into the program. A carefully written constant-time implementation like the one above could still be optimized down to a branching instruction by the compiler, which would leak the value of choice!

This feels like an impossible situation. If this is really the case, the compiler is actually working against us to break our carefully crafted constant-time implementation of conditional_select. So what could we do to stop the compiler from optimizing the function and potentially breaking the constant-time properties of the code?

The most obvious solution is the nuclear option: turning off all optimizations and compiling the entire codebase with the -C opt-level=0 flag. This is almost always an untenable solution, however. Cryptographic code typically handles huge amounts of data, which means that it needs all the optimizations it can get from the compiler. A more attractive solution is to try to stop the compiler from optimizing sensitive code paths using so-called optimization barriers. The subtle crate uses the following construction to try to keep LLVM from optimizing constant-time code paths.

```rust
#[inline(never)]
fn black_box(input: u8) -> u8 {
    unsafe { core::ptr::read_volatile(&input as *const u8) }
}
```

Here, the call to core::ptr::read_volatile tells the compiler that the memory at &input is volatile and that it cannot make any assumptions about it. This call functions as an optimization barrier that stops LLVM from “seeing through the black box” and realizing that the input is actually a boolean. This in turn prevents the compiler from rewriting boolean operations on the output as conditional statements, which could leak timing information about the input. The Rust Core Library documentation has the following to say about core::ptr::read_volatile:

“Rust does not currently have a rigorously and formally defined memory model, so the precise semantics of what ‘volatile’ means here is subject to change over time. That being said, the semantics will almost always end up pretty similar to C11’s definition of volatile.”

This doesn’t seem very reassuring, but remember that timing differences are not viewed as observable by the compiler, so the compiler is always free to rewrite constant-time code and introduce new side-channel leaks. Any attempt to stop the compiler from doing so is bound to be on a best-effort basis until there is built-in language and compiler support for secret types. (There is a Rust RFC introducing secret types, but this has been postponed, awaiting LLVM support.)
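Libraries usually do not call the barrier at every use site. Instead, secret bits are routed through it once, when they are wrapped in a dedicated type. Every later operation then works on a value the compiler cannot see through. The sketch below illustrates that pattern in the spirit of the subtle crate’s Choice type. The `Choice` struct and `select_u32` function here are simplified, hypothetical stand-ins, not subtle’s actual API.

```rust
/// Barrier from the text: a volatile read that LLVM cannot see through.
#[inline(never)]
fn black_box(input: u8) -> u8 {
    unsafe { core::ptr::read_volatile(&input as *const u8) }
}

/// Hypothetical, simplified secret-boolean type. The only way to build one
/// is through `From<u8>`, which routes the value through `black_box`.
#[derive(Clone, Copy, Debug)]
pub struct Choice(u8);

impl From<u8> for Choice {
    #[inline]
    fn from(input: u8) -> Choice {
        debug_assert!(input == 0 || input == 1, "Choice must be 0 or 1");
        Choice(black_box(input))
    }
}

/// Hypothetical helper: mask-based selection driven by a `Choice`.
#[inline]
pub fn select_u32(a: u32, b: u32, choice: Choice) -> u32 {
    let mask = -(choice.0 as i32) as u32;
    b ^ (mask & (a ^ b))
}
```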

Let’s see what happens with the conditional_select function if we compile it without an optimization barrier. To better illustrate this, we will target an instruction set that lacks conditional instructions like cmov. (Instruction sets like x86_64 and aarch64 do have such instructions, which allow the compiler to optimize the function without breaking the constant-time properties of the implementation.) The following function simply calls the constant-time version of conditional_select to return either a or b.

```rust
pub fn test_without_barrier(a: u32, b: u32, choice: bool) -> u32 {
    let choice = choice as u8;
    conditional_select(a, b, choice)
}
```

By compiling the function for the ARM Cortex M0+ (which is used by the Raspberry Pi Pico), we get the following decompiled output.

We see that the compiler has replaced our carefully crafted conditional selection with a simple branch on the value of choice (in r2), completely destroying the constant-time properties of the function! Now, let’s see what happens if we insert an optimization barrier.

```rust
pub fn test_with_barrier(a: u32, b: u32, choice: bool) -> u32 {
    let choice = black_box(choice as u8);
    conditional_select(a, b, choice)
}
```

Looking at the corresponding disassembly, we see that it consists of a single basic block, resulting in a single path through the function, independent of the value of choice. This means that we can be reasonably sure that the function will always run in constant time.
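To reproduce the experiment, the pieces from this section can be combined into a single file. The sketch below only checks that both variants return the same values on the host. Assertions cannot show whether the generated code is constant time; that still requires inspecting the disassembly. For the Cortex M0+, the relevant Rust target is thumbv6m-none-eabi, which needs a no_std library build.

```rust
/// Optimization barrier from the text (volatile read through a pointer).
#[inline(never)]
fn black_box(input: u8) -> u8 {
    unsafe { core::ptr::read_volatile(&input as *const u8) }
}

/// Constant-time selection from the text; `choice` must be 0 or 1.
#[inline]
fn conditional_select(a: u32, b: u32, choice: u8) -> u32 {
    let mask = -(choice as i32) as u32;
    b ^ (mask & (a ^ b))
}

/// Without a barrier, LLVM can see that `choice` is 0 or 1 and may emit a branch.
pub fn test_without_barrier(a: u32, b: u32, choice: bool) -> u32 {
    let choice = choice as u8;
    conditional_select(a, b, choice)
}

/// With a barrier, LLVM cannot see that `choice` came from a bool.
pub fn test_with_barrier(a: u32, b: u32, choice: bool) -> u32 {
    let choice = black_box(choice as u8);
    conditional_select(a, b, choice)
}
```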

So what about Wasm?

Now, let’s come back to the original problem. Our client was running code compiled from Rust to Wasm using node. This means that the library is first compiled to Wasm by LLVM and then compiled again by node’s Turbofan JIT compiler. We expect LLVM to respect the optimization barriers inserted by libraries like the subtle crate, but what about Turbofan?

To see how the codebase would be affected, we compiled the test_with_barrier function defined above using wasm-bindgen and wasm-pack. We then dumped the code generated by the Turbofan JIT and examined the output to see whether the optimization barrier remained and whether the constant-time properties of the implementation had been preserved.

The following code is the result of compiling our example using wasm-pack and dumping the resulting Wasm in text format using wasm2wat. (We annotated some of the functions and removed some sections related to wasm-bindgen to make the code more readable.)

```wat
(module
  (type (;0;) (func (param i32) (result i32)))
  (type (;1;) (func (param i32 i32 i32) (result i32)))
  (func $black_box (type 0) (param $input i32) (result i32)
    (local $__frame_pointer i32)
    global.get $__stack_pointer
    i32.const 16
    i32.sub
    local.tee $__frame_pointer ;; push __stack_pointer - 16
    local.get $input
    i32.store8 offset=15       ;; store input at __stack_pointer - 1
    local.get $__frame_pointer
    i32.load8_u offset=15)     ;; load output from __stack_pointer - 1
  (func $test_without_barrier (type 1)
    (param $a i32) (param $b i32) (param $choice i32) (result i32)
    local.get $a
    local.get $b
    local.get $choice
    select)
  (func $test_with_barrier (type 1)
    (param $a i32) (param $b i32) (param $choice i32) (result i32)
    local.get $b
    local.get $a
    i32.xor                    ;; push a ^ b
    i32.const 0
    local.get $choice
    i32.const 0
    i32.ne                     ;; push input = choice != 0
    call $black_box            ;; push output = black_box(input)
    i32.const 255
    i32.and                    ;; push output = output & 0xFF
    i32.sub                    ;; push mask = 0 - output
    i32.and                    ;; push mask & (a ^ b)
    local.get $b
    i32.xor)                   ;; push b ^ (mask & (a ^ b))
  (table (;0;) 1 1 funcref)
  (memory (;0;) 17)
  (global $__stack_pointer (mut i32) (i32.const 1048576))
  (export "memory" (memory 0))
  (export "test_without_barrier" (func $test_without_barrier))
  (export "test_with_barrier" (func $test_with_barrier)))
```

We see that black_box has been compiled down to a simple i32.store8 followed by an (unsigned) i32.load8_u from the same offset. This initially looks like it could be optimized away completely since the memory is never read outside black_box.

We also see that test_with_barrier has not been optimized across the call to black_box. The function still performs a branchless conditional selection controlled by the output from the optimization barrier. This looks good and gives us some confidence that the constant-time properties provided by the subtle crate are preserved when targeting Wasm. However, as soon as the Wasm module is loaded by node, it is passed off to the Liftoff and Turbofan JIT compilers to optimize the code further.

To investigate how this affects our small example, we load the compiled Wasm module using JavaScript and dump the trace output from Turbofan using node. This can be done by passing the --trace-turbo flag to the node runtime. The trace generated by node can then be viewed in the Turbolizer web GUI (which can be found in the V8 repository).

Turbolizer can be used to analyze each step of the Turbofan compilation pipeline. Here, we are interested only in what the emitted assembly code looks like for a given function. Looking at the output for test_with_barrier, we see that no optimizations are performed across the call to black_box at offset 2c. The output is essentially identical to the decompiled Wasm code above.

```
B0:
  0   push rbp
  1   REX.W movq rbp,rsp
  4   push 0x8
  6   push rsi
  7   REX.W subq rsp,0x18
  b   REX.W movq rbx,[rsi+0x2f]
  f   REX.W movq [rbp-0x18],rdx
  13  REX.W movq [rbp-0x20],rax
  17  REX.W cmpq rsp,[rbx]
  1a  jna B2 <+0x4a>
B1:
  20  cmpl rcx,0x0                ;; rax = choice? 1: 0
  23  setnzl bl
  26  movzxbl rbx,rbx
  29  REX.W movq rax,rbx
  2c  call 0x7ffc2400fa31         ;; call to black_box(rax)
  31  movzxbl rbx,rax             ;; rbx = -black_box(rax)
  34  negl rbx
  36  REX.W movq rdx,[rbp-0x20]   ;; rdx = a ^ b
  3a  xorl rdx,[rbp-0x18]
  3d  andl rbx,rdx                ;; rbx = rbx & rdx
  3f  REX.W movq rax,[rbp-0x18]   ;; rax = b ^ (rbx & (a ^ b))
  43  xorl rax,rbx
  45  REX.W movq rsp,rbp
  48  pop rbp
  49  retl                        ;; return rax
B2:
  4a  REX.W movq [rbp-0x28],rcx
  4e  call 0x7ffc2400fa7b
  53  REX.W movq rsi,[rbp-0x10]
  57  REX.W movq rcx,[rbp-0x28]
  5b  jmp B1 <+0x20>
  5d  nop
  5e  nop
```

It is also interesting to see what the Turbolizer output for black_box looks like. Looking at the emitted assembly for black_box, we see that apart from setting up the local stack frame, the function simply stores the input to memory and immediately loads it back (at offsets 14 and 18) before returning.

```
B0:
  0   push rbp
  1   REX.W movq rbp,rsp
  4   push 0x8
  6   push rsi
  7   REX.W movq rbx,[rsi+0x17]
  b   REX.W movq rdx,[rsi+0x67]
  f   movl rdx,[rdx]
  11  subl rdx,0x10
  14  movb [rdx+rbx*1+0xf],al          ;; store input to memory
  18  movzxbl rax,[rdx+rbx*1+0xf]      ;; load output from memory
  1d  REX.W movq rsp,rbp
  20  pop rbp
  21  retl
```

You may be surprised that this function is not inlined or optimized away by Turbofan. Since there is nothing in Wasm that corresponds to the volatile read in Rust, there is really no reason for Turbofan to keep black_box around anymore. However, since black_box writes to memory, it is not side-effect free, so the JIT compiler cannot simply optimize it away.

Introducing a new side-channel

The fact that the compiled version of black_box writes the input to memory before returning it is actually somewhat surprising. Since black_box takes a value as input and read_volatile takes a reference as input, LLVM needs to turn the input value into a reference somehow. When compiling for architectures like x86 or ARM, LLVM can simply use the address of the input on the stack. The Wasm stack is not addressable in this way, so LLVM has to write the input to linear memory (the shadow stack tracked by the __stack_pointer global) to be able to reference it. All of this means that LLVM leaks the secret value we wanted to protect with an optimization barrier into Wasm memory. Moreover, the compiled Wasm code above shows that this memory is exported by the Wasm module, which means that it can be read from JavaScript. If we call the exported test_with_barrier function and examine the memory before and after the call, we can see that the secret value passed to black_box is now accessible from JavaScript.

```javascript
const path = require('path').join(__dirname, 'ct_wasm.wasm');
const bytes = require('fs').readFileSync(path);

// Load Wasm module from file.
const wasmModule = new WebAssembly.Module(bytes);
const wasmInstance = new WebAssembly.Instance(wasmModule);
const wasmMemory = new Uint8Array(wasmInstance.exports.memory.buffer);
const testWithBarrier = wasmInstance.exports.test_with_barrier;

// __stack_pointer defined by the Wasm module.
const stackPointer = 1048576;

// Print memory[__frame_pointer + 15] before call to black_box.
const before = wasmMemory[stackPointer - 1];
console.log("Before the call to black_box: " + before);

// Call test_with_barrier which calls black_box with secret value 1.
testWithBarrier(123, 456, 1);

// Print memory[__frame_pointer + 15] after call to black_box.
const after = wasmMemory[stackPointer - 1];
console.log("After the call to black_box: " + after);
```

Running this small test produces the following output, showing that the secret value passed to black_box is indeed leaked by the program.

```
❯ node js/ct_wasm.js
Before the call to black_box: 0
After the call to black_box: 1
```

Since the purpose of the black_box function is to protect the code from optimizations based on secret values, every value that goes into black_box is sensitive by definition. This is not a good situation.
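The mechanism can be modeled in a few lines of Rust. This is a simulation, not real Wasm. `LinearMemory` and `black_box_lowered` are hypothetical names. The 17-page memory, the initial __stack_pointer of 1048576, and the 16-byte frame with offset 15 are taken from the wasm2wat output above. The compiled black_box neither clears its stack slot nor moves __stack_pointer. As a result, the secret byte is still at __stack_pointer - 1 after the call, which is exactly the address the JavaScript test reads.

```rust
/// Hypothetical model of a Wasm module's exported linear memory.
pub struct LinearMemory {
    pub bytes: Vec<u8>,
    /// Mirrors the module's `__stack_pointer` global.
    pub stack_pointer: usize,
}

impl LinearMemory {
    pub fn new(size: usize, stack_pointer: usize) -> Self {
        LinearMemory { bytes: vec![0; size], stack_pointer }
    }
}

/// Hypothetical re-enactment of the compiled `black_box`:
/// frame_pointer = __stack_pointer - 16, store8 at frame_pointer + 15,
/// then load8_u from the same address. The stack pointer is not updated
/// and the slot is not cleared afterwards.
pub fn black_box_lowered(mem: &mut LinearMemory, input: u8) -> u8 {
    let frame_pointer = mem.stack_pointer - 16;
    mem.bytes[frame_pointer + 15] = input; // i32.store8 offset=15
    mem.bytes[frame_pointer + 15] // i32.load8_u offset=15
}
```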

Using a different optimization barrier

There have been some discussions in the Rust Cryptography Interest Group about defining a new Rust intrinsic based on this C++ optimization barrier. The corresponding Rust implementation would then look something like the following (here using the now deprecated llvm_asm macro).

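```rust
// Requires a nightly toolchain with #![feature(llvm_asm)] at the crate root.
#[inline(never)]
fn black_box(mut input: u8) -> u8 {
    // Empty asm that claims to read and modify `input` in a register ("+r").
    unsafe { llvm_asm!("" : "+r"(input) : : : "volatile"); }
    input
}
```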

After recompiling the codebase with wasm-pack and decompiling the resulting Wasm module, we see that black_box is now given by a single local.get $input (returning the first argument to the function), which is what we want. This function does not leak secret values to memory, but is it preserved by Turbofan?

By running the corresponding test_with_barrier function through Turbofan, we see that it results in machine code that is identical to the previous constant-time version above. Thus, with the llvm_asm-based barrier, we get a constant-time implementation that does not leak secret values to the surrounding JavaScript runtime.

However, as we have already noted, there is no reason to expect Turbofan not to inline the black_box function in future versions of the compiler. (In fact, if we look at the source code responsible for the Wasm compilation pipeline in the V8 repository, we see that the FLAG_wasm_inlining flag, which controls the WasmInliningPhase in the compiler pipeline, defaults to false in version 9.7.24 of V8; but we expect this optimization phase to be enabled at some point.)
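On current toolchains, llvm_asm! is no longer available, and the closest stable equivalent is the asm! macro. The sketch below is our own adaptation, not the barrier proposed in the Rust Cryptography Interest Group discussion. Its template contains only a comment that mentions the operand, because asm! rejects operands that the template never uses. The pure option is deliberately omitted so that the compiler may not remove or merge the block. Two caveats apply. First, asm! is stable only on some architectures, so the sketch is gated to x86_64 and aarch64, with an unprotected fallback elsewhere. Second, inline assembly for wasm32 still requires an unstable feature, so this does not carry over to the Wasm build discussed in this post.

```rust
/// Hypothetical register-based barrier using stable `asm!` (x86_64/aarch64).
#[cfg(any(target_arch = "x86_64", target_arch = "aarch64"))]
#[inline(never)]
pub fn black_box_asm(input: u8) -> u8 {
    // Widen to a full 64-bit register to avoid sub-register formatting issues.
    let mut value = input as u64;
    // SAFETY: the template is only a comment, so no instructions are emitted.
    // The operand is declared read-write, so the compiler cannot assume the
    // output equals the input, and without `pure` it may not remove the block.
    unsafe {
        core::arch::asm!(
            "/* {0} */",
            inout(reg) value,
            options(nomem, nostack, preserves_flags)
        );
    }
    value as u8
}

/// Fallback for other targets: NO barrier at all (unprotected).
#[cfg(not(any(target_arch = "x86_64", target_arch = "aarch64")))]
#[inline(never)]
pub fn black_box_asm(input: u8) -> u8 {
    input
}

/// Mask-based selection from the text, fed through the asm-based barrier.
pub fn select_with_asm_barrier(a: u32, b: u32, choice: bool) -> u32 {
    let choice = black_box_asm(choice as u8);
    let mask = -(choice as i32) as u32;
    b ^ (mask & (a ^ b))
}
```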

Going forward

It is clear that fighting LLVM by inserting optimization barriers is not a great way to provide constant-time guarantees. There are ongoing efforts to address this problem at the language level. The secret types RFC and the CT-Wasm project, which introduce secret types for Rust and Wasm respectively, are two great examples of such efforts. What is missing is a way forward for getting secret types and the corresponding semantics into LLVM. This is most likely a precondition for the Rust implementation to move forward. (The Rust RFC is currently postponed, awaiting a corresponding RFC for LLVM.) Without LLVM support, it is difficult to see how higher-level languages that depend on LLVM could provide any absolute constant-time guarantees. Until then, we are all left playing hide-and-seek with the compiler back end.

In this post, we examined the use of optimization barriers to prevent the optimizer from wreaking havoc on constant-time cryptographic implementations in Rust, and the security guarantees optimization barriers provide when targeting Wasm. In the upcoming second part of this blog post, we will explore how constant-time properties of the implementation may be preserved by selectively disabling optimizations at the function level.