2024-01-11 05:06:58 Author: blogs.sap.com

Why is ArgoCD so popular in Kubernetes environments?

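Simply because it embraces the declarative (and contractual) nature of Kubernetes: application definitions, configurations and environments are declared and version-controlled in Git, as described here: https://argo-cd.readthedocs.io/en/stable/#why-argo-cd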

Long story short: for this brief, I wanted to move my custom Helm pipelines over to ArgoCD.

The main hurdle I had to overcome was that my original Helm-chart-based pipeline is composed of several independent Helm charts (sources).

Luckily, ArgoCD supports both single-source and multi-source applications. (Please note that multi-source applications are still a beta feature and thus subject to change.)
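In a multi-source application, the spec carries a `sources` list instead of a single `source`, with one entry per chart. As an illustration only, here is a minimal Python sketch that builds such a list for the three charts of this solution as plain dictionaries (both kubectl and the ArgoCD API accept JSON). The repository URL, chart paths and parameter names are taken from the manifest in this post; the helper names are hypothetical and the placeholders are deliberately left unfilled.

```python
import json

# Values copied from the application manifest in this post;
# the <...> placeholders are left as placeholders on purpose.
REPO_URL = "https://github.com/quovadis.git"
CHART_PATHS = [
    "kyma-btp/kyma/helm/faas-srv/",
    "kyma-btp/kyma/helm/faas-saas/",
    "kyma-btp/kyma/helm/faas-app/",
]
HELM_PARAMETERS = {
    "services.app.service.port": "<port>",
    "clusterDomain": "<custom domain>",
    "gateway": "<istio gateway>",
}


def helm_source(repo_url: str, path: str, parameters: dict,
                revision: str = "HEAD") -> dict:
    """Build one spec.sources entry for a Helm chart stored in a Git repo."""
    return {
        "repoURL": repo_url,
        "path": path,
        "targetRevision": revision,
        "helm": {
            "valueFiles": ["values.yaml"],
            # Helm parameters are a list of name/value pairs in the Application spec.
            "parameters": [{"name": k, "value": v} for k, v in parameters.items()],
        },
    }


def multi_source_spec(repo_url: str, chart_paths: list, parameters: dict) -> dict:
    """Hypothetical helper: one Helm source per independent chart."""
    return {
        "project": "default",
        "sources": [helm_source(repo_url, p, parameters) for p in chart_paths],
    }


if __name__ == "__main__":
    print(json.dumps(multi_source_spec(REPO_URL, CHART_PATHS, HELM_PARAMETERS), indent=2))
```

Generating the entries keeps the three near-identical sources in sync when a shared parameter changes.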

That saved the day. Let me share how it went.

Here is the high-level architecture of an SAP solution deployed to a Kyma cluster.

As such, it is a fairly standard SAP business solution that leverages the SAP BTP multi-tenancy model, with the approuter, frontend and backend services deployed to a Kyma cluster (described in much more detail here: Developing multi-tenant SaaS applications easy with SAP BTP, Kyma runtime).

The frontend is a React Native app developed with SAP Build Apps, as described here: Deploy SAP Build Apps web applications easy to SAP BTP, Kyma runtime.

Here is the Helm chart tree of the above solution:

```text
📦helm
 ┣ 📂faas-app
 ┃ ┣ 📂templates
 ┃ ┃ ┣ 📜NOTES.txt
 ┃ ┃ ┣ 📜_helpers.tpl
 ┃ ┃ ┣ 📜apirule.yaml
 ┃ ┃ ┣ 📜binding-ias.yaml
 ┃ ┃ ┣ 📜configmap.yaml
 ┃ ┃ ┣ 📜deployment.yaml
 ┃ ┃ ┣ 📜destination-rule.yaml
 ┃ ┃ ┣ 📜resources.yaml
 ┃ ┃ ┣ 📜service-ias.yaml
 ┃ ┃ ┣ 📜service.yaml
 ┃ ┃ ┗ 📜xs-app.yaml
 ┃ ┣ 📜.helmignore
 ┃ ┣ 📜Chart.yaml
 ┃ ┣ 📜SAP_Best_scrn_R_gry_neg.png
 ┃ ┣ 📜kyma-logo.png
 ┃ ┣ 📜spark.jpeg
 ┃ ┗ 📜values.yaml
 ┣ 📂faas-saas
 ┃ ┣ 📂templates
 ┃ ┃ ┣ 📜NOTES.txt
 ┃ ┃ ┣ 📜_helpers.tpl
 ┃ ┃ ┣ 📜binding-registry.yaml
 ┃ ┃ ┣ 📜binding-role.yaml
 ┃ ┃ ┣ 📜configmap.yaml
 ┃ ┃ ┣ 📜function.yaml
 ┃ ┃ ┣ 📜role.yaml
 ┃ ┃ ┣ 📜sa-secret.yaml
 ┃ ┃ ┣ 📜sa.yaml
 ┃ ┃ ┣ 📜saas-apirule.yaml
 ┃ ┃ ┗ 📜service-registry.yaml
 ┃ ┣ 📜Chart.yaml
 ┃ ┗ 📜values.yaml
 ┗ 📂faas-srv
 ┃ ┣ 📂templates
 ┃ ┃ ┣ 📜NOTES.txt
 ┃ ┃ ┣ 📜_helpers.tpl
 ┃ ┃ ┣ 📜binding-dest-x509.yaml
 ┃ ┃ ┣ 📜binding-dest.yaml
 ┃ ┃ ┣ 📜binding-quovadis-sap.yaml
 ┃ ┃ ┣ 📜binding-uaa.yaml
 ┃ ┃ ┣ 📜function.yaml
 ┃ ┃ ┣ 📜service-dest.yaml
 ┃ ┃ ┣ 📜service-quovadis-sap.yaml
 ┃ ┃ ┗ 📜service-uaa.yaml
 ┃ ┣ 📜.helmignore
 ┃ ┣ 📜Chart.yaml
 ┃ ┗ 📜values.yaml
```

That translates into the following ArgoCD application definition (a multi-source application in the default project):

```yaml
project: default
source:
  repoURL: 'https://github.com/quovadis.git'
  path: kyma-btp/kyma/helm/
  targetRevision: HEAD
destination:
  namespace: anywhere
  name: shoot--kyma-stage--*****
syncPolicy:
  syncOptions:
    - ServerSideApply=true
    - RespectIgnoreDifferences=true
ignoreDifferences:
  - group: apps
    kind: Deployment
    jsonPointers:
      - /spec/replicas
  - group: services.cloud.sap.com
    kind: ServiceBinding
    jsonPointers:
      - /spec/parametersFrom
  - group: serverless.kyma-project.io
    kind: Function
    jsonPointers:
      - /spec/labels
      - /spec/runtimeImageOverride
  - group: batch
    kind: Job
    jsonPointers:
      - /*
sources:
  - repoURL: 'https://github.com/quovadis.git'
    path: kyma-btp/kyma/helm/faas-srv/
    targetRevision: HEAD
    helm:
      valueFiles:
        - values.yaml
      parameters:
        - name: services.app.service.port
          value: <port>
        - name: clusterDomain
          value: <custom domain>
        - name: gateway
          value: <istio gateway>
  - repoURL: 'https://github.com/quovadis.git'
    path: kyma-btp/kyma/helm/faas-saas/
    targetRevision: HEAD
    helm:
      valueFiles:
        - values.yaml
      parameters:
        - name: services.app.service.port
          value: <port>
        - name: clusterDomain
          value: <custom domain>
        - name: gateway
          value: <istio gateway>
  - repoURL: 'https://github.com/quovadis.git'
    path: kyma-btp/kyma/helm/faas-app/
    targetRevision: HEAD
    helm:
      valueFiles:
        - values.yaml
      parameters:
        - name: services.app.service.port
          value: <port>
        - name: clusterDomain
          value: <custom domain>
        - name: gateway
          value: <istio gateway>
```

Good to know:

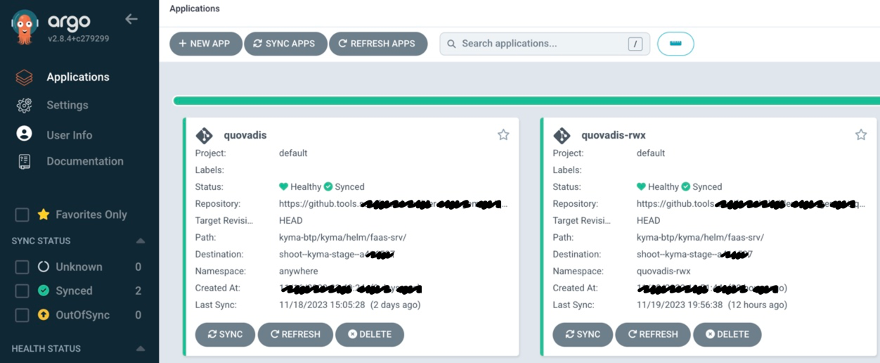

- There is at least one ArgoCD project, named default, and typically a number of ArgoCD applications as well.

- ArgoCD applications may point to different target Kyma clusters and/or namespaces.
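For example, the same set of charts can be rolled out to several clusters or namespaces by generating one Application per destination. The Python sketch below is illustrative only: the cluster names and the naming scheme are hypothetical, and `destination.name` has to match a cluster already registered in ArgoCD.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Target:
    cluster: str    # cluster name as registered in ArgoCD (hypothetical values below)
    namespace: str  # namespace on that cluster


def application_for(base_name: str, source: dict, target: Target,
                    project: str = "default") -> dict:
    """Return an Application manifest that deploys `source` to one target."""
    return {
        "apiVersion": "argoproj.io/v1alpha1",
        "kind": "Application",
        # Hypothetical naming scheme that keeps names unique per cluster/namespace.
        "metadata": {"name": f"{base_name}-{target.cluster}-{target.namespace}"},
        "spec": {
            "project": project,
            "source": dict(source),  # shallow copy so targets do not share one dict
            "destination": {"name": target.cluster, "namespace": target.namespace},
        },
    }


if __name__ == "__main__":
    source = {
        "repoURL": "https://github.com/quovadis.git",
        "path": "kyma-btp/kyma/helm",
        "targetRevision": "HEAD",
    }
    # Hypothetical cluster names; use the names shown by `argocd cluster list`.
    targets = [Target("kyma-dev", "quovadis"), Target("kyma-prod", "quovadis")]
    for target in targets:
        app = application_for("quovadis", source, target)
        print(app["metadata"]["name"], app["spec"]["destination"])
```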

For instance, here is a top-level application view of the resources deployed to a Kyma cluster.

After the deployment to a Kyma cluster, business users (aka consumers) gain access to the Kyma app by subscribing to it from the Service Marketplace in their respective BTP tenants (subaccounts).

Finally, to acquire permissions to access the app, business users must be assigned suitable role collections, which are available to them in their respective BTP subaccounts.

ArgoCD APIs

Last but not least, another great feature of ArgoCD is its REST API and the accompanying Swagger definition.

In the end, I used SAP API Management to expose the whole ArgoCD API as an API proxy.

For instance, one can manage ArgoCD applications programmatically:
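As a sketch of what programmatic management can look like, the Python snippet below prepares calls to two ArgoCD REST endpoints, `GET /api/v1/applications` (list applications) and `POST /api/v1/applications/{name}/sync` (trigger a sync), authenticated with a bearer token. It uses only the standard library. The environment variable names are hypothetical, and if the calls go through an API proxy (such as the SAP API Management proxy mentioned above) the base URL and authentication scheme may differ.

```python
import json
import os
import urllib.request
from typing import Optional
from urllib.parse import quote


def build_request(base_url: str, token: str, method: str, path: str,
                  body: Optional[dict] = None) -> urllib.request.Request:
    """Prepare (but do not send) an authenticated ArgoCD REST API request."""
    data = json.dumps(body).encode() if body is not None else None
    req = urllib.request.Request(base_url.rstrip("/") + path, data=data, method=method)
    req.add_header("Authorization", f"Bearer {token}")
    if data is not None:
        req.add_header("Content-Type", "application/json")
    return req


def list_applications_request(base_url: str, token: str) -> urllib.request.Request:
    # GET /api/v1/applications returns an ApplicationList ({"items": [...]}).
    return build_request(base_url, token, "GET", "/api/v1/applications")


def sync_application_request(base_url: str, token: str, name: str,
                             prune: bool = False) -> urllib.request.Request:
    # POST /api/v1/applications/{name}/sync triggers a sync of one application.
    path = f"/api/v1/applications/{quote(name, safe='')}/sync"
    return build_request(base_url, token, "POST", path, {"prune": prune})


def summarize(app_list: dict) -> list:
    """Extract (name, sync status, health status) tuples from an ApplicationList."""
    rows = []
    for app in app_list.get("items") or []:
        status = app.get("status", {})
        rows.append((
            app["metadata"]["name"],
            status.get("sync", {}).get("status", "Unknown"),
            status.get("health", {}).get("status", "Unknown"),
        ))
    return rows


def send(req: urllib.request.Request) -> dict:
    """Send the request and decode the JSON response body."""
    with urllib.request.urlopen(req) as resp:
        return json.load(resp)


if __name__ == "__main__":
    # Hypothetical environment variables: the ArgoCD server URL (or an API proxy
    # in front of it) and an API token, e.g. from `argocd account generate-token`.
    base_url = os.environ.get("ARGOCD_URL")
    token = os.environ.get("ARGOCD_TOKEN")
    if base_url and token:
        for name, sync, health in summarize(send(list_applications_request(base_url, token))):
            print(f"{name}: {sync}/{health}")
```

Keeping request construction separate from sending makes the calls easy to inspect before pointing them at a real server.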

Prepare your Kyma cluster for ArgoCD operations

I assume you have a service-account-token-based kubeconfig to access your Kyma cluster.

The Makefile below summarizes the steps required to add a Kyma (shoot) cluster to an ArgoCD instance:

- Create a dedicated namespace for ArgoCD-related configuration.

- Create a service account to be used by ArgoCD.

- Create a cluster role binding for the ArgoCD service account.

- Create a target namespace in your cluster (with Istio sidecar injection enabled) to deploy ArgoCD applications to.

- Log into your ArgoCD service.

- Add your cluster, by its shoot name, to your ArgoCD service.
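The first four steps only create plain Kubernetes objects, which the Makefile renders with `kubectl create ... --dry-run=client -o yaml | kubectl apply -f -` so that re-running it is safe. As an alternative sketch (not the approach used in this post), the same objects can be generated in Python as a single `v1` `List` and piped into `kubectl apply -f -`. The default names mirror the Makefile variables; the ArgoCD login and cluster registration steps are still needed afterwards.

```python
import json
from typing import Optional


def namespace(name: str, labels: Optional[dict] = None) -> dict:
    """Namespace manifest, optionally labelled (e.g. for Istio sidecar injection)."""
    metadata = {"name": name}
    if labels:
        metadata["labels"] = dict(labels)
    return {"apiVersion": "v1", "kind": "Namespace", "metadata": metadata}


def service_account(name: str, ns: str) -> dict:
    """ServiceAccount manifest in the given namespace."""
    return {"apiVersion": "v1", "kind": "ServiceAccount",
            "metadata": {"name": name, "namespace": ns}}


def cluster_admin_binding(sa_name: str, sa_ns: str) -> dict:
    """Bind the service account to cluster-admin, as the Makefile does."""
    return {
        "apiVersion": "rbac.authorization.k8s.io/v1",
        "kind": "ClusterRoleBinding",
        "metadata": {"name": sa_name},
        "roleRef": {"apiGroup": "rbac.authorization.k8s.io",
                    "kind": "ClusterRole", "name": "cluster-admin"},
        "subjects": [{"kind": "ServiceAccount", "name": sa_name, "namespace": sa_ns}],
    }


def bootstrap_manifests(argocd_ns: str, sa_name: str, target_ns: str) -> list:
    return [
        namespace(argocd_ns),
        service_account(sa_name, argocd_ns),
        cluster_admin_binding(sa_name, argocd_ns),
        # Target namespace created already labelled, instead of a separate `kubectl label`.
        namespace(target_ns, {"istio-injection": "enabled"}),
    ]


if __name__ == "__main__":
    # Defaults mirror the Makefile variables; pipe the output into `kubectl apply -f -`.
    items = bootstrap_manifests("argocd", "demo-argocd-manager", "quovadis")
    print(json.dumps({"apiVersion": "v1", "kind": "List", "items": items}, indent=2))
```

Because `kubectl apply` is declarative, applying the same output a second time leaves the objects unchanged.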

```makefile
.DEFAULT_GOAL := help

LOCAL_DIR = $(dir $(abspath $(lastword $(MAKEFILE_LIST))))

ARGOCD_NAMESPACE=argocd
ARGOCD_SERVICEACCOUNT=demo-argocd-manager
KUBECONFIG=~/.kube/kubeconfig--c-***.yaml
NAMESPACE=quovadis
ARGOCD=<argocd server>

CUSTOM_DOMAIN= $(shell kubectl get cm -n kube-system shoot-info --kubeconfig $(KUBECONFIG) -ojsonpath='{.data.domain}' )
SHOOT_NAME= $(shell kubectl config current-context --kubeconfig $(KUBECONFIG) )

.PHONY: help
help: ## Display this help.
	@awk 'BEGIN {FS = ":.*##"; printf "\nUsage:\n make \033[36m<target>\033[0m\n"} /^[a-zA-Z_0-9-]+:.*?##/ { printf " \033[36m%-15s\033[0m %s\n", $$1, $$2 } /^##@/ { printf "\n\033[1m%s\033[0m\n", substr($$0, 5) } ' $(MAKEFILE_LIST)
	echo $(KUBECONFIG) $(NAMESPACE) $(SHOOT_NAME)

.PHONY: argocd-bootstrap-cluster
argocd-bootstrap-cluster: ## bootstrap argocd on your kyma cluster
	# create a dedicated namespace for argocd related configurations
	kubectl create ns $(ARGOCD_NAMESPACE) --kubeconfig $(KUBECONFIG) --dry-run=client -o yaml | kubectl apply --kubeconfig $(KUBECONFIG) -f -
	# create a service account to be used by argocd
	kubectl -n $(ARGOCD_NAMESPACE) create serviceaccount $(ARGOCD_SERVICEACCOUNT) --kubeconfig $(KUBECONFIG) --dry-run=client -o yaml | kubectl apply --kubeconfig $(KUBECONFIG) -f -
	# create a cluster rolebinding for the argocd service account
	kubectl create clusterrolebinding $(ARGOCD_SERVICEACCOUNT) --serviceaccount $(ARGOCD_NAMESPACE):$(ARGOCD_SERVICEACCOUNT) --clusterrole cluster-admin --kubeconfig $(KUBECONFIG) --dry-run=client -o yaml | kubectl apply --kubeconfig $(KUBECONFIG) -f -
	# create the target namespace and enable istio sidecar injection (--overwrite keeps re-runs idempotent)
	kubectl create ns $(NAMESPACE) --kubeconfig $(KUBECONFIG) --dry-run=client -o yaml | kubectl apply --kubeconfig $(KUBECONFIG) -f -
	kubectl label namespace $(NAMESPACE) istio-injection=enabled --overwrite --kubeconfig $(KUBECONFIG)
	# log into argocd and register the cluster by its shoot name
	argocd login $(ARGOCD) --sso
	argocd cluster add $(SHOOT_NAME) --service-account $(ARGOCD_SERVICEACCOUNT) --system-namespace $(ARGOCD_NAMESPACE) --namespace $(NAMESPACE) --kubeconfig $(KUBECONFIG)
```

ArgoCD application(s)

As mentioned above, every ArgoCD application must belong to a project, and anyone can use the default ArgoCD project to start with:

```yaml
apiVersion: argoproj.io/v1alpha1
kind: Application
metadata:
  name: quovadis-rwx
spec:
  destination:
    name: ''
    namespace: quovadis
    server: 'https://api.*****.stage.kyma.ondemand.com'
  source:
    path: kyma-btp/kyma/helm
    repoURL: 'https://github.tools.sap/<repo>/quovadis.git'
    targetRevision: HEAD
  sources: []
  project: default
  syncPolicy:
    syncOptions:
      - ServerSideApply=true
      - RespectIgnoreDifferences=true
```
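This application uses a single `source` and sets `sources: []`, whereas the multi-source manifest earlier in the post sets both fields. According to the ArgoCD documentation on multiple sources, when `sources` is specified the `source` field is ignored. The small Python sketch below encodes that rule (treating an empty `sources: []` as not specified, which is an assumption) and can help when checking which charts a given manifest will actually deploy.

```python
def effective_sources(spec: dict) -> list:
    """Return the source(s) ArgoCD is expected to deploy for an Application spec.

    Assumption based on the ArgoCD multi-source docs: a non-empty `sources`
    list wins and `source` is ignored; an empty `sources: []` falls back to
    the single `source`.
    """
    sources = spec.get("sources") or []
    if sources:
        return list(sources)
    source = spec.get("source")
    return [source] if source else []


if __name__ == "__main__":
    single = {  # shape of the quovadis-rwx spec above
        "source": {"path": "kyma-btp/kyma/helm", "targetRevision": "HEAD"},
        "sources": [],
    }
    multi = {  # shape of the multi-source manifest earlier in this post
        "source": {"path": "kyma-btp/kyma/helm/"},
        "sources": [{"path": "kyma-btp/kyma/helm/faas-srv/"},
                    {"path": "kyma-btp/kyma/helm/faas-saas/"},
                    {"path": "kyma-btp/kyma/helm/faas-app/"}],
    }
    for name, spec in (("single", single), ("multi", multi)):
        print(name, [s["path"] for s in effective_sources(spec)])
```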

