2024-7-9 21:15:12 Author: securelist.com

Detection is a traditional type of cybersecurity control, along with blocking, adjustment, administrative and other controls. Whereas before 2015 teams asked themselves what it was that they were supposed to detect, as MITRE ATT&CK evolved, SOCs were presented with practically unlimited space for ideas on creating detection scenarios.

With the number of scenarios becoming virtually unlimited, another question inevitably arises: “What do we detect first?” This, together with the fact that SOC teams forever play the long game, having to respond with limited resources to a changing threat landscape, evolving technology and increasingly sophisticated malicious actors, makes managing efforts to develop detection logic an integral part of any modern SOC’s activities.

The problem at hand is easy to put into practical terms: the bulk of the work done by any modern SOC – with the exception of certain specialized SOC types – is detecting, and responding to, information security incidents. Detection is directly associated with preparation of certain algorithms, such as signatures, hard-coded logic, statistical anomalies, machine learning and others, that help to automate the process. The preparation consists of at least two processes: managing detection scenarios and developing detection logic. These cover the life cycle, stages of development, testing methods, go-live, standardization, and so on. These processes, like any others, require certain inputs: an idea that describes the expected outcome at least in abstract terms.

This is where the first challenges arise: thanks to MITRE ATT&CK, there are too many ideas. The number of described techniques currently exceeds 200, and most are broken down into several sub-techniques (MITRE T1098 Account Manipulation, for one, contains six), while a SOC’s resources are limited. In addition, SOC teams likely do not have access to every possible source of data for generating detection logic, and some of those they do have access to are not integrated with the SIEM system. Some sources can help with generating only very narrowly specialized detection logic, whereas others can be used to cover most of the MITRE ATT&CK matrix. Certain cases also require activating extra audit settings or adding selective anti-spam filtering. Finally, not all techniques are the same: some are used in most attacks, whereas others are fairly unique and will never be seen by a particular SOC team. Thus, setting priorities means both defining the subset of techniques that can be detected with available data and ranking the techniques within that subset. The result is an optimized list of detection scenarios that provides detection control within available resources and in the original spirit of MITRE ATT&CK: discovering only some of the malicious actor’s atomic actions is enough to detect the attack.

A slight detour. Before proceeding to specific prioritization techniques, it is worth mentioning that this article looks at options based on tools built around the MITRE ATT&CK matrix. It assesses threat relevance in general, not in relation to specific organizations or business processes. Recommendations in this article can be used as a starting point for prioritizing detection scenarios. A more mature approach must include an assessment of a landscape that consists of security threats relevant to your particular organization, an allowance for your own threat model, an up-to-date risk register, and automation and manual development capabilities. All of this requires an in-depth review, as well as liaison between various processes and roles inside your SOC. We offer more detailed maturity recommendations as part of our SOC consulting services.

MITRE Data Sources

Optimized prioritization of the backlog as it applies to the current status of monitoring can be broken down into the following stages:

- Defining available data sources and how well they are connected;

- Identifying relevant MITRE ATT&CK techniques and sub-techniques;

- Finding an optimal relation between source status and technique relevance;

- Setting priorities.

A key consideration in implementing this sequence of steps is the possibility of linking information that the SOC receives from data sources to a specific technique that can be detected with that information. In 2021, MITRE completed its ATT&CK Data Sources project, its result being a methodology for describing a data object that can be used for detecting a specific technique. The key elements for describing data objects are:

- Data Source: an easily recognizable name that defines the data object (Active Directory, application log, driver, file, process and so on);

- Data Components: possible data object actions, statuses and parameters. For example, for a file data object, data components are file created, file deleted, file modified, file accessed, file metadata, and so on.

Virtually every technique in the MITRE ATT&CK matrix currently contains a Detection section that lists data objects and relevant data components that can be used for creating detection logic. A total of 41 data objects have been defined at the time of publishing this article.

MITRE most relevant data components

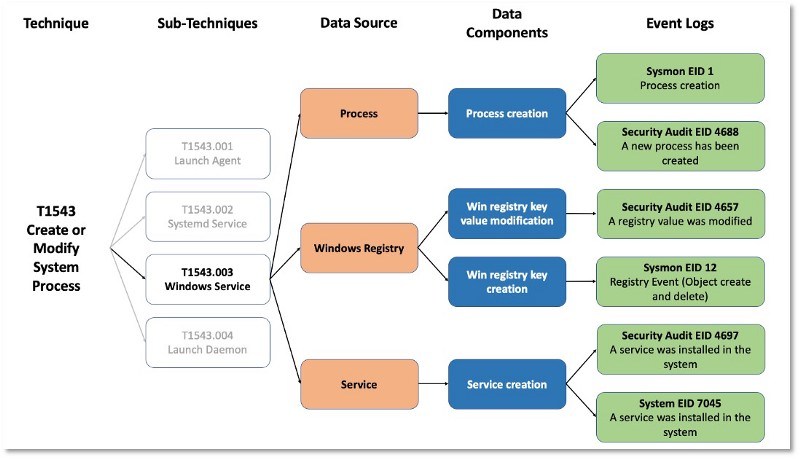

The column on the far right in the image above (Event Logs) illustrates the possibilities of expanding the methodology to cover specific events received from real data sources. Creating a mapping like this is not one of the ATT&CK Data Sources project goals. This Event Logs example is rather intended as an illustration. On the whole, each specific SOC is expected to independently define a list of events relevant to its sources, a fairly time-consuming task.

To optimize your approach to prioritization, you can start by isolating the most frequent data components that feature in most MITRE ATT&CK techniques.

The graph below presents the up-to-date top 10 data components for MITRE ATT&CK matrix version 15.1, the latest at the time of writing this.

The most relevant data components
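As a rough illustration of how such a ranking can be produced, the sketch below counts how many techniques reference each data component. The technique-to-component mapping is a small hypothetical excerpt written for this example; in practice it would be built from the ATT&CK dataset for the matrix version in use.

```python
from collections import Counter

# Hypothetical excerpt: technique ID -> data components named in its Detection section.
TECHNIQUE_COMPONENTS = {
    "T1059": ["Command Execution", "Process Creation", "Script Execution"],
    "T1053": ["Command Execution", "Process Creation", "File Modification"],
    "T1547": ["Process Creation", "File Modification"],
    "T1071": ["Network Traffic Content", "Network Traffic Flow"],
}


def rank_data_components(technique_components, top_n=10):
    """Return (data component, technique count) pairs, most frequent first."""
    counts = Counter()
    for components in technique_components.values():
        counts.update(set(components))  # count a component once per technique
    # Sort by descending count, then by name so ties are ordered predictably.
    ordered = sorted(counts.items(), key=lambda item: (-item[1], item[0]))
    return ordered[:top_n]


ranking = rank_data_components(TECHNIQUE_COMPONENTS)
for component, count in ranking:
    print(f"{component}: {count}")
```

Sorting ties by name keeps the output stable between runs, which makes it easier to compare rankings across matrix versions.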

For these data components, you can identify the specific sources that yield the most results. The following will be of help:

- Expert knowledge and overall logic. Data objects and data components are typically informative enough for the engineer or analyst working with data sources to form an initial judgment on the specific sources that can be used.

- Validation directly inside the event collection system. The engineer or analyst can review available sources and match events with data objects and data components.

- Publicly available resources on the internet, such as Sensor Mappings to ATT&CK, a project by the Center for Threat-Informed Defense, or this excellent resource on Windows events: UltimateWindowsSecurity.

That said, most sources are fairly generic and typically connected when a monitoring system is implemented. In other words, the mapping can be reduced to selecting those sources which are connected in the corporate infrastructure or easy to connect.

The result is an unranked list of integrated data sources that can be used for developing detection logic, such as:

- For Command Execution: OS logs, EDR, networked device administration logs and so on;

- For Process Creation: OS logs, EDR;

- For Network Traffic Content: WAF, proxy, DNS, VPN and so on;

- For File Modification: DLP, EDR, OS logs and so on.
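A list like the one above can be kept as a simple lookup table and filtered by what is actually integrated. The sketch below is only an illustration: the candidate sources repeat the examples above, while the set of connected sources is a hypothetical environment.

```python
# Candidate sources per data component, repeating the examples listed above.
CANDIDATE_SOURCES = {
    "Command Execution": ["OS logs", "EDR", "Networked device administration logs"],
    "Process Creation": ["OS logs", "EDR"],
    "Network Traffic Content": ["WAF", "Proxy", "DNS", "VPN"],
    "File Modification": ["DLP", "EDR", "OS logs"],
}

# Hypothetical: sources already integrated with the SIEM in this environment.
CONNECTED = {"OS logs", "Proxy", "DNS"}


def usable_sources(candidates, connected):
    """For each data component, keep only the sources that are connected."""
    return {
        component: [source for source in sources if source in connected]
        for component, sources in candidates.items()
    }


def uncovered_components(candidates, connected):
    """List data components that have no connected source at all."""
    usable = usable_sources(candidates, connected)
    return [component for component, sources in usable.items() if not sources]


inventory = usable_sources(CANDIDATE_SOURCES, CONNECTED)
gaps = uncovered_components(CANDIDATE_SOURCES, CONNECTED)
```

The components returned by uncovered_components are natural candidates for a source onboarding roadmap.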

However, this list is not sufficient for prioritization. You also need to consider other criteria, such as:

- The quality of source integration. Two identical data sources may be integrated with the infrastructure differently, with different logging settings, one source being located only in one network segment, and so on.

- Usefulness of MITRE ATT&CK techniques. Not all techniques are equally useful in terms of optimization. Some techniques are more specialized and aimed at detecting rare attacker actions.

- Detection of the same techniques with several different data sources (simultaneously). The more options for detecting a technique have been configured, the higher the likelihood that it will be discovered.

- Data component variability. A selected data source may be useful for detecting not only those techniques associated with the top 10 data components but others as well. For example, an OS log can be used for detecting both Process Creation components and User Account Authentication components, a type not mentioned on the graph.

Prioritizing with DeTT&CT and ATT&CK Navigator

Now that we have an initial list of data sources available for creating detection logic, we can proceed to scoring and prioritization. You can automate some of this work with the help of DeTT&CT, a tool created by developers unaffiliated with MITRE to help SOCs with using MITRE ATT&CK for scoring and comparing the quality of data sources, coverage and detection scope according to MITRE ATT&CK techniques. The tool is available under the GPL-3.0 license.

DETT&CT supports an expanded list of data sources as compared to the MITRE model. This list is implemented by design and you do not need to redefine the MITRE matrix itself. The expanded model includes several data components, which are parts of MITRE’s Network Traffic component, such as Web, Email, Internal DNS, and DHCP.

You can install DETT&CT with the help of two commands: git clone and pip install -r. This gives you access to DETT&CT Editor: a web interface for describing data sources, and DETT&CT CLI for automated analysis of prepared input data that can help with prioritizing detection logic and more.

The first step in identifying relevant data sources is describing them. Go to Data Sources in DETT&CT Editor, click New file and fill out the fields:

- Domain: the version of the MITRE ATT&CK matrix to use (enterprise, mobile or ICS).

- Name: this field is not used in analytics; it is intended for distinguishing between files with the description of sources.

- Systems: selection of platforms that any given data source belongs to. This helps to both separate platforms, such as Windows and Linux, and specify several platforms within one system. Going forward, keep in mind that a data source is assigned to a system, not a platform. In other words, if a source collects data from both Windows and Linux, you can leave one system with two platforms, but if one source collects data from Windows only, and another, from Linux only, you need to create two systems: one for Windows and one for Linux.

After filling out the general sections, you can proceed to analyzing data sources and mapping to the MITRE Data Sources. Click Add Data Source for each MITRE data object and fill out the relevant fields. Follow the link above for a detailed description of all fields and example content on the project page. We will focus on the most interesting field: Data quality. It describes the quality of data source integration as determined according to five criteria:

- Device completeness. Defines infrastructure coverage by the source, such as various versions of Windows or subnet segments, and so on.

- Data field completeness. Defines the completeness of data in events from the source. For example, information about Process Creation may be considered incomplete if we see that a process was created, but not the details of the parent process, or for Command Execution, we see the command but not the arguments, and so on.

- Timeliness. Defines the presence of a delay between the event happening and being added to a SIEM system or another detection system.

- Consistency. Defines the extent to which the names of the data fields in an event from this source are consistent with standard naming.

- Retention. Compares the period for which data from the source is available for detection with the data retention policy defined for the source. For instance, data from a certain source is available for one month, whereas the policy or regulatory requirements define the retention period as one year.

A detailed description of the scoring system for filling out this field is available in the project description.
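To make the five criteria concrete, here is a minimal sketch of a data quality record. The field names simply mirror the criteria above, and the 0 to 5 scale is an assumption for this example; check the project description for the actual scale and file format before relying on either.

```python
from dataclasses import dataclass, asdict

MAX_SCORE = 5  # assumed upper bound of the scale; verify against the project docs


@dataclass
class DataQuality:
    """One score per data quality criterion for a single data source."""
    device_completeness: int = 0
    data_field_completeness: int = 0
    timeliness: int = 0
    consistency: int = 0
    retention: int = 0

    def __post_init__(self):
        # Reject scores that fall outside the assumed scale.
        for name, value in asdict(self).items():
            if not 0 <= value <= MAX_SCORE:
                raise ValueError(f"{name} must be between 0 and {MAX_SCORE}")

    def weakest(self):
        """Return the (criterion, score) pair with the lowest score."""
        return min(asdict(self).items(), key=lambda item: item[1])


# Hypothetical assessment: wide coverage, but no command-line arguments
# in events and only a short retention period.
process_creation_quality = DataQuality(
    device_completeness=4,
    data_field_completeness=2,
    timeliness=5,
    consistency=4,
    retention=1,
)
weakest_criterion = process_creation_quality.weakest()
```

Looking at the weakest criterion first is one simple way to decide which source improvement would pay off most.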

It is worth mentioning that at this step, you can describe more than just the top 10 data components that cover the majority of the MITRE ATT&CK techniques. Some sources can provide extra information: in addition to Process Creation, Windows Security Event Log provides data for User Account Authentication. This extension will help to analyze the matrix without limitations in the future.

After describing all the sources on the list defined earlier, you can proceed to analyze these with reference to the MITRE ATT&CK matrix.

The first and most trivial analytical report identifies the MITRE ATT&CK techniques that can be discovered with available data sources one way or another. This report is generated with the help of a configuration file with a description of data sources and DETT&CT CLI, which outputs a JSON file with MITRE ATT&CK technique coverage. You can use the following command for this:

python dettect.py ds -fd <data-source-yaml-dir>/<data-sources-file.yaml> -l

The resulting JSON is ready to be used with the MITRE ATT&CK matrix visualization tool, MITRE ATT&CK Navigator. See below for an example.

This gives a literal answer to the question of what techniques the SOC can discover with the set of data sources that it has. The numbers in the bottom right-hand corner of some of the cells reflect sub-technique coverage by the data sources, and the colors, how many different sources can be used to detect the technique. The darker the color, the greater the number of sources.
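The same layer file can also be read programmatically. The sketch below assumes the usual ATT&CK Navigator layer structure, with a techniques array whose entries carry techniqueID and score. The sample layer is a hand-written stand-in, and treating any positive score as coverage is an assumption; inspect a generated file to confirm how the scores are populated.

```python
import json

# Hand-written stand-in for a layer file; a generated layer has more fields.
SAMPLE_LAYER = """
{
  "name": "Data sources example",
  "techniques": [
    {"techniqueID": "T1059", "score": 100},
    {"techniqueID": "T1059.001", "score": 50},
    {"techniqueID": "T1566", "score": 0}
  ]
}
"""


def covered_techniques(layer, min_score=1):
    """Return sorted IDs of techniques whose score is at least min_score."""
    return sorted(
        entry["techniqueID"]
        for entry in layer.get("techniques", [])
        if (entry.get("score") or 0) >= min_score
    )


layer = json.loads(SAMPLE_LAYER)
covered = covered_techniques(layer)
```

For a real file, replace json.loads(SAMPLE_LAYER) with json.load on the opened layer file.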

DETT&CT CLI can also generate an XLSX file that you can conveniently use as the integration of existing sources evolves, a parallel task that is part of the data source management process. You can use the following command to generate the file:

python dettect.py ds -fd <data-source-yaml-dir>/<data-sources-file.yaml> -e

The next analytical report we are interested in assesses the SOC’s capabilities in terms of detecting MITRE ATT&CK techniques and sub-techniques while considering the scoring of integrated source quality as done previously. You can generate the report by running the following command:

python dettect.py ds -fd <data-source-yaml-dir>/<data-sources-file.yaml> --yaml

This generates a DETT&CT configuration file that both contains matrix coverage information and considers the quality of the data sources, providing a deeper insight into the level of visibility for each technique. The report can help to identify the techniques for which the SOC in its current shape can achieve the best results in terms of completeness of detection and coverage of the infrastructure.

This information too can be visualized with MITRE ATT&CK Navigator. You can use the following DETT&CT CLI command for this:

python dettect.py v -ft output/<techniques-administration-file.yaml> -l

See below for an example.

For each technique, the score is calculated as an average of all relevant data source scores. For each data source, it is calculated from specific parameters. The following parameters have increased weight:

- Device completeness;

- Data field completeness;

- Retention.

To set up the scoring model, you need to modify the project source code.
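The idea of a weighted per-source score averaged per technique can be sketched as follows. The weights are hypothetical and chosen only to show three criteria counting more than the other two; they are not the values used in the DETT&CT source code.

```python
# Hypothetical weights: the three emphasized criteria count double.
WEIGHTS = {
    "device_completeness": 2,
    "data_field_completeness": 2,
    "timeliness": 1,
    "consistency": 1,
    "retention": 2,
}


def source_score(quality):
    """Weighted average of the five data quality scores of one source."""
    total = sum(weight * quality[name] for name, weight in WEIGHTS.items())
    return total / sum(WEIGHTS.values())


def technique_score(source_qualities):
    """Plain average of the scores of all sources relevant to a technique."""
    if not source_qualities:
        return 0.0
    scores = [source_score(quality) for quality in source_qualities]
    return sum(scores) / len(scores)


# Two hypothetical sources that both provide data for the same technique.
edr = {"device_completeness": 4, "data_field_completeness": 4,
       "timeliness": 4, "consistency": 4, "retention": 4}
os_logs = {"device_completeness": 2, "data_field_completeness": 4,
           "timeliness": 5, "consistency": 3, "retention": 1}

visibility = technique_score([edr, os_logs])
```

Keeping the weights in one dictionary makes it easy to experiment with a different emphasis without touching the scoring functions.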

It is worth mentioning that the scoring system presented by the developers of DETT&CT tends to be fairly subjective in some cases, for example:

- You may have one data source out of the three mentioned in connection with the specific technique. However, in some cases, one data source may not be enough even to detect the technique on a minimal level.

- In other cases, the reverse may be true, with one data source giving exhaustive information for complete detection of the technique.

- Detection may be based on a data source that is not currently mentioned in the MITRE ATT&CK Data Sources or Detections for that particular technique.

In these cases, the DETT&CT configuration file techniques-administration-file.yaml can be adjusted manually.

Now that the available data sources and the quality of their integration have been associated with the MITRE ATT&CK matrix, the last step is ranking the available techniques. You can use the Procedure Examples section in the matrix, which defines the groups that use a specific technique or sub-technique in their attacks. DETT&CT’s group mode (the g subcommand of dettect.py) can run this operation for the entire MITRE ATT&CK matrix.

In the interests of prioritization, we can merge the two datasets (technique feasibility considering available data sources and their quality, and the most frequently used MITRE ATT&CK techniques):

python dettect.py g -p PLATFORM -o output/<techniques-administration-file.yaml> -t visibility

The result is a JSON file containing techniques that the SOC can work with and their description, which includes the following:

- Detection ability scoring;

- Known attack frequency scoring.

See the image below for an example.

As you can see in the image, some of the techniques are colored shades of red, which means they have been used in attacks (according to MITRE), but the SOC has no ability to detect them. Other techniques are colored shades of blue, which means the SOC can detect them, but MITRE has no data on these techniques having been used in any attacks. Finally, the techniques colored shades of orange are those which groups known to MITRE have used and the SOC has the ability to detect.
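The three color groups correspond to simple set operations on two lists of technique IDs. The sketch below shows that logic with hypothetical sample sets; it does not reproduce how DETT&CT or Navigator computes the shades.

```python
def classify_overlap(used_in_attacks, detectable):
    """Split technique IDs into the three groups described above."""
    used, seen = set(used_in_attacks), set(detectable)
    return {
        "red": sorted(used - seen),     # used in attacks, no detection ability
        "blue": sorted(seen - used),    # detectable, no recorded use
        "orange": sorted(used & seen),  # used in attacks and detectable
    }


# Hypothetical sample data.
used_by_groups = {"T1059", "T1566", "T1003"}
visible_to_soc = {"T1059", "T1003", "T1112"}

groups = classify_overlap(used_by_groups, visible_to_soc)
```

The red group doubles as a list of visibility gaps, and the orange group is the starting point for the prioritized backlog.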

It is worth mentioning that groups, attacks and software used in attacks, which are linked to a specific technique, represent retrospective data collected throughout the period that the matrix has existed. In some cases, this may result in increased priority for techniques that were relevant for attacks, say, from 2015 through 2020, which is not really relevant for 2024.

However, isolating a subset of techniques ever used in attacks produces more meaningful results than simple enumeration. You can further rank the resulting subset in the following ways:

- By using the MITRE ATT&CK matrix in the form of an Excel table. Each object (Software, Campaigns, Groups) contains the property Created (date when the object was created) that you can rely on when isolating the most relevant objects and then use the resulting list of relevant objects to generate an overlap as described above:

python dettect.py g -g sample-data/groups.yaml -p PLATFORM -o output/<techniques-administration-file.yaml> -t visibility

- By using the TOP ATT&CK TECHNIQUES project created by MITRE Engenuity.

TOP ATT&CK TECHNIQUES was aimed at developing a tool for ranking MITRE ATT&CK techniques and accepts similar inputs to DETT&CT. The tool produces a list of the 10 most relevant MITRE ATT&CK techniques to detect with available monitoring capabilities in various areas of the corporate infrastructure: network communications, processes, the file system, cloud-based solutions and hardware. The project also considers the following criteria:

- Choke Points, or specialized techniques where other techniques converge or diverge. Examples of these include T1047 WMI, as it helps to implement a number of other WMI techniques, or T1059 Command and Scripting Interpreter, as many other techniques rely on a command-line interface or other shells, such as PowerShell, Bash and others. Detecting a choke point technique will likely lead to discovering a broad spectrum of attacks.

- Prevalence: technique frequency over time.

Note, however, that the project is based on MITRE ATT&CK v.10 and is not supported.

Finalizing priorities

By completing the steps above, the SOC team obtains a subset of MITRE ATT&CK techniques that feature to some extent in known attacks and can be detected with available data sources, with an allowance for the way these are configured in the infrastructure. Unfortunately, DETT&CT does not offer any way of creating a convenient XLSX file with an overlap between techniques used in attacks and those that the SOC can detect. However, we have a JSON file that can be used to generate the overlap with the help of MITRE ATT&CK Navigator. All that remains for prioritization is to parse the JSON, say, with the help of Python. The final prioritization conditions may be as follows:

- Priority 1 (critical): Visibility_score >= 3 and Attacker_score >= 75. From an applied perspective, this isolates MITRE ATT&CK techniques that most frequently feature in attacks and that the SOC requires minimal or no preparation to detect.

- Priority 2 (high): (Visibility_score < 3 and Visibility_score >= 1) and Attacker_score >= 75. These are MITRE ATT&CK techniques that most frequently feature in attacks and that the SOC is capable of detecting. However, some work on logging may be required, or monitoring coverage may not be good enough.

- Priority 3 (medium): Visibility_score >= 3 and Attacker_score < 75. These are MITRE ATT&CK techniques with medium to low frequency that the SOC requires minimal or no preparation to detect.

- Priority 4 (low): (Visibility_score < 3 and Visibility_score >= 1) and Attacker_score < 75. These are all other MITRE ATT&CK techniques that feature in attacks and the SOC has the capability to detect.
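The four conditions above translate directly into a small function. In this sketch the record keys (technique_id, visibility_score, attacker_score) and the sample values are hypothetical; how the two scores are extracted from the JSON file depends on its actual structure.

```python
PRIORITY_LABELS = {1: "critical", 2: "high", 3: "medium", 4: "low"}


def priority(visibility_score, attacker_score):
    """Map the two scores to a priority from 1 to 4, or None if not visible."""
    if visibility_score < 1:
        return None  # no usable visibility: outside the four groups
    frequent = attacker_score >= 75
    ready = visibility_score >= 3
    if frequent and ready:
        return 1
    if frequent:
        return 2
    if ready:
        return 3
    return 4


def build_backlog(records):
    """Return (priority, technique ID) pairs ordered by priority."""
    ranked = []
    for record in records:
        level = priority(record["visibility_score"], record["attacker_score"])
        if level is not None:
            ranked.append((level, record["technique_id"]))
    return sorted(ranked)


# Hypothetical records, as they might look after parsing the JSON file.
records = [
    {"technique_id": "T1566", "visibility_score": 0, "attacker_score": 95},
    {"technique_id": "T1547", "visibility_score": 1, "attacker_score": 10},
    {"technique_id": "T1112", "visibility_score": 3, "attacker_score": 40},
    {"technique_id": "T1003", "visibility_score": 2, "attacker_score": 90},
    {"technique_id": "T1059", "visibility_score": 4, "attacker_score": 100},
]

backlog = build_backlog(records)
for level, technique_id in backlog:
    print(f"{technique_id}: priority {level} ({PRIORITY_LABELS[level]})")
```

Techniques with a visibility score below 1 are left out of the backlog on purpose: they belong on the data source roadmap rather than the detection development queue.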

As a result, the SOC obtains a list of MITRE ATT&CK techniques ranked into four groups and mapped to its capabilities and global statistics on malicious actors’ actions in attacks. The list is optimized in terms of the cost to write detection logic and can be used as a prioritized development backlog.

Prioritization extension and parallel tasks

In conclusion, we would like to highlight the key assumptions and recommendations for using the suggested prioritization method.

- As mentioned above, relying on the MITRE ATT&CK statistics on the frequency of techniques in attacks is not fully appropriate. For more mature prioritization, the SOC team must rely on relevant threat data. This requires defining a threat landscape based on analysis of threat data, mapping applicable threats to specific devices and systems, and isolating the most relevant techniques that may be used against a specific system in the specific corporate environment. An approach like this calls for in-depth analysis of all SOC activities and links between processes. For this reason, when generating a scenario library for a customer as part of our consulting services, we leverage Kaspersky Threat Intelligence data on threats relevant to the organization, Managed Detection and Response statistics on detected incidents, and information about techniques that we obtained while investigating real-life incidents and analyzing digital evidence as part of our Incident Response service.

- The suggested method relies on SOC capabilities and essential MITRE ATT&CK analytics. That said, the method is optimized for effort reduction and helps to start developing relevant detection logic immediately. This makes it suitable for small-scale SOCs that consist of a SIEM administrator or analyst. In addition to this, the SOC builds what is essentially a detection functionality roadmap, which can be used for demonstrating the process, defining KPIs and justifying a need for expanding the team.

Lastly, we introduce several points regarding the possibilities for improving the approach described herein and parallel tasks that can be done with tools described in this article.

You can use the following to further improve the prioritization process.

- Grouping by detection. On a basic level, there are two groups: network detection or detection on a device. Considering the characteristics of the infrastructure and data sources in creating detection logic for different groups helps to avoid a bias and ensure a more complete coverage of the infrastructure.

- Grouping by attack stage. Detection at the stage of Initial Access requires more effort, but it leaves more time to respond than detection at the Exfiltration stage.

- Criticality coefficient. Certain techniques, such as all those associated with vulnerability exploitation or suspicious PowerShell commands, cannot be fully covered. If this is the case, the criticality level can be used as an additional criterion.

- Granular approach when describing source quality. As mentioned earlier, DETT&CT helps with creating quality descriptions of available data sources, but it lacks exception functionality. Sometimes, a source is not required for the entire infrastructure, or there is more than one data source providing information for similar systems. In that case, a more granular approach that relies on specific systems, subnets or devices can help to make the assessment more relevant. However, an approach like that calls for liaison with internal teams responsible for configuration changes and device inventory, who will have to at least provide information about the business criticality of assets.

Besides improving the prioritization method, the tools suggested can be used for completing a number of parallel tasks that help the SOC to evolve.

- Expanding the list of sources. As shown above, the coverage of the MITRE ATT&CK matrix requires diverse data sources. By mapping existing sources to techniques, you can identify missing logs and create a roadmap for connecting or introducing these sources.

- Improving the quality of sources. Scoring the quality of data sources can help create a roadmap for improving existing sources, for example in terms of infrastructure coverage, normalization or data retention.

- Detection tracking. DETT&CT offers, among other things, a detection logic scoring feature, which you can use to build a detection scenario revision process.
