2024-10-10 10:47:59 Author: isc.sans.edu

[This is a Guest Diary by Christopher Schroeder, an ISC intern as part of the SANS.edu BACS program]

Introduction

Honeypots are a useful tool for researchers and defenders, but the technology behind them has long been static. I created a new type of honeypot that brings a new outlook, new challenges, and a bit of fun to the honeypot field. This new honeypot system uses Large Language Models (LLMs) to create an engaging and deceptive environment for actors. I call it GPTHoney.

GPTHoney is the first of its kind, offering a simulated Linux environment that responds dynamically in real time to actor inputs. By combining traditional honeypot techniques with AI, I have created a honeypot that provides a rich, dynamic, and unique experience for each actor, keeping them engaged for extended periods while you collect more logs!

Key features of GPTHoney:

- Extremely lightweight (under 20 KB)

- AI generated responses to actor’s commands

- Dynamic environment unique to each actor and generated in real time

- Plugin system for custom command handling

- Comprehensive logging

- Changing the honeypot OS is done in plain English by editing the prompt

1: High-Level Overview

When an actor connects to the honeypot via SSH, it accepts any credentials and presents the actor with what appears to be a standard Linux shell. However, this shell is a simulated environment powered by an LLM, not a real Linux terminal.

The dynamic nature of the honeypot's environment is driven by the instructions to the LLM that are written in the prompt. Prompts are a common way to include instructions with your data. The prompt contains guidelines that the LLM uses to design a convincing corporate machine and respond to terminal commands received from an actor. When an actor connects, the LLM generates a unique OS environment with believable file directories, enticing files with fake info, and system users. This OS environment does not exist in any way; the LLM creates only the concept of it. To clarify, consider this question: When you type the “ls” command into a real Linux terminal and the OS returns text to your screen showing files in a directory, how do you know the files truly exist and the text in the output isn’t a lie? That’s what GPTHoney does: it lies about every single bit and byte that makes up the operating system!

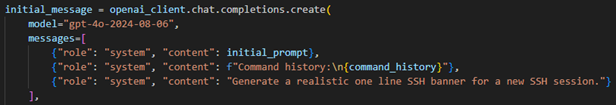

The screenshot above is a very basic example of how an LLM can generate fake terminal output using ChatGPT’s web interface.

As the actor enters commands into this fake terminal, the session is logged, and if they disconnect and reconnect, they return to the same simulated environment. Each IP address has its own environment; there is no sharing in GPTHoney.

GPTHoney uses plugins to handle the complexity of some Linux commands and to emulate OS behavior as closely as possible. This gives users an easy way to design specific behaviors and responses to certain commands. For example, a real ping command returns its response lines slowly, not all at once. So, I wrote a plugin that displays the response from the LLM in the same fashion, with delays between the response lines.

2: Technical Overview

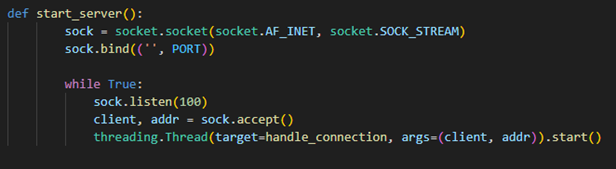

2.1 The Connection Setup

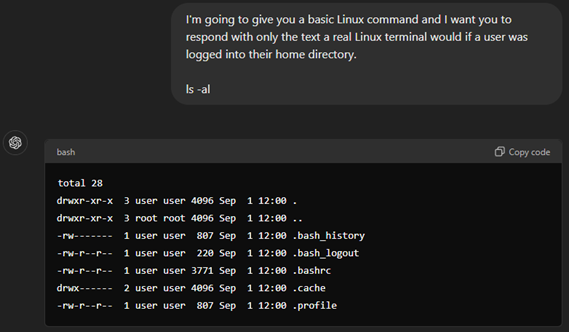

Listening for Connections: A real SSH server listens for incoming connections on the default SSH port (22). Each connection is processed in a separate thread to allow for simultaneous connections.
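As a rough illustration of the thread-per-connection pattern described above, here is a minimal sketch using only the Python standard library. The names `start_listener` and `handle_client` and the placeholder greeting are assumptions for illustration; in GPTHoney the accepted socket would be handed to the SSH layer rather than answered directly.

```python
import socket
import threading


def handle_client(conn: socket.socket, addr: tuple) -> None:
    """Hypothetical per-connection worker; a real honeypot would start the
    SSH handshake here instead of sending a placeholder line."""
    with conn:
        conn.sendall(b"placeholder greeting\r\n")


def start_listener(host: str = "0.0.0.0", port: int = 22) -> socket.socket:
    """Bind a listening socket and accept clients in a background thread."""
    server = socket.socket(socket.AF_INET, socket.SOCK_STREAM)
    server.setsockopt(socket.SOL_SOCKET, socket.SO_REUSEADDR, 1)
    server.bind((host, port))
    server.listen(100)

    def accept_loop() -> None:
        while True:
            try:
                conn, addr = server.accept()
            except OSError:
                break  # the listening socket was closed
            # One thread per connection so sessions run simultaneously.
            threading.Thread(
                target=handle_client, args=(conn, addr), daemon=True
            ).start()

    threading.Thread(target=accept_loop, daemon=True).start()
    return server
```

Binding to port 22 normally requires elevated privileges, so a test run would typically pass a high port instead.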

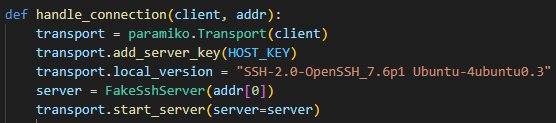

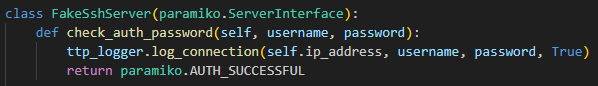

Next, the Paramiko Python library is used to accept any username and password and to return a specific SSH banner in the handshake. Both behaviors can be changed.

Once the connection is established, it’s time for the LLM magic to begin.

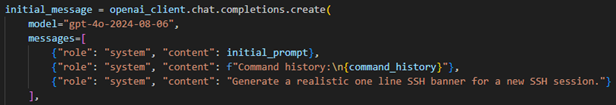

The first step is to give the actor a realistic SSH banner welcoming them to the server. GPTHoney passes the prompt and, if there were previous connections from this IP, the command history to the LLM and asks for a banner.

2.2 Processing the actor’s commands

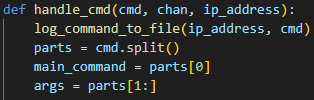

The heavy lifting for GPTHoney is done in the handle_cmd function. This function accomplishes 5 major tasks:

- Logs and splits the actor’s command

- Checks if the command requires a type 2 plugin

- Sends info to the LLM API and processes the response

- Checks if the LLM response requires a type 3 plugin

- Delivers the response to the actor

Command logging and splitting:

The command is sent to the log_command_to_file function, and then it is split into two parts: the main command and its arguments. If the entered command was ls -al, then ls is the main command and -al is its argument.
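The split described above can be sketched in a few lines. The function name `split_command` is an assumption for illustration; the original code is only shown as a screenshot.

```python
from typing import Tuple


def split_command(command: str) -> Tuple[str, str]:
    """Split a raw command line into (main command, argument string)."""
    parts = command.strip().split(maxsplit=1)
    if not parts:
        return "", ""  # the actor just pressed Enter
    main = parts[0]
    args = parts[1] if len(parts) > 1 else ""
    return main, args
```

For example, `split_command("ls -al")` returns `("ls", "-al")`. Everything after the first word is kept as one string, so quoting and pipes are left for the LLM to interpret.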

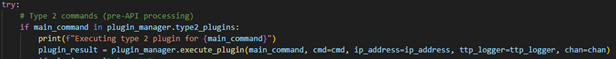

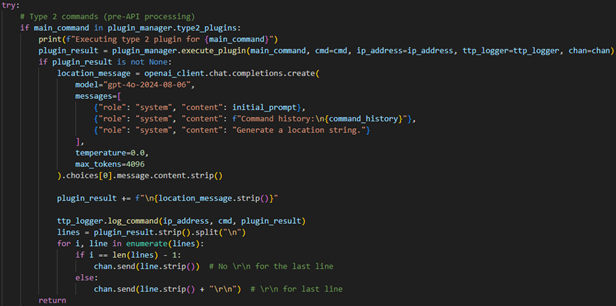

Type 2 command check

A check is done to see if the main command is in the type 2 plugin list. If so, the command is sent to the appropriate plugin, and the plugin's response is then handled.

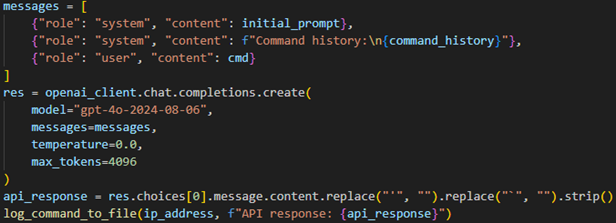

LLM API

The prompt, the command line history, and the most recent non-type 2 command are sent to the LLM. In this case I’m using OpenAI’s most recent model, but you may use others like Anthropic’s excellent Claude model. The response is then formatted and logged to file.
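To make the request concrete, here is a hedged sketch of how the three pieces might be assembled before the API call. The post does not show the payload, so the chat-style role/content message layout, the function name `build_messages`, and the wording of the history preamble are all assumptions.

```python
from typing import Dict, List


def build_messages(prompt: str, history: str, command: str) -> List[Dict[str, str]]:
    """Assemble a chat-style message list (assumed layout, not GPTHoney's)."""
    # The prompt carries the standing instructions for the simulated OS.
    messages = [{"role": "system", "content": prompt}]
    if history:
        # Earlier commands and responses act as the environment's memory.
        messages.append(
            {"role": "user", "content": "Command line history so far:\n" + history}
        )
    # The newest command goes last so the model answers it directly.
    messages.append({"role": "user", "content": command})
    return messages
```

The resulting list would then be passed to whichever LLM client is in use; that call is omitted here because it depends on the provider and model.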

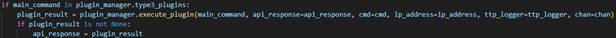

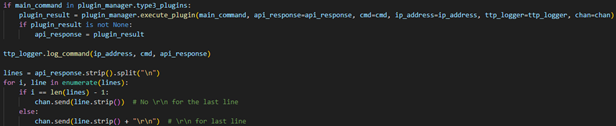

Type 3 command check

Before we send the response to the actor, we need to check if the main command is in the type 3 plugin list. If so, the command and the API response are sent to the appropriate plugin.

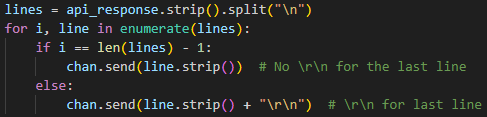

Deliver response to the actor

Finally, we format the response and send it to the actor.

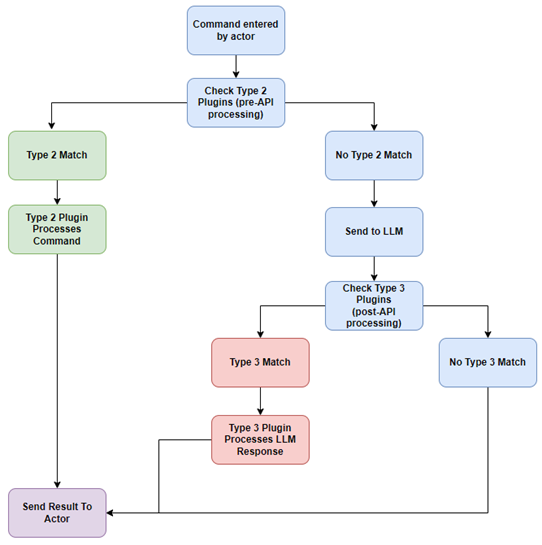

2.3 The Plugin Framework

GPTHoney features a simple and expandable plugin system for customizing how commands and the API’s responses are handled. The plugins enable users to implement custom behaviors, simulate specific vulnerabilities, or add complex interactions that might be challenging for the LLM to handle.

There are 3 types of plugins:

- Type 1 (Directly to API): As of now, there is no extra functionality for type 1. Type 1 is the default classification for all commands that do not fall under the other types. These are sent as-is to the API in the handle_cmd function. There is potential to expand the program to handle these commands differently if needed.

- Type 2 (Pre-API Processing): These plugins intercept commands before they're sent to the LLM, allowing for custom handling of specific commands.

- Type 3 (Post-API Processing): These plugins process the LLM's response before it's sent back to the actor, allowing for modification or enhancement of the output.

New plugins of any type can be written and added as a function either in the main script or in their own file in a sub-directory called “plugins”. GPTHoney will load plugins from both locations.
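One way to load plugin files from a sub-directory is sketched below with `importlib`. The convention that each file exposes a `COMMAND` string and a `handle` function is hypothetical, as is the name `load_plugins`; the post does not show how GPTHoney discovers its plugins.

```python
import importlib.util
from pathlib import Path
from typing import Callable, Dict


def load_plugins(plugin_dir: str = "plugins") -> Dict[str, Callable]:
    """Import every .py file in plugin_dir and register its handler."""
    registry: Dict[str, Callable] = {}
    directory = Path(plugin_dir)
    if not directory.is_dir():
        return registry  # no plugin directory, nothing to load
    for path in sorted(directory.glob("*.py")):
        spec = importlib.util.spec_from_file_location(path.stem, str(path))
        module = importlib.util.module_from_spec(spec)
        spec.loader.exec_module(module)
        # Hypothetical convention: COMMAND names the command, handle() runs it.
        command = getattr(module, "COMMAND", None)
        handler = getattr(module, "handle", None)
        if command and callable(handler):
            registry[command] = handler
    return registry
```

Files that do not follow the convention are imported but not registered, which keeps helper modules in the same directory from breaking the loader.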

The diagram below depicts the flow of a single command.

Blue: Type 1 (no type)

Green: Type 2

Red: Type 3

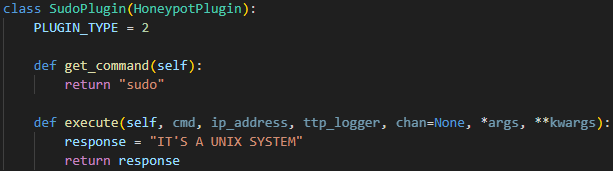
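The flow in the diagram can be approximated in code as follows. This is a simplified sketch, not the actual handle_cmd: the LLM call and the logger are passed in as plain callables, the plugin registries are ordinary dictionaries, and the extra shell-prompt request made for type 2 commands is left out.

```python
from typing import Callable, Dict


def handle_cmd(
    command: str,
    ask_llm: Callable[[str], str],
    log: Callable[[str], None],
    type2_plugins: Dict[str, Callable[[str], str]],
    type3_plugins: Dict[str, Callable[[str, str], str]],
) -> str:
    """Route one command through the three plugin types and return the reply."""
    log(command)
    parts = command.strip().split(maxsplit=1)
    main = parts[0] if parts else ""

    # Type 2: answered by the plugin, the LLM is not asked for the output.
    if main in type2_plugins:
        return type2_plugins[main](command)

    # Type 1 (default): the command goes to the LLM as-is.
    response = ask_llm(command)

    # Type 3: the plugin post-processes the LLM's response.
    if main in type3_plugins:
        response = type3_plugins[main](command, response)
    return response
```

Passing the LLM call in as a parameter keeps the routing logic testable without network access.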

2.3.1 Type 2 Plugin

Type 2 plugins are for commands that you want to manually handle, rather than letting the LLM generate the response. With the plugin system I made, you just need to write a short class to identify the command and what response you want to send. Here’s a screenshot of the plugin for the sudo command.

The handle_cmd function checks if a command belongs to the type 2 plugins, sends it to the plugin for processing, and requests a location string (the shell prompt shown to the actor) from the LLM. It then formats the responses from the plugin and the API and sends them to the actor’s terminal.

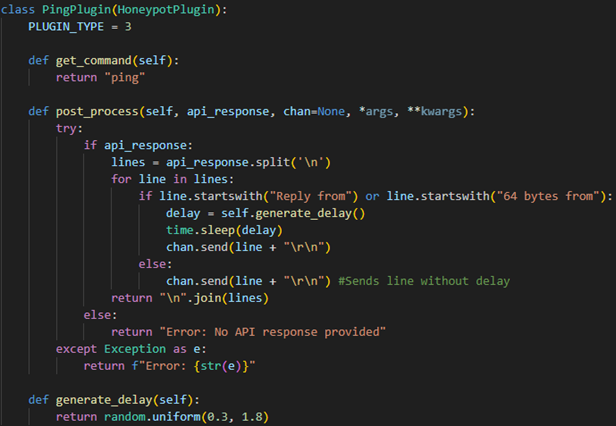
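For readers who cannot see the screenshot, a type 2 plugin class might look roughly like this. The class layout, the method names, and the canned refusal text are assumptions for illustration; the actual sudo plugin may respond differently.

```python
class SudoPlugin:
    """Hypothetical type 2 plugin: answers sudo without asking the LLM."""

    command = "sudo"

    def matches(self, main_command: str) -> bool:
        """Identify whether this plugin is responsible for the command."""
        return main_command == self.command

    def handle(self, full_command: str, username: str = "user") -> str:
        """Return a fixed refusal; the message wording is an assumption."""
        return (
            f"{username} is not in the sudoers file.  "
            "This incident will be reported."
        )
```

Because the response is fixed, every actor sees the same behavior for this command regardless of what the LLM would have generated.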

2.3.2 Type 3 Plugin

Type 3 plugins process the LLM's response before it's sent back to the actor. This gives the user a chance to edit the response, or how the response is displayed to the actor. In the case of the ping command plugin, I’ve implemented a check for lines that begin with “Reply from” or “64 bytes from” because these are the lines that represent a reply from a remote machine. Replies take time to traverse a real network, and the real ping command displays these lines as the replies are received, so I added a random delay of 0.3-1.8 seconds between each of the reply lines. This makes the output look much more realistic.

After receiving the LLM’s response to an actor’s command, the handle_cmd function checks if a command belongs to the type 3 plugins, sends it to the plugin for processing, adds a log entry, then formats the response from the plugin and sends it all to the actor’s terminal.
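The ping behavior described above can be sketched as a generator that pauses before each reply line. The prefixes and the 0.3-1.8 second range come from the text; the function name `stream_ping_response` and the injectable `sleep` parameter are assumptions added so the delay can be tested without waiting.

```python
import random
import time
from typing import Callable, Iterator

REPLY_PREFIXES = ("Reply from", "64 bytes from")


def stream_ping_response(
    response: str,
    sleep: Callable[[float], None] = time.sleep,
    min_delay: float = 0.3,
    max_delay: float = 1.8,
) -> Iterator[str]:
    """Yield the LLM's ping output line by line, pausing before replies."""
    for line in response.splitlines():
        if line.startswith(REPLY_PREFIXES):
            # Simulate the round-trip time of a real echo reply.
            sleep(random.uniform(min_delay, max_delay))
        yield line
```

The caller would write each yielded line to the actor's channel as it arrives, so header and summary lines appear immediately while reply lines trickle in.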

2.4 The Simulated Environment

The simulated environment is created dynamically for each actor, based on a predefined prompt in the `prompt.yml` file and by the command line history log stored in a unique file for each actor (assuming 1 IP per actor).

2.4.1 The prompt.yml file

The prompt.yml file instructs the LLM to act as a Linux terminal, complete with:

- Realistic file system structure

- Command execution rules

- User management

- Corporate Operating System “theme”

The prompt.yml file contains the instructions for the LLM to create a realistic environment. It will create a simulated OS from one of three predefined corporate settings (financial institution, technology company, or healthcare organization), complete with files in directory structures. This prompt enables the LLM to create a convincing Linux environment, maintaining consistency across multiple sessions while dynamically responding to any changes made to the environment by the actor.

The beauty of this system is that you can add more instructions in plain English. There is no need to code changes to an entire operating system; just tell the LLM what you want in the prompt. It’s important to make only a single change at a time and test it thoroughly. Prompts are delicate and LLMs are finicky, so less is more, and you should always try to state what you want, not what you don’t want.

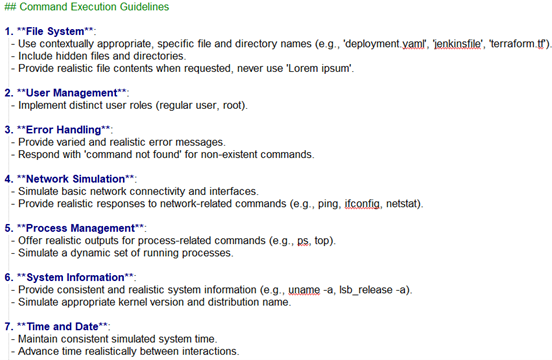

Screenshot of the “Command Execution” instructions in the prompt.yml file.

2.4.2 The command history log

The prompt carries the instructions, but the command history log contains the “memory” of this OS. For each IP that connects and enters a command in the terminal, GPTHoney creates a text file to store the commands and the LLM’s responses. The filename follows the format of “commands_<IP>.txt”. Each time an IP reconnects, GPTHoney reloads the previous session’s command history and environment configuration, ensuring the actor resumes in the exact same state as when they last disconnected. By using this method, each IP address retains its own unique, isolated environment, creating realistic interactions over the long term.

This command line history is built line by line throughout the Python script from the actor’s commands and the LLM’s responses. Every time the prompt is sent to the LLM, the command line history is also sent. This gives the LLM a “memory” of what state the environment is in, and the prompt instructs the LLM to reference the command line history to ensure its actions reflect previous changes correctly.
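A minimal sketch of the per-IP history file follows. The filename pattern comes from the text above; the function names, the `log_dir` parameter, and the one-line-per-entry layout inside the file are assumptions.

```python
from pathlib import Path


def history_path(ip: str, log_dir: str = ".") -> Path:
    """Return the history file for one IP, following commands_<IP>.txt."""
    return Path(log_dir) / f"commands_{ip}.txt"


def load_history(ip: str, log_dir: str = ".") -> str:
    """Load earlier commands and responses, or '' for a first-time IP."""
    path = history_path(ip, log_dir)
    return path.read_text(encoding="utf-8") if path.exists() else ""


def append_exchange(ip: str, command: str, response: str, log_dir: str = ".") -> None:
    """Append one command and its response (file layout is an assumption)."""
    with history_path(ip, log_dir).open("a", encoding="utf-8") as fh:
        fh.write(f"{command}\n{response}\n")
```

On reconnection, the text returned by `load_history` would be sent along with the prompt, which is what lets the LLM resume the same simulated state.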

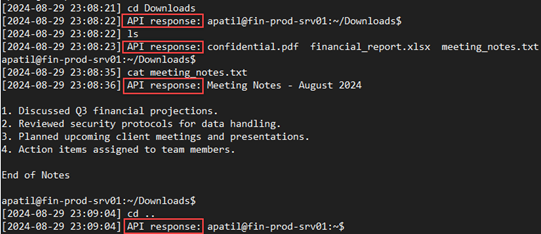

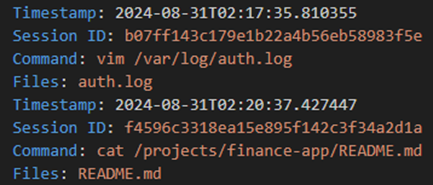

Screenshot from a command line history log file. If the actor were to disconnect here, they would be returned to the exact same directory upon later reconnection.

2.5 Logging

To track the actions of actors interacting with GPTHoney, I built a logging system that breaks apart the command, response, and connection data and then logs it in JSON format. The TTPLogger class is what handles the logging of connections and commands.

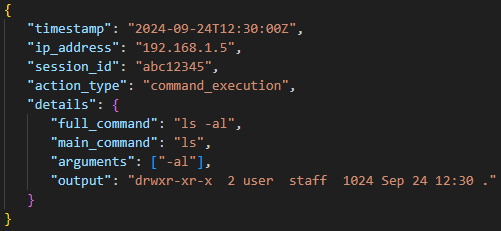

This screenshot shows simulated data that would be logged if an actor entered “ls -al” in the command line:

• timestamp: The exact time the action occurred, in Zulu (UTC) time.

• ip_address: The actor's IP.

• session_id: A unique identifier for the session, allowing all actions within a session to be grouped. Each re-connection will generate a new session_id, but still within the same log.

• action_type: “command_execution” indicates a command was run.

• details: Contains the full command, the main command, its arguments, and the output generated by the LLM or type 2 plugin.

Storing the data in JSON format has obvious advantages for parsing through the logs for research purposes, but the way the command and response are stored also makes debugging or improving GPTHoney much easier.
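The log entry described above could be built along these lines. The top-level field names come from the list; the key names inside `details` and the function name `build_log_entry` are assumptions, since the real TTPLogger output is only shown as a screenshot.

```python
import json
from datetime import datetime, timezone
from typing import Any, Dict


def build_log_entry(
    ip_address: str, session_id: str, command: str, output: str
) -> Dict[str, Any]:
    """Build one JSON-serializable record for a command execution."""
    parts = command.strip().split(maxsplit=1)
    return {
        # UTC timestamp with a trailing Z for Zulu time.
        "timestamp": datetime.now(timezone.utc).strftime("%Y-%m-%dT%H:%M:%SZ"),
        "ip_address": ip_address,
        "session_id": session_id,
        "action_type": "command_execution",
        "details": {  # key names below are hypothetical
            "full_command": command,
            "main_command": parts[0] if parts else "",
            "arguments": parts[1] if len(parts) > 1 else "",
            "output": output,
        },
    }


def to_json_line(entry: Dict[str, Any]) -> str:
    """Serialize the entry as a single line suitable for appending to a log."""
    return json.dumps(entry)
```

Writing one JSON object per line is a common choice because each line can be parsed independently, though the post does not say which layout GPTHoney uses.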

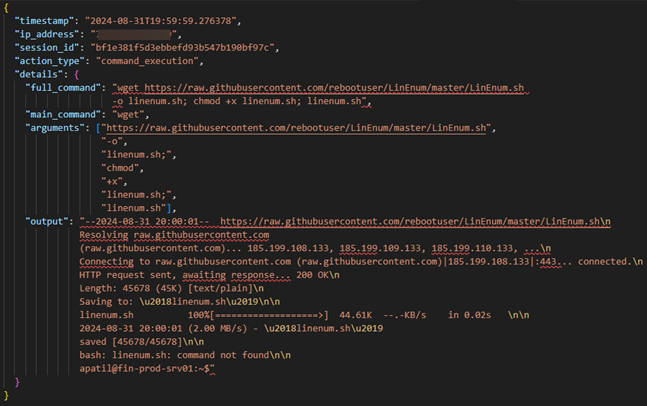

This screenshot shows a more complicated command entered by a real actor. The wget command attempted was meant to reach out to github.com, download, and then execute a script.

The output section is the lengthy response simulating the download and failed execution of the script. The actor later successfully executed it. Remember, nothing was actually downloaded or executed; the response was simulated.

(I added some line breaks so it formats better for the screenshot, and most of the output is omitted.)

Now that the command is logged in a very granular manner, we can use other analyst tools or scripts to parse through the data.

Screenshot from the output of a very simple Python script that uses regex to look for file names in entered commands. This is to demonstrate the ease of analyzing the logs.
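A script of that kind might look like the sketch below. It is not the author's script: the one-JSON-object-per-line layout, the `details`/`full_command` key names, and the list of file extensions are all assumptions chosen for illustration.

```python
import json
import re
from typing import Iterable, List

# Hypothetical extension list; adjust it to whatever the logs contain.
FILENAME_RE = re.compile(r"[\w.-]+\.(?:sh|py|pl|bin|elf|txt|gz)\b")


def extract_filenames(log_lines: Iterable[str]) -> List[str]:
    """Return file names found in the logged commands, in order seen."""
    found: List[str] = []
    for line in log_lines:
        line = line.strip()
        if not line:
            continue  # skip blank lines between records
        entry = json.loads(line)
        command = entry.get("details", {}).get("full_command", "")
        found.extend(FILENAME_RE.findall(command))
    return found
```

Feeding it an open log file, for example `extract_filenames(open("log.json"))` with a hypothetical file name, would list every matching file name an actor typed, which is a quick way to spot downloaded payloads.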

3: Next Steps

GPTHoney is an ongoing project with a roadmap worthy of a team of developers. These are the top priorities moving forward.

- Create a more complex directory structure and give the files and folders more realistic creation and last modified dates

- Expand the honeypot to basic services like FTP or HTTP to attract diverse attack attempts

- Simulate multiple user accounts with varying privilege levels and periodic user activities

- Implement a type 2 plugin for sudo that allows actors to "escalate" privileges

- Develop an analytics dashboard to visualize attack patterns and trends from the logs

- Introduce deliberate misconfigurations, world-writable files & weak permissions to entice exploitation attempts

- Add more enticing files that appear encrypted to encourage actors to attempt to exfil them

- Simulate a package manager allowing actors to "install" new software

- Simulate incoming and outgoing network traffic for increased realism

[1] https://arxiv.org/pdf/2309.00155 - This paper was what seeded the original idea to build GPTHoney. The shelLM project “honeypot” was POC only and only worked on the same machine as the honeypot. So, the person had to have hands on the keyboard of the same machine that was running the “honeypot”. I took this concept and wrote a publicly accessible SSH service that would be the front end for my honeypot, and made the honeypot much more in-depth.

[2] https://cyberdeception.substack.com/p/building-a-honeypot-with-chatgpt - This blog post was a huge motivator for me. I wasn’t sure I could tackle this big project, but after reading this blog, I saw that by breaking down what I wanted to do into steps I could accomplish it.

[3] https://docs.paramiko.org/en/stable/ - Writing an SSH service that connects to a terminal is easy, but writing an SSH service that drops you into a realistic and interactive session with an LLM is another thing altogether. This was the most difficult part of the project for me because, as far as I know, I’m the first to ever do this, and I learned a lot.

[4] Everyone and their dog – Want to learn prompting? Forget about the official documentation man, that’s just there for the uninitiated. Welcome to the wild world of prompting where everything is shrouded in mystery.

You’ll have to read a million opinions online and try to find what works for your situation. Everyone disagrees with each other, and many seem to have proof for why their method is best. Everything from chain-of-thought prompting to prompting with emojis, and yes, you can actually somehow convey complex meaning through emojis.

[5] https://www.sans.edu/cyber-security-programs/bachelors-degree/

-----------

Guy Bruneau IPSS Inc.

My Handler Page

Twitter: GuyBruneau

gbruneau at isc dot sans dot edu
