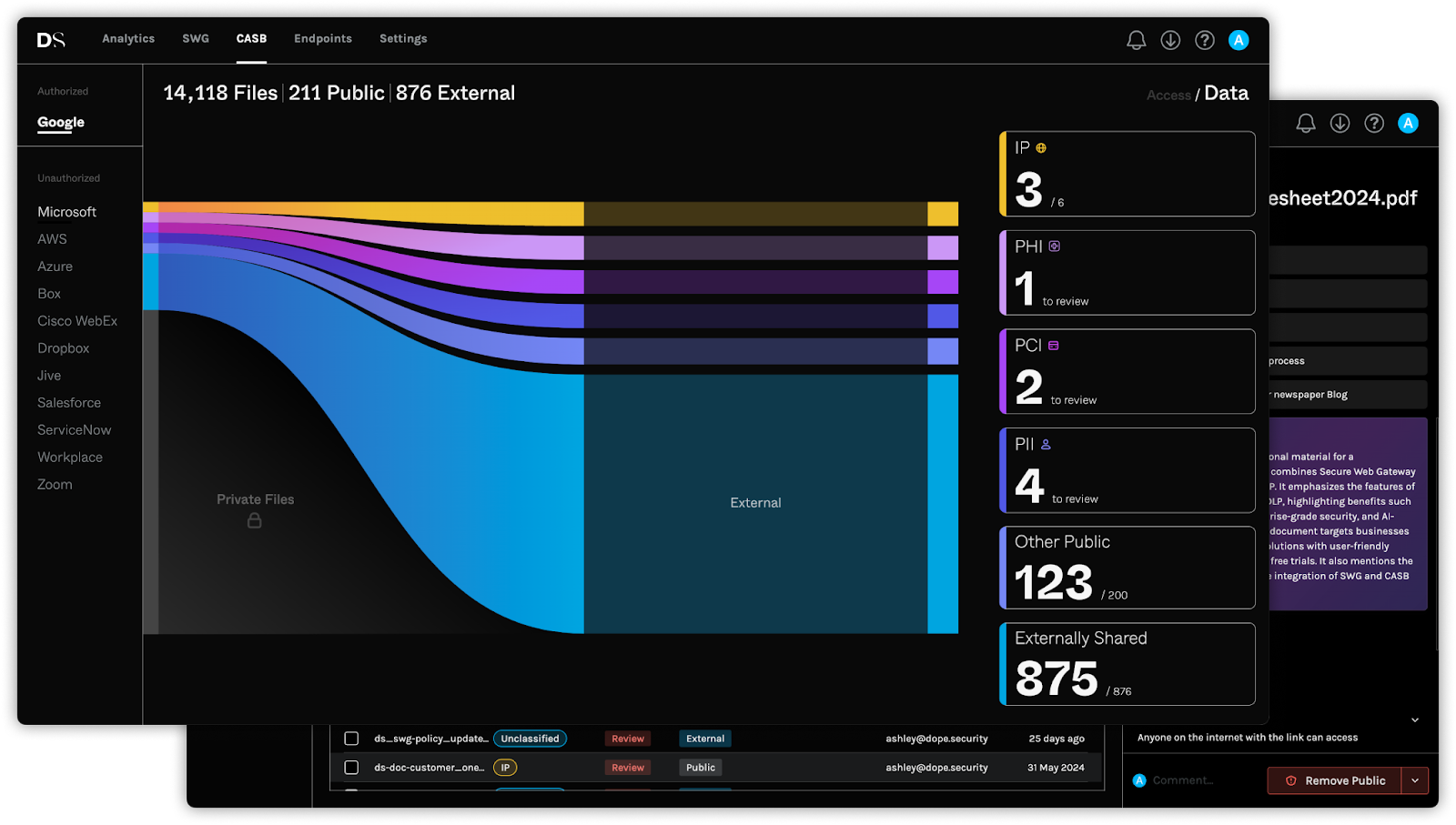

dope.security this week added to its portfolio a cloud access security broker (CASB) that identifies any externally shared file and uses a large language model (LLM) to determine whether it contains sensitive data.

Company CEO Kunal Agarwal said CASB Neutral provides a more precise approach to data loss prevention (DLP) than legacy offerings, which rely on pattern-matching techniques that surface too many false positives to be practical.

In contrast, CASB Neutral uses the LLM, dubbed Dopamine DLP, to generate a human-readable summary of each document, which cybersecurity teams can review before making a file unshareable with a single click, he added.

Previous generations of CASBs failed to gain traction because they could not actually read a document the way an LLM can to identify sensitive data, said Agarwal.

CASB Neutral complements a secure Web gateway (SWG) the company launched in 2023 that runs natively on the endpoint rather than requiring network traffic to first be routed through a server in a data center. That approach avoids the expense of backhauling network traffic through a data center, a practice that ultimately degrades cloud application performance, noted Agarwal.

In general, the focus should be on the endpoint, both to eliminate complexity and to reduce costs, he added. Rather than requiring organizations to replace their virtual private networks (VPNs), the security technologies developed by dope.security are designed to be deployed alongside them so that inspection occurs on the endpoint device, said Agarwal. It should not require a Ph.D. in networking to secure data, he added.

It’s not clear to what degree LLMs might transform data security, but cybersecurity teams should become more effective as they adopt artificial intelligence (AI) technologies that make it simpler to understand how sensitive data is being accessed. Armed with those insights, they can then apply controls more granularly and in a way that is less disruptive to the business.

Hopefully, as it becomes easier to identify instances where sensitive data is being exposed, the number of data breaches will drop. Additionally, organizations should find it easier to remain compliant with the various regulations whose violation can result in yet another fine. Compared with those potential costs, the time, effort and money required to apply AI to data security is relatively trivial.

Of course, there will never be such a thing as perfect security, but AI technologies should help level the playing field for cybersecurity teams that are currently overwhelmed by the amount of data they are supposed to keep secure. Unfortunately, end users don’t always take enough care to ensure sensitive data isn’t inadvertently exposed, so more data is being exposed than most anyone cares to admit.

The issue, as always, is that no matter who is at fault, it will be the cybersecurity team that is held accountable for allowing the exposure to occur in the first place.