2024-11-6 20:59:54 Author: securityboulevard.com(查看原文) 阅读量:2 收藏

Machine learning (ML) models are transforming industries—from personalized recommendations to autonomous driving and healthcare diagnostics. As businesses increasingly rely on ML models to automate complex tasks and make data-driven decisions, the need to protect these models from emerging threats has become critical.

At ReversingLabs, we’re proud to introduce a major update to our Spectra Assure™ product, with enhanced capabilities for detecting and mitigating ML malware. The term “ML Malware” here specifically refers to malicious code embedded within serialized ML models rather than within training datasets. This new feature is designed to safeguard your ML models from threats posed by malicious actors who exploit unsafe serialization formats to distribute malware. Here, we’ll walk you through what ML models are, why serialization/deserialization is risky, and how Spectra Assure now protects against these hidden dangers.

What Is an ML Model?

At its core, an ML model is a mathematical representation of a process that learns patterns from data and makes predictions or decisions based on those patterns. When you hear the term “AI” thrown around, this is usually what they’re referring to. These models are trained on large datasets, and once trained, they can be deployed to perform a variety of tasks.

Real-World Use Cases of ML Models:

- Recommendation Systems: ML models suggest products or content based on your browsing history

- Autonomous Vehicles: Self-driving cars use ML to recognize road signs, pedestrians, and other vehicles

- Healthcare: Models predict patient outcomes, diagnose diseases and assist doctors in treatment decisions

- Finance: Used for fraud detection, where they analyze real-time transaction data to identify suspicious activity and prevent financial fraud

Software developers are incorporating Large Language Model (LLM) functionality to create these features inside their applications.

In recent years, we’ve seen explosive growth in the use of (LLMs), such as OpenAI’s ChatGPT, Google’s Gemini, and Meta’s LLaMA. These models are increasingly becoming integral to AI-powered services like virtual assistants and customer support chatbots. As their use proliferates, so do the risks.

Fig 1: Top: SaaS/API-only models | Bottom: Flexible (self hosted/ on-prem) deployment models

The Need for Sharing and Saving ML Models

Training a machine learning model is an expensive process—it requires large datasets, immense computing power, and significant time. To save resources, companies and researchers often share their pre-trained models so that others can reuse these third-party models without having to retrain from scratch. This is where serialization and deserialization come into play.

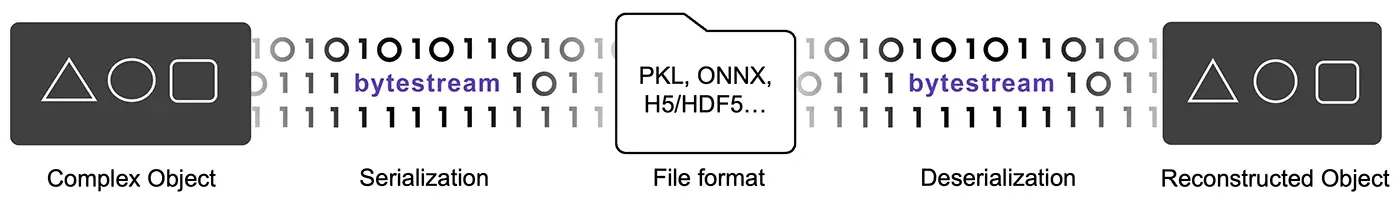

Serialization is the process of converting a trained model into a file format that can be saved, shared, or stored for later use. Deserialization is the reverse process, where the file is loaded back into memory so that the model can be used again. This allows teams to share ML models across projects or organizations, accelerating development.

However, this convenience brings unique security risks. Vulnerabilities in serialization and deserialization are common across programming languages and applications, and they present specific challenges in machine learning workflows. For instance, formats like Pickle, frequently used in AI, are especially prone to such risks.

Serialization & Deserialization: The Hidden Security Risk

When you serialize an ML model, you’re essentially packing it into a file format that can be shared. It’s similar to compressing a complex software application into a single file for easy distribution. But here’s the problem: certain file formats allow code execution during deserialization.

Fig 2: Serialization and Deserialization process

One of the most common formats used in Python is Pickle. While it’s efficient and widely adopted, Pickle is inherently unsafe because it allows embedded Python code to run when the model is loaded. Sometimes this is necessary. Python itself uses this feature to deserialize non-trivial data objects. However, this feature also opens the door to malicious actors, who can abuse it to inject harmful code into the model files.

These security risks are hidden and not covered by traditional SAST tools because they’re not analyzing code for intent, only weaknesses and known vulnerabilities.

Real-World Example: Imagine you download a pre-trained ML model from a popular platform like Hugging Face. Unknown to you, this model contains hidden Python code that runs as soon as you deserialize it. The code could:

- Execute malicious commands, infecting your machine with malware

- Open-network connections, sending sensitive data to an attacker

- Create new processes in the background, compromising your system

- Access system interfaces like the camera, microphone, or file system

- Corrupt other Pickle files on your machine

In fact, incidents have already been reported where malicious models were uploaded to platforms like Hugging Face, posing a serious threat to organizations that unknowingly downloaded and deployed them.

Spectra Assure’s New ML Malware Detection: How It Works

Given the growing risks associated with malicious ML models, our latest update to Spectra Assure enhances your security with advanced ML malware detection, ensuring your systems are protected from threats hidden in serialized models.

1. Supported Formats

We identify and parse serialized model formats, including Pickle (PKL), Numpy Array (NPY), Compressed Numpy Arrays (NPZ). By covering these widely used formats, we ensure broad protection against potentially malicious code embedded in the model files.

2. Behavioral Analysis

After parsing, we extract behaviors from the model, such as attempts to create new processes, execute commands, or establish network connections. This behavioral analysis flags unusual activities that could indicate malicious intent, bypassing traditional signature-based detections.

3. Mapping Unsafe Function Calls

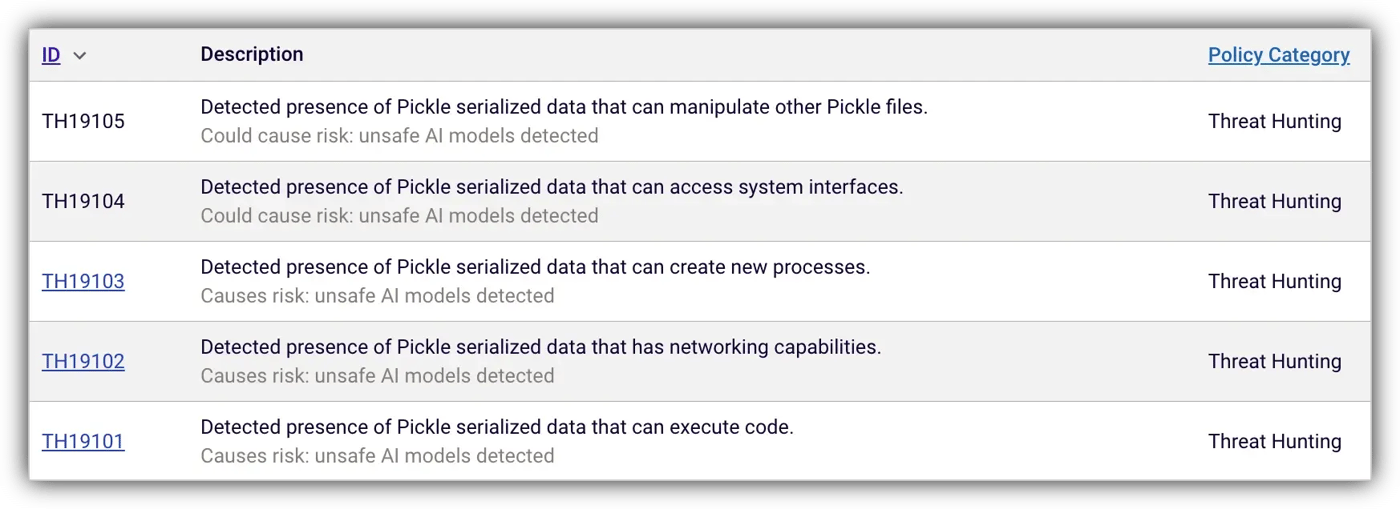

Specific behaviors are mapped into threat hunting policies. Our engine detects unsafe function calls during deserialization, especially in formats like Pickle, where malicious code can be triggered. This mapping helps us monitor for direct indicators of malicious activity.

4. Automated Classification

Once a model is flagged for suspicious behaviors, Spectra Assure automatically classifies it into a priority and risk category. This automated response ensures rapid detection, classification, and mitigation, preventing malware from spreading across your infrastructure.

Fig 3: Spectra Assure demo showing malware detection in ML model.

Malicious Techniques Hidden in Pickle Files

Here’s a breakdown of the malicious techniques attackers commonly use within serialized files:

- When deserialized, hidden code within the Pickle file can automatically run on your system. This could install malicious payload, manipulate your settings, or infect other files

- The malicious code may establish network connections, sending sensitive data to an attacker. This could include everything from system logs to confidential information

- The attacker’s code could spawn background processes that consume system resources or perform unauthorized tasks on your machine

Fig 4: Spectra Assure policies detecting risks in AI models.

- By exploiting system interfaces, the code could gain control over your camera, microphone, or even your file system, giving attackers unprecedented access to your machine

- Attackers may modify or corrupt other Pickle files on your system, making it difficult to detect or remove the malware

How Spectra Assure Protects You

With the rise of AI and machine learning, securing the ecosystem around ML models is more critical than ever. Spectra Assure’s new ML malware detection capabilities ensure that your environment remains safe at every stage of the ML model lifecycle:

- Before you bring a 3rd party LLM model into your environment, use Spectra Assure to check for unsafe function calls and suspicious behaviors and prevent hidden threats from compromising your system

- Before you ship or deploy an LLM model that you’ve created, use Spectra Assure to ensure it is free from supply chain threats by thoroughly analyzing it for any malicious behaviors

- Models saved in risky formats, such as Pickle, are meticulously scanned to detect any potential malware before they can impact your infrastructure

With these protections, you can confidently integrate, share, and deploy ML models without risking your system’s security.

Conclusion

As machine learning drives the next generation of technology, the security risks associated with model sharing and serialization are becoming increasingly significant. At ReversingLabs, we’re dedicated to staying ahead of these evolving threats with advanced detection and mitigation solutions, such as our ML malware protection. With the latest Spectra Assure update, you can confidently deploy ML models without concern for hidden malware threats.

Spectra Assure is a comprehensive software supply chain security solution that identifies and mitigates risks across software components, detecting malware, tampering, and exposed secrets throughout development and deployment. By integrating advanced ML malware detection into its robust feature set, Spectra Assure ensures your software ecosystem remains resilient and secure at every stage.

Stay tuned for further updates as we continue evolving our platform to address the needs of an ever-changing security landscape.

Are you ready to secure your machine learning workflows?

Contact us today to learn more about how Spectra Assure can help keep your models safe from emerging threats.

![]()

*** This is a Security Bloggers Network syndicated blog from ReversingLabs Blog authored by Dhaval Shah. Read the original post at: https://www.reversinglabs.com/blog/spectra-assure-malware-detection-in-ml-and-llm-models

如有侵权请联系:admin#unsafe.sh