TLDR: Tonic’s file connector is a quick and easy way to get de-identified data to power your development. Simply upload data in files (such as CSV) to detect and mask sensitive data, and then generate new output files for safe, fast use.

Tonic has consistently been the go-to test data platform for detecting and protecting your sensitive data in databases. The platform offers broad, native support for the most common relational and NoSQL databases as well as data warehouses.

But we know that data doesn’t just live in your databases. Files that contain sensitive data, and that people use directly for testing, are also scattered all over your organization. You have useful, production-level data in .csv, .tsv, .json, and .xml files that you need for testing migrations and applications. These files can live on local filesystems or in the cloud, such as in an Amazon S3 bucket or in Google Cloud Storage (GCS).

We know this because Stack Overflow is flooded with people struggling with PowerShell or Java scripts to get the full value from the data stored in their files. They don’t yet realize that a more effective solution exists.

The Tonic File Connector: No Database, No Problem

Tonic’s file connector solves the challenge of accessing the valuable yet sensitive data stored in different file types outside of your databases. With this capability, you can take advantage of Tonic without connecting to a database by directly uploading CSV, XML, or JSON files from various locations, including Amazon S3, Google Cloud Storage (GCS), or your local computer.

The file connector is a valuable tool for many different use cases:

- De-identify files received via email and then send them to teams for data analysis or testing.

- Test your file-based data pipelines with realistic data.

- Enable offshore teams and external contractors with safe file dumps.

Tonic provides you with the data management power to view, detect, and mask the data using the files’ inherent schema without additional database tooling. It’s one of the fastest ways to get started on Tonic, no matter where your data originates.

How to Mask Data In Files with Tonic

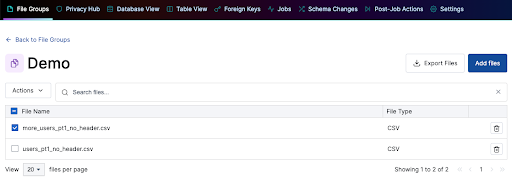

Under the File Groups tab, you can organize the data from your files into file groups by format (CSV, XML, JSON) and schema (for CSV, the same columns and delimiters).

Select one or more files to create a file group. When you select files, you see previews of the file content. For CSV files, you also tell Tonic how to parse them. For example, do the files have a header row? How are the columns delimited?
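To make those two CSV parsing choices concrete, here is a minimal sketch using Python’s standard `csv` module. It only illustrates what a header setting and a delimiter setting mean when a file is read. It is not Tonic’s implementation, and the function name `preview_csv`, the `column_N` fallback names, and the sample data are all hypothetical.

```python
import csv
import io


def preview_csv(text, delimiter=",", has_header=True, max_rows=5):
    """Parse CSV text and return (column_names, first_rows) for a preview."""
    rows = list(csv.reader(io.StringIO(text), delimiter=delimiter))
    if not rows:
        return [], []
    if has_header:
        # The first row supplies the column names.
        columns, data = rows[0], rows[1:]
    else:
        # No header row: fall back to positional names (hypothetical naming).
        columns = [f"column_{i}" for i in range(len(rows[0]))]
        data = rows
    return columns, data[:max_rows]


# Hypothetical semicolon-delimited file with a header row.
sample = "name;email\nAda;ada@example.com\nLin;lin@example.com\n"
columns, rows = preview_csv(sample, delimiter=";", has_header=True)
print(columns)
print(rows)
```

Parsing the same text with the default comma delimiter would produce a single column per row, which is why a preview is useful for confirming the settings before you create the group.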

You can add files to a group at any time. When you add new files, Tonic makes sure they have the correct structure and schema. The data from the new files becomes new rows in the Tonic file group “tables.”
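The idea of checking a new file’s structure before pooling its rows into the group’s “table” can be sketched as follows. This is an assumption-laden illustration, not how Tonic validates files internally: the `FileGroup` class, its `add_file` method, and the rule that headers must match exactly are all hypothetical.

```python
import csv
import io


class FileGroup:
    """A hypothetical file group: one set of columns, rows pooled from many files."""

    def __init__(self, columns):
        self.columns = list(columns)
        self.rows = []

    def add_file(self, text, delimiter=","):
        """Append a CSV file's rows, rejecting files whose header differs."""
        reader = csv.reader(io.StringIO(text), delimiter=delimiter)
        header = next(reader, None)
        if header != self.columns:
            raise ValueError(
                f"schema mismatch: expected {self.columns}, got {header}"
            )
        # Skip blank lines; every remaining row becomes a row in the group.
        new_rows = [row for row in reader if row]
        self.rows.extend(new_rows)
        return len(new_rows)


group = FileGroup(["id", "email"])
group.add_file("id,email\n1,ada@example.com\n")
group.add_file("id,email\n2,lin@example.com\n3,sam@example.com\n")
print(len(group.rows))
```

A file with a different header, such as `id,phone`, would raise `ValueError` here. In this sketch, that is the signal that the file belongs in a separate group.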

Have a file with a different structure? Not to worry!

You can always add a new file group to accommodate these differences and still ensure that your data remains consistent across these groups, allowing you to expand and add new information as needed.

After a file group is created, your Tonic application functions pretty much like it does with any relational database. Tonic treats each file group as a table. In these tables, you can detect sensitive data in your files, configure the appropriate generators, run data generation, and view the generation job details once complete.

When you kick off a generation, Tonic generates de-identified copies of your real files. You can then download them to your local drive, or, if you use cloud storage, you can access the de-identified files from there.
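As a rough picture of what “a de-identified copy of a file” means, the sketch below rewrites a CSV so that chosen columns are replaced with keyed-hash tokens while the structure stays the same. It is a stand-in for illustration only. Tonic’s generators are configured in the product and can produce realistic values, whereas this example swaps in a simple HMAC-based token. The function names, the `masked_` prefix, and the secret are hypothetical.

```python
import csv
import hashlib
import hmac
import io


def mask_value(value, secret):
    """Replace a value with a stable keyed-hash token (same input, same token)."""
    digest = hmac.new(secret, value.encode("utf-8"), hashlib.sha256).hexdigest()
    return f"masked_{digest[:12]}"


def deidentify_csv(text, sensitive_columns, secret):
    """Return a copy of the CSV text with the named columns masked."""
    reader = csv.DictReader(io.StringIO(text))
    out = io.StringIO()
    writer = csv.DictWriter(out, fieldnames=reader.fieldnames, lineterminator="\n")
    writer.writeheader()
    for row in reader:
        for column in sensitive_columns:
            row[column] = mask_value(row[column], secret)
        writer.writerow(row)
    return out.getvalue()


# Hypothetical input: the same email appears on rows 1 and 3.
sample = "id,email\n1,ada@example.com\n2,lin@example.com\n3,ada@example.com\n"
masked = deidentify_csv(sample, ["email"], secret=b"demo-secret")
print(masked)
```

Because the token depends only on the input value and the secret, the repeated email on rows 1 and 3 maps to the same token. That is the kind of consistency that keeps joins and lookups working in test data.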

To learn more, check out our video tutorial, which provides an overview of the file connector and shows how to manage file groups.

Real-World Value from File Connector

The file connector supports as wide a variety of use cases as files themselves do. We have seen it be especially beneficial in the healthcare and finance industries.

For healthtech companies, patient data is typically received in files (admissions info, clinical supplies info, etc.) that need to be ingested into backend pipelines to inform testing for clinical applications. Being able to test applications and data pipelines on production-like data allows developers to distinguish issues with the data from issues with their code, which helps them improve their product faster.

Many fintech companies have processes (applications, code, etc.) running downstream of their files, so having de-identified files in lower environments helps them model their production environment to test code changes and troubleshoot. Further, this data can come from different sources. The file connector helps maintain continuity as well as compliance.

Beyond these applications, the file connector speeds up Tonic’s time to value by simplifying onboarding, since it doesn’t require database connections or credentials. It’s great for prototyping a masking process or completing a one-off de-identification job for a new or unsupported database. The file connector is the latest feature we’ve added to help you get all of the benefits of realistic, safe data to accelerate your development process, increase dev workflow efficiency, and boost software quality. It’s real fake data, just as you need it. Sign up for a free trial of Tonic to start de-identifying your files in minutes.

*** This is a Security Bloggers Network syndicated blog from Expert Insights on Synthetic Data from the Tonic.ai Blog authored by Expert Insights on Synthetic Data from the Tonic.ai Blog. Read the original post at: https://www.tonic.ai/blog/how-to-mask-sensitive-data-in-files-from-csv-to-json