2022-6-17 16:56:15 Author: blog.avast.com

This incident shows a fundamental problem of stopping disinformation on the internet: disinformation is global.

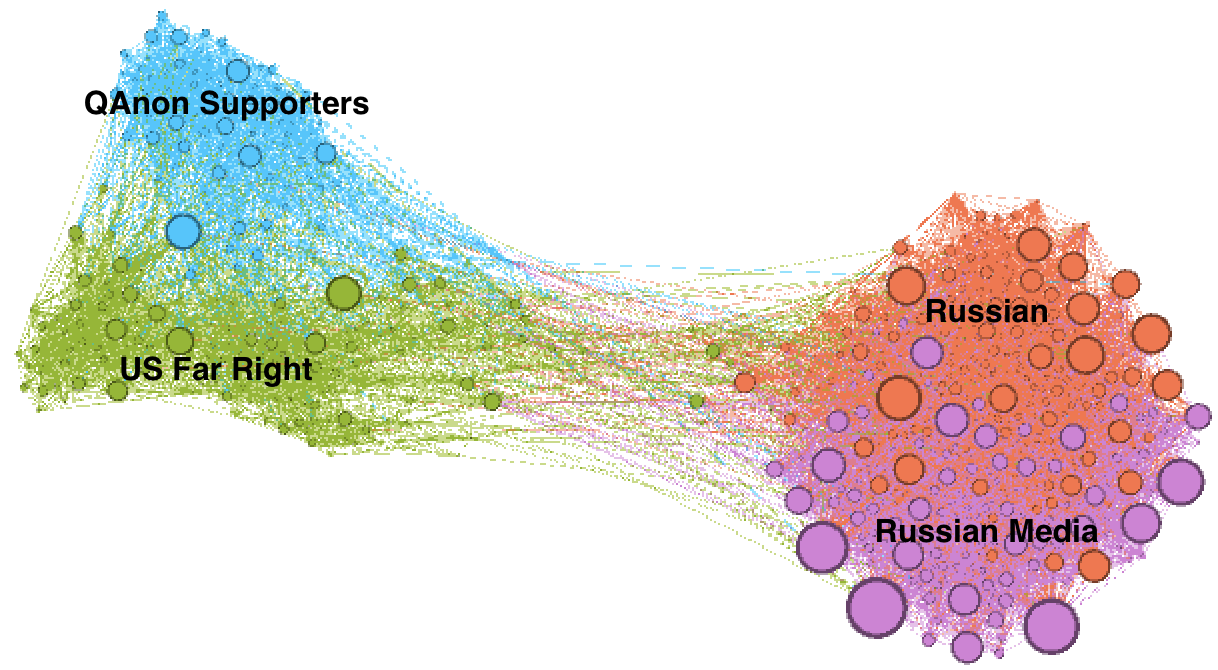

Authors: Sadia Afroz and Vibhor Sehgal

On February 24, a conspiracy theory emerged that Russia attacked Ukraine to destroy a clandestine U.S. weapons program. This narrative, started by a QAnon follower on Twitter, quickly became one of the “official” reasons for invading Ukraine. The Russian Embassy in Sarajevo posted about it on Facebook. Since then, media networks in other countries, including China, India, and the US, have boosted the Biolabs conspiracy theory to millions of internet users.

With the flurry of disinformation coming from Russia, the EU banned Russian government-backed websites. Social media companies including YouTube, Facebook, Instagram, and Twitter blocked Russian disinformation accounts, banned ads from Russian state-backed media, and started labeling posts linked to Russian media. However, this conspiracy theory, along with many others, is still running rampant in many US far-right news media and social networks.

This incident shows a fundamental problem of stopping disinformation on the internet: disinformation is global. Russian disinformation doesn’t necessarily originate in Russia. Disinformation that starts as an innocent query takes on a life of its own as it spreads and evolves into a full-blown conspiracy theory. And, once spread, disinformation can crowd out real news. While Western companies have blocked some of these disinformation sources, they have been slow to block all disinformation spreaders, especially those originating in Western countries. To effectively mitigate disinformation, we need a global effort to limit its spread.

Can we map the spread of disinformation?

Telegram as a testbed

Telegram is a free instant messaging service available for desktop and mobile devices. Currently, Telegram has 550 million monthly active users and 55.2 million daily users. Telegram allows all users to create channels with an unlimited number of subscribers, which makes it a powerful tool for mass communication.

Telegram channels are content feeds in which the admins of a channel post messages for their subscribers. A channel can be either private (requires an invitation) or public (free to join). Telegram provides no algorithmic feed and no targeted advertising, which is attractive to many users frustrated with mainstream social media platforms. However, the lack of content moderation has made it a breeding ground for disinformation.

In the wake of the Russian invasion of Ukraine, Telegram became one of the main sources of information about the invasion. The Ukrainian government adopted Telegram to communicate with Ukrainians. At the same time, Russian supporters started using the same platform to spread their propaganda. For us scientists, Telegram became a perfect microcosm in which to study disinformation.

Who is spreading disinformation?

To discover who is spreading disinformation about the Russian invasion, we focused on message forwarding on Telegram. Channels on Telegram can post new messages they created and can also forward messages from other Telegram channels. Message forwarding amplifies a particular piece of information across Telegram and can cause it to spread virally. Repeated message forwarding among channels can reveal a connection between them. We also suspect that users find similar channels by following forwarded messages, since Telegram doesn’t provide any automated algorithmic feed. The message-forwarding relationship can help label the activities of an unknown channel: if a channel is always forwarding messages from known disinformation channels, it is highly likely to be a disinformation spreader.

To create the network of Russian disinformation spreaders, we start with one known Russian government-controlled channel on Telegram, Donbass-Insider, and then automatically crawl all the public channels from which Donbass-Insider forwarded messages. We crawled Telegram twice, once at the beginning of March and again at the end of April, to understand how the disinformation communities evolved over time.

Figure 1: Mapping of Telegram channels. Each dot represents a channel, and a connection between two channels indicates that at least 20 messages from one channel were forwarded to the other channel. Channels are grouped into communities based on the prevalence of message forwarding among them. The two graphs show the channels' relationships in March (top) and April (bottom). As time progresses, Russian and US far-right groups become the main spreaders of Russian disinformation.

The network of channels shows an interesting dynamic: far-right groups from the US and France are spreading Russian disinformation, along with known Russian allies from China.

Let’s focus on the pink cluster, which consists mostly of US far-right groups. One of the most active channels in this cluster is called “TheStormHasArrived**” (the real name is masked), which has over 137k followers. This channel is associated with the QAnon conspiracy, as is evident from its use of the most popular QAnon parlance, “The storm.” The channel supports the Russian invasion by spreading misinformation about Nazis and Biolabs in Ukraine and, at the same time, accuses US President Joe Biden of funding the Biolabs.

Figure 2: A post from TheStormHasArrived**

French conspiracy theorists are also using Russian disinformation to propagate their agenda. One well-known conspiracy theorist, Silvano Trotta, had been spreading misinformation about the Covid vaccine and has now started spreading the narrative that the humanitarian crisis caused by the war in Ukraine is fake (Figure 3).

Figure 3: Covid and Ukraine disinformation from Silvano Trotta

Labeling disinformation requires a tremendous amount of manual effort, which makes it hard to quash disinformation as soon as it starts spreading. One observation can help solve the labeling issue: entities sharing disinformation are closely connected. This turned out to be true in the case of the domains that share disinformation. To see if the same is true on Telegram, we take a close look at the map of the different types of channels.

Let’s, for example, check out the map of two particular channels: a known pro-Ukraine channel (UkraineNOW) and a known disinformation channel (Donbass-Insider). UkraineNOW forwarded messages from other pro-Ukraine and government channels. Donbass-Insider forwarded messages from other pro-Russian propaganda channels, including Intelslava (a known disinformation channel) (link 1, link 2, link 3). This phenomenon, in which channels sharing similar information are connected, holds for most channels in our dataset.

Figure 4: Message forwarding map of UkraineNOW (top) and Donbass-Insider (bottom). The circles represent channels, and an edge from channel A to channel B means that channel A forwarded a message from channel B. The color of a circle corresponds to the type of channel (orange represents pro-Ukraine channels, purple represents pro-Russian channels, and green represents US far-right channels). The size of a circle represents the number of channels that forwarded messages from that channel.

Sources of disinformation

Where is the disinformation on Telegram coming from? To answer this question, we look at the URLs shared on Telegram. We collected 479,452 unique URLs from 5,335 channels. About 10% of these URLs come from 258 unique disinformation domains identified by two popular fact-checking organizations, Media Bias/Fact Check (MBFC) and EUvsDisinfo. The 258 domains constitute 22% of the disinformation domains MBFC and EUvsDisinfo found. Of the 258, 83.72% (216 domains) were listed on Media Bias/Fact Check as fake news domains, and the remaining 16.28% (42 domains) were flagged on EUvsDisinfo for disinformation articles.

These domains seem to be hosted all around the world and target people in countries including India, China, Israel, Syria, France, the UK, the USA, and Canada. The list includes eight .news domains that usually focus on US far-right conspiracies and are closely connected with other .news domains known for sharing disinformation. We also found many other domains spreading disinformation that no public source had labeled as disinformation.

Telegram has millions of users and channels. About 5,000 channels might seem like too small a sample to understand the space, but it already reveals several insights that can help tackle disinformation on a large scale.

Strategies for effective intervention

“Homo sapiens is a post-truth species, whose power depends on creating and believing fictions,” Yuval Harari said in “21 lessons for the 21st century.” Alternative narratives existed before the invention of the internet. Disinformation, in essence, is just another fiction. Tech companies are under tremendous pressure to tackle disinformation, but completely blocking all alternative narratives might be impossible, or even undesirable, as it might limit users' freedom of speech.

Is the battle against disinformation already lost, then? No! We recommend three new directions that tech companies can focus on to tackle the problem:

Provide context

Research shows that when users are shown a verdict about a piece of news, they want to know why. Users also want to know who made the decision. Context can change the meaning of a piece of information. For example, knowing whether a piece of information was published in the Onion (a popular satire news site) or the New York Times can change people’s perspective on it. On social media, we often believe people based on their background, such as professional titles and affiliations with different groups.

Unearthing background information about online entities, their activities, and who else they are connected with can take a regular user a long time. This is where automated technical approaches can expedite the data collection and synthesis process. However, the real challenge is to present the data in a way that convinces users. In collaboration with Georgia Tech, we developed a way to visualize the hyperlink connectivity between websites. Our user study with 139 users demonstrated that hyperlink connectivity helps the majority of users assess a website’s reliability and understand how the site may be involved in spreading false information.

Slow the spread

Social media platforms have struggled to curb viral disinformation because the platforms are designed to help content that increases user engagement go viral. Disinformation often increases user engagement and thus goes viral before fact-checking can catch up. Indeed, false information spreads further on Twitter than factual information.

Research shows that notifying users about inaccurate content can help slow its spread. Research also shows that even making users aware of inaccuracies in the content they see helps slow the spread. However, warning labels alone might slightly decrease the spread but do not completely stop it, as Facebook research found. On Twitter, hard interventions (such as outright blocking the content) were more useful for limiting the spread than soft interventions (such as showing warning labels).

Crowdsource community consensus

The most pressing problem for disinformation is timely labeling. Manual labeling cannot keep up with viral disinformation. Automated labeling can help, but it can be easily bypassed by changing the content slightly. To keep up with disinformation, we need a semi-automated approach in which machine learning-based systems help select potentially damaging disinformation, which is then labeled by many regular internet users. Automated systems can then help synthesize the crowdsourced labels.

Many open research questions need to be answered to implement such a system, such as how to convince users to participate in accurate labeling and how to protect the labeling process from malicious actors.
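To make the forwarding analysis from “Who is spreading disinformation?” more concrete, here is a minimal Python sketch. It is not our crawler or analysis code. It assumes the forwarding records have already been collected as (forwarding channel, original channel) pairs, keeps only connections with at least 20 forwarded messages (the threshold used in Figure 1), and computes how much of a channel's retained forwarding comes from known disinformation channels. The names `channel_a`, `channel_b`, and `some_news` and the helper functions are hypothetical and exist only for illustration.

```python
from collections import Counter, defaultdict

def build_forward_graph(records, min_forwards=20):
    """Return {forwarder: {source: count}}, keeping pairs with >= min_forwards."""
    counts = Counter(records)  # counts identical (forwarder, source) pairs
    graph = defaultdict(dict)
    for (forwarder, source), n in counts.items():
        if n >= min_forwards:
            graph[forwarder][source] = n
    return dict(graph)

def disinfo_share(graph, channel, known_disinfo):
    """Fraction of a channel's retained forwards that come from known disinformation channels."""
    sources = graph.get(channel, {})
    total = sum(sources.values())
    if total == 0:
        return 0.0
    flagged = sum(n for src, n in sources.items() if src in known_disinfo)
    return flagged / total

# Hypothetical forwarding records: (forwarding_channel, original_channel).
records = (
    [("channel_a", "Donbass-Insider")] * 30
    + [("channel_a", "Intelslava")] * 25
    + [("channel_a", "some_news")] * 5   # below the threshold, so the edge is dropped
    + [("channel_b", "UkraineNOW")] * 40
)

graph = build_forward_graph(records)
known = {"Donbass-Insider", "Intelslava"}
share_a = disinfo_share(graph, "channel_a", known)
share_b = disinfo_share(graph, "channel_b", known)
print(share_a, share_b)
```

A channel with a share close to 1 forwards almost exclusively from known disinformation channels and would be a candidate for manual review. Any cutoff for flagging a channel is a judgment call that this sketch does not settle.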

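The URL analysis from “Sources of disinformation” can be illustrated in a similar way. The sketch below is an assumption about how such matching could be done, not a description of our pipeline: it extracts the host from each URL with Python's standard `urllib.parse`, strips a leading `www.`, and checks the host and its parent domains against a set of flagged domains. The domains under `.example` are placeholders. In practice the flagged set would come from lists such as those published by MBFC and EUvsDisinfo.

```python
from urllib.parse import urlparse

def domain_of(url):
    """Lowercased host of a URL without a leading 'www.' (empty string if none)."""
    host = urlparse(url).hostname or ""
    return host[4:] if host.startswith("www.") else host

def is_flagged(domain, flagged):
    """True if the domain or any of its parent domains is in the flagged set."""
    parts = domain.split(".")
    return any(".".join(parts[i:]) in flagged for i in range(len(parts)))

def flagged_share(urls, flagged):
    """Fraction of unique URLs whose domain is flagged."""
    unique = set(urls)
    if not unique:
        return 0.0
    hits = sum(1 for u in unique if is_flagged(domain_of(u), flagged))
    return hits / len(unique)

# Placeholder data for illustration only.
flagged_domains = {"disinfo.example"}
urls = [
    "https://www.disinfo.example/a",
    "https://m.disinfo.example/b",   # subdomain of a flagged domain
    "https://reliable.example/c",
    "https://reliable.example/c",    # duplicate, counted once
    "https://other.example/d",
]
share = flagged_share(urls, flagged_domains)
print(share)

# The percentage split reported above, recomputed from the raw counts.
mbfc_pct = round(100 * 216 / 258, 2)
euvs_pct = round(100 * 42 / 258, 2)
print(mbfc_pct, euvs_pct)
```

Matching on parent domains means that mobile or regional subdomains of a flagged site are counted too. Whether that is desirable depends on how the fact-checking lists define a domain.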