Many interesting problems in software design boil down to “I need my client application to know a secret, but I don’t want the user of that application (or malware) to be able to learn that secret.”

Some examples include:

- Digital Rights Management encryption keys

- Passwords stored in a Password Manager

- API keys to use web services

- Private keys for local certificate roots

…and likely others.

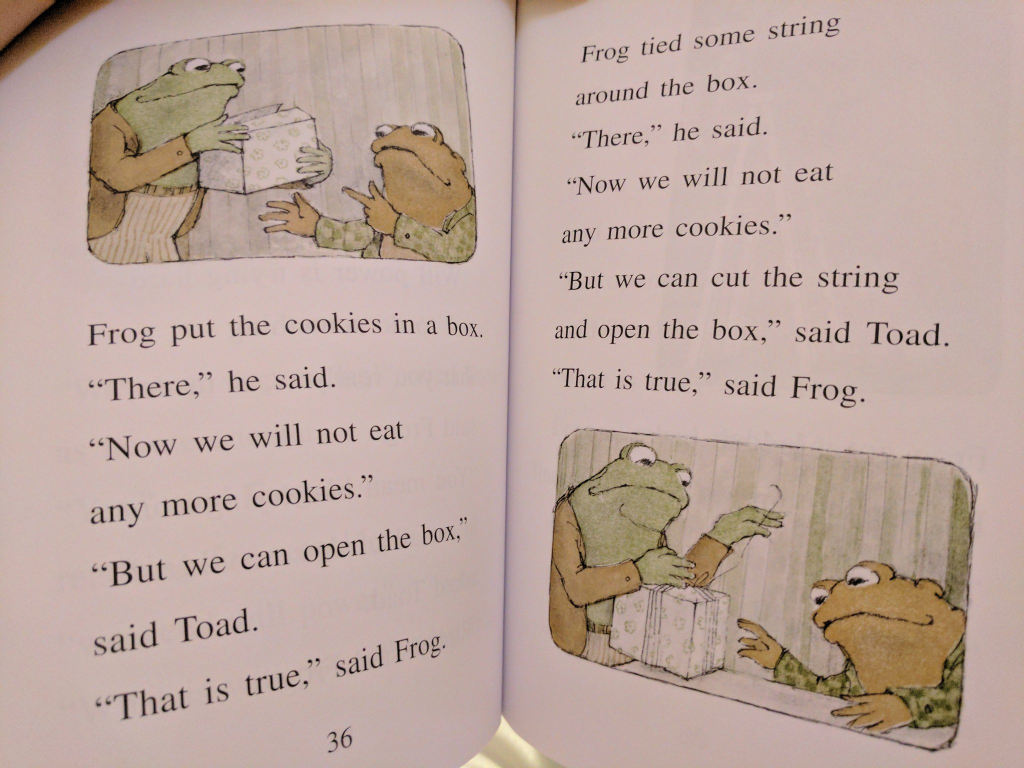

In general, if your design relies on having a client protect a secret from a local attacker, you’re doomed. As eloquently outlined in the story “Cookies” in 1971’s Frog and Toad Together, anything the client does to try to protect a secret can also be undone by the client:

For example, a “sufficiently motivated” attacker can steal hardware-stored encryption keys directly off the hardware. A user can easily read passwords filled by a password manager out of the browser’s DOM, and malware running inside the user’s account can read them out of encrypted storage, because it runs with access to the user’s encryption key. An attacker can read keys by viewing or debugging a binary that contains them, or watch API keys flow by in outbound HTTPS traffic. Etc.
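To make the “Cookies” point concrete: any obfuscation a client applies to an embedded secret must be reversible by that same client, so anyone holding the client can reverse it too. The Python sketch below is illustrative only; the key is hypothetical and the XOR mask is a stand-in for whatever obfuscation a real product might use. The attacker recovers the key simply by repeating the client’s own deobfuscation step.

```python
"""Why obfuscating an embedded secret does not protect it (illustrative sketch)."""

# Hypothetical API key that a client wants to "hide" inside its binary.
SECRET_API_KEY = b"example-api-key-12345"

# Hypothetical obfuscation: XOR every byte with a fixed mask so the key
# does not appear as a plain string in the shipped code.
MASK = 0x5A


def obfuscate(data: bytes) -> bytes:
    """XOR each byte with MASK; XOR is its own inverse, so this also deobfuscates."""
    return bytes(b ^ MASK for b in data)


# This blob is what would actually ship inside the client.
SHIPPED_BLOB = obfuscate(SECRET_API_KEY)


def client_get_key() -> bytes:
    """The legitimate client must undo the obfuscation before it can use the key."""
    return obfuscate(SHIPPED_BLOB)


def attacker_get_key(blob: bytes) -> bytes:
    """An attacker who reads (or debugs) the client repeats the client's own step."""
    return bytes(b ^ MASK for b in blob)


if __name__ == "__main__":
    print("plain key visible in blob:", SECRET_API_KEY in SHIPPED_BLOB)  # False
    print("attacker recovered key:", attacker_get_key(SHIPPED_BLOB) == client_get_key())  # True
```

Swapping XOR for real encryption does not change the outcome: the decryption key then has to ship with the client, and the attacker extracts that instead.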

However, just because a problem cannot be solved does not mean that developers won’t try.

“Trying” isn’t entirely madness: believing that every would-be attacker is “sufficiently motivated” is as big a mistake as believing that your protection scheme is truly invulnerable. If you can raise the difficulty level enough at a reasonable cost (in complexity, performance, etc.), it may be entirely rational to do so.

Some approaches include:

- Move the secret off the client. For example, instead of having your client call the service provider’s web service directly, have it call a proxy on your own website that adds the key before forwarding the request. An attacker might still masquerade as your application (or automate it) to reach the service through your proxy, but at least they will be constrained in which calls they can make, and you can apply rate limits, IP reputation checks, etc., to mitigate abuse.

- Replace the key with short-lived tokens that are issued by an authenticated service. E.g. the Microsoft Edge VPN feature requires that the user be logged in with their Microsoft account (MSA) to use the VPN. The feature uses the user’s credentials to obtain tokens that are good for 1GB of VPN traffic quota apiece. An attacker wishing to abuse the VPN service has to generate fake Microsoft accounts, and there are robust investments in making that non-trivially expensive for an attacker.

- Use hardware to make stealing secrets more difficult. For example, you can store a private key inside a TPM, which makes it very difficult to extract the key and move it to a different device. Keep in mind that locally running malware could still use the key by treating the compromised device as a sock puppet.

- Similarly, on devices that support it, you can use a Secure Enclave/Virtual Secure Mode to help ensure that a secret cannot be exported and to control which processes can ask the enclave to use the key for some purpose. For example, Windows 11’s Enhanced Phishing Protection stores a hashed version of the user’s login password inside a secure enclave so that it can check whether recently typed text contains the user’s password, without exposing that hash to arbitrary code running on the PC.

- Derive protection from other mechanisms. For instance, there’s a Microsoft Web API that demands that every request bear a matching hash of the request parameters. An attacker could easily steal the hash function out of the client code. However, Microsoft holds a patent on the hash function, so any application that contains this code contains prima facie evidence of patent infringement, and Microsoft can pursue remedies in court. (Assuming a functioning legal system in the target jurisdiction, etc., etc.)

- If the threat is from a compromised device but not a malicious user, enlist the user to help protect the secret. For example, re-encrypt the data with a “main password” known only to the user, require off-device confirmation of credential use, etc.
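The first approach above, moving the key into a proxy you control, can be sketched in a few lines. This is a minimal Python illustration under stated assumptions, not a production design: the endpoint names, `UPSTREAM_API_KEY`, the `Bearer` header, the limits, and the `KeyAddingProxy` class are all hypothetical, and the actual network call to the provider is abstracted as an injected `send_upstream` function.

```python
"""Sketch: a proxy that adds a server-held API key, with an endpoint
allow-list and a per-client sliding-window rate limit (all names hypothetical)."""
import time
from collections import defaultdict, deque
from typing import Callable, Deque, Dict, Optional

# Hypothetical server-side configuration; none of this ships to the client.
UPSTREAM_API_KEY = "server-side-secret"
ALLOWED_ENDPOINTS = {"/v1/translate", "/v1/detect"}
MAX_REQUESTS = 3          # per client IP, per window
WINDOW_SECONDS = 60.0

# (path, params, headers) -> upstream response
SendUpstream = Callable[[str, dict, dict], dict]


class KeyAddingProxy:
    def __init__(self, send_upstream: SendUpstream) -> None:
        self._send_upstream = send_upstream
        # Recent request timestamps, keyed by client IP address.
        self._history: Dict[str, Deque[float]] = defaultdict(deque)

    def handle(self, client_ip: str, path: str, params: dict,
               now: Optional[float] = None) -> dict:
        now = time.monotonic() if now is None else now

        # Constrain which calls can be made through the proxy.
        if path not in ALLOWED_ENDPOINTS:
            return {"status": 403, "error": "endpoint not allowed"}

        # Sliding-window rate limit: forget timestamps older than the window.
        recent = self._history[client_ip]
        while recent and now - recent[0] >= WINDOW_SECONDS:
            recent.popleft()
        if len(recent) >= MAX_REQUESTS:
            return {"status": 429, "error": "rate limit exceeded"}
        recent.append(now)

        # The key is attached only here, on the server, and only the
        # upstream response (never the key) goes back to the client.
        headers = {"Authorization": f"Bearer {UPSTREAM_API_KEY}"}
        return self._send_upstream(path, dict(params), headers)


if __name__ == "__main__":
    proxy = KeyAddingProxy(lambda path, params, headers: {"status": 200, "path": path})
    print(proxy.handle("203.0.113.7", "/v1/translate", {"q": "hello"}))
    print(proxy.handle("203.0.113.7", "/v1/admin", {}))
```

Injecting `send_upstream` keeps the sketch testable without a network and makes the key’s one-way flow explicit. As the text notes, this does not stop an attacker from driving the proxy, but it limits them to the allowed calls at the allowed rate.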
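The short-lived-token approach can likewise be sketched. This is not how the Edge VPN feature is implemented; it is a hypothetical Python illustration assuming that authentication has already happened and produced an account ID, that tokens are opaque random strings tracked server-side (generated with the standard `secrets` module), and that each token carries a 1 GB quota and a one-hour lifetime chosen purely for illustration.

```python
"""Sketch: short-lived, quota-limited tokens issued only to signed-in accounts.

Assumptions (hypothetical): the caller of issue() has already authenticated the
account; tokens are opaque and tracked server-side; each is good for QUOTA_BYTES
of traffic and expires after TOKEN_TTL_SECONDS.
"""
import secrets
import time
from dataclasses import dataclass
from typing import Dict, Optional

QUOTA_BYTES = 1_000_000_000    # 1 GB per token, mirroring the VPN example
TOKEN_TTL_SECONDS = 3600.0     # hypothetical lifetime


@dataclass
class Grant:
    account_id: str
    remaining_bytes: int
    expires_at: float


class TokenService:
    def __init__(self) -> None:
        self._grants: Dict[str, Grant] = {}

    def issue(self, account_id: str, now: Optional[float] = None) -> str:
        """Issue a fresh token to an already-authenticated account."""
        now = time.time() if now is None else now
        token = secrets.token_urlsafe(32)
        self._grants[token] = Grant(account_id, QUOTA_BYTES, now + TOKEN_TTL_SECONDS)
        return token

    def consume(self, token: str, nbytes: int, now: Optional[float] = None) -> bool:
        """Charge nbytes against the token; refuse unknown, expired, or exhausted tokens."""
        now = time.time() if now is None else now
        grant = self._grants.get(token)
        if grant is None or now >= grant.expires_at:
            self._grants.pop(token, None)  # forget expired tokens
            return False
        if nbytes > grant.remaining_bytes:
            return False
        grant.remaining_bytes -= nbytes
        return True
```

Because every token traces back to an account, abuse costs the attacker accounts rather than a single leaked key, which is where the text says the real defensive investment lies.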

-Eric

Impatient optimist. Dad. Author/speaker. Created Fiddler & SlickRun. PM @ Microsoft 2001-2012, and 2018-2022, working on Office, IE, and Edge. Now a SWE on Microsoft Defender Web Protection. My words are my own, I do not speak for any other entity.