Cloudflare is officially a teenager. We launched on September 27, 2010. Today we celebrate our thirteenth birthday. As is our tradition, we use the week of our birthday to launch products that we think of as our gift back to the Internet. More on some of the incredible announcements in a second, but we wanted to start by talking about something more fundamental: our identity.

Like many kids, it took us a while to fully understand who we are. We chafed at being put in boxes. People would describe Cloudflare as a security company, and we'd say, "That's not all we do." They'd say we were a network, and we'd object that we were so much more. Worst of all, they'd sometimes call us a "CDN," and we'd remind them that caching is a part of any sensibly designed system, but it shouldn't be a feature unto itself. Thank you very much.

And so, yesterday, the day before our thirteenth birthday, we finally announced to the world what we realized we are: a connectivity cloud.

The connectivity cloud

What does that mean? "Connectivity" means we measure ourselves by connecting people and things together. Our job isn't to be the final destination for your data, but to help it move and flow. Any application, any data, anyone, anywhere, anytime — that's the essence of connectivity, and that’s always been the promise of the Internet.

"Cloud" means the batteries are included. It scales with you. It’s programmable. It has consistent security built in. It’s intelligent: it learns from your usage and others' and optimizes for outcomes better than you ever could on your own.

Our connectivity cloud is worth contrasting against some other clouds. The so-called hyperscale public clouds are, in many ways, the opposite. They optimize for hoarding your data. Locking it in. Making it difficult to move. They are captivity clouds. And, while they may be great for some things, their full potential will only truly be unlocked for customers when combined with a connectivity cloud that lets you mix and match the best of each of their features.

Enabling the future

That's what we're seeing from the hottest startups these days. Many of the leading AI companies are using Cloudflare's connectivity cloud to move their training data to wherever there's excess GPU capacity. We estimate that across the AI startup ecosystem, Cloudflare is the most commonly used cloud provider. Because, if you're building the future, you know connectivity and the agility of the cloud are key.

We've spent the last year listening to our AI customers and trying to understand what the future of AI will look like and how we can better help them build it. Today, we're releasing a series of products and features born of those conversations that open up incredible new opportunities.

The biggest opportunity in AI is inference. Inference is what happens when you type a prompt to write a poem about your love of connectivity clouds into ChatGPT and, seconds later, get a coherent response. Or when you run a search for a picture of your passport on your phone, and it immediately pulls it up.

The models that power those modern miracles take significant time to generate — a process called training. Once trained though, they can have new data fed through them over and over to generate valuable new output.

Where inference happens

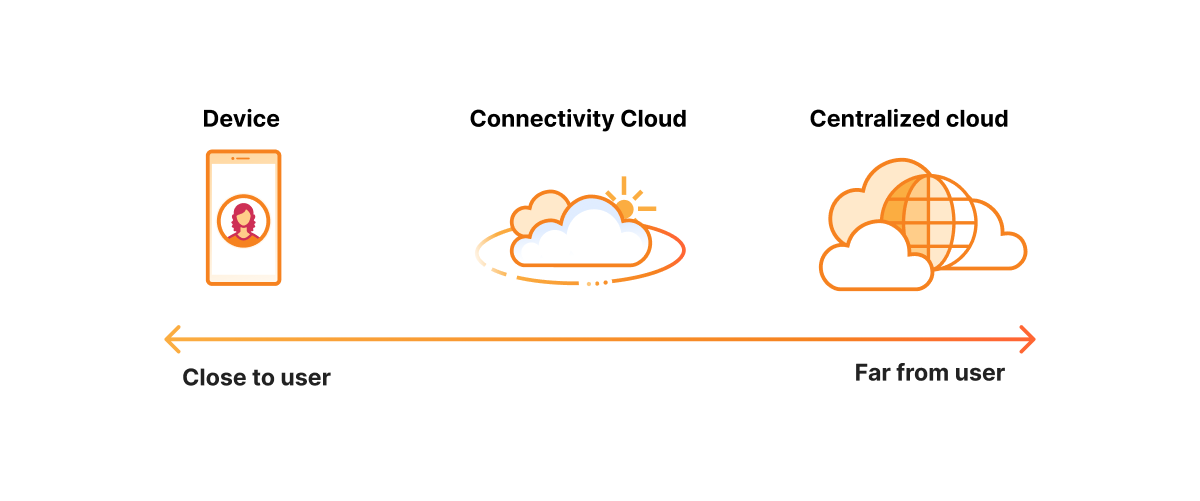

Before today, those models could run in two places. The first was the end user's device — like in the case of the search for “passport” in the photos on your phone. When that's possible it's great. It's fast. Your private data stays local. And it works even when there's no network access. But it's also challenging. Models are big and the storage on your phone or other local device is limited. Moreover, putting the fastest GPU resources to process these models in your phone makes the phone expensive and burns precious battery resources.

The alternative has been the centralized public cloud. This is what’s used for a big model like OpenAI’s GPT-4, which runs services like ChatGPT. But that has its own challenges. Today, nearly all the GPU resources for AI are deployed in the US — a fact that rightfully troubles the rest of the world. As AI queries get more personal, sending them all to some centralized cloud is a potential security and data locality disaster waiting to happen. Moreover, it's inherently slow and less efficient and therefore more costly than running the inference locally.

A third place for inference

Running on the device is too small. Running on the centralized public cloud is too far. It’s like the story of “Goldilocks and the Three Bears”: the right answer is somewhere in between. That's why today we're excited to be rolling out modern GPU resources across Cloudflare's global connectivity cloud. The third place for AI inference. Not too small. Not too far. The perfect step in between. By the end of the year, you'll be able to run AI models in more than 100 cities in 40+ countries where Cloudflare operates. By the end of 2024, we plan to have inference-tuned GPUs deployed in nearly every city that makes up Cloudflare's global network and within milliseconds of nearly every device connected to the Internet worldwide.

(A brief shout out for the Cloudflare team members who are, as of this moment, literally dragging suitcases full of NVIDIA GPU cards around the world and installing them in the servers that make up our network worldwide. It takes a lot of atoms to move all the bits that we do, and it takes intrepid people spanning the globe to update our network to facilitate these new capabilities.)

Running AI in a connectivity cloud like Cloudflare gives you the best of both worlds: nearly boundless resources running locally near any device connected to the Internet. And we've made it flexible enough to run whatever models a developer creates, easy to use without needing a DevOps team, and inexpensive to run, because you pay only when we're doing inference work for you.

To make this tangible, think about a Cloudflare customer like Garmin. They make devices that need to be smart but also affordable and have the longest possible battery life. As explorers rely on them literally to navigate out of harrowing conditions, tradeoffs aren't an option. That's why, when they heard about Cloudflare Workers AI, they immediately knew it was something they needed to try. Here's what Aaron Dearinger, Edge Architect at Garmin International, said to us:

"Garmin is alongside our users for all their runs, workouts, and outdoor adventures, and we want to ensure that our watches and devices' power can last weeks and months instead of hours or days. We're excited for Cloudflare's innovations to ensure AI is performant, fast, close to users, and importantly privacy-first. This holds a lot of potential to us as we aim to bring more advanced fitness, wellness, and adventure insights to our users as they explore."

They are one of the limited set of customers we gave an early sneak peek to, all of whom immediately started rattling off ideas for what they could do next and clamoring for more access. We feel like we’ve seen it and are here to report: the not-so-distant future is super cool.

The spirit of helping build a better Internet

Over the years we've announced several things on our birthday that have gone on to change the future of the Internet. On our first birthday, we announced an IPv6 gateway that has helped the Internet scale past its early protocol decisions. On our fourth birthday, we announced that we were making encryption free and doubled the size of the encrypted web in a day. On our seventh birthday, we launched the Workers platform that has revolutionized how modern applications are developed. On our eleventh birthday we announced R2 and further freed data from the captivity clouds.

This year, as we celebrate Cloudflare's thirteenth birthday, we set out to have as dramatic an impact on AI development as we have in other areas we've focused on in the past: providing tools that bring affordable, powerful AI inference to anyone, anywhere on Earth.

Closing with a poem

And so, to commemorate that, let's close with a poem about the connectivity cloud. This one was generated using a tiny bit of code and Meta's Llama 2 model running on Cloudflare's Workers AI platform in a data center not far from where we're writing this…

Prompt: “Write a poem that talks about the connectivity cloud and contrast it with the captivity cloud”

The connectivity cloud, a wondrous sight to see,

Where data flows and knowledge is set free,

A place where minds meet and ideas take flight,

A world of endless possibility in sight.

But then there's the captivity cloud, a darker place,

Where thoughts are trapped and minds are in disgrace,

A prison of the mind, where freedom's lost,

A world of endless sorrow and cost.

So let us choose the connectivity cloud,

And let our minds be free and allowed,

To soar and glide and reach for the sky,

And never be held back, but always fly.
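For readers curious what a "tiny bit of code" for a poem like this might look like, here is a minimal sketch in TypeScript. It is not the code we actually ran. It assumes the Workers AI REST endpoint takes the form `/accounts/{account_id}/ai/run/{model}`, that the model ID is `@cf/meta/llama-2-7b-chat-int8`, and that the generated text comes back under `result.response`. The helper names `buildPoemRequest` and `generatePoem` are made up for illustration.

```typescript
// Sketch only: the endpoint shape, model ID, and response shape are assumptions,
// and both helper names are hypothetical.
const MODEL = "@cf/meta/llama-2-7b-chat-int8"; // assumed Workers AI model ID

interface AiRunRequest {
  url: string;
  init: { method: string; headers: Record<string, string>; body: string };
}

// Minimal structural type for a fetch-like function, so the sketch can be
// exercised with a stub instead of a real network call.
type FetchLike = (
  url: string,
  init: AiRunRequest["init"],
) => Promise<{ ok: boolean; status: number; json(): Promise<unknown> }>;

// Build the HTTP request that asks the model to complete a prompt.
function buildPoemRequest(accountId: string, apiToken: string, prompt: string): AiRunRequest {
  return {
    url: `https://api.cloudflare.com/client/v4/accounts/${accountId}/ai/run/${MODEL}`,
    init: {
      method: "POST",
      headers: {
        Authorization: `Bearer ${apiToken}`,
        "Content-Type": "application/json",
      },
      body: JSON.stringify({ prompt }),
    },
  };
}

// Send the request and pull the generated text out of the JSON response.
async function generatePoem(
  fetchFn: FetchLike,
  accountId: string,
  apiToken: string,
  prompt: string,
): Promise<string> {
  const { url, init } = buildPoemRequest(accountId, apiToken, prompt);
  const res = await fetchFn(url, init);
  if (!res.ok) {
    throw new Error(`Inference request failed with status ${res.status}`);
  }
  // Assumed response shape: { result: { response: "..." } }
  const data = (await res.json()) as { result?: { response?: string } };
  return data.result?.response ?? "";
}
```

In a runtime with a global `fetch`, calling `generatePoem(fetch, accountId, apiToken, "Write a poem that talks about the connectivity cloud and contrast it with the captivity cloud")` would send the prompt above. Passing the fetch function in as a parameter is just a convenience that lets the request-building logic be checked without touching the network.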
