‘Twas the week before Christmas and I ran across a deterministic-build bug. And then another one. One was in Chromium, and the other was in Microsoft Windows. It seemed like a weird coincidence so I thought I’d write about both of them (the second one can be found here).

A deterministic build is one where you get the same results (bit identical intermediate and final result files) whenever you build at the same commit. There are varying levels of determinism (are different directories allowed? different machines?) that can increase the level of difficulty, as described in this blog post. Deterministic builds can be quite helpful because they allow caching and sharing of build results and test results, thus reducing test costs and giving various other advantages.

It may seem that deterministic builds would happen automatically – don’t computers produce the same results every time? – but in fact deterministic builds can be quite challenging. Lots of tools embed timestamps and file paths in output files and those have to be dealt with. The same blog post describes these challenges and others.

Chromium

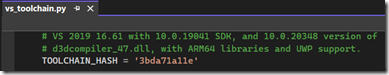

Luckily for me various smart software developers have worked hard to make Chromium builds deterministic. If you and a friend both check out the Chromium source code at the same commit and build chrome.dll (or any other output binary) with the same build arguments then you will get identical .obj and .dll and .exe output. Well, this is mostly true.  Google developers have a build package that contains all of the Visual Studio and Windows SDK headers/libs/tools that we depend on so that we all use exactly the same build files so we get determinism automatically. For you and your friend to get identical results you need to make sure you have the same Visual Studio and Windows SDK versions. But if you do that you will get identical build results, and that is a Good Thing™.

Google developers have a build package that contains all of the Visual Studio and Windows SDK headers/libs/tools that we depend on so that we all use exactly the same build files so we get determinism automatically. For you and your friend to get identical results you need to make sure you have the same Visual Studio and Windows SDK versions. But if you do that you will get identical build results, and that is a Good Thing™.

I knew all this. And I knew that we had build machines that tested this. So when I added a new build argument and I wanted to check that it wasn’t affecting the build output I knew that I could just build Chromium twice, once with and once without the flag, and confirm that the builds were identical.

They weren’t.

I found that chrome.dll was different, and not in a benign or trivial way. The size of the .text section, that contains all of the code, was varying by about 10 KB. The set of functions included in the .text section was varying. This was a determinism failure of the first order.

Since Chromium builds are deterministic I knew that the new build flag had to be the problem, so I filed a bug. I was told that the build flag shouldn’t be changing the output and I was asked to test again to see if use_pdb_alt_paths=true was the problem, since that does (by design) cause non-determinism. I turned that off and the builds were still non-deterministic. Then I turned off the build flag that I was testing and the builds were still non-deterministic. Then I thought that the directory name might be causing a problem (it shouldn’t!!!) and eventually I found that two subsequent builds in the same directory were different.

This all seemed… impossible. Why was I getting non-deterministic builds while our determinism-verification builders were happily green?

This all seemed… impossible. Why was I getting non-deterministic builds while our determinism-verification builders were happily green?

It’s worth mentioning I was doing my builds using our distributed build system, goma. It is supposed to guarantee reproducibility, and it is used on our bots that do reproducibility testing. However I had found that disabling goma made the problem go away. This was obviously a clue, but I didn’t yet know what to make of it.

Since I found the problem I was, by default, responsible for investigating it until I could figure out who to hand it off to. I needed to get scientific. I wrote a simple batch file that built a small test target, copied the results elsewhere, built again, and then compared all of the result files. Some, like .ninja_log and .ninja_deps contain timestamps and would be different every time, but all of the other files were supposed to be the same.

I focused on the .obj files since you can’t have deterministic DLLs without deterministic .obj files. My assumption was that the .obj files were different because of differences in generated source code and that looking at the .obj files would let me find the variably generated source. After a few runs of my batch file I found that there were about ten .obj files that varied between builds, but they weren’t always the same files!

Twelve down, one to go?

So far I had come up with about a dozen guesses and hypotheses and they had all been wrong. Oh well – that means that there were a dozen causes that I had eliminated, and I must be getting closer.

My next step was to compare a couple of the .obj files that had changed. I ran “dumpbin /disasm win_util.obj” and then compared the output to find clues. I found the differences, and they were all of this form:

< lea rdx,[??_C@_0BM@MFMJGBJM@?4?4?1?4?4?1base?1scoped_generic?4h?$AA@]

> lea rdx,[??_C@_0BM@LFHBMLEF@?4?4?1?4?4?2base?1scoped_generic?4h?$AA@]

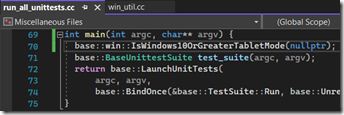

Huh. I ran an undecorate command on that mangled name and it just told me it was a string. I thought it would be useful to see what string it was, but the code in question wasn’t even called in the test binary that I had built, so I modified the test binary to call the function so that I could step

Huh. I ran an undecorate command on that mangled name and it just told me it was a string. I thought it would be useful to see what string it was, but the code in question wasn’t even called in the test binary that I had built, so I modified the test binary to call the function so that I could step  in using a debugger. I found that the string was “../../base/scoped_generic.h” (obvious in hindsight), and it was being referenced in a CHECK statement in the ScopedGeneric destructor in that header file.

in using a debugger. I found that the string was “../../base/scoped_generic.h” (obvious in hindsight), and it was being referenced in a CHECK statement in the ScopedGeneric destructor in that header file.

Further inspection showed that all of the differences in the disassembly matched this pattern, varying only in the source file being referenced by the string.

The string in question clearly came from the __FILE__ macro which is used in our CHECK statements in order to indicate the location of errors. The long names for the strings were the symbols generated by the compiler so that the linker could pull in the necessary string constants. I now knew that the symbolic names for the __FILE__ macro were being generated inconsistently, but I didn’t know why.

At this point I had no choice. I had to invoke the Feynman Problem Solving Algorithm. I’d already written down the problem, so I thought really hard, and then I remembered something. When building with goma the compile steps are sent off to a data center in order to let me do 1,000-way build parallelism. However some compile steps are also run  locally. These local compiles use otherwise idle CPU cores (have I mentioned that I have a workstation with 48 logical CPUs?) and if they complete first then their output is used instead of the result from the data center. In other words, goma builds have a by-design race condition. Both paths are supposed to produce the same results, but if the two paths produced different results then this would perfectly explain the randomly different .obj files.

locally. These local compiles use otherwise idle CPU cores (have I mentioned that I have a workstation with 48 logical CPUs?) and if they complete first then their output is used instead of the result from the data center. In other words, goma builds have a by-design race condition. Both paths are supposed to produce the same results, but if the two paths produced different results then this would perfectly explain the randomly different .obj files.

I searched through my archives and found that “set GOMA_USE_LOCAL=false” would disable local compilation, re-ran my tests, and suddenly the builds were deterministic again!

Huzzah!

Thirteenth time is the charm, and finally one of my guesses was correct. So, it appears that goma builds and local builds are slightly different. They create different symbolic names for __FILE__ macros. This means that if two different .obj files use __FILE__ in a header file (like the ScopedGeneric destructor in ../../base/scoped_generic.h) then they might get different symbolic names. The linker does all of its work based on these symbolic names so if the names vary then the linker doesn’t know that the strings are identical, so it pulls in two copies of one string. If one of these copies is in the same COMDAT as a function then pulling in the __FILE__ string may also pull in some code, and suddenly this subtle difference in the .obj files leads to different DLLs and EXEs. That is, some __FILE__ strings seem to be attached to code, so pulling in an extra __FILE__ string may pull in extra code (this suggests that we may be routinely pulling in a bit more code than we need – that’s worth investigating).

Thirteenth time is the charm, and finally one of my guesses was correct. So, it appears that goma builds and local builds are slightly different. They create different symbolic names for __FILE__ macros. This means that if two different .obj files use __FILE__ in a header file (like the ScopedGeneric destructor in ../../base/scoped_generic.h) then they might get different symbolic names. The linker does all of its work based on these symbolic names so if the names vary then the linker doesn’t know that the strings are identical, so it pulls in two copies of one string. If one of these copies is in the same COMDAT as a function then pulling in the __FILE__ string may also pull in some code, and suddenly this subtle difference in the .obj files leads to different DLLs and EXEs. That is, some __FILE__ strings seem to be attached to code, so pulling in an extra __FILE__ string may pull in extra code (this suggests that we may be routinely pulling in a bit more code than we need – that’s worth investigating).

Update: A more plausible way that the different __FILE__ constants could break determinism would be by breaking /OPT:ICF. If a template function is compiled from two different translation units with two different type parameters then the generated code may end up being the same, and the functions may get “folded” together due to the /OPT:ICF option. If the generated code references different __FILE__ strings then this optimization opportunity will be lost. That is, functions like the one below may get compiled with two different __FILE__ constants and will then not be folded together, leading to extra functions in the binary.

template <typename T> void Foo(T* p) {

printf(“%s%p”, __FILE__, p);

}

When I started writing this I didn’t know why the symbolic names for scoped_generic.h varied between local and remote compiles, but while writing this blog post I figured it out. There’s a hint in the paragraph with the screenshot of some assembly language – go take a look. I’ll wait.

The text refers to ../../base/scoped_generic.h but the screenshot refers to ../..\\base/scoped_generic.h. The text came from the bug report which was debugging a goma build. The screenshot came from a repro on my home machine, which used a non-goma build. And the difference is the middle slash, which is a slash on goma builds, and a back-slash on non-goma builds. That is, the strings have different symbolic names because they are actually different! This is obvious in hindsight, but then again, that is generally how hindsight works. The strings are generated by the compiler when it finds base/scoped_generic.h in the ../.. directory, so the difference is simply in how those two paths are joined together.

The text refers to ../../base/scoped_generic.h but the screenshot refers to ../..\\base/scoped_generic.h. The text came from the bug report which was debugging a goma build. The screenshot came from a repro on my home machine, which used a non-goma build. And the difference is the middle slash, which is a slash on goma builds, and a back-slash on non-goma builds. That is, the strings have different symbolic names because they are actually different! This is obvious in hindsight, but then again, that is generally how hindsight works. The strings are generated by the compiler when it finds base/scoped_generic.h in the ../.. directory, so the difference is simply in how those two paths are joined together.

The fix will probably be to teach the compiler to more aggressively use slashes instead of backslashes when it concatenates paths, and then improve our determinism tests to exercise this case.

A mystery isn’t truly solved until you understand why this wasn’t previously noticed, so I looked at our build machines. I found that they use GOMA_USE_LOCAL=false (for unknown reasons), thus avoiding this bug. Most developers don’t use this flag but they would have no reason to notice this type of build indeterminism.

That’s all for now. I’ll publish my other build determinism story in a day or two and link to it from here.

Post-mortem

A few days after publishing this post I got CCed on another build determinism bug. I couldn’t reproduce it, but after looking at the right spot in the bot output I found the problem, in some generated JavaScript files. Roughly speaking the difference was:

< // Copyright 2021 The Chromium Authors…

> // Copyright 2022 The Chromium Authors…

Whoops! Due to bad timing something triggered regeneration of these JavaScript files on a build that spanned the change of year, so some of the second-build files got different copyright dates. These files got embedded into resources and nothing matched anymore.

The failure persisted for at least a week because nothing else triggered regenerating the JavaScript files – the first/second build files continued to be different. The solution was to take the last-commit date (which we use for build timestamps) and pass that to the code-gen scripts so that they can use that to get the year.

Time and dates are hard, and anytime you ask the system for the current time – in a build system or in a video game – you are laying the groundwork of a determinism bug.

如有侵权请联系:admin#unsafe.sh