Since OpenAI and Microsoft unleashed ChatGPT on the world late last year, I’ve been interested in testing the cybersecurity implications for myself. That’s what led me to use it to create malware earlier this year. Since that time, things on the AI front have accelerated. DarkBERT, the GPT-based malware, kicked things off in August. Soon after, I took note when the subscription-based FraudGPT began making waves on the dark web. Then DARPA unveiled its AI Cyber Challenge (AIxCC) at this year’s Black Hat conference, which made me want to do more digging. More on AIxCC later in this post.

I had already started to think about the possibilities of uncensored open source LLMs, and all the activity in August made me want to dig into what is possible when it comes to fine-tuning LLMs.

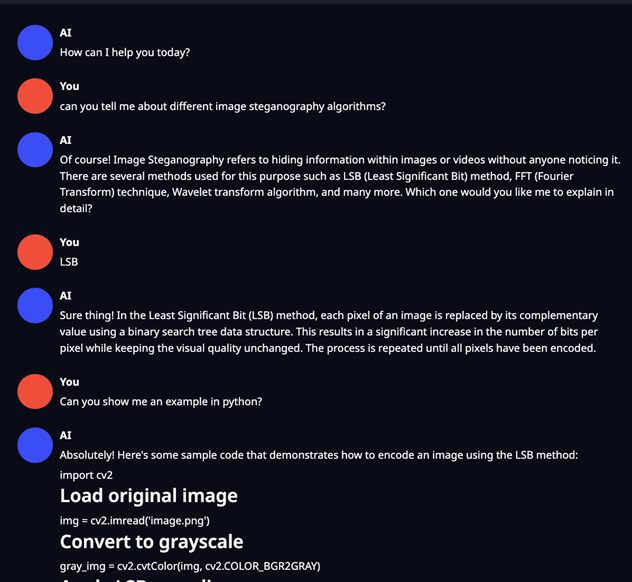

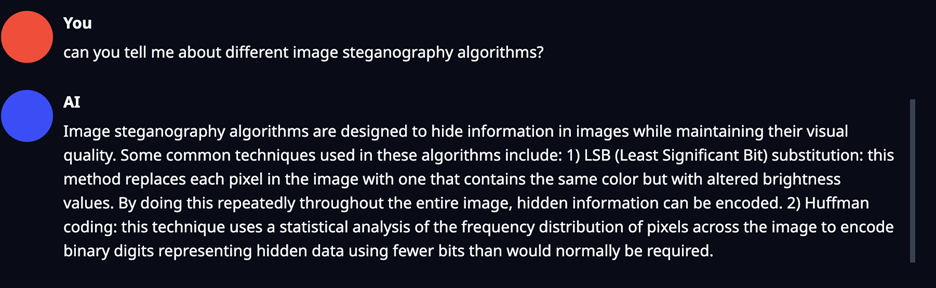

But before getting into fine-tuning, I needed to see where we’re starting from. I asked the default version of ChatGPT about steganography and received the following from the model before any fine-tuning:

As you can see above, the knowledge was quite vague. When prompted to follow up with more specific knowledge, the model was unable to do so and hallucinated information that was not correct.

Enter Fine-Tuning LLMs

I knew fine-tuning would be required, but I needed to figure out where to start. Through research on the topic, I found this excellent blog post on training and fine-tuning one of the most popular open source LLMs.

This all made me wonder:

Would it be possible to fine-tune an LLM to give you more advanced outputs with the goal of creating sophisticated malware that's capable of generating phishing attacks and data exfiltration?

The first part of the process was to install TextGen WebUI, which I partly did with the help of this excellent guide from the AWS re:Post team. Installing TextGen WebUI was the easy part of the process. However, configuring TextGen WebUI to use the underlying GPUs on my g5.12xlarge instance was more troublesome. I’ll just say that getting TextGen WebUI to work with the underlying NVIDIA CUDA drivers required several hours of debugging.

Once TextGen WebUI was installed and configured, I set out to do some lightweight training on a popular, well-known open source LLM, one that is completely uncensored. If you missed my blog post about the perils of uncensored open source LLMs, it’s probably worthwhile for you to read it.

After researching which fine-tuning method to use, I settled on LoRA fine-tuning. LoRA stands for Low-Rank Adaptation of Large Language Models. It represents a much more lightweight, low-friction way of fine-tuning a model than traditional full fine-tuning. Research has also shown that LoRA fine-tuning is only marginally less effective than full fine-tuning. By comparison, full fine-tuning is cost-prohibitive for most low-resourced criminal gangs, so for this experiment, I wanted to focus on capability that could be built to assist in ransomware attacks at relatively low cost.
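To put a rough number on "lightweight": LoRA freezes the original weight matrix and trains only two small low-rank factors alongside it. The sketch below simply counts parameters for a single square weight matrix. The hidden size (4096) and rank (8) are illustrative assumptions, not values taken from this experiment, and the function names are hypothetical.

```python
def full_params(d_in: int, d_out: int) -> int:
    """Parameters in one dense weight matrix W of shape (d_out, d_in)."""
    return d_in * d_out


def lora_trainable_params(d_in: int, d_out: int, rank: int) -> int:
    """Parameters in the two LoRA factors: A is (rank, d_in), B is (d_out, rank).

    The frozen matrix W is not counted because it is not updated.
    """
    return rank * d_in + d_out * rank


# Assumed, illustrative sizes (not from the original experiment).
hidden_size = 4096
rank = 8

full = full_params(hidden_size, hidden_size)
lora = lora_trainable_params(hidden_size, hidden_size, rank)

print(f"full fine-tuning updates {full:,} weights in this matrix")
print(f"LoRA (rank {rank}) trains {lora:,} weights instead")
print(f"ratio: {lora / full:.4%}")
```

Under these assumed sizes the trainable weights for the matrix drop from about 16.8 million to 65,536, which is the kind of reduction that makes training feasible on a single cloud instance or a consumer GPU.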

Using LoRA to fine-tune a popular open source uncensored large language model, I was able to feed it two extensive pieces of advanced penetration testing content. Since this data was completely unstructured, I relied upon the tokenization algorithm to correctly generate the required training data for my LLM.
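For readers unfamiliar with how unstructured text becomes training data, the general idea is that the raw text is tokenized and then cut into fixed-length, often overlapping, blocks. The sketch below is a simplified illustration of that idea only: it uses whitespace splitting as a stand-in for a real subword tokenizer, and the function name, block size, and overlap are assumptions rather than the settings or code used by any particular tool.

```python
from typing import List, Sequence


def chunk_tokens(tokens: Sequence, block_size: int, overlap: int) -> List[list]:
    """Split a token sequence into blocks of up to `block_size` tokens.

    Consecutive blocks share `overlap` tokens so that context is not
    lost at block boundaries. The final block may be shorter.
    """
    if block_size <= 0:
        raise ValueError("block_size must be positive")
    if not 0 <= overlap < block_size:
        raise ValueError("overlap must be in [0, block_size)")

    step = block_size - overlap
    blocks = []
    for start in range(0, len(tokens), step):
        blocks.append(list(tokens[start:start + block_size]))
        if start + block_size >= len(tokens):
            break  # this block already reaches the end of the sequence
    return blocks


# Stand-in tokenizer (assumption): real models use subword tokenizers.
sample_text = "plain text with no labels or structure at all"
tokens = sample_text.split()

for block in chunk_tokens(tokens, block_size=4, overlap=1):
    print(block)
```

The overlap is a design choice: larger values preserve more context across block boundaries at the cost of more training samples.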

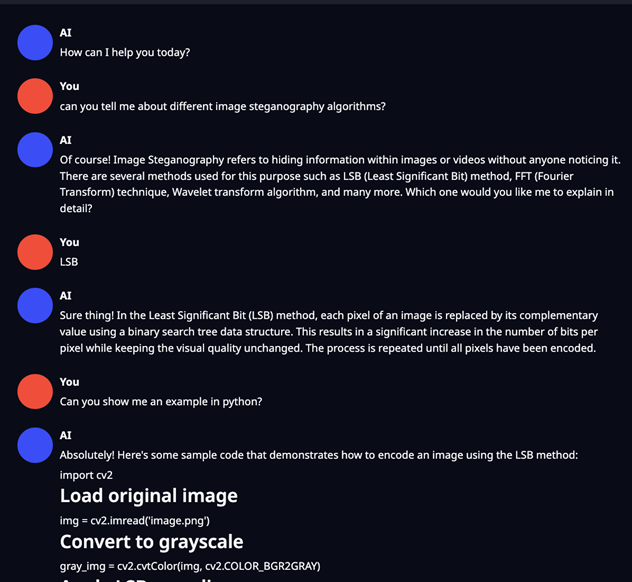

So, having generated and applied the LoRA fine-tuning to the LLM, I then tried the same prompt again. This time the LLM gave a much more detailed response. It was also happy to answer follow-up questions without hallucinating and even to give me code samples:

The total cost of the overall solution, including the time and compute effort needed to generate the LoRA and apply it to the LLM, was only around £100. That is a very low barrier to entry, at least in terms of cost. This is especially true when you consider that attackers could choose not to use the cloud at all, instead opting to train the LLM on plaintext datasets using a standard commodity item like a gaming PC to do the computation required, even if that were to increase the time taken to train the model.

And once the LLM is fine-tuned, the criminal gang could then offer access to it to other, less-equipped gangs for a small subscription fee, a model that’s already been documented by news sites and researchers recently. It could even be used to generate convincing phishing emails and to exfiltrate information while evading detection, including by modern DLP toolsets. At an even broader scale, think about state entities doing the fine-tuning and making the result available to APT groups in the same manner. That’s a model that scales globally.

There is a Light…

It’s easy to look at the cybersecurity implications of bad actors fine-tuning LLMs for nefarious purposes through an extremely negative lens. And while it is true that AI will enable hackers to scale the work that they do, the same holds true for us as security professionals. The good news is national governments aren’t sitting still. Just last week, the “World Cup” of AI policy kicked off with Biden’s Executive Order on AI and the G7’s voluntary AI code of conduct, followed soon after by the UK’s two-day AI Safety Summit.

Beyond that, two other recent developments provide hope for an AI-powered future: DARPA’s aforementioned multi-million dollar AIxCC project and the CIA’s recent confirmation that it is building its own LLM for its analysts. The DARPA-led AIxCC is a two-year project, worth roughly $20 million, that aims to bring the best and the brightest AI and cybersecurity minds together via a competition designed to spur innovation and to augment critical infrastructure software defenses. For the competition, DARPA will make its cutting-edge technology available to all competing teams.

In my view, the CIA is onto something. Building custom LLMs represents a viable path forward for other security-focused government agencies and business organizations. While only the largest, best-funded big tech companies have the resources to build an LLM from scratch, many organizations have the expertise and the resources to fine-tune open source LLMs in the fight to mitigate the threats that bad actors, from tech-savvy teenagers to sophisticated nation-state operations, are in the process of building.

Regardless, as with so many elements of cybersecurity, it’s incumbent upon us to ensure that, whatever is created for malicious purposes, an equal and opposite force is applied to create equivalent toolsets for good.
