2024-1-17 05:22:0 Author: securityboulevard.com

We recently announced a new LLM-powered enhancement to our API Discovery feature called Spec Enrichment. It uses simple, human language to describe endpoints and summarize them based on API behavior, making API descriptions easier for security teams to understand.

Spec Enrichment marks the beginning of more AI-first feature announcements to come!

In this post, I’ll share the innovation pipeline we follow when developing new AI-first features and walk you through the actual steps we took: from experimenting with LLMs, to creating the desired security workflow, to automating that workflow, to integrating it into our console, and finally to production. I’ll also recap the lessons we learned along the way and offer advice in case you decide to do something similar.

Stay tuned to hear our latest product updates and discover what’s next for us with AI and other emerging technologies.

Discovering Our Process for Innovating AI-First Features

Step 1: Prompt Engineering

The first step in our innovation pipeline is usually prompt experimentation. For AI, this meant using public foundation models to explore how the creative and problem-solving capabilities of different LLMs could address the problems we aim to solve for our customers.

Understanding the user’s problems and the tasks they need to accomplish is crucial. For us, this means focusing on security engineers who seek to better understand and secure their APIs. Through extensive customer discussions, we have identified various tasks and challenges to target.

However, surface-level understanding is not enough. It is important to deeply comprehend the problem’s impact, how users currently address it using existing solutions, and the primary sources of friction. This level of understanding can only be achieved through customer discovery.

One specific problem we targeted was that security engineers often lack a clear understanding of their APIs and what those APIs do. Working closely with early customers to organize and improve their APIs helped Impart engineers become deeply familiar with this problem. As we refined our approach, we developed a customer playbook, and in doing so we recognized the potential for automation.

To explore the boundaries of API Discovery, we conducted an AI jam session with our team where we experimented with different prompts and various LLMs to assess the potential outputs of this analysis.

We first experimented with the latest version of ChatGPT, which we consider one of the most advanced and user-friendly LLMs available. However, due to the need to safeguard our proprietary insights and data, our exploration with different prompts was somewhat limited. Nonetheless, the results of this process convinced us of the potential of an LLM-based approach to Spec Enrichment, prompting us to proceed to the next step of our pipeline.

Agent UX Design with LLMpart

Step 2: Agent Design

In the next step of our development process, we focused on innovating the actual security workflow. We have consistently heard from customers that security engineers are tired of security tools that primarily generate alarms and create additional work, rather than helping them take actions that reduce their risk.

Focusing on the security workflow helped us think through what a security engineer truly needs to solve their problem, rather than providing them with additional context or information that only complicates their problem-solving process.

To test and iterate on security workflows securely, we created an internal general-purpose security LLM agent based on foundation models and integrated it with our company’s Slack. This provides a convenient and private environment for anyone at Impart to experiment with prompts without the risk of exposure to shared ChatGPT models and hardware, or the need to set up specialized API testing infrastructure like Postman. We affectionately named this agent “LLMpart”.

Implementing this was not as simple as wiring up an API to Slack; it required actual technical development work. For example, in the initial version of LLMpart, our agent responded to every single message in any Slack channel. For a channel with two people, this behavior would have been acceptable, but it quickly became annoying in team channels, especially when it responded to every reply or threaded reply. As a result, we updated the logic so that our LLMpart bot could follow the conversation and would only reply when directly mentioned in Slack.
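The mention-only behavior described above can be expressed as a small filter over incoming Slack message events. This is a minimal, hypothetical sketch rather than LLMpart’s actual code: it assumes the field names of Slack Events API message payloads (`user`, `text`, `bot_id`, `subtype`, `ts`, `thread_ts`) and Slack’s `<@USERID>` mention syntax, and `should_reply` and `reply_thread_ts` are illustrative names.

```python
import re


def is_mentioned(text: str, bot_user_id: str) -> bool:
    """True if the text contains a direct Slack mention of the bot, e.g. <@U123>."""
    pattern = rf"<@{re.escape(bot_user_id)}(?:\|[^>]*)?>"
    return re.search(pattern, text) is not None


def should_reply(event: dict, bot_user_id: str) -> bool:
    """Hypothetical filter: reply only to human messages that mention the bot."""
    if event.get("bot_id") or event.get("subtype"):
        # Skip bot messages (including our own) and edits, joins, etc.
        return False
    if event.get("user") == bot_user_id:
        return False
    return is_mentioned(event.get("text", ""), bot_user_id)


def reply_thread_ts(event: dict) -> str:
    """Keep replies in the same thread: use the thread root if there is one."""
    return event.get("thread_ts", event["ts"])
```

Subscribing to Slack’s `app_mention` event is an alternative that has Slack do the mention filtering instead of inspecting every message.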

To build Spec Enrichment, our team combined some of the prompts we developed in the previous step into a workflow. Humans planned the workflow, and LLMs executed the individual tasks.
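One way to picture a human-planned workflow with LLMs executing the tasks is a fixed list of prompt steps, written by a person, where each step’s output becomes the next step’s input. The sketch is hypothetical: `Step`, `run_workflow`, and the step names are illustrative, and `model` is a stand-in for whatever LLM call is actually used.

```python
from dataclasses import dataclass
from typing import Callable, Dict, List

# Hypothetical stand-in: any function that takes a prompt and returns text.
Model = Callable[[str], str]


@dataclass
class Step:
    name: str
    template: str  # human-written prompt; {input} receives the previous output


def run_workflow(steps: List[Step], initial_input: str, model: Model) -> Dict[str, str]:
    """Run human-authored prompt steps in order, chaining each output forward."""
    outputs: Dict[str, str] = {}
    current = initial_input
    for step in steps:
        prompt = step.template.format(input=current)
        current = model(prompt)  # the LLM executes this single task
        outputs[step.name] = current
    return outputs
```

Keeping the plan in human hands and giving the model one narrow task per step makes each intermediate output easy to inspect.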

Once we felt comfortable with the Spec Enrichment workflows, we sought feedback from our early customers and trusted advisors. We repeated this process multiple times until we were confident in our approach.


Step 3: Agent Engineering

In the next step of our process, we focused on engineering an agent that could execute the workflow with minimal human guidance. We encountered several challenges here, including data retrieval, model performance, latency, and costs.

Data Retrieval

Data retrieval was crucial for giving the agent a comprehensive understanding of the security context. We explored multiple approaches, including fine-tuning a foundation model, retrieval-augmented generation (RAG), and prompt augmentation.

Ultimately, prompt augmentation proved to be the best fit for our Spec Enrichment use case, because it let us take context we already had, such as an API specification, and feed it into the language model on a just-in-time basis.
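Prompt augmentation in this sense means pulling the relevant context at request time and placing it directly in the prompt, with no retrieval index or retraining. A minimal sketch, assuming the context is an OpenAPI-style spec held as a Python dict; the template wording and `build_augmented_prompt` are hypothetical.

```python
import json

# Hypothetical prompt template; the real prompts are not published.
PROMPT_TEMPLATE = """You are helping a security engineer understand an API.
Using only the OpenAPI operation below, write a one-sentence summary of what
the endpoint does.

Method: {method}
Path: {path}
Operation:
{operation}
"""


def build_augmented_prompt(spec: dict, path: str, method: str) -> str:
    """Inject just the relevant slice of an OpenAPI spec into the prompt."""
    operation = spec["paths"][path][method.lower()]
    return PROMPT_TEMPLATE.format(
        method=method.upper(),
        path=path,
        operation=json.dumps(operation, indent=2, sort_keys=True),
    )
```

Sending only the one operation being described, rather than the whole spec, also keeps token counts (and therefore cost) down.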

Model Performance

Ensuring high-quality, trustworthy results from the language models was another challenge. We iteratively refined the prompts to get accurate answers to security questions, and we formatted the output in a way our systems could easily handle. We also implemented validation checks and scores to verify the reliability of the outputs.
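A validation check with a score can be as simple as parsing each response against the output format the prompt asked for. This sketch assumes a hypothetical JSON contract (`summary`, `description`, `sensitive_data`); the field names and the scoring rule (fraction of fields that pass) are illustrative, not Impart’s.

```python
import json
from typing import List, Optional, Tuple

# Hypothetical output contract the prompt asks the model to follow.
REQUIRED_FIELDS = {"summary": str, "description": str, "sensitive_data": bool}


def validate_output(raw: str) -> Tuple[Optional[dict], float, List[str]]:
    """Parse a model response and score it from 0.0 to 1.0.

    Returns (parsed object or None, score, problems). The score is the
    fraction of required fields present, correctly typed, and non-empty.
    """
    try:
        data = json.loads(raw)
    except json.JSONDecodeError as exc:
        return None, 0.0, [f"not valid JSON: {exc.msg}"]
    if not isinstance(data, dict):
        return None, 0.0, ["top-level value is not an object"]

    problems: List[str] = []
    for field, expected in REQUIRED_FIELDS.items():
        if field not in data:
            problems.append(f"missing field: {field}")
        elif not isinstance(data[field], expected):
            problems.append(f"wrong type for {field}")
        elif expected is str and not data[field].strip():
            problems.append(f"empty field: {field}")
    score = 1 - len(problems) / len(REQUIRED_FIELDS)
    return data, score, problems
```

Responses scoring below some threshold could then be retried or discarded instead of being shown to users.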

To reduce the tendency of language models to hallucinate, we adjusted temperature settings to make responses more deterministic and consistent at scale. We also discovered that some foundation models have built-in safety controls that limit their ability to handle security use cases, which required us to find suitable workarounds.
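Temperature works by rescaling the model’s token scores (logits) before sampling: p_i = exp(z_i / T) / sum_j exp(z_j / T). The toy example below, with made-up logits, shows why lowering T makes output more repeatable. Lower temperature reduces run-to-run variability; on its own it does not guarantee a factually correct answer.

```python
import math
from typing import List


def softmax_with_temperature(logits: List[float], temperature: float) -> List[float]:
    """Convert raw token scores into probabilities at a given temperature.

    Dividing by T < 1 sharpens the distribution toward the top token;
    T > 1 flattens it, making sampling more varied.
    """
    if temperature <= 0:
        raise ValueError("temperature must be positive (T -> 0 approaches greedy decoding)")
    scaled = [z / temperature for z in logits]
    m = max(scaled)  # subtract the max for numerical stability
    exps = [math.exp(z - m) for z in scaled]
    total = sum(exps)
    return [e / total for e in exps]


# Made-up logits for three candidate tokens.
logits = [2.0, 1.0, 0.1]
print(softmax_with_temperature(logits, 1.0))  # top token gets roughly 66%
print(softmax_with_temperature(logits, 0.2))  # top token gets over 99%
```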

Latency

Latency was a significant concern during this phase. Even the fastest language models had response times that were too slow for features integrated directly into the product, as opposed to an in-product chatbot. To overcome this, we built a system that runs the language model prompts in advance and writes the results to our database in a configurable, scalable way.
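Pre-processing moves the slow model call out of the user’s request path: a background job runs the prompts ahead of time and stores the results, and the product only reads stored results. A minimal sketch using SQLite from Python’s standard library; the table layout, function names, and the `summarize` stand-in for the LLM call are hypothetical.

```python
import sqlite3
from typing import Callable, Iterable, Optional, Tuple

# Hypothetical stand-in for a slow LLM call: (method, path) -> summary text.
Summarizer = Callable[[str, str], str]


def init_db(conn: sqlite3.Connection) -> None:
    conn.execute(
        """CREATE TABLE IF NOT EXISTS endpoint_summaries (
               method TEXT NOT NULL,
               path TEXT NOT NULL,
               summary TEXT NOT NULL,
               PRIMARY KEY (method, path)
           )"""
    )


def precompute_summaries(conn: sqlite3.Connection,
                         endpoints: Iterable[Tuple[str, str]],
                         summarize: Summarizer) -> int:
    """Background/batch step: call the model once per endpoint and upsert the result."""
    count = 0
    for method, path in endpoints:
        summary = summarize(method, path)
        conn.execute(
            "INSERT OR REPLACE INTO endpoint_summaries (method, path, summary) VALUES (?, ?, ?)",
            (method, path, summary),
        )
        count += 1
    conn.commit()
    return count


def get_summary(conn: sqlite3.Connection, method: str, path: str) -> Optional[str]:
    """Request path: a fast database read, never a model call."""
    row = conn.execute(
        "SELECT summary FROM endpoint_summaries WHERE method = ? AND path = ?",
        (method, path),
    ).fetchone()
    return row[0] if row else None
```

Rerunning the batch step, for example when a spec changes, overwrites the stored row for each endpoint.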

Costs

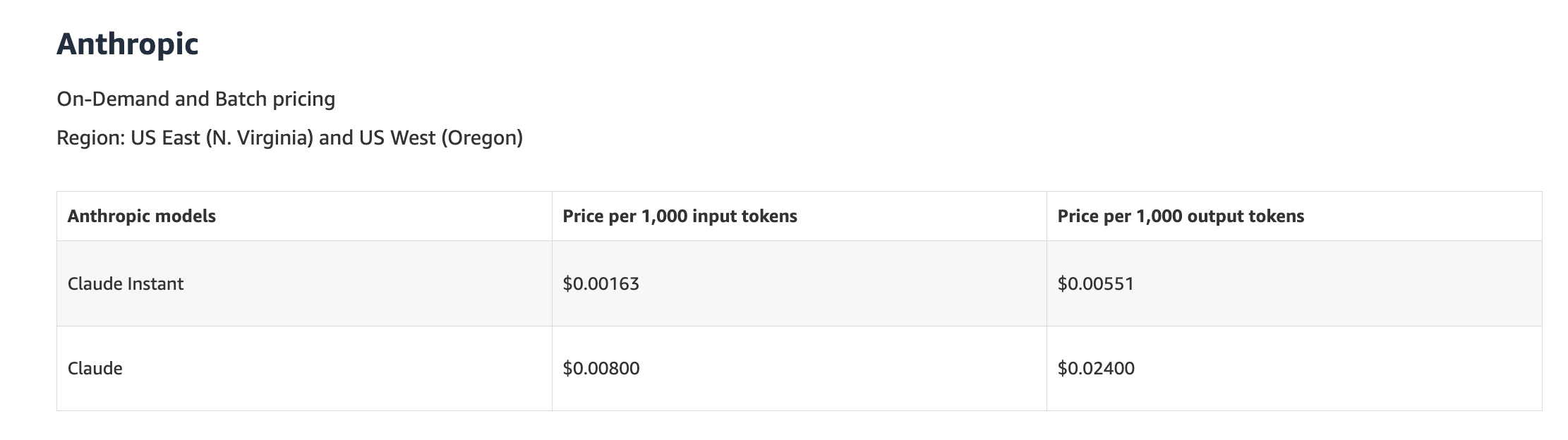

Cost optimization was also a consideration, as using language models in production with large amounts of data is quite costly. We carefully selected models based on their token costs, prioritizing those that met our quality requirements while still remaining efficient. We explored various options, including running models in local dev environments, hosting them in private infrastructure, and utilizing public models via API.
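Choosing models by token cost while holding a quality bar reduces to a small calculation. The sketch uses made-up model names, prices, and quality scores purely for illustration; real per-token prices vary by provider and change often, and `ModelOption` and `cheapest_acceptable` are hypothetical names.

```python
from dataclasses import dataclass
from typing import List, Optional


@dataclass
class ModelOption:
    name: str
    input_price_per_1k: float   # USD per 1,000 input tokens (made-up values)
    output_price_per_1k: float  # USD per 1,000 output tokens (made-up values)
    quality_score: float        # e.g. pass rate on an internal eval set, 0..1


def job_cost(m: ModelOption, input_tokens: int, output_tokens: int) -> float:
    """Estimated cost of one batch job at the given token volumes."""
    return ((input_tokens / 1000) * m.input_price_per_1k
            + (output_tokens / 1000) * m.output_price_per_1k)


def cheapest_acceptable(options: List[ModelOption], min_quality: float,
                        input_tokens: int, output_tokens: int) -> Optional[ModelOption]:
    """Lowest-cost model whose quality clears the bar, or None if none does."""
    acceptable = [m for m in options if m.quality_score >= min_quality]
    if not acceptable:
        return None
    return min(acceptable, key=lambda m: job_cost(m, input_tokens, output_tokens))


options = [
    ModelOption("large", 0.01, 0.03, 0.95),
    ModelOption("medium", 0.001, 0.002, 0.90),
    ModelOption("small", 0.0002, 0.0004, 0.70),
]
choice = cheapest_acceptable(options, 0.85, 2_000_000, 200_000)
print(choice.name)  # medium
```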

Lessons Learned from Executing the Workflow Automation

Throughout this process, we learned several valuable lessons. We recognized that AI is not a one-size-fits-all solution and requires skepticism. It is essential to have a deep understanding of user problems and focus on delivering solutions that address their needs. We also learned that model hallucination can present challenges in production, and investing in tooling and technology is crucial to ensure predictable and trustworthy outputs. Additionally, we discovered the importance of cost optimization and prompt engineering to scale our development team effectively.

Step 4: Console Integration

The next step was to integrate our agents with our console. Once we were satisfied with the performance of our agents, we wrapped them in an API, specifically a Spec Enrichment API. Wrapping the agent in an API allows us to connect the LLM to relevant customer data while leveraging our existing security and privacy controls, such as RBAC, that are already present in our console for our other APIs.
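Wrapping the agent in an API means the LLM call sits behind the same authorization checks as any other endpoint. A hypothetical sketch: the role names, permission strings, `enrich_spec_endpoint`, and the `load_spec` / `run_agent` callables are illustrative stand-ins, not Impart’s API.

```python
from dataclasses import dataclass
from typing import Callable, Dict, Set

# Hypothetical role -> permission mapping; a real console would reuse its
# existing RBAC system rather than hard-coding this.
ROLE_PERMISSIONS: Dict[str, Set[str]] = {
    "admin": {"spec:read", "spec:enrich"},
    "analyst": {"spec:read"},
}


@dataclass
class User:
    id: str
    org_id: str
    role: str


class PermissionDenied(Exception):
    pass


def require(user: User, permission: str) -> None:
    if permission not in ROLE_PERMISSIONS.get(user.role, set()):
        raise PermissionDenied(f"{user.role} lacks {permission}")


def enrich_spec_endpoint(user: User, org_id: str, spec_id: str,
                         load_spec: Callable[[str, str], dict],
                         run_agent: Callable[[dict], dict]) -> dict:
    """API handler: authorize, scope data to the caller's org, then call the agent."""
    require(user, "spec:enrich")
    if user.org_id != org_id:
        raise PermissionDenied("cross-tenant access")
    spec = load_spec(org_id, spec_id)  # only this tenant's data reaches the LLM
    return run_agent(spec)
```

Because authorization happens before any data is loaded, the agent never sees data the caller could not already access.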

By building on these agent APIs, our front-end team can focus on designing the best user experience for security engineers. For Spec Enrichment, we decided to integrate LLM results directly into the API specification itself. This helps security engineers improve the quality of their API documentation and keeps the insights next to the rest of the API specification, where they are most relevant.
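Integrating results into the specification can mean mapping generated text onto the standard `summary` and `description` fields of each OpenAPI operation. A sketch under stated assumptions: `apply_enrichment` and the shape of `results` are hypothetical, and the choice never to overwrite existing human-written text is an illustrative policy, not necessarily Impart’s.

```python
import copy
from typing import Dict, Tuple

# Operation keys allowed in an OpenAPI path item.
HTTP_METHODS = {"get", "put", "post", "delete", "options", "head", "patch", "trace"}


def apply_enrichment(spec: dict, results: Dict[Tuple[str, str], Dict[str, str]]) -> dict:
    """Return a copy of an OpenAPI spec with LLM-generated text filled in.

    `results` maps (METHOD, path) to {"summary": ..., "description": ...}.
    Fields that already have human-written text are left untouched.
    """
    enriched = copy.deepcopy(spec)
    for path, path_item in enriched.get("paths", {}).items():
        for method, operation in path_item.items():
            if method not in HTTP_METHODS:
                continue  # skip path-level keys such as "parameters"
            generated = results.get((method.upper(), path), {})
            for field in ("summary", "description"):
                if field in generated and not operation.get(field):
                    operation[field] = generated[field]
    return enriched
```

Since the output is still an ordinary OpenAPI document, existing documentation tooling can render the enriched text without changes.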

Step 5: Production & Reflection on Overall Lessons Learned

The production phase is really where we finally get to see all our hard work pay off. It involves a series of activities and considerations to ensure a smooth and successful transition from development to deployment.

During the production phase, we conducted thorough reliability testing to catch potential issues or vulnerabilities, shipped behind feature flags so we could gather real-time feedback from our users, and then collected broader insights from customers and advisors. All of this helps us deliver a high-quality, user-centric product experience and keep improving the effectiveness of our offerings.
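Shipping behind feature flags lets a feature reach selected customers before general availability. A common pattern, sketched here with hypothetical names (`flag_enabled`, the `spec_enrichment` flag key), combines an allowlist for early customers with a stable hash-based percentage rollout; the post does not describe Impart’s actual flagging system.

```python
import hashlib
from typing import Optional, Set


def flag_enabled(flag: str, tenant_id: str, rollout_percent: int,
                 allowlist: Optional[Set[str]] = None) -> bool:
    """Deterministically decide whether a tenant sees a flagged feature.

    Allowlisted tenants always get it; everyone else is bucketed 0-99 by a
    stable hash, so the answer stays the same across requests, and raising
    rollout_percent only ever adds tenants.
    """
    if allowlist and tenant_id in allowlist:
        return True
    digest = hashlib.sha256(f"{flag}:{tenant_id}".encode("utf-8")).hexdigest()
    bucket = int(digest[:8], 16) % 100
    return bucket < rollout_percent


# Hypothetical usage: enabled only for one early customer at 0% rollout.
print(flag_enabled("spec_enrichment", "acme", 0, {"acme"}))  # True
```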

Overall Lessons Learned from Building with AI

- AI Still Has a Long Way to Go – AI still has a long way to go before it becomes a reliable solution for every use case. It’s not as simple as just wrapping a foundation model and calling it a day. I recently had a conversation with a CISO who expressed frustration with the constant talk of “another copilot for X” from various security vendors. The truth is, true product differentiation comes from intimately understanding the user and their problems. AI is just a tool, not the ultimate answer.

During our journey, we faced numerous challenges in dealing with low-quality LLM results, including hallucinations, slow responses, safety controls, and improperly formatted outputs.

- Invest in Robust Tools and Technologies – It was essential for us to invest in developing robust tools and technologies to ensure that our models behaved predictably and delivered trustworthy results. We had to implement various technical capabilities, such as data retrieval, prompt augmentation, prompt output validation, and the integration of different single-purpose agents to increase predictability.

- LLMs Can Be Expensive – Let’s not forget about the costs involved. LLMs can be quite expensive, and results can vary depending on the specific model used. It was eye-opening for us to realize that the same prompts could yield vastly different outcomes on different models. While it’s exciting to experiment with the most capable models during R&D, doing so can also strain compute resources. We found that it’s best to start with the most capable models and then optimize for price-performance once the output is predictable.

So, let’s be real. AI is not a magic bullet. It’s a powerful tool that can help us solve problems, but it’s not the ultimate solution. It’s important to approach AI with a healthy dose of skepticism and focus on understanding and empathizing with the users, the security engineers.

By investing in the right tools, technologies, and technical capabilities, we can deliver great product experiences and reliable data. And by being mindful of the costs and optimizing our approach, we can make AI more accessible and cost-effective.

Embrace LLMs to Unlock More Innovation

I hope this has encouraged you to experiment with GenAI. It is currently a hot topic, and for good reason. Because LLMs are complex and difficult to manage, experimentation and testing are essential to leveraging them fully and succeeding.

Nevertheless, we strongly believe that widespread adoption of AI, including within our industry, is crucial for scaling security teams at all levels. This will help combat attacks on our software and safeguard our valuable information.

Schedule a demo to learn more about how Impart can protect your APIs—and to see our newest AI-first feature in action!

Want to learn more about API security? Subscribe to our newsletter for updates.

