A Beginner's Guide to Understanding Protobuf & JSON

When you explore data serialization, you're likely to encounter two dominant formats: Protobuf (short for Protocol Buffers) and JSON (JavaScript Object Notation). Each has distinctive qualities and specific advantages for data exchange. This primer covers the fundamentals of both, laying the groundwork for the more detailed comparisons that follow.

Protocol Buffers, or Protobuf as it is commonly called, was developed by Google. It is a binary serialization format designed to be compact, fast, and simple without sacrificing expressiveness. It is language-neutral and supports many languages, including Java, C++, Python, and Go.

A basic Protobuf message would resemble this:

<code class="language-protobuf">syntax = "proto2";  // required/optional labels are proto2 syntax

message Person {
  required string name = 1;
  required int32 idNumber = 2;
  optional string email = 3;
}</code>

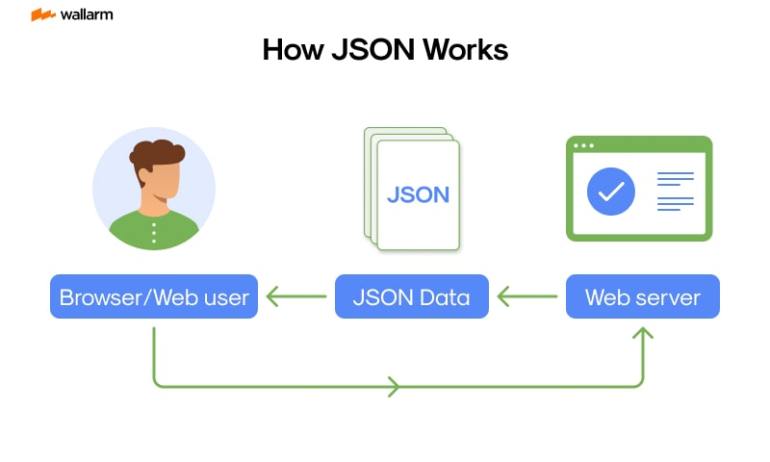

JSON, by contrast, is a text-based data interchange format that is easy for humans to read and for machines to parse. Its syntax is derived from JavaScript, and it has become a near-universal format, used extensively to transmit data between servers and clients in web applications.

Written as JSON, an instance of the Person message above might look like this:

<code class="language-json">{
  "name": "John Doe",
  "idNumber": 331,
  "email": "john.doe@example.com"
}</code>

At first glance, Protobuf looks more opaque than human-friendly JSON, but its binary encoding is exactly what makes it compact and fast to process, advantages that matter when bandwidth or latency is constrained.
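To make the size difference concrete, here is a minimal sketch that hand-encodes one Person instance following the Protobuf wire format rules: integers become base-128 varints, strings are length-prefixed, and each field starts with a key built from its field number and wire type. The helper functions are hypothetical and written only for illustration (real projects use the code protoc generates), and the email address is a placeholder.

```python
import json

def encode_varint(value: int) -> bytes:
    """Encode a non-negative integer as a base-128 varint (7 bits per byte)."""
    out = bytearray()
    while True:
        low7 = value & 0x7F
        value >>= 7
        if value:
            out.append(low7 | 0x80)  # high bit set: more bytes follow
        else:
            out.append(low7)
            return bytes(out)

def encode_key(field_number: int, wire_type: int) -> bytes:
    """Every field starts with a key: (field_number << 3) | wire_type."""
    return encode_varint((field_number << 3) | wire_type)

def encode_string_field(field_number: int, text: str) -> bytes:
    data = text.encode("utf-8")
    # Wire type 2 = length-delimited: key, byte length, raw bytes
    return encode_key(field_number, 2) + encode_varint(len(data)) + data

def encode_uint_field(field_number: int, value: int) -> bytes:
    # Wire type 0 = varint; negative int32 values are not handled in this sketch
    return encode_key(field_number, 0) + encode_varint(value)

person = {"name": "John Doe", "idNumber": 331, "email": "john.doe@example.com"}

# Only field numbers 1, 2, 3 are written; field names never appear on the wire
proto_bytes = (
    encode_string_field(1, person["name"])
    + encode_uint_field(2, person["idNumber"])
    + encode_string_field(3, person["email"])
)
json_bytes = json.dumps(person).encode("utf-8")

print(len(proto_bytes), "bytes as Protobuf")  # 35 bytes as Protobuf
print(len(json_bytes), "bytes as JSON")       # 70 bytes as JSON
```

Most of the saving comes from JSON repeating every key name as text, while Protobuf replaces each name with a one-byte key.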

The table below summarizes the main differences between Protobuf and JSON:

| Characteristic | Protobuf | JSON |
|---|---|---|
| Format | Binary | Text-based |
| Human readability | Low (needs schema or tooling) | High |
| Payload size | Compact | Larger |
| Speed | Faster | Comparatively slower |
| Language support | Broad (via generated code) | Near-universal |
| Typical usage | Inter-service communication, handling large data volumes | Web applications, configuration files |

The following sections examine each format in more detail, weigh their pros and cons, and identify the situations where each one shines. Ultimately, the choice between Protobuf and JSON depends on the specific needs of your project.

Unveiling Protobuf: Understanding the Essentials

Protobuf, short for Protocol Buffers, is a binary serialization format created at Google. It was designed around compactness, speed, and simplicity, and it is not tied to any one programming language: it can be used from Java, C++, Python, and many others.

To understand Protobuf in depth, it helps to look at its three main components: the Protocol Buffers Interface Definition Language (IDL), the Protobuf compiler (protoc), and the Protobuf runtime library.

Framing Data with Protobuf's Interface Definition Language (IDL)

Protobuf's IDL is how you describe the structure of your data. It is relatively straightforward, with a syntax reminiscent of C++ or Java. Here is an example message definition:

<code class="language-protobuf">syntax = "proto2";  // required/optional labels are proto2 syntax

message Person {
  required string full_name = 1;
  required int32 id_number = 2;
  optional string email = 3;
}</code>

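This defines a 'Person' message with three fields: full_name, id_number, and email. Each field has a type (string or int32), a label (required or optional), and a unique field number that identifies it in the binary encoding. Note that the required label exists only in proto2; proto3 removed it because required fields make it hard to evolve a schema safely.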
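Field numbers matter because they, not the field names, are what the binary encoding stores. A minimal sketch, assuming the standard Protobuf wire types (0 for varints such as int32, 2 for length-delimited data such as strings), computes the key byte that would precede each Person field on the wire:

```python
# Wire types from the Protobuf encoding used by this message
VARINT = 0            # int32, int64, bool, enum
LENGTH_DELIMITED = 2  # string, bytes, nested messages

def field_key(field_number: int, wire_type: int) -> int:
    """Key written before every field: (field_number << 3) | wire_type."""
    return (field_number << 3) | wire_type

def split_key(key: int) -> tuple[int, int]:
    """Recover (field_number, wire_type) from a key."""
    return key >> 3, key & 0x07

person_fields = [
    ("full_name", 1, LENGTH_DELIMITED),
    ("id_number", 2, VARINT),
    ("email", 3, LENGTH_DELIMITED),
]

for name, number, wire_type in person_fields:
    print(f"{name}: key byte 0x{field_key(number, wire_type):02X}")
# full_name: key byte 0x0A
# id_number: key byte 0x10
# email: key byte 0x1A
```

Because the key is itself a varint, field numbers 1 through 15 fit in a single byte, which is why they are best reserved for frequently used fields. Renaming a field leaves the encoded bytes unchanged; renumbering it does not.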

Generating Code with Protobuf's Compiler (protoc)

Once your data structure is defined in the Protobuf IDL, the Protobuf compiler (protoc) generates data access classes in your chosen language. These classes provide simple accessors for each field (in Java, for example, getFullName() and, on the message builder, setFullName()) along with methods to serialize the whole message to raw bytes and parse it back.

Understanding Protobuf's Runtime Structure

The Protobuf runtime is a library that implements the Protocol Buffers encoding. It provides functions for encoding and decoding messages, reading and writing them through I/O streams, and managing memory. A runtime library is available for each supported language.

Comparing Protobuf to Traditional Data Formats

Protobuf has several advantages over text-based formats such as XML and JSON:

- Compactness: Protobuf encodes data in binary, which is typically much more compact than a textual representation of the same data.

- Efficiency: Encoding and parsing are generally faster, thanks to the binary layout and the optimized generated code and runtime.

- Schema evolution: You can add new fields to a message definition without breaking existing applications, which simply skip fields they don't recognize.

However, it has some downsides:

- Poor human readability: Unlike JSON and XML, encoded Protobuf data can't be read without the schema or special tooling.

- Narrower adoption: Protobuf is less widely supported than JSON or XML, particularly in browsers and public web APIs.

The next section turns to JSON and its fundamental characteristics.


Unraveling JSON: Unearthing Essential Characteristics

JSON, short for JavaScript Object Notation, is a text-based format widely used for data exchange. Its simplicity and readability make it easy for both people and machines to work with. Its syntax follows conventions familiar to programmers of the C family of languages, yet JSON is language-independent, with support in C, C++, C#, Java, JavaScript, Perl, Python, and many more.

For data transfer on the web, JSON benefits from native support in browsers and close alignment with JavaScript. It represents structured data as objects and arrays, which can be nested to model complex relationships.

Here are JSON's core aspects:

1. Data Types: JSON supports strings, numbers, objects, arrays, booleans (true or false), and null. The following sample uses each of these types:

<code class="language-json">{
  "string": "This is a string",
  "number": 123,
  "object": {"key": "value"},
  "array": [1, 2, 3],
  "boolean": true,
  "null": null
}</code>
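When parsing, Python's standard json module maps each of these JSON value types onto a native Python type. A short sketch using a document like the sample above (keys named after each type for readability):

```python
import json

document = """
{
  "string": "This is a string",
  "number": 123,
  "object": {"key": "value"},
  "array": [1, 2, 3],
  "boolean": true,
  "null": null
}
"""

parsed = json.loads(document)

# object -> dict, array -> list, string -> str, integer -> int
# (a number with a fraction would become float), true/false -> bool, null -> None
for key, value in parsed.items():
    print(f"{key:8} -> {type(value).__name__}")
```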

2. Syntax: JSON's syntax is based on JavaScript object notation, but JSON itself is plain text, so JSON data can be produced and parsed in any programming language. Here is a simple example:

<code class="language-json">{
  "name": "Jane",
  "age": 30,
  "city": "London"
}</code>

3. Objects & Arrays: JSON structures data with objects and arrays. Objects are wrapped in curly braces {} and hold a set of key-value pairs. Arrays are ordered lists of values enclosed in square brackets [].

<code class="language-json">{
  "people": [
    {"givenName": "Alice", "familyName": "Brown"},
    {"givenName": "Bob", "familyName": "Green"},
    {"givenName": "Charlie", "familyName": "White"}
  ]
}</code>
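Because objects and arrays nest freely, code that consumes such a document typically walks the array and reads keys from each object. A minimal Python sketch with the same structure (the top-level key `people` is illustrative):

```python
import json

text = """
{
  "people": [
    {"givenName": "Alice", "familyName": "Brown"},
    {"givenName": "Bob", "familyName": "Green"},
    {"givenName": "Charlie", "familyName": "White"}
  ]
}
"""

data = json.loads(text)

# The array becomes a list; each object inside it becomes a dict
full_names = [f'{p["givenName"]} {p["familyName"]}' for p in data["people"]]
print(full_names)  # ['Alice Brown', 'Bob Green', 'Charlie White']
```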

4. Parsing: Parsing JSON is straightforward, because most modern programming languages either support it natively or offer libraries for it. Here's how to parse a JSON string in JavaScript:

<code class="language-javascript">const person = JSON.parse('{"name": "Jane", "age": 30, "city": "London"}'); // person.age === 30</code>

5. Serialization: Converting a JavaScript value into a JSON string is called serialization. Here's an example using a JavaScript object:

<code class="language-javascript">const person = {name: "Jane", age: 30, city: "London"};
const myJSON = JSON.stringify(person); // '{"name":"Jane","age":30,"city":"London"}'</code>
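The same parse-and-serialize round trip in Python uses json.loads and json.dumps from the standard library. The separators argument is optional; here it produces compact output matching JSON.stringify's default, while indent gives a human-friendly layout:

```python
import json

# Parsing: JSON text -> Python dict
person = json.loads('{"name": "Jane", "age": 30, "city": "London"}')
print(person["name"])  # Jane

# Serialization: Python dict -> compact JSON text
compact = json.dumps(person, separators=(",", ":"))
print(compact)  # {"name":"Jane","age":30,"city":"London"}

# Pretty-printed output for humans
print(json.dumps(person, indent=2))

# A round trip preserves the data
assert json.loads(compact) == person
```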

JSON's simplicity and flexibility have driven its wide adoption in APIs, configuration files, and data storage. It does have limitations, however. The next section compares JSON with Protobuf, another prominent data exchange format, to help you decide which better suits your requirements.

Evaluating the Attributes of Protobuf and JSON

This section takes a closer look at Protobuf and JSON, two widely used data serialization formats, covering their characteristics, performance, and applicability, with code excerpts and key points that highlight their differences and similarities.

1. Data Structure and Encoding:

Protobuf:

Protocol Buffers (Protobuf) uses a binary encoding, which is compact but not human-readable. It requires a schema (a .proto file) that defines the structure of the data. Here is an example schema:

<code class="language-protobuf">syntax = "proto2";  // required/optional labels are proto2 syntax

message PersonalInfo {
  required string fullname = 1;
  required int32 identity = 2;
  optional string emailaddr = 3;
}</code>

JSON:

JSON, by contrast, is readable by both humans and machines and does not require a predefined schema. An equivalent JSON object would look like this:

<code class="language-json">{
  "fullname": "Jane Doe",
  "identity": 456,
  "emailaddr": "jane.doe@example.com"
}</code>

2. Performance:

Protobuf:

Protobuf generally outperforms JSON in speed and payload size. Its binary encoding is cheaper to process: integers are stored as compact varints rather than decimal text, and strings and nested messages carry a length prefix, so a parser can skip over them without scanning for delimiters.

JSON:

Because it is text-based, JSON is somewhat slower to parse and produces larger payloads. For many web workloads, however, this difference is unlikely to be a problem.

3. Usability:

Protobuf:

Protobuf requires a compilation step to generate data access classes in your chosen language. This adds complexity, but it brings benefits such as type checking.

JSON:

Because it requires neither a compilation step nor a schema definition, JSON is easy to use, but the lack of type checking can increase the risk of errors.
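Without a schema, a JSON parser accepts any well-formed document, so type checks fall to your own code. A minimal sketch, reusing the field names from the PersonalInfo example earlier in this section; the `check_person` helper and its type table are hypothetical, a hand-written stand-in for what a Protobuf schema would enforce:

```python
import json

# Hypothetical hand-written "schema": expected Python type per field
EXPECTED_TYPES = {"fullname": str, "identity": int, "emailaddr": str}

def check_person(data: dict) -> list[str]:
    """Return a list of type problems found in a parsed JSON object."""
    problems = []
    for field, expected in EXPECTED_TYPES.items():
        if field not in data:
            problems.append(f"missing field: {field}")
        # bool is a subclass of int in Python, so reject it explicitly
        elif not isinstance(data[field], expected) or isinstance(data[field], bool):
            problems.append(
                f"{field}: expected {expected.__name__}, got {type(data[field]).__name__}"
            )
    return problems

# Both documents parse without complaint; only the explicit check catches the bad id
good = json.loads('{"fullname": "Jane Doe", "identity": 456, "emailaddr": "jane@example.com"}')
bad = json.loads('{"fullname": "Jane Doe", "identity": "456", "emailaddr": "jane@example.com"}')

print(check_person(good))  # []
print(check_person(bad))   # ['identity: expected int, got str']
```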

4. Interoperability:

Protobuf:

Protobuf is independent of programming language and platform, which makes it well suited to communication between systems.

JSON:

JSON is likewise free of language and platform constraints, and its text format makes it especially convenient for web applications and APIs.

5. Comment Support:

Protobuf:

Protobuf schema files (.proto) support comments in both // and /* */ styles, which is useful for documenting messages and fields.

JSON:

Standard JSON, by contrast, does not allow comments.
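You can see this with Python's standard json module, which follows the JSON specification and rejects a document containing a // comment. A minimal sketch (the `is_valid_json` helper is just for illustration):

```python
import json

commented = """
{
  // Comments are legal in a .proto file, but not in standard JSON
  "name": "John"
}
"""
plain = '{"name": "John"}'

def is_valid_json(text: str) -> bool:
    """Return True if text parses as standard JSON."""
    try:
        json.loads(text)
    except json.JSONDecodeError:
        return False
    return True

print(is_valid_json(commented))  # False
print(is_valid_json(plain))      # True
```

Some tools accept extended dialects such as JSONC or JSON5 that permit comments, but those documents are not standard JSON.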

All things considered, Protobuf excels in performance and strong typing, making it an excellent choice for large, complex systems. JSON, on the other hand, stands out for its simplicity and versatility, making it a natural fit for many web applications and APIs. The choice between them depends primarily on the exact demands of your project.

An Analytical Comparison: JSON vs Protobuf

This section weighs the merits and drawbacks of Protobuf and JSON. By examining their performance, ease of use, flexibility, and related factors, it aims to help you choose the right format for your particular requirements.

1. Dissecting Protobuf: Advantages and Disadvantages

Merits of Protobuf:

a. Efficiency: Protobuf's binary serialization is fast and compact, producing smaller payloads than JSON. This makes Protobuf a prime choice for high-performance systems and network communication.

<code class="language-protobuf">syntax = "proto3";  // proto3 needs no required/optional labels

// Sample Protobuf message
message Individual {
  string username = 1;
  int32 userId = 2;
  string userEmail = 3;
}</code>

b. Static Typing: Protobuf enforces data types, which helps prevent errors. You must define the structure and types of your data before using it.

c. Backward and Forward Compatibility: Protobuf lets you evolve your data structure, for example by adding new fields, without breaking older code. This is valuable when maintaining large applications.

Demerits of Protobuf:

a. Complexity: Protobuf is more complex to work with than JSON. You need the Protobuf compiler (protoc) to generate data access classes from your schema.

b. Limited Human Readability: Unlike JSON, Protobuf data is hard for people to read or edit because it is binary.

2. Breaking Down JSON: Strengths and Weaknesses

Merits of JSON:

a. Ease of Use: JSON is easy to work with. Its syntax is understandable even to beginners, and it requires no compilation step.

<code class="language-json">{
  "username": "Jane Doe",
  "userId": 321,
  "userEmail": "jane.doe@example.com"
}</code>

b. Human Readability: Because JSON is text-based, people can easily read and edit it.

c. Universality: Almost all modern programming languages support JSON, enhancing its appeal as a flexible option for data interchange.

Demerits of JSON:

a. Efficiency: JSON is less efficient than Protobuf. Representing the same data takes more bytes, and parsing typically takes more time.

b. Loose Typing: JSON's typing is looser than Protobuf's. Nothing prevents a field that should hold a number from arriving as a string, which can cause errors when data does not match the expected format.

c. No Built-in Versioning: JSON has no built-in mechanism for schema versioning, so changes to the data structure can break older code unless readers are written to tolerate them.

To wrap up, both Protobuf and JSON have distinct merits and drawbacks. Protobuf comes out ahead in high-performance systems where efficiency and static typing matter. JSON's ease of use and human readability, in contrast, make it well suited to web APIs and configuration. The final choice depends largely on your specific context.

Real-world Implementations: Observing Protobuf and JSON in Action

Protobuf and JSON have both become established choices for data serialization. Each brings its own advantages and drawbacks, and which fits best depends on the project's requirements. Let's look at some situations where each has proven effective.

1. Web Development and Application Programming Interfaces (APIs)

In web data communication, JSON is the dominant format. Its native compatibility with JavaScript and its human-readable structure have made it an integral part of web development and APIs. The Twitter API, for instance, returns tweets, user information, and much more as JSON:

<code class="language-json">{
  "created_at": "Thu Apr 06 15:24:15 +0000 2017",
  "id_str": "850006245121695744",
  "text": "1/ Today we’re sharing our vision for the future of the Twitter API platform!",
  ...
}</code>

Responses like this are easy to read and to consume directly from JavaScript, which makes JSON an excellent choice for web-based applications.

2. High-Performance Systems

Protobuf, in contrast, is commonly favored for high-performance systems because of its compact binary format and fast serialization and deserialization. Google, for example, uses Protobuf extensively for its internal RPC systems. Here is a basic Protobuf message:

<code class="language-protobuf">syntax = "proto2";  // required/optional labels are proto2 syntax

message SearchRequest {
  required string query = 1;
  optional int32 page_number = 2;
  optional int32 result_per_page = 3;
}</code>

Encoded in binary, an instance of this message is smaller and faster to process than its JSON counterpart.

3. IoT Devices

In IoT, Protobuf's compact encoding and speed make it a good fit. IoT devices have limited resources, so compact and fast data formats are ideal. A smart thermostat, for example, might use Protobuf to send temperature readings to a server.

<code class="language-protobuf">syntax = "proto2";  // required/optional labels are proto2 syntax

message TemperatureData {
  required float temperature = 1;
  optional bool is_heating_on = 2;
}</code>

Encoded this way, each reading is small and quick to process, which makes Protobuf well suited to IoT devices.
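To see how small a reading can be, here is a minimal sketch that hand-encodes one TemperatureData message following the Protobuf wire format: a float is written as 4 little-endian bytes (wire type 5, 32-bit), and a bool as a one-byte varint (wire type 0). The reading values are made up for illustration, and the encoding is written by hand rather than with protoc-generated code:

```python
import json
import struct

def key(field_number: int, wire_type: int) -> bytes:
    # Single-byte key; valid here because both field numbers are below 16
    return bytes([(field_number << 3) | wire_type])

temperature = 21.5   # illustrative reading
is_heating_on = True

proto_bytes = (
    key(1, 5) + struct.pack("<f", temperature)        # float: fixed 32-bit, little-endian
    + key(2, 0) + bytes([1 if is_heating_on else 0])  # bool: varint 0 or 1
)
json_bytes = json.dumps(
    {"temperature": temperature, "is_heating_on": is_heating_on}
).encode("utf-8")

print(len(proto_bytes), len(json_bytes))  # 7 44
```

For a device sending readings every few seconds over a constrained link, that per-message difference adds up.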

4. Big Data and Analytics

Thanks to its ease of use and versatility, JSON is widely used in big data and analytics. Log entries, for example, are often stored as JSON for easy inspection and processing.

<code class="language-json">{
  "timestamp": "2022-01-01T00:00:00Z",
  "level": "INFO",
  "message": "User logged in",
  "user_id": "12345"
}</code>

This log entry is easy to read and to query, which is why JSON is a popular choice for logging and data analysis.
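Log pipelines often store one JSON object per line (a convention known as JSON Lines), which makes entries easy to query with a few lines of code. A minimal sketch; the first entry is the one shown above, and the others are invented for illustration:

```python
import json

# One JSON object per line; entries after the first are illustrative
log_lines = """\
{"timestamp": "2022-01-01T00:00:00Z", "level": "INFO", "message": "User logged in", "user_id": "12345"}
{"timestamp": "2022-01-01T00:05:00Z", "level": "ERROR", "message": "Payment failed", "user_id": "67890"}
{"timestamp": "2022-01-01T00:06:00Z", "level": "INFO", "message": "User logged out", "user_id": "12345"}
"""

entries = [json.loads(line) for line in log_lines.splitlines() if line.strip()]

# Queries: every event for one user, and all errors
user_events = [e["message"] for e in entries if e["user_id"] == "12345"]
errors = [e for e in entries if e["level"] == "ERROR"]

print(user_events)  # ['User logged in', 'User logged out']
print(len(errors))  # 1
```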

Ultimately, the choice between Protobuf and JSON depends heavily on your project. JSON's readability and versatility make it suitable for web development, APIs, and large datasets, while Protobuf's compactness and efficiency make it a good fit for high-performance systems and IoT devices.


Summing It Up: Deciding Between Protobuf and JSON

Choosing between Protobuf and JSON for data serialization isn't a one-size-fits-all decision. Each has its own strengths and drawbacks, and the right choice depends on what your project entails. This final section summarizes the key points covered so far and offers some pointers for choosing between these two widespread formats.

1. Speed and Efficiency

If your project prioritizes speed and efficiency, in terms of both data size and processing time, Protobuf comes out ahead. Its binary format produces smaller payloads than JSON's text, which is vital in network transmissions where bandwidth is a concern.

<code class="language-python">import json

import indiv_pb2  # hypothetical module generated by protoc; defines an Individual message

# Message serialization in Protobuf: returns bytes
indiv_message = indiv_pb2.Individual(name="John", id=123, email="john@example.com")
proto_bytes = indiv_message.SerializeToString()

# Message serialization in JSON: returns str
individual_dictionary = {"name": "John", "id": 123, "email": "john@example.com"}
json_text = json.dumps(individual_dictionary)

print(len(proto_bytes), len(json_text.encode("utf-8")))</code>

In this Python code, the Protobuf-serialized message will be noticeably smaller than its JSON counterpart.

2. Readability and Debugging

Conversely, if your project calls for easy inspection and debugging, JSON has the advantage: its readable format makes data easy to understand and troubleshoot.

<code class="language-json">{
  "name": "John",
  "id": 123,
  "email": "john@example.com"
}</code>

This JSON can be understood at a glance, whereas the same data encoded with Protobuf would be a binary structure that is not human-friendly.

3. Schema Evolution

Both formats can accommodate changes to the data structure, but Protobuf offers a more robust approach: it lets you add new fields to a message without breaking older applications that don't know about them.
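The mechanism is simple: every field on the wire carries its field number and wire type, so a parser that meets a number it doesn't know can work out how many bytes to skip. A minimal sketch of such a decoder, handling only varint and length-delimited fields; the scenario (an older reader that knows fields 1 and 2, and a newer writer that adds a hypothetical field 4) is assumed for illustration:

```python
def read_varint(buf: bytes, pos: int) -> tuple[int, int]:
    """Decode a base-128 varint starting at pos; return (value, new_pos)."""
    result, shift = 0, 0
    while True:
        byte = buf[pos]
        pos += 1
        result |= (byte & 0x7F) << shift
        if not byte & 0x80:  # high bit clear: last byte of this varint
            return result, pos
        shift += 7

def decode(buf: bytes, known_fields: set[int]) -> dict[int, object]:
    """Decode varint (type 0) and length-delimited (type 2) fields,
    skipping any field number the reader doesn't know."""
    fields, pos = {}, 0
    while pos < len(buf):
        key, pos = read_varint(buf, pos)
        number, wire_type = key >> 3, key & 0x07
        if wire_type == 0:
            value, pos = read_varint(buf, pos)
        elif wire_type == 2:
            length, pos = read_varint(buf, pos)
            value, pos = buf[pos:pos + length], pos + length
        else:
            raise ValueError(f"wire type {wire_type} not handled in this sketch")
        if number in known_fields:
            fields[number] = value
    return fields

# Written by a newer version: name (1), id (2), plus a new field 4
new_message = b"\x0a\x04John" + b"\x10\x7b" + b"\x22\x07premium"

# An older reader that only knows fields 1 and 2 still decodes what it understands
print(decode(new_message, known_fields={1, 2}))  # {1: b'John', 2: 123}
```

Real Protobuf runtimes go a step further and typically retain unknown fields, so they survive if the older application re-serializes the message.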

4. Support for Various Languages

Protobuf and JSON both have extensive language support. Because of its JavaScript roots, however, JSON is supported natively in web browsers and JavaScript-based platforms such as Node.js.

5. Interoperability

Thanks to its text-based nature, JSON is more interoperable than Protobuf: any programming language that can handle text can work with it. Protobuf, in contrast, typically relies on generated code to serialize and deserialize data.

In the end, the choice between Protobuf and JSON comes down to your project's specific requirements. If your project depends on high performance, efficiency, and robust schema evolution, Protobuf is the stronger candidate. If readability, easy debugging, and broad interoperability matter more, JSON is the better alternative. Understanding these differences is the key to making an informed choice.
