2024-2-16 22:30:32 Author: securityboulevard.com

By Paweł Płatek (GrosQuildu)

AWS Nitro Enclaves are locked-down virtual machines with support for attestation. They are Trusted Execution Environments (TEEs), similar to Intel SGX, making them useful for running highly security-critical code.

However, the AWS Nitro Enclaves platform lacks thorough documentation and mature tooling. So we decided to do some deep research into it to fill in some of the documentation gaps and, most importantly, to find security footguns and offer some advice for avoiding them.

This blog post focuses specifically on enclave images and the attestation process.

First, here’s a tl;dr on our recommendations to avoid security footguns while building and signing an enclave:

- Minimize implicit trust relationships when building an enclave image.

- Check the kernel version and hash.

- Review the kernel configuration and boot command line.

- Verify the code of the pre-compiled binaries (the init executable, NSM driver, and linuxkit tool).

- Verify that the correct Docker image is used to build the enclave image.

- Get the AWS root certificate from a trusted source and verify its hash.

- Ensure that the threat model of your system takes into account the fact that AWS is a centralized point of trust.

- Check PCR-1 and PCR-2 in addition to PCR-0.

- Be aware that an EIF’s metadata section is not attested.

- Do not parse EIF signatures—reconstruct and verify them instead.

- Do not push unprotected private keys to EC2 instances for enclave signing.

- Do not use the nitro-cli describe-eif command on untrusted EIFs.

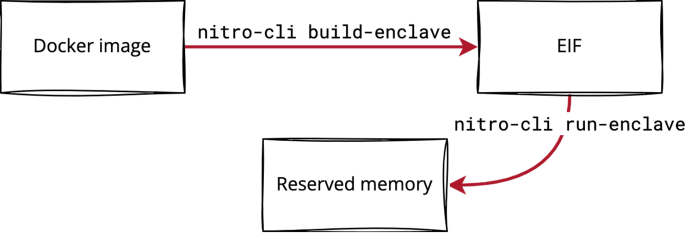

Running an enclave

To run an enclave, use SSH to connect to an AWS EC2 instance and use the nitro-cli tool to do the following:

- Build an enclave image from a Docker image and a few pre-compiled files.
  - Docker is used to create an archive of files for the enclave’s user space.
  - The pre-compiled binaries are described later in this blog post.

- Start the enclave from the enclave image.

The enclave image is a binary blob in the enclave image file (EIF) format.

Figure 1: The flow of building an enclave

This is what’s happening under the hood when an enclave is started:

- Memory and CPUs are freed from the EC2 instance and reserved for the enclave.

- The EIF is copied to the newly reserved memory.

- The EC2 instance asks the Nitro Hypervisor to start the enclave.

The Nitro Hypervisor is responsible for securing the enclave (e.g., clearing memory before it’s returned to the EC2 instance). The enclave is attached to its parent EC2 instance and cannot be moved between EC2 instances. All of the code that is executed inside the enclave is provided in the EIF. So what does the EIF look like?

The EIF format

The best “specification” for the EIF format that we have is the code in the aws-nitro-enclaves-image-format repo. The EIF format is rather simple: a header and an array of sections. Each section is a header and a binary blob.

Figure 2: The header and sections of an EIF

The CRC32 checksum is computed over the header (minus 4 bytes reserved for the checksum itself) and all of the sections (including the headers).
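The checksum rule above can be sketched in a few lines. This is a minimal illustration with a hypothetical function name, not the code from aws-nitro-enclaves-image-format. It makes two assumptions: the checksum is the standard CRC-32 (the one Python’s zlib.crc32 computes), and the 4 reserved checksum bytes are the last 4 bytes of the serialized header.

```python
import zlib


def eif_crc32(header: bytes, sections: list[bytes]) -> int:
    """Illustrative EIF checksum over the header (minus its checksum field) and all sections.

    Assumptions, not confirmed by the format code: standard CRC-32 as in zlib,
    and the 4-byte checksum field sits at the very end of the header.
    Each entry in `sections` is a section header followed by its data blob.
    """
    crc = zlib.crc32(header[:-4])  # skip the 4 bytes reserved for the checksum itself
    for section in sections:
        # Section headers are covered too, so feed the raw bytes as they appear in the file.
        crc = zlib.crc32(section, crc)
    return crc & 0xFFFFFFFF
```

Because CRC-32 is computed incrementally, feeding the sections one by one gives the same value as hashing their concatenation.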

There are five types of EIF sections:

| Section type | Format | Description |
|---|---|---|
| Kernel | Binary | A bzImage file |
| Cmdline | String | The boot command line for the kernel |
| Metadata | JSON | The build information, such as the kernel configuration and the Cargo and Docker versions used |
| Ramdisk | cpio | The bootstrap ramfs, which includes the NSM driver and init file |
| Ramdisk | cpio | The user space ramfs, which includes files from the Docker image |
| Signature | CBOR | A vector of tuples in the form (certificate, signature) |

So with an EIF, we have all that’s needed to run a VM: a kernel image, a command line for it, bootstrap binaries (the NSM driver and init executable), and a user space filesystem.

But where does this data come from, and can you trust it?

Who do you trust?

Before we get into the details, you should know that there are quite a few implicit trust relationships involved in the data that flows into an EIF when it is created. For that reason, it is important to verify how data gets into your EIF images.

To verify dataflows into an EIF image, we need to look into the enclave_build package that is used by the nitro-cli tool.

A kernel image (which is a bzImage file), the init executable, and the NSM driver are pre-compiled and stored in the /usr/share/nitro_enclaves/blobs/ folder (on an EC2 instance). They are pulled to the instance when the aws-nitro-enclaves-cli-devel package is installed.

Figure 3: Part of the Nitro Enclaves CLI installation documentation

The pre-compiled binaries of the kernel image, the init executable, and the NSM driver are generated by the code in the aws-nitro-enclaves-sdk-bootstrap repo, according to the repo’s README (though we have no way to verify this claim). That code does the following:

- Downloads and builds the kernel, using a custom kernel configuration

- Verifies the kernel’s signature with the gpg2 tool (trusting keys belonging to torvalds@kernel.org and gregkh@kernel.org)

- Builds the init executable that will be used to bootstrap the system

- Builds the NSM driver that will be used by the enclave to communicate with the Nitro Hypervisor

The binaries can also be found in the aws-nitro-enclaves-cli repo. We can compare SHA-384 hashes of the pre-compiled binaries from the three sources—the EC2 instance, the aws-nitro-enclaves-cli repo, and those generated by the aws-nitro-enclaves-sdk-bootstrap repo (for nitro-cli version 1.2.2):

| | In the EC2 instance | In aws-nitro-enclaves-cli | Built with aws-nitro-enclaves-sdk-bootstrap |
|---|---|---|---|
| Kernel | 127b32...9821c4 | 127b32...9821c4 | 4b3719...016c58 |
| Kernel config | e9704c...7d9d35 | e9704c...7d9d35 | 9e634d...663f99 |
| Cmdline | cefb92...ab0b0f | cefb92...ab0b0f | N/A |
| init | 7680fd...a435bb | e23a90...4272ea | 601ec5...d4b25e |
| NSM driver | 2357cb...8192c | 993d1f...657b50 | 96d0df...4f5306 |
| linuxkit | 31ed3c...035664 | 581ddc...2ee024 | N/A |

The kernel source code is obtained securely, and the kernel hashes on the EC2 instance and in the aws-nitro-enclaves-cli repo are consistent. A manually built kernel has a different hash than the pre-compiled kernel, probably because its configuration is different. We can manually verify the kernel’s configuration and boot command line, so their hashes are not so important.

Interestingly, the hashes of the init and the NSM driver are completely off. To ensure that these executables were not maliciously modified, we would have to build them from the source code and debug the differences between the freshly built and pre-compiled versions (with a tool like GDB or Ghidra). Alternatively, we have to trust that the pre-compiled files are safe to use.
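Each comparison like this one only needs a SHA-384 digest per file. The sketch below hashes every file in a directory (for example, /usr/share/nitro_enclaves/blobs/ on an EC2 instance). It reports each file whose digest differs from an expected value you trust. The function names and the shape of the `expected` mapping are illustrative assumptions, not part of nitro-cli.

```python
import hashlib
from pathlib import Path


def sha384_file(path: Path) -> str:
    """Return the hex SHA-384 digest of a file, read in 64 KiB chunks."""
    h = hashlib.sha384()
    with path.open("rb") as f:
        for chunk in iter(lambda: f.read(1 << 16), b""):
            h.update(chunk)
    return h.hexdigest()


def mismatched_blobs(blob_dir: str, expected: dict[str, str]) -> dict[str, str]:
    """Map file name -> actual digest for every file that does not match `expected`.

    Files absent from `expected` are reported as well, since nothing vouches for them.
    """
    bad = {}
    for path in sorted(Path(blob_dir).iterdir()):
        if not path.is_file():
            continue
        digest = sha384_file(path)
        if expected.get(path.name) != digest:
            bad[path.name] = digest
    return bad
```

An empty result means every file matched. A non-empty result is the list of binaries you would need to rebuild and diff, or else consciously decide to trust.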

Next, there are the ramdisk sections, which are simply cpio archives that store binary files. There are at least two ramdisks in every EIF:

- The first ramdisk contains the init executable and the NSM driver. init installs the NSM driver, chroots to the rootfs/ directory, and calls execvpe with the command from the .cmd file and the environment variables from the .env file.

- The second ramdisk is created from the Docker image provided to the nitro-cli command.
  - It stores a command that init uses to pivot (in the .cmd file), environment variables (in the .env file), and all files from the Docker image (in the rootfs/ directory).
  - The command and environment variables are parsed from the Dockerfile.
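The pivot performed by init can be modeled in Python to show the order of operations. This is a sketch, not AWS’s init, which is a pre-compiled binary. It omits loading the NSM driver. Several details are assumptions, not facts stated above: the .cmd file holds one argument per line, the .env file holds one KEY=VALUE pair per line, and the default paths are hypothetical.

```python
import os


def read_lines(path: str) -> list[str]:
    """Read a newline-separated config file, dropping empty lines (assumed format)."""
    with open(path, encoding="utf-8") as f:
        return [line for line in f.read().split("\n") if line]


def parse_env(lines: list[str]) -> dict[str, str]:
    """Turn KEY=VALUE lines into an environment mapping; lines without '=' are skipped."""
    env = {}
    for line in lines:
        key, sep, value = line.partition("=")
        if sep:
            env[key] = value
    return env


def pivot_and_exec(cmd_path: str = "/.cmd", env_path: str = "/.env", root: str = "/rootfs") -> None:
    """Read the command and environment, chroot into the Docker file tree, then exec."""
    argv = read_lines(cmd_path)      # read before chroot, which hides these files
    env = parse_env(read_lines(env_path))
    os.chroot(root)                  # requires root privileges
    os.chdir("/")
    os.execvpe(argv[0], argv, env)   # replaces the current process; does not return
```

The configuration must be read before `os.chroot`, because the .cmd and .env files live outside the rootfs/ directory.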

To construct cpio archives for ramdisks, the nitro-cli tool uses the linuxkit tool, which is downloaded along with the other pre-compiled files. AWS uses “a slightly modified” version of the tool (that’s why the hashes don’t match). linuxkit downloads the Docker image and extracts files from it, trying to make identical, reproducible copies of them. Notably, nitro-cli uses version 0.8 of linuxkit, which is outdated.

Figure 4: A depiction of how an EIF is created

Here’s how nitro-cli gets the Docker image used to build an EIF:

- nitro-cli builds the image locally if the --docker-dir command line option is provided.

- Otherwise, nitro-cli checks if the image is locally available.
  - If it’s not, then it pulls the image using the shiplift library and credentials from a local file.

- linuxkit also tries to use locally available images; if images are not locally available, it pulls them from a remote registry using credentials obtained through the docker login command.
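The lookup order above can be written as two small decision functions. This helps when reasoning about which image actually ends up in the EIF. Everything here is a hypothetical model of the behavior described in the list; none of these names exist in nitro-cli or linuxkit.

```python
from typing import Optional


def nitro_cli_image_source(docker_dir: Optional[str], image_is_local: bool) -> str:
    """Hypothetical model: where nitro-cli takes the Docker image from."""
    if docker_dir is not None:
        return "build from " + docker_dir  # --docker-dir was given
    if image_is_local:
        return "local image"  # used as-is; no pull and no origin check
    return "pull via shiplift (local credentials file)"


def linuxkit_image_source(image_is_local: bool) -> str:
    """Hypothetical model: where linuxkit takes the image whose files go into the ramdisk."""
    if image_is_local:
        return "local image"
    return "pull from registry (docker login credentials)"


# A tag that already exists locally is picked up by both tools without any pull.
print(nitro_cli_image_source(None, True), "/", linuxkit_image_source(True))
# local image / local image
```

Both paths prefer whatever is already tagged locally. This is why the build logs, not the image tag, are the evidence of which image was used.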

Producing enclaves from Docker files in a reproducible, transparent, and easy-to-audit way is tricky—you can read more about that fact in Artur Cygan’s “Enhancing trust for SGX enclaves” blog post. When building EIFs, you should at least make sure that nitro-cli uses the right image. To do so, consult the Docker build logs (as Docker images and the daemon do not store information about image origin).

What do you attest?

The main feature of AWS Nitro Enclaves is cryptographic attestation. A running enclave can ask the Nitro Hypervisor to compute (measure) hashes of the enclave’s code and sign them with AWS’s private key, or, more precisely, with a key whose certificate is signed by a certificate that is signed by a certificate… that is signed by the AWS root certificate.

You can use the cryptographic attestation feature to establish trust between an enclave’s source code and the code that is actually executed. Just make sure to get the AWS root certificate from a trusted source and to verify its hash.
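Checking the root certificate comes down to comparing its digest with a value pinned from a source you trust. The sketch below assumes you already hold the downloaded certificate bytes and an expected hex digest obtained out of band. SHA-256 is only a default here; use whichever algorithm your trusted source publishes. The function name is hypothetical.

```python
import hashlib
import hmac


def matches_pinned_digest(data: bytes, expected_hex: str, algorithm: str = "sha256") -> bool:
    """Return True if `data` hashes to the pinned hex digest."""
    actual = hashlib.new(algorithm, data).hexdigest()
    # Normalize the pinned value (whitespace, case) before the constant-time comparison.
    return hmac.compare_digest(actual, expected_hex.strip().lower())
```

For example, read the certificate file in binary mode and call `matches_pinned_digest(cert_bytes, "<trusted hex digest>")`. Refuse to use the certificate as a trust anchor if the call returns False.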

What’s important is the fact that AWS owns both the attestation key and the infrastructure. This means that you must completely trust AWS. If AWS is compromised or acts maliciously, it’s game over. This security model is different from the SGX architecture, where trust is divided between Intel (the attestation key owner) and a cloud provider.

When the Hypervisor signs an enclave’s hashes, it’s specifically signing a CBOR-encoded document specified in the aws-nitro-enclaves-nsm-api repo. There are a few items in the document, but for now we are interested in the platform configuration registers (PCRs), which are measurements (cryptographic hashes) associated with the enclave. The first three PCRs are the hashes of the enclave’s code.

Figure 5: The first three PCRs of an enclave

PCRs 0 through 2 are just SHA-384 hashes over the sections’ data:

- PCR-0: sha384('\0'*48 | sha384(Kernel | Cmdline | Ramdisk[:]))

- PCR-1: sha384('\0'*48 | sha384(Kernel | Cmdline | Ramdisk[0]))

- PCR-2: sha384('\0'*48 | sha384(Ramdisk[1:]))
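These formulas translate directly into code. The sketch below is a reading of the formulas, not AWS’s implementation. It extends a 48-byte all-zero register with the SHA-384 digest of the concatenated section data. `kernel`, `cmdline`, and `ramdisks` are assumed to be the raw data of each section, without section headers.

```python
import hashlib

ZERO_PCR = b"\x00" * 48  # initial value of a SHA-384 PCR


def extend(data: bytes) -> bytes:
    """sha384(zeros(48) | sha384(data)): one extension of an all-zero PCR."""
    return hashlib.sha384(ZERO_PCR + hashlib.sha384(data).digest()).digest()


def image_pcrs(kernel: bytes, cmdline: bytes, ramdisks: list[bytes]) -> dict[int, bytes]:
    """Compute PCR-0, PCR-1, and PCR-2 from section data (first ramdisk = bootstrap ramfs)."""
    return {
        0: extend(kernel + cmdline + b"".join(ramdisks)),
        1: extend(kernel + cmdline + ramdisks[0]),
        2: extend(b"".join(ramdisks[1:])),
    }
```

Each value is 48 bytes, so `image_pcrs(...)[0].hex()` yields the 96-character hex string usually shown for a PCR.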

As you can see, there is no domain separation between the sections’ data—sections are simply concatenated. Moreover, PCR hashes do not include the section headers. This means that we can move bytes between adjacent sections without changing PCRs. For example, if we strip bytes from the beginning of the second ramdisk and append them to the first one, the PCR-0 measurement won’t change. That’s a ticking pipe bomb, but it is currently not exploitable. Regardless, we recommend checking PCR-1 and PCR-2 in addition to PCR-0 whenever possible.
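The missing domain separation can be shown concretely. The sketch below recomputes the three measurements for two images: the original, and a variant in which the first 4 bytes of the second ramdisk have been moved to the end of the first ramdisk. The PCR formulas are reimplemented so the block stands alone, and the section contents are made-up placeholder bytes.

```python
import hashlib
import hmac


def extend(data: bytes) -> bytes:
    """sha384(zeros(48) | sha384(data))."""
    return hashlib.sha384(b"\x00" * 48 + hashlib.sha384(data).digest()).digest()


def image_pcrs(kernel: bytes, cmdline: bytes, ramdisks: list[bytes]) -> dict[int, bytes]:
    return {
        0: extend(kernel + cmdline + b"".join(ramdisks)),
        1: extend(kernel + cmdline + ramdisks[0]),
        2: extend(b"".join(ramdisks[1:])),
    }


def pcrs_match(actual: dict[int, bytes], expected: dict[int, bytes]) -> bool:
    """Accept only if PCR-0, PCR-1, and PCR-2 all match; a missing PCR is a failure."""
    return all(
        i in actual and i in expected and hmac.compare_digest(actual[i], expected[i])
        for i in (0, 1, 2)
    )


# Placeholder section data; real sections are a bzImage, a string, and cpio archives.
kernel, cmdline = b"KERNEL", b"console=ttyS0"
original = image_pcrs(kernel, cmdline, [b"bootstrap-ramfs", b"EVILuser-ramfs"])
# Move 4 bytes across the ramdisk boundary; section headers are not measured.
shifted = image_pcrs(kernel, cmdline, [b"bootstrap-ramfsEVIL", b"user-ramfs"])

print(original[0] == shifted[0])      # True: PCR-0 alone cannot tell them apart
print(pcrs_match(shifted, original))  # False: PCR-1 and PCR-2 expose the shift
```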

One more observation is that the metadata section of the EIF is not attested. It’s unspecified how and when users should use that section, so it’s hard to imagine an exploit scenario for this property. Just make sure your system’s security doesn’t depend on content from that section.

Where do you sign?

Finally, we’ll discuss the signature section of the EIF. This section contains a CBOR-encoded vector of tuples, each of which is a certificate-signature pair. The signature is a CBOR-encoded COSE_Sign1 structure that contains the encoded payload (tuples of PCR index-value pairs), the actual signature over the payload, and some metadata. The certificate is in PEM format.

Section = [(certificate, COSE structure), (certificate, COSE structure), …]

COSE structure = COSE_Sign1([(PCR index, PCR value), (PCR index, PCR value), …])

COSE_Sign1(payload) = structure {
    payload = payload
    signature = sign(payload)
    metadata = signing algorithm (etc)
}

In the current version of the EIF format, the section contains only the signature for PCR-0, the hash of the entire enclave image. (But note that you can make an EIF with many signature elements; it will still be run by the Hypervisor, but it won’t validate signatures after the first one.)

The signing code is implemented by the aws-nitro-enclaves-cose library.

PCR-8 is a hash of the EIF file’s signing certificate and is computed as follows. The certificate is first decoded from its original PEM format and encoded as DER.

PCR-8 = sha384('\0'*48 | sha384(SignatureSection[0].certificate))
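The PCR-8 formula can be sketched with only the standard library. `ssl.PEM_cert_to_DER_cert` strips the PEM armor and base64-decodes the body. It does not validate the certificate itself. The function name is hypothetical, and the formula is taken as given above.

```python
import hashlib
import ssl


def pcr8_from_pem(pem_certificate: str) -> bytes:
    """PCR-8 = sha384(zeros(48) | sha384(DER-encoded signing certificate))."""
    der = ssl.PEM_cert_to_DER_cert(pem_certificate)  # PEM text -> DER bytes
    return hashlib.sha384(b"\x00" * 48 + hashlib.sha384(der).digest()).digest()
```

For example, reading certificate.pem (the file passed to --signing-certificate) and printing `pcr8_from_pem(text).hex()` gives the value you would expect in the attestation document.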

Now, how do you validate the signature? The documentation instructs users to decrypt the payload from the COSE_Sign1 object to get the PCR index-value pair and compare the PCR value with the expected PCR. We think there is a terminology issue here and that they mean to verify the actual signature, and then extract the PCR from the payload and compare it with the expected one. However, we instead recommend reconstructing the COSE_Sign1 payload from the expected PCR and verifying the signature against that. That should save you from encountering bugs due to invalid parsing. (We discuss such bugs in the next section.)
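In COSE (RFC 8152), a COSE_Sign1 signature is computed over a CBOR-encoded Sig_structure: the array ["Signature1", protected header, external AAD, payload]. To reconstruct it, you encode the payload you expect yourself and rebuild those to-be-signed bytes. You then pass them to a signature verifier along with the signature and the signing certificate’s public key. The sketch below builds the bytes with a tiny hand-written CBOR encoder that handles only what is needed. Several pieces are assumptions supplied by the caller:
- `expected_payload`: how nitro-cli encodes the PCR payload is not specified here.
- `pinned_protected`: the protected header is pinned by the caller rather than parsed from the EIF.
- `verify`: a hypothetical callback, not a real library API.

```python
from typing import Callable


def _cbor_head(major: int, length: int) -> bytes:
    """CBOR initial byte(s) for a definite-length item of the given major type."""
    if length < 24:
        return bytes([(major << 5) | length])
    for info, size in ((24, 1), (25, 2), (26, 4), (27, 8)):
        if length < 1 << (8 * size):
            return bytes([(major << 5) | info]) + length.to_bytes(size, "big")
    raise ValueError("length does not fit in 64 bits")


def cbor_bstr(data: bytes) -> bytes:
    """CBOR byte string (major type 2)."""
    return _cbor_head(2, len(data)) + data


def cbor_tstr(text: str) -> bytes:
    """CBOR text string (major type 3)."""
    raw = text.encode("utf-8")
    return _cbor_head(3, len(raw)) + raw


def sign1_to_be_signed(protected: bytes, payload: bytes, external_aad: bytes = b"") -> bytes:
    """Encode Sig_structure = ["Signature1", protected, external_aad, payload] (RFC 8152)."""
    fields = [cbor_tstr("Signature1"), cbor_bstr(protected), cbor_bstr(external_aad), cbor_bstr(payload)]
    return _cbor_head(4, len(fields)) + b"".join(fields)  # major type 4: array


def verify_expected(
    expected_payload: bytes,
    pinned_protected: bytes,
    signature: bytes,
    verify: Callable[[bytes, bytes], bool],
) -> bool:
    """Check the signature over bytes we rebuilt ourselves instead of a parsed payload.

    `verify(message, signature)` is an assumed callback that checks the signature
    with the public key of the signing certificate.
    """
    return verify(sign1_to_be_signed(pinned_protected, expected_payload), signature)
```

For ECDSA, COSE carries the signature as the raw concatenation r || s. A callback built on a library that expects DER-encoded ECDSA signatures therefore has to convert it first.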

The official way to sign an enclave is to use the nitro-cli tool on an EC2 instance (figure 6). That forces you to push a private key to the instance (figure 7). That’s really not an ideal way to handle private keys. Even worse, the AWS documentation doesn’t instruct users to protect their keys with passphrases…

But there’s nothing stopping you from running nitro-cli outside of an EC2 instance, or even from running it in an offline environment. After all, the EIF is just a bunch of headers and binary blobs; the Nitro Hypervisor is not required to build and sign the image. The AWS repository even has an example of building an EIF in a Docker container. Moreover, there is a pending PR in the aws-nitro-enclaves-cli repository that will enable EIFs to be signed with KMS once merged.

Figure 6: The AWS documentation states that nitro-cli must be run on an EC2 instance.

nitro-cli build-enclave --docker-uri hello-world:latest --output-file hello-signed.eif --private-key key_name.pem --signing-certificate certificate.pem

Figure 7: Private keys must be stored in a local file.

Overall, we recommend not following the AWS documentation when it comes to signing EIFs. Instead, here are a few options to ensure that EIFs are signed securely (in order of recommendation):

- Push your private key and Docker image to an offline environment and sign the EIF there.

- Modify nitro-cli to enable more secure signing (with HSM, KMS, keyring, etc.).

- Wait for the nitro-cli PR that will enable EIFs to be signed with KMS to be merged; that way, you won’t have to modify nitro-cli yourself to do so.

- Push your private key to your EC2 instance and sign the EIF there, as AWS recommends, but protect the key with a passphrase first. (nitro-cli will ask for the passphrase while building the EIF.)

How do you parse?

Now that we know what an enclave image looks like, we’ll discuss how it is parsed. If you are familiar with security bugs in file format parsers, you’ve probably already spotted ambiguities and potential issues in the parsing process.

There are two EIF parsers:

- Public one: The nitro-cli describe-eif command

- Private one: Used by the Nitro Hypervisor to start an enclave

The parser we care about is the private one: it provides the Hypervisor with an actual view of the EIF. However, it is not open sourced, and there is no specification for the EIF format, so we don’t have any insight into how the private parser actually works. To get some understanding of the private parser’s behavior, we have to treat it as a black box and run experiments on it. By modifying valid EIFs and trying to run them on the Hypervisor, I came up with some answers to the following questions, some of which I included in an issue I submitted to the aws-nitro-enclaves-image-format repo:

- Is the CRC32 checksum verified? Yes. The enclave does not boot if the CRC32 checksum is invalid.

- Can an EIF have more than two ramdisk sections? Yes. All ramdisk sections are just concatenated together.

- Can you truncate (corrupt) a cpio archive in a ramdisk section? Yes! Some cpio errors are ignored by the Hypervisor.

- Can an EIF have more than a single kernel or cmdline section? Probably not, but it’s hard to ensure that something is not possible.

- Can you swap sections of different types (e.g., put the cmdline section before the kernel section)? Yes. Doing so changes the PCR-0 measurement.

- Are the section sizes indicated in the EIF header metadata validated against the sizes indicated in the sections’ actual headers? Yes.

- Can an EIF contain data between its sections? Yes. If so, the CRC32 checksum is also computed over that data.

- Is an EIF header’s num_sections field validated against items in the section sizes and section offsets? No. Items after num_sections are ignored.

- Do the sizes in the section_sizes array include section headers? No. The array stores data lengths only.

- Can an EIF have more than one PCR index-value tuple in the signature section? No.

- Can an EIF use an empty PCR index-value vector? No.

- Can you sign a PCR other than PCR-0? It’s complicated, but no. The PCR index can be arbitrary data (not even a number), but the value must be a PCR-0 value.

- Can an EIF store more than one certificate-signature pair in the signature section? Yes.

- Are all certificate-signature pairs validated? No. Only the first pair is validated.

If you compare the findings above with the nitro-cli parser code, you will see that the two parsers work differently. Perhaps the most important difference is that the nitro-cli parser does not respect the header metadata like num_sections and the section offsets. Therefore, the nitro-cli parser may produce different measurements than the Hypervisor parser. We recommend not using the nitro-cli describe-eif command to learn the PCRs of untrusted EIFs. Instead, build your EIFs from sources or run them and use the nitro-cli describe-enclaves command. That command consults the Hypervisor for measurements.
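A toy image shows how such a parser differential arises. The sketch below uses a deliberately simplified, hypothetical layout rather than the real EIF encoding: each section header is a 2-byte type plus an 8-byte data length, big-endian. It compares two parsers:
- One that follows num_sections, the offsets, and the data-only sizes, as the experiments suggest the Hypervisor does.
- One that just walks sections back to back, as a stand-in for a parser that ignores that metadata.

```python
import struct

HDR = struct.Struct(">HQ")  # hypothetical section header: type (u16), data length (u64)
RAMDISK = 3                 # hypothetical type code


def build(sections: list[tuple[int, bytes]]) -> tuple[bytes, list[int], list[int]]:
    """Serialize sections back to back; return body, offsets, and data sizes."""
    body, offsets, sizes = b"", [], []
    for kind, data in sections:
        offsets.append(len(body))
        sizes.append(len(data))  # sizes count data only, not section headers
        body += HDR.pack(kind, len(data)) + data
    return body, offsets, sizes


def parse_by_header(body: bytes, num_sections: int, offsets: list[int], sizes: list[int]) -> list[tuple[int, bytes]]:
    """Follow num_sections/offsets/sizes; table entries past num_sections are ignored."""
    out = []
    for i in range(num_sections):
        kind, size = HDR.unpack_from(body, offsets[i])
        if size != sizes[i]:
            raise ValueError(f"section {i}: size mismatch")
        start = offsets[i] + HDR.size
        out.append((kind, body[start:start + size]))
    return out


def parse_sequentially(body: bytes) -> list[tuple[int, bytes]]:
    """Ignore the header table and read sections until the data runs out."""
    out, pos = [], 0
    while pos < len(body):
        kind, size = HDR.unpack_from(body, pos)
        start = pos + HDR.size
        out.append((kind, body[start:start + size]))
        pos = start + size
    return out


body, offsets, sizes = build([(RAMDISK, b"bootstrap"), (RAMDISK, b"user"), (RAMDISK, b"extra")])
counted = parse_by_header(body, 2, offsets, sizes)  # header claims only two sections
walked = parse_sequentially(body)
print(len(counted), len(walked))  # 2 3
```

The extra ramdisk is invisible to the metadata-following parser but would be measured by the sequential one. The two parsers would therefore report different PCRs for the same file.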

Why is this relevant?

We run code in TEEs like AWS Nitro Enclaves when that code is highly security-critical, so we have to get the details right. But the documentation on AWS Nitro Enclaves is severely lacking, making it hard to understand those details. The feature also lacks mature tooling and contains several security footguns. So if you’re going to use AWS Nitro Enclaves, be sure to follow the checklist provided at the beginning of this post! And if you need further guidance, our AppSec team holds regular office hours. Contact us to schedule a meeting where you can ask our experts any questions.

To learn more about AWS, check out Scott Arciszewski’s blog post “Cloud cryptography demystified: Amazon Web Services” and Joop van de Pol’s blog post “A trail of flipping bits” about TEE-specific issues.

*** This is a Security Bloggers Network syndicated blog from Trail of Bits Blog authored by Trail of Bits. Read the original post at: https://blog.trailofbits.com/2024/02/16/a-few-notes-on-aws-nitro-enclaves-images-and-attestation/
