03/04/2024

Email continues to be the largest attack vector that attackers use to try to compromise or extort organizations. Given the frequency with which email is used for business communication, phishing attacks have remained ubiquitous. As tools available to attackers have evolved, so have the ways in which attackers have targeted users while skirting security protections. The release of several artificial intelligence (AI) large language models (LLMs) has created a mad scramble to discover novel applications of generative AI capabilities and has consumed the minds of security researchers. One application of this capability is creating phishing attack content.

Phishing relies on the attacker seeming authentic. Over the years, we’ve observed that there are two distinct forms of authenticity: visual and organizational. Visually authentic attacks use logos, images, and the like to establish trust, while organizationally authentic campaigns use business dynamics and social relationships to drive their success. LLMs can be employed by attackers to make their emails seem more authentic in several ways. A common technique is for attackers to use LLMs to translate and revise emails they’ve written into messages that are more superficially convincing. More sophisticated attacks pair LLMs with personal data harvested from compromised accounts to write personalized, organizationally-authentic messages.

For example, WormGPT has the ability to take a poorly written email and recreate it to have better use of grammar, flow, and voice. The output is a fluent, well-written message that can more easily pass as authentic. Threat actors within discussion forums are encouraged to create rough drafts in their native language and let the LLM do its work.

One form of phishing attack that benefits from LLMs, and that can have devastating financial impact, is the Business Email Compromise (BEC) attack. During these attacks, malicious actors attempt to dupe their victims into sending payment for fraudulent invoices; LLMs can help make these messages sound more organizationally authentic. And while BEC attacks are top of mind for organizations that wish to stop the unauthorized egress of funds, LLMs can be used to craft other types of phishing messages as well.

Yet these LLM-crafted messages still rely on the user performing an action, like reading a fraudulent invoice or interacting with a link, which can’t be spoofed so easily. And every LLM-written email is still an email, containing an array of other signals like sender reputation, correspondence patterns, and metadata bundled with each message. With the right mitigation strategy and tools in place, LLM-enhanced attacks can be reliably stopped.

While the popularity of ChatGPT has thrust LLMs into the recent spotlight, these kinds of models are not new; Cloudflare has been training its models to defend against LLM-enhanced attacks for years. Our models’ ability to look at all components of an email ensures that Cloudflare customers are already protected and will continue to be in the future — because the machine learning systems our threat research teams have developed through analyzing billions of messages aren't deceived by nicely-worded emails.

Generative AI threats and tradeoffs

The riskiest AI-generated attacks are personalized using data harvested prior to the attack. Threat actors collect this information during more traditional account compromise operations against their victims and iterate through this process. Once they have sufficient information to conduct their attack, they proceed. The result is highly targeted and highly specific. The benefit of AI is scale of operations; however, mass data collection is necessary to create messages that accurately impersonate the person the attacker is pretending to be.

While AI-generated attacks can have advantages in personalization and scalability, their effectiveness hinges on having sufficient samples for authenticity. Traditional threat actors can also employ social engineering tactics to achieve similar results, albeit without the efficiency and scalability of AI. The fundamental limitations of opportunity and timing, as we will discuss in the next section, still apply to all attackers — regardless of the technology used.

To defend against such attacks, organizations must adopt a multi-layer approach to cybersecurity. This includes training employees in security awareness, employing advanced threat detection systems that combine AI and traditional techniques, and constantly updating security practices to protect against both AI and traditional phishing attacks.

Threat actors can use AI to generate attacks, but doing so comes with tradeoffs. The number of attacks they can successfully conduct is limited by the number of opportunities at their disposal and by the data they have available to craft convincing messages. They require both access and opportunity; without both, the attacks are unlikely to succeed.

BEC attacks and LLMs

BEC attacks are top of mind for organizations because they can allow attackers to steal a significant amount of funds from the target. Since BEC attacks are primarily based on text, it may seem like LLMs are about to open the floodgates. However, the reality is much different. The major obstacle limiting this proposition is opportunity. We define opportunity as a window in time when events align to allow for an exploitable condition and for that condition to be exploited. For example, an attacker might use data from a breach to identify an opportunity in a company’s vendor payment schedule. A threat actor can have the motive, means, and resources to pull off an authentic-looking BEC attack, but without opportunity their attacks will fall flat. While we have observed threat actors attempt volumetric attacks by essentially cold calling targets, such attacks are unsuccessful the vast majority of the time. This is in line with the premise of BEC attacks, which depend on some component of social engineering.

As an analogy, if someone were to walk through your business’s front door and demand that you pay them \$20,000 without any context, a reasonable, logical person would not pay. A successful BEC attack would need to bypass this step of validation and verification, which LLMs can offer little assistance with. While LLMs can generate text that appears convincingly authentic, they cannot establish a business relationship with a company or manufacture an invoice that matches the appearance and style of those already in use. The largest BEC payments are a product of not only account compromise but also invoice compromise, the latter of which is necessary for the attacker to provide convincing, fraudulent invoices to victims.

At Cloudflare, we are uniquely situated to provide this analysis, as our email security products scrutinize hundreds of millions of messages every month. In analyzing these attacks, we have found that trends other than text characterize a BEC attack, with our data suggesting that the vast majority of BEC attacks use compromised accounts. Attackers with access to a compromised account can harvest data to craft more authentic messages that can bypass most security checks because they come from a valid email address. Over the last year, 80% of BEC attacks involving \$10K or more involved compromised accounts. Of those, 75% conducted thread hijacking and redirected the thread to newly registered domains. This is in keeping with observations that the vast majority of “successful” attacks, meaning those in which the threat actor successfully compromised their target, leverage a lookalike domain. This fraudulent domain is almost always recently registered. We also see that 55% of these messages involving over \$10K in payment attempted to change ACH payment details.

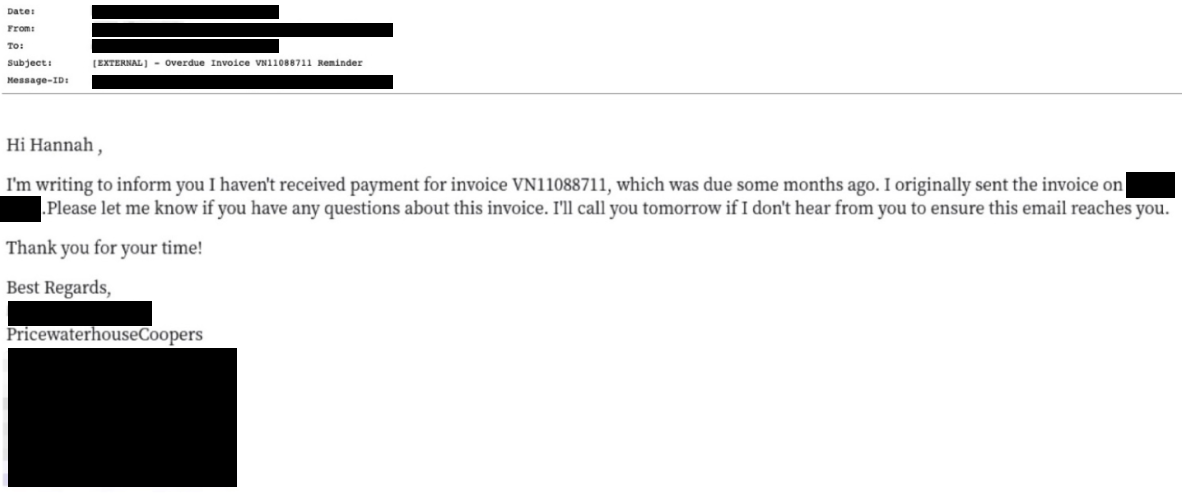
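
To make the idea of a lookalike domain concrete, here is a minimal Python sketch that compares a sending domain against a short list of known brand domains using a string-similarity ratio. This is an illustration only and not a description of how Cloudflare's models work: the brand list, the `closest_brand` and `is_lookalike` helpers, and the 0.85 threshold are all assumptions made for the example.

```python
from difflib import SequenceMatcher

# Illustrative brand list; a real system would use a much larger, curated set.
KNOWN_BRANDS = ["paypal.com", "microsoft.com", "pwc.com"]


def closest_brand(domain: str, brands=KNOWN_BRANDS):
    """Return the brand domain most similar to `domain` and its similarity ratio."""
    domain = domain.lower()
    best = max(brands, key=lambda b: SequenceMatcher(None, domain, b).ratio())
    return best, SequenceMatcher(None, domain, best).ratio()


def is_lookalike(domain: str, threshold: float = 0.85) -> bool:
    """Flag domains that are nearly, but not exactly, a known brand domain.

    The threshold is an arbitrary assumption chosen for this sketch.
    """
    brand, ratio = closest_brand(domain)
    return domain.lower() != brand and ratio >= threshold


if __name__ == "__main__":
    for candidate in ["paypa1.com", "paypal.com", "example.org"]:
        print(candidate, is_lookalike(candidate))
```

A similarity ratio on its own is a weak signal, since it misses homoglyphs and added words such as "-billing". That is one reason to pair it with other properties, such as how recently the domain was registered.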

We can see an example of how this may accumulate in a BEC attack below.

The text within the message does not contain any grammatical errors and is easily readable, yet our sentiment models triggered on the text, detecting that there was a sense of urgency in the sentiment in combination with an invoice — a common pattern employed by attackers. However, there are many other things in this message that triggered different models. For example, the attacker is pretending to be from PricewaterhouseCoopers, but there is a mismatch in the domain from which this email was sent. We also noticed that the sending domain was recently registered, alerting us that this message may not be legitimate. Finally, one of our models generates a social graph unique to each customer based on their communication patterns. This graph provides information about whom each user communicates with and about what. This model flagged that, given the fresh history of this communication, this message was not business as usual. All the signals above plus the outputs of our sentiment models led our analysis engine to conclude that this was a malicious message and to not allow the recipient of this message to interact with it.
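
The way several independent signals add up to a verdict can be sketched in a few lines of Python. Everything here is hypothetical: the `MessageSignals` fields, the weights, the 30-day window, and the 0.6 threshold are assumptions chosen to mirror the signals described above, not the logic of Cloudflare's analysis engine.

```python
from dataclasses import dataclass
from datetime import date


@dataclass
class MessageSignals:
    """Hypothetical per-message signals; field names are illustrative only."""
    urgency_score: float             # 0.0-1.0, e.g. from a sentiment model
    mentions_invoice: bool
    claimed_brand_domain: str        # domain the sender claims to represent
    sending_domain: str              # domain the message was actually sent from
    domain_registered_on: date
    prior_messages_from_sender: int  # history in the recipient's social graph


def risk_score(sig: MessageSignals, today: date) -> float:
    """Combine independent signals into a score in [0, 1].

    The weights below are arbitrary assumptions for illustration.
    """
    score = 0.0
    if sig.urgency_score >= 0.7 and sig.mentions_invoice:
        score += 0.25  # urgent tone paired with a payment request
    if sig.sending_domain.lower() != sig.claimed_brand_domain.lower():
        score += 0.30  # claimed brand and sending domain disagree
    if (today - sig.domain_registered_on).days < 30:
        score += 0.25  # sending domain was registered recently
    if sig.prior_messages_from_sender == 0:
        score += 0.20  # no communication history with this sender
    return min(score, 1.0)


def verdict(score: float, threshold: float = 0.6) -> str:
    """Map a score to a label using an assumed threshold."""
    return "malicious" if score >= threshold else "clean"
```

No single check decides the outcome in this sketch. A well-written body lowers none of the other scores, so fluent text alone does not help a message get through.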

Generative AI is continuing to change and improve, so there’s still a lot to be discovered in this arena. While the advent of AI-created BEC attacks may cause an ultimate increase in the number of attacks seen in the wild, we do not expect their success rate to rise for organizations with robust security solutions and processes in place.

Phishing attack trends

In August of last year, we published our 2023 Phishing Report. That year, Cloudflare processed approximately 13 billion emails, which included blocking approximately 250 million malicious messages from reaching customers’ inboxes. Even though it was the year of ChatGPT, our analysis saw that attacks still revolved around long-standing vectors like malicious links.

Most attackers were still trying to get users to either click on a link or download a malicious file. And as discussed earlier, while Generative AI can help with making a readable and convincing message, it cannot help attackers with obfuscating these aspects of their attack.

Cloudflare’s email security models take a sophisticated approach to examining each link and attachment they encounter. Links are crawled and scrutinized based on information about the domain itself as well as on-page elements and branding. Our crawlers also check for input fields in order to see if the link is a potential credential harvester. And for attackers who put their weaponized links behind redirects or geographical locks, our crawlers can leverage the Cloudflare network to bypass any roadblocks thrown our way.
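
As a rough illustration of the input-field check, the following Python sketch parses a fetched page with the standard library's `html.parser` and reports whether it contains a password field. The class and function names are invented for this example, and a real crawler would weigh many more on-page signals than this single one.

```python
from html.parser import HTMLParser


class CredentialFormFinder(HTMLParser):
    """Records form targets and whether the page asks for a password."""

    def __init__(self) -> None:
        super().__init__()
        self.has_password_field = False
        self.form_actions: list[str] = []

    def handle_starttag(self, tag, attrs):
        # HTMLParser lowercases tag and attribute names for us.
        attr = {name: (value or "") for name, value in attrs}
        if tag == "form":
            self.form_actions.append(attr.get("action", ""))
        elif tag == "input" and attr.get("type", "").lower() == "password":
            self.has_password_field = True


def looks_like_credential_harvester(html: str) -> bool:
    """Return True if the page contains at least one password input."""
    finder = CredentialFormFinder()
    finder.feed(html)
    return finder.has_password_field


if __name__ == "__main__":
    page = '<form action="/login"><input type="password" name="pw"></form>'
    print(looks_like_credential_harvester(page))
```

A password field is not malicious on its own, since every legitimate login page has one. The signal becomes meaningful when the page's branding does not match the domain that serves it.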

Our detection systems are similarly rigorous in handling attachments. For example, our systems know that some parts of an attachment can be easily faked, while others cannot. So our systems deconstruct attachments into their primitive components and check for abnormalities there. This allows us to scan for malicious files more accurately than traditional sandboxes, which can be bypassed by attackers.
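
One simple example of an easily faked part versus a harder-to-fake part is a file's extension versus its leading bytes. The Python sketch below compares the two. It is an assumption about what such a check could look like and is not taken from Cloudflare's systems; the helper names and the small signature table are illustrative.

```python
from typing import Optional

# Well-known leading byte signatures for a few common formats.
MAGIC_BYTES = {
    "pdf": b"%PDF-",
    "zip": b"PK\x03\x04",          # also the container for .docx and .xlsx
    "png": b"\x89PNG\r\n\x1a\n",
    "exe": b"MZ",                  # Windows executables
}

# Extensions whose content is really another container format.
CONTAINER_FOR = {"docx": "zip", "xlsx": "zip"}


def sniff_type(data: bytes) -> Optional[str]:
    """Guess the file type from its leading bytes, or None if unknown."""
    for name, magic in MAGIC_BYTES.items():
        if data.startswith(magic):
            return name
    return None


def extension_mismatch(filename: str, data: bytes) -> bool:
    """True when the declared extension disagrees with the sniffed content."""
    ext = filename.rsplit(".", 1)[-1].lower() if "." in filename else ""
    expected = CONTAINER_FOR.get(ext, ext)
    actual = sniff_type(data)
    return actual is not None and actual != expected


if __name__ == "__main__":
    print(extension_mismatch("invoice.pdf", b"MZ\x90\x00"))  # executable posing as a PDF
```

An attacker can rename a file freely, but the bytes must still form something the victim's machine will open or run, so the mismatch itself is informative.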

Attackers can use LLMs to craft a more convincing message to get users to take certain actions, but our scanning abilities catch malicious content and prevent the user from interacting with it.

Anatomy of an email

Emails contain information beyond the body and subject of the message. When building detections, we like to think of emails as having both mutable and immutable properties. Mutable properties like the body text can be easily faked while other mutable properties like sender IP address require more effort to fake. However, there are immutable properties like domain age of the sender and similarity of the domain to known brands that cannot be altered at all. For example, let's take a look at a message that I received.

While the message above is what the user sees, it is a small part of the larger content of the email. Below is a snippet of the message headers. This information is typically useless to a recipient (and most of it isn’t displayed by default) but it contains a treasure trove of information for us as defenders. For example, our detections can see all the preliminary checks for DMARC, SPF, and DKIM. These let us know whether this email was allowed to be sent on behalf of the purported sender and if it was altered before reaching our inbox. Our models can also see the client IP address of the sender and use this to check their reputation. We can also see which domain the email was sent from and check if it matches the branding included in the message.

As you can see, the body and subject of a message are a small portion of what makes an email an email. When performing analysis on emails, our models holistically look at every aspect of a message to make an assessment of its safety. Some of our models do focus their analysis on the body of the message for indicators like sentiment, but the ultimate assessment of the message’s risk is performed in concert with models evaluating every aspect of the email. All this information is surfaced to the security practitioners that are using our products.
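
For readers who want to look at these header checks themselves, here is a small Python sketch that pulls the SPF, DKIM, and DMARC results out of an `Authentication-Results` header using the standard `email` package. The sample message and the `auth_results` helper are invented for illustration; the sample is not the message discussed above, and real headers vary by receiving mail server.

```python
import re
from email import message_from_string

# Fabricated sample message for demonstration purposes only.
RAW = """\
Authentication-Results: mx.example.net;
 spf=pass smtp.mailfrom=billing.example.com;
 dkim=fail header.d=billing.example.com;
 dmarc=fail header.from=example.com
From: Accounts <accounts@example.com>
Subject: Invoice overdue

Please pay today.
"""


def auth_results(raw_message: str) -> dict[str, str]:
    """Extract spf/dkim/dmarc verdicts from the Authentication-Results header."""
    msg = message_from_string(raw_message)
    header = msg.get("Authentication-Results", "")
    return {
        m.group(1).lower(): m.group(2).lower()
        for m in re.finditer(r"\b(spf|dkim|dmarc)=(\w+)", header, re.IGNORECASE)
    }


if __name__ == "__main__":
    print(auth_results(RAW))
```

In this fabricated sample, SPF passes while DKIM and DMARC fail. That is the kind of inconsistency a recipient never sees but that a detection system can act on.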

Cloudflare’s email security models

Our philosophy of using multiple models trained on different properties of messages culminates in what we call our SPARSE engine. In the 2023 Forrester Wave™ for Enterprise Email Security report, the analysts mentioned our ability to catch phishing emails using our SPARSE engine, saying: “Cloudflare uses its preemptive crawling approach to discover phishing campaign infrastructure as it’s being built. Its Small Pattern Analytics Engine (SPARSE) combines multiple machine learning models, including natural language modeling, sentiment and structural analysis, and trust graphs” [1].

Our SPARSE engine is continually updated using messages we observe. Given our ability to analyze billions of messages a year, we are able to detect trends earlier and feed these into our models to improve their efficacy. A recent example is the rise in QR code attacks that we noticed in late 2023. Attackers deployed different techniques to obfuscate the QR code so that OCR scanners could not scan the image but cellphone cameras would still direct the user to the malicious link. These techniques included making the image so small that scanners could not read it clearly, and pixel-shifting the image. However, feeding these messages into our models trained them to look at all the qualities of the emails sent from those campaigns. With this combination of data, we were able to create detections to catch these campaigns before they hit customers’ inboxes.

Our approach to preemptive scanning makes us resistant to oscillations of threat actor behavior. Even though the use of LLMs is a tool that attackers are deploying more frequently today, there will be others in the future, and we will be able to defend our customers from those threats as well.

Future of email phishing

Securing email inboxes is a difficult task given the creative ways attackers try to phish users. This field is ever evolving and will continue to change dramatically as new technologies become accessible to the public. Trends like the use of generative AI will continue to change, but our methodology and approach to building email detections keep our customers protected.

If you are interested in how Cloudflare’s Cloud Email Security works to protect your organization against phishing threats, please reach out to your Cloudflare contact and set up a free Phishing Risk Assessment. Microsoft 365 customers can also run our complimentary retro scan to see what phishing emails their current solution has missed. More information on that can be found in our recent blog post.

Want to learn more about our solution? Sign up for a complimentary Phishing Risk Assessment.

[1] Source: The Forrester Wave™: Enterprise Email Security, Q2, 2023

The Forrester Wave™ is copyrighted by Forrester Research, Inc. Forrester and Forrester Wave are trademarks of Forrester Research, Inc. The Forrester Wave is a graphical representation of Forrester's call on a market and is plotted using a detailed spreadsheet with exposed scores, weightings, and comments. Forrester does not endorse any vendor, product, or service depicted in the Forrester Wave. Information is based on best available resources. Opinions reflect judgment at the time and are subject to change.
