PartitionAlloc内存分配器是笔者迄今为止读过的最复杂的内存分配器,大约有3w+行代码。

1.1 PartitionRoot

PartitionRoot对象是PartitionAlloc内存管理总的对象入口。

partition_allocator/partition_root.hstruct PA_ALIGNAS(64) PA_COMPONENT_EXPORT(PARTITION_ALLOC) PartitionRoot {Bucket buckets[internal::kNumBuckets] = {};Bucket sentinel_bucket{};uintptr_t next_super_page = 0;uintptr_t next_partition_page = 0;uintptr_t next_partition_page_end = 0;SuperPageExtentEntry* current_extent = nullptr;SuperPageExtentEntry* first_extent = nullptr;DirectMapExtent* direct_map_list PA_GUARDED_BY(lock_) = nullptr;void Init(PartitionOptions);}

buckets是个Bucket类型的数组,它的每个元素代表特定的slot大小。

Init函数用于初始化PartitionAddressSpace以及ThreadCache。

1.2 PartitionAddressSpace

partition_allocator/partition_address_space.hclass PA_COMPONENT_EXPORT(PARTITION_ALLOC) PartitionAddressSpace {PA_ALWAYS_INLINE static std::pair<pool_handle, uintptr_t> GetPoolAndOffset(uintptr_t address);struct PoolSetup {union {struct {uintptr_t regular_pool_base_address_;uintptr_t brp_pool_base_address_;uintptr_t configurable_pool_base_address_;#if BUILDFLAG(ENABLE_PKEYS)uintptr_t pkey_pool_base_address_;#endif#if PA_CONFIG(DYNAMICALLY_SELECT_POOL_SIZE)uintptr_t regular_pool_base_mask_;uintptr_t brp_pool_base_mask_;#if PA_CONFIG(GLUE_CORE_POOLS)uintptr_t core_pools_base_mask_;#endif#endif // PA_CONFIG(DYNAMICALLY_SELECT_POOL_SIZE)uintptr_t configurable_pool_base_mask_;#if BUILDFLAG(ENABLE_PKEYS)int pkey_;#endif};#if BUILDFLAG(ENABLE_PKEYS)char one_page_[SystemPageSize()];#elsechar one_cacheline_[kPartitionCachelineSize];#endif};static PoolSetup setup_ PA_CONSTINIT;}

setup_字段保存了每个pool的类型和起始地址,通过GetPoolAndOffset来获取。

1.3 AddressPoolManager

PartitionAlloc通过POOL的概念管理内存池,一个进程有kNumPools个类型的pool,注意内存池是对整个进程共有的,不是线程专有。

enum pool_handle : unsigned {kNullPoolHandle = 0u,kRegularPoolHandle,kBRPPoolHandle,#if BUILDFLAG(HAS_64_BIT_POINTERS)kConfigurablePoolHandle,#endif#if BUILDFLAG(ENABLE_PKEYS)kPkeyPoolHandle,#endifkMaxPoolHandle};constexpr size_t kNumPools = kMaxPoolHandle - 1;

kBRPPoolHandle用于32位,kPkeyPoolHandle用于x86提供的pkey保护,kRegularPoolHandle和kConfigurablePoolHandle是我们关心的。

Pool依附于AddressPoolManager结构体内:

class PA_COMPONENT_EXPORT(PARTITION_ALLOC) AddressPoolManager {class Pool {public:uintptr_t address_begin_ = 0;bool TryReserveChunk(uintptr_t address, size_t size);struct {char pad_[PA_PKEY_ARRAY_PAD_SZ(Pool, kNumPools)] = {};Pool pools_[kNumPools];char pad_after_[PA_PKEY_FILL_PAGE_SZ(sizeof(Pool))] = {};} aligned_pools_ PA_PKEY_ALIGN;}void Add(pool_handle handle, uintptr_t address, size_t length);uintptr_t Reserve(pool_handle handle, uintptr_t requested_address, size_t length);}

Reserve函数通过mmap为pool保留一段特定大小的内存。

Add函数初始化一个pool的地址。

void AddressPoolManager::Add(pool_handle handle, uintptr_t ptr, size_t length) {Pool* pool = GetPool(handle);PA_CHECK(!pool->IsInitialized());pool->Initialize(ptr, length);}void AddressPoolManager::Pool::Initialize(uintptr_t ptr, size_t length) {address_begin_ = ptr;address_end_ = ptr + length;}

Add函数通过上面介绍的PartitionAddressSpace:init对所有的pool进行初始化赋值:

void PartitionAddressSpace::Init() {setup_.regular_pool_base_address_ =AllocPages(regular_pool_size, regular_pool_size,PageAccessibilityConfiguration(PageAccessibilityConfiguration::kInaccessible),PageTag::kPartitionAlloc, regular_pool_fd);AddressPoolManager::GetInstance().Add(kRegularPoolHandle, setup_.regular_pool_base_address_, regular_pool_size);}

1.4 PartitionBucket

partition_allocator/partition_bucket.hstruct PartitionBucket {SlotSpanMetadata<thread_safe>* active_slot_spans_head;SlotSpanMetadata<thread_safe>* empty_slot_spans_head;SlotSpanMetadata<thread_safe>* decommitted_slot_spans_head;uint32_t num_full_slot_spans : 24;PA_ALWAYS_INLINE uintptr_t AllocNewSuperPageSpan(PartitionRoot<thread_safe>* root,size_t super_page_count,unsigned int flags) PA_EXCLUSIVE_LOCKS_REQUIRED(root->lock_);PA_ALWAYS_INLINE SlotSpanMetadata<thread_safe>* AllocNewSlotSpan(PartitionRoot<thread_safe>* root,unsigned int flags,size_t slot_span_alignment) PA_EXCLUSIVE_LOCKS_REQUIRED(root->lock_);PA_ALWAYS_INLINE uintptr_t AllocNewSuperPage(PartitionRoot<thread_safe>* root,unsigned int flags)PA_EXCLUSIVE_LOCKS_REQUIRED(root->lock_);PA_ALWAYS_INLINE void InitializeSlotSpan(SlotSpanMetadata<thread_safe>* slot_span);}

一个bucket用于分配一个特定大小的内存块。Bucket由一个个类型为SlotSpanMetadata的slotspan链接起来,active_slot_spans_head链接当前活跃的slotspan,即半满状态的slotspan,empty_slot_spans_head链接为空的slotspan,decommitted_slot_spans_head为一种特殊的空slotspan,它里面的内存页处于decommitted状态,这是典型的slab结构。

1.5 SlotSpanMetadata

partition_allocator/partition_page.hstruct SlotSpanMetadata {private:PartitionFreelistEntry* freelist_head = nullptr;public:SlotSpanMetadata<thread_safe>* next_slot_span = nullptr;PA_ALWAYS_INLINE PartitionFreelistEntry* PopForAlloc(size_t size);PA_ALWAYS_INLINE PartitionFreelistEntry* get_freelist_head() const {return freelist_head;}PA_ALWAYS_INLINE void SetFreelistHead(PartitionFreelistEntry* new_head);}

SlotSpanMetadata是所有slot的管理结构,每个slot是由PartitionFreelistEntry表示。

partition_allocator/partition_freelist_entry.hclass PartitionFreelistEntry {PA_ALWAYS_INLINE void SetNext(PartitionFreelistEntry* entry) {encoded_next_ = EncodedPartitionFreelistEntryPtr(entry);}EncodedPartitionFreelistEntryPtr encoded_next_;}

SetNext设置下一个next entry。

class EncodedPartitionFreelistEntryPtr {private:PA_ALWAYS_INLINE constexpr explicit EncodedPartitionFreelistEntryPtr(std::nullptr_t): encoded_(Transform(0)) {}PA_ALWAYS_INLINE explicit EncodedPartitionFreelistEntryPtr(void* ptr): encoded_(Transform(reinterpret_cast<uintptr_t>(ptr))) {}PA_ALWAYS_INLINE PartitionFreelistEntry* Decode() const {return reinterpret_cast<PartitionFreelistEntry*>(Transform(encoded_));}PA_ALWAYS_INLINE constexpr uintptr_t Inverted() const { return ~encoded_; }PA_ALWAYS_INLINE constexpr void Override(uintptr_t encoded) {encoded_ = encoded;}PA_ALWAYS_INLINE constexpr explicit operator bool() const { return encoded_; }#if defined(ARCH_CPU_BIG_ENDIAN)uintptr_t transformed = ~address;#elseuintptr_t transformed = ReverseBytes(address);#endifreturn transformed;}uintptr_t encoded_;friend PartitionFreelistEntry;};

EncodedPartitionFreelistEntryPtr将entry进行了一些编码转换。

ProvisionMoreSlotsAndAllocOne用来初始化一个slotspan。

PA_ALWAYS_INLINE uintptr_tPartitionBucket<thread_safe>::ProvisionMoreSlotsAndAllocOne(PartitionRoot<thread_safe>* root,SlotSpanMetadata<thread_safe>* slot_span) {size_t num_slots = slot_span->num_unprovisioned_slots;

需要扩展的slot数目。

uintptr_t slot_span_start =SlotSpanMetadata<thread_safe>::ToSlotSpanStart(slot_span);

通过slot_span换算出第一个slot的内存地址。

PartitionFreelistEntry* prev_entry = nullptr;uintptr_t next_slot_end = next_slot + slot_size;size_t free_list_entries_added = 0;while (next_slot_end <= commit_end) {void* next_slot_ptr;if (PA_LIKELY(slot_size <= kMaxMemoryTaggingSize)) {next_slot_ptr = TagMemoryRangeRandomly(next_slot, slot_size);} else {next_slot_ptr = reinterpret_cast<void*>(next_slot);}auto* entry = PartitionFreelistEntry::EmplaceAndInitNull(next_slot_ptr);if (!slot_span->get_freelist_head()) {PA_DCHECK(!prev_entry);PA_DCHECK(!free_list_entries_added);slot_span->SetFreelistHead(entry);} else {PA_DCHECK(free_list_entries_added);prev_entry->SetNext(entry);}next_slot = next_slot_end;next_slot_end = next_slot + slot_size;prev_entry = entry;}}

循环调用SetNext填充下一个entry。

1.6 ThreadCache

每个线程有一个独立的ThreadCache,不需要上锁,以下是笔者提取出来的关键数据结构:

partition_allocator/thread_cache.hclass PA_COMPONENT_EXPORT(PARTITION_ALLOC) ThreadCache {static void Init(PartitionRoot<>* root);static ThreadCache* Get() {#if PA_CONFIG(THREAD_CACHE_FAST_TLS)return internal::g_thread_cache;#elsereturn reinterpret_cast<ThreadCache*>(internal::PartitionTlsGet(internal::g_thread_cache_key));#endistatic ThreadCache* Create(PartitionRoot<>* root);PA_ALWAYS_INLINE uintptr_t GetFromCache(size_t bucket_index,size_t* slot_size);void FillBucket(size_t bucket_index);ThreadCache* next_ PA_GUARDED_BY(ThreadCacheRegistry::GetLock());ThreadCache* prev_ PA_GUARDED_BY(ThreadCacheRegistry::GetLock());}

线程通过RegisterThreadCache注册自己的cache结构, 每个线程的cache通过双向链表链接。

GetFromCache函数从线程的当前cache中选取一个slot出来。

PA_ALWAYS_INLINE uintptr_t ThreadCache::GetFromCache(size_t bucket_index,size_t* slot_size) {auto& bucket = buckets_[bucket_index];internal::PartitionFreelistEntry* entry = bucket.freelist_head;internal::PartitionFreelistEntry* next =entry->GetNextForThreadCache<true>(bucket.slot_size);bucket.freelist_head = next;}

直接从bucket.freelist_head取出当前空闲的entry地址,并设置下一个空闲的entry。

FillBucket函数填充cache的bucket对象。

void ThreadCache::FillBucket(size_t bucket_index) {Bucket& bucket = buckets_[bucket_index];int count = std::max(1, bucket.limit.load(std::memory_order_relaxed) / kBatchFillRatio);for (int i = 0; i < count; i++) {uintptr_t slot_start = root_->AllocFromBucket(&root_->buckets[bucket_index],AllocFlags::kFastPathOrReturnNull | AllocFlags::kReturnNull,root_->buckets[bucket_index].slot_size /* raw_size */,internal::PartitionPageSize(), &usable_size, &is_already_zeroed);PutInBucket(bucket, slot_start);}

循环调用AllocFromBucket分配内存,调用PutInBucket填充进去。

PutInBucket函数将slot写入cache的buket对象里。

A_ALWAYS_INLINE void ThreadCache::PutInBucket(Bucket& bucket,uintptr_t slot_start) {slot_size_remaining_in_16_bytes = std::min(slot_size_remaining_in_16_bytes, distance_to_next_cacheline_in_16_bytes);static const uint32_t poison_16_bytes[4] = {0xbadbad00, 0xbadbad00,0xbadbad00, 0xbadbad00};#if PA_HAS_BUILTIN(__builtin_assume_aligned)void* slot_start_tagged = __builtin_assume_aligned(internal::SlotStartAddr2Ptr(slot_start), internal::kAlignment);#elsevoid* slot_start_tagged = internal::SlotStartAddr2Ptr(slot_start);#endifuint32_t* address_aligned = static_cast<uint32_t*>(slot_start_tagged);for (int i = 0; i < slot_size_remaining_in_16_bytes; i++) {memcpy(address_aligned, poison_16_bytes, sizeof(poison_16_bytes));address_aligned += 4;}

如果是x86_64架构,会将slot填充一些固定的posion值,防止UAF漏洞。作者在注释中说明只在cache中加入posion是为了提高性能。那么另一个问题arm64架构不使用cache posion的原因可能是内存大小问题了。

auto* entry = internal::PartitionFreelistEntry::EmplaceAndInitForThreadCache(slot_start, bucket.freelist_head);bucket.freelist_head = entry;}

将slot写入bucket.freelist_head。

2.1 DirectMapped

对于buckets数组中的内存块大小,PartitionAlloc根据其大小,可以直接使用叫做DirectMapped的技术,它要求待分配的内存大小不能超过2>>31 - 2mb大小,并且这部分内存直接使用mmap映射,使用ReservationOffsetTable对其进行引用。

partition_allocator/reservation_offset_table.hclass PA_COMPONENT_EXPORT(PARTITION_ALLOC) ReservationOffsetTable {struct _ReservationOffsetTable {uint16_t offsets[kReservationOffsetTableLength] = {};}struct _PaddedReservationOffsetTables {char pad_[PA_PKEY_ARRAY_PAD_SZ(_ReservationOffsetTable, kNumPools)] = {};struct _ReservationOffsetTable tables[kNumPools];char pad_after_[PA_PKEY_FILL_PAGE_SZ(sizeof(_ReservationOffsetTable))] = {};};static PA_CONSTINIT _PaddedReservationOffsetTablespadded_reservation_offset_tables_ PA_PKEY_ALIGN;}

offsets数组保存了一个DirectMapped内存块在对应的pool中的偏移。

PA_ALWAYS_INLINE uint16_t* GetReservationOffsetTable(pool_handle handle) {PA_DCHECK(kNullPoolHandle < handle && handle <= kNumPools);return ReservationOffsetTable::padded_reservation_offset_tables_.tables[handle - 1].offsets;}PA_ALWAYS_INLINE uint16_t* ReservationOffsetPointer(pool_handle pool,uintptr_t offset_in_pool) {size_t table_index = offset_in_pool >> kSuperPageShift;PA_DCHECK(table_index <ReservationOffsetTable::kReservationOffsetTableLength);return GetReservationOffsetTable(pool) + table_index;}

每个DirectMapped内存块通过双向链表链接,保存在root->direct_map_list。

DirectMapped内存结构如下:

2.2 Normal Map

SlotSpan中的一个slot通过FromAddr函数可以转为对应的SlotSpanMetadata管理结构。

PA_ALWAYS_INLINE PartitionPage<thread_safe>*PartitionPage<thread_safe>::FromAddr(uintptr_t address) {uintptr_t super_page = address & kSuperPageBaseMask;uintptr_t partition_page_index =(address & kSuperPageOffsetMask) >> PartitionPageShift();return PartitionSuperPageToMetadataArea<thread_safe>(super_page) +partition_page_index;}

话不多说,直接上图:

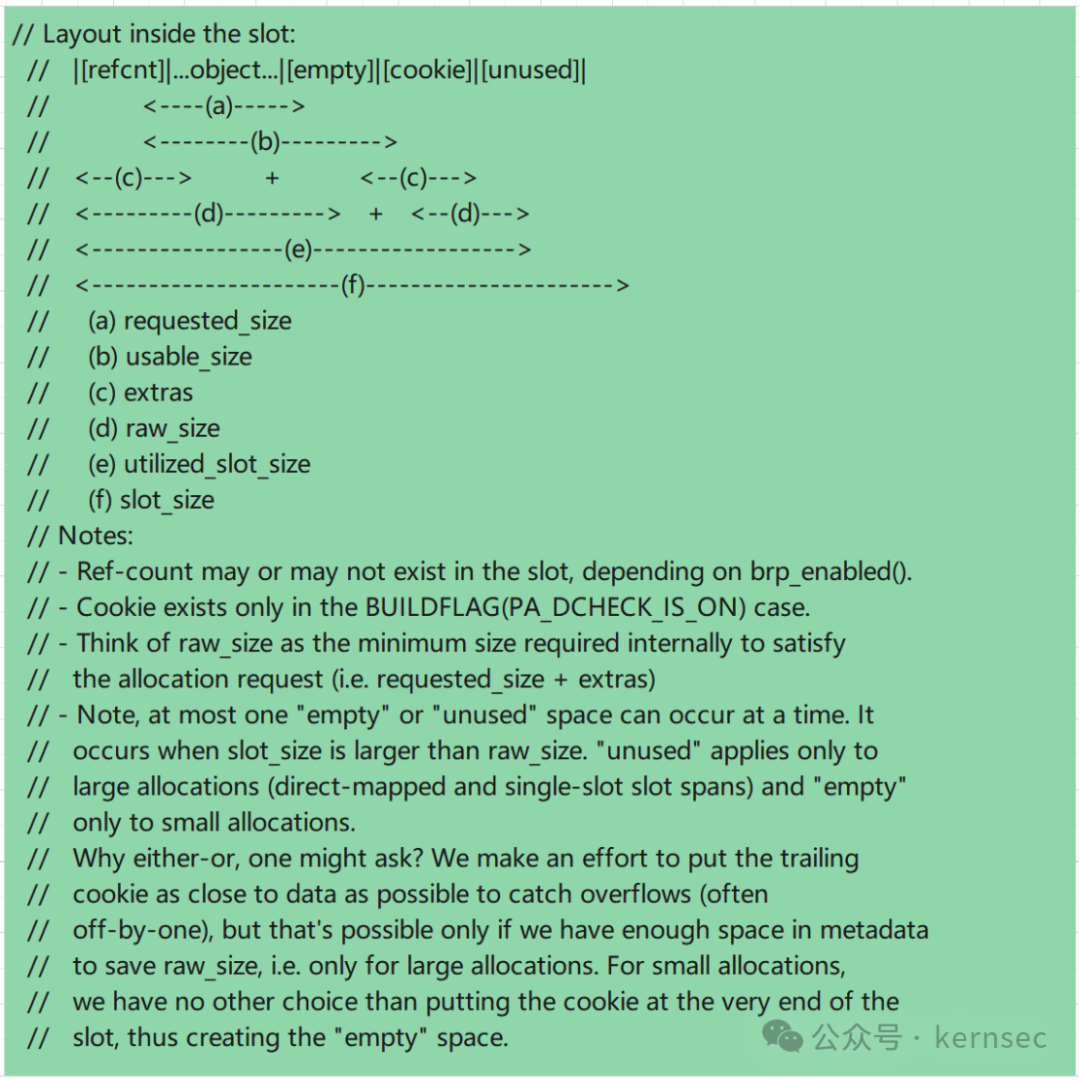

最终分配好的object在内存中的结构为:

如有侵权请联系:admin#unsafe.sh