2024-03-26 07:00:00 Author: blog.quarkslab.com

This second article describes how to convert a Silo into a Server Silo in order to create a Windows Container. In addition, it dives into certain Kernel side Silo mechanisms.

Introduction

In the previous article, Reversing Windows Container, episode I: Silo, we introduced the notion of Windows Containers, which are also called Server Silos.

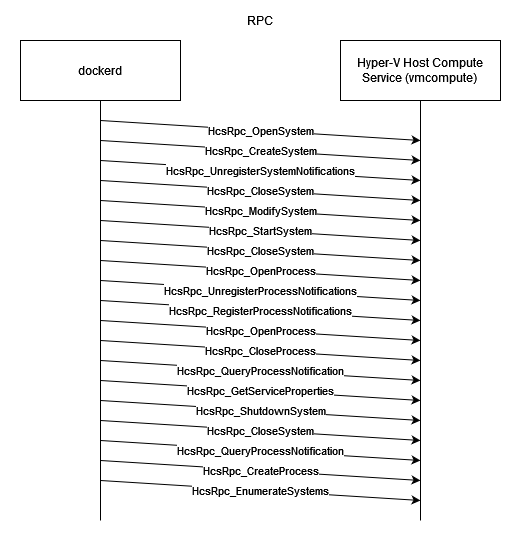

We have seen that Server Silos are a special kind of Silo and that Silos are super Windows Job Objects. We took Docker as a practical test case to describe step by step the process of creating a Silo, starting from the RPC sent by the application to the HCS (Host Compute Service). Finally, we wrapped up this first blog post by showing how to analyze the various actions carried out by this service, such as creating the Job Object (NtCreateJobObject) and customizing it (NtSetInformationJobObject).

In this article we will discuss how the Host Compute Service converts the newly created Silo into a Server Silo. Then, we will focus our attention on a few mechanisms in the Kernel, such as limiting the execution of syscalls from within Silos and the actual difference between a Server Silo Job Object and a Silo Job Object.

Converting Silo Into Server Silo

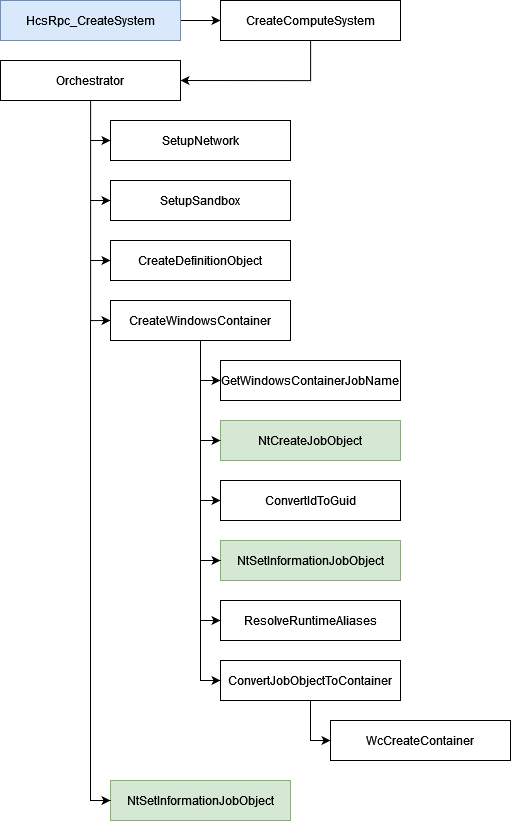

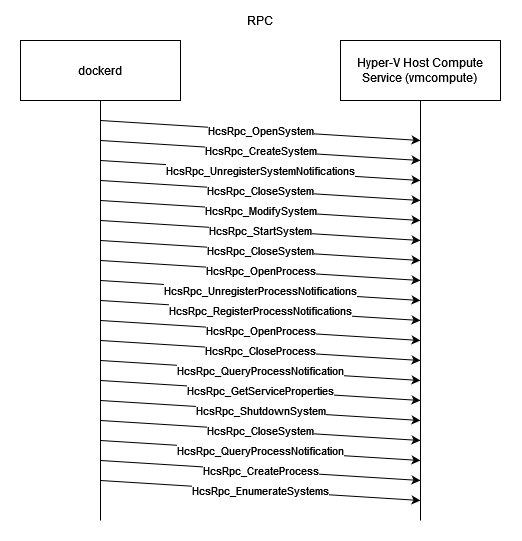

Once the Host Compute Service has created a Silo, it has to convert it into a Server Silo. As discussed in the first blog post, the creation of the Silo is done during the call to the function HcsRpc_CreateSystem. However, converting the Silo into a Server Silo is done elsewhere, in another RPC named HcsRpc_StartSystem.

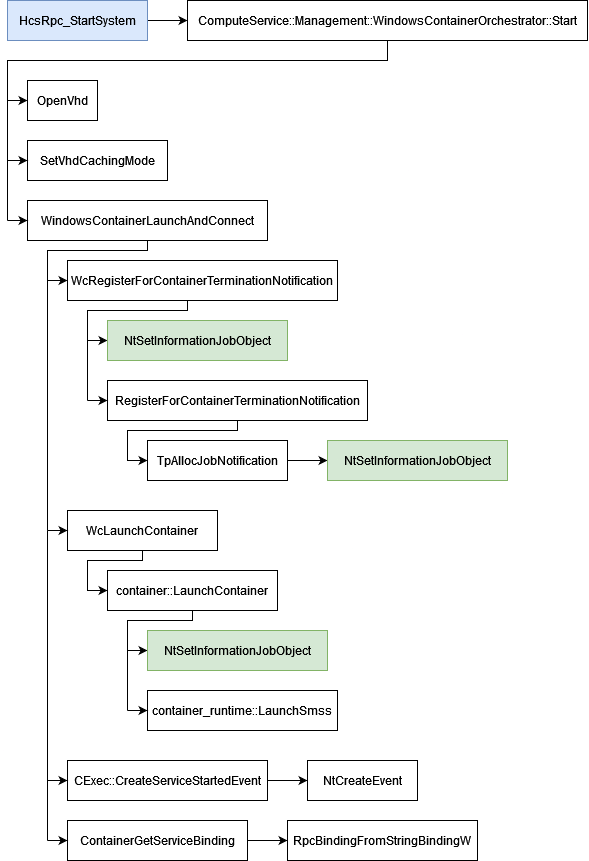

The following section focuses on the main functions involved in transforming the Silo into a Server Silo, starting from HcsRpc_StartSystem.

HcsRpc_StartSystem

As with the HcsRpc_CreateSystem RPC, several operations such as event logging and the selection of the orchestrator (see WindowsContainerOrchestrator::Construct) are performed.

The Host Compute Service chooses the orchestrator in order to start the Silo with the function ComputeService::Management::WindowsContainerOrchestrator::Start.

Once ready, this function continues the preparation by looking for the virtual disk of the Silo (using functions OpenVhd and SetVhdCachingMode for instance). When the set-up is complete, HCS calls ComputeService::ContainerUtilities::WindowsContainerLaunchAndConnect.

WindowsContainerLaunchAndConnect

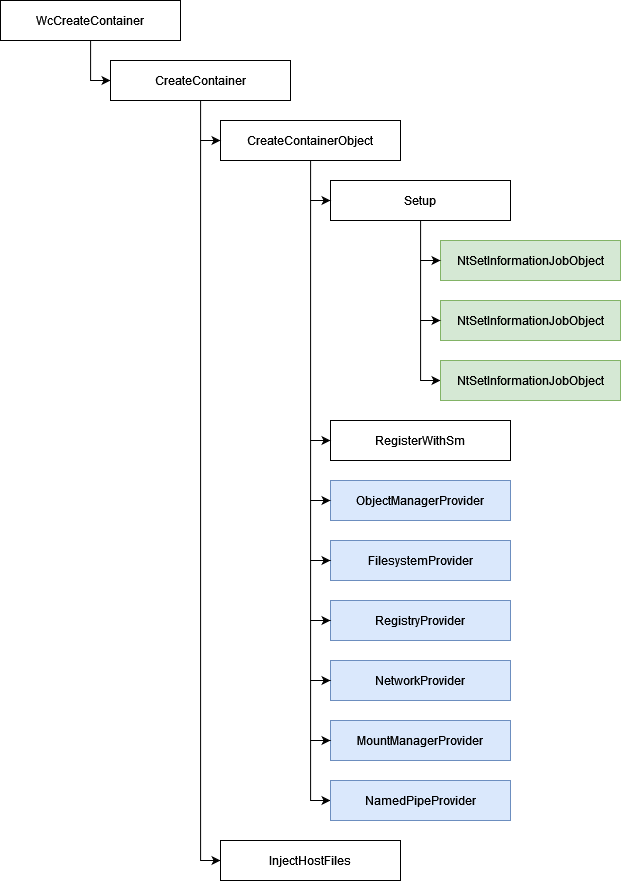

WindowsContainerLaunchAndConnect uses functions from the container.dll library to complete the conversion of the Silo.

The function WcRegisterForContainerTerminationNotification from container.dll is the first one called. It is in charge of defining the callback function named TerminationNotificationHandler in order to manage the termination of the container.

WcRegisterForContainerTerminationNotification uses NtSetInformationJobObject twice. NtSetInformationJobObject is the undocumented Windows API function used to interact with and manipulate Job Objects.

The first time, NtSetInformationJobObject is called with the undocumented JOBOBJECTINFOCLASS 0x10. This information class is used to define the CompletionFilter field of the Job Object with the value 0x2010.

UINT32 ObjectInformation7 = 0x2010;

Status = NtSetInformationJobObject(

hJob,

(JOBOBJECTINFOCLASS)0x10,

&ObjectInformation7,

sizeof(ObjectInformation7)

);
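
To see what the value 0x2010 encodes, the small portable sketch below decodes it as a bitmask: only bits 4 and 13 are set. Reading each bit index as a JOB_OBJECT_MSG_* identifier is an assumption on our side, not something stated above; under that reading, bits 4 and 13 would correspond to JOB_OBJECT_MSG_ACTIVE_PROCESS_ZERO (4) and JOB_OBJECT_MSG_SILO_TERMINATED (13) from winnt.h. The helper names are hypothetical and the code does not call any Windows API.

```c
#include <assert.h>
#include <stdint.h>
#include <stdio.h>

/* Value passed with information class 0x10 in the snippet above. */
#define COMPLETION_FILTER_VALUE 0x2010u

/* Return 1 if bit `bit` (0..31) is set in `filter`, 0 otherwise. */
int filter_has_bit(uint32_t filter, unsigned bit)
{
    assert(bit < 32);
    return (int)((filter >> bit) & 1u);
}

/* Count how many bits are set in `filter`. */
unsigned filter_bit_count(uint32_t filter)
{
    unsigned count = 0;
    for (unsigned bit = 0; bit < 32; bit++)
        count += (unsigned)filter_has_bit(filter, bit);
    return count;
}

/* Print the index of every bit set in `filter`, one per line. */
void filter_print_bits(uint32_t filter)
{
    for (unsigned bit = 0; bit < 32; bit++)
        if (filter_has_bit(filter, bit))
            printf("bit %u is set\n", bit);
}
```

Calling filter_print_bits(COMPLETION_FILTER_VALUE) lists the two bit positions that make up the filter.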

The second NtSetInformationJobObject call associates a Completion Port with the Job Object. This Completion Port is used to call container::TerminationNotificationHandler when the container stops.

A Completion Port is a Windows queue-based mechanism used for processing multiple asynchronous I/O requests.

JOBOBJECT_ASSOCIATE_COMPLETION_PORT ObjectInformation8;

// ObjectInformation8.CompletionKey = ...;

// ObjectInformation8.CompletionPort = ...;

Status = NtSetInformationJobObject(

hJob,

JobObjectAssociateCompletionPortInformation,

&ObjectInformation8,

sizeof(ObjectInformation8)

);

Just after calling WcRegisterForContainerTerminationNotification, WindowsContainerLaunchAndConnect calls another function from container.dll named LaunchContainer. This one makes a last call to the NtSetInformationJobObject function. The JOBOBJECTINFOCLASS 0x28 is also undocumented, but it is the one in charge of reaching the PspConvertSiloToServerSilo Kernel function.

UINT64 ObjectInformation9[2] = { 0, 1 };

Status = NtSetInformationJobObject(

hJob,

(JOBOBJECTINFOCLASS)0x28,

ObjectInformation9,

sizeof(ObjectInformation9)

);

At this point, the Silo has been converted into a Server Silo, but there is still more to do.

Firstly, we saw in the previous article that to manage the session inside the Server Silo, HCS uses SMSS. Now that the container is ready, HCS can ask SMSS to start managing the session thanks to the launchSmss function.

Next, the Host Compute Service calls CExec::CreateServiceStartedEvent, which creates an event through NtCreateEvent as part of setting up the CExecSvc service.

The CExecSvc service is really important because it is used to create processes inside the container. You can read more about it in the article Understanding Windows Containers Communication by Eviatar Gerzi. Long story short, HCS communicates through RPC with the CExecSvc service in order to execute commands inside the container. CExecSvc exposes 4 RPC functions:

* CExecCreateProcess

* CExecResizeConsole

* CExecSignalProcess

* CExecShutdownSystem

Finally, to be able to communicate with CExecSvc, HCS creates an RPC server binding by calling the RpcBindingFromStringBindingW function with a string binding that looks like "ncalrpc:[\\Silos\\<SILO_ID>\\RPC Control\\cexecsvc]".

RpcStringBindingComposeW(

NULL,

ProtSeq, // "ncalrpc"

NULL,

Endpoint, // "\Silos\<SILO_ID>\RPC Control\cexecsvc"

NULL,

StringBinding

);

RpcBindingFromStringBindingW(

StringBinding,

Binding

);
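
The shape of that string binding can be reproduced with plain string formatting. The sketch below is portable C that only builds the text quoted in the article; it does not call the RPC runtime. The helper name build_cexecsvc_binding and the Silo identifier used in the usage note are hypothetical, and the doubled backslashes simply mirror the string shown in the text.

```c
#include <assert.h>
#include <stdio.h>
#include <string.h>

/*
 * Write the ncalrpc string binding for the cexecsvc endpoint of a Silo
 * into `out`. `silo_id` is a hypothetical textual identifier supplied
 * by the caller. Returns 0 on success, -1 if `out` is too small.
 */
int build_cexecsvc_binding(char *out, size_t out_size, const char *silo_id)
{
    assert(out != NULL && silo_id != NULL);
    /* Each "\\\\" in the C literal produces two backslashes at run time. */
    int n = snprintf(out, out_size,
                     "ncalrpc:[\\\\Silos\\\\%s\\\\RPC Control\\\\cexecsvc]",
                     silo_id);
    return (n >= 0 && (size_t)n < out_size) ? 0 : -1;
}
```

For instance, build_cexecsvc_binding(buf, sizeof buf, "42") fills buf with the binding for a Silo whose identifier is assumed to be 42, and returns -1 instead of silently truncating when the buffer is too small.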

You can read more about RpcStringBindingComposeW and RpcBindingFromStringBindingW in the Microsoft documentation.

Et voilà! We finally managed to understand how the Host Compute Service creates a Silo and converts it into a Server Silo.

Kernel

So far, we have looked at Silos and Server Silos mainly from the Userland's point of view.

In this section we are going to dig a little deeper into Silos on the Kernel side.

Job Silo and Job Server Silo

As we now know, Silos or Server Silos are simply special Job Objects.

The PspCreateSilo Kernel function is in charge of transforming a Job Object into a Silo.

PspCreateSilo allocates memory in order to create a _PSP_STORAGE and stores it in the Storage field of the Job Object.

call PspAllocStorage

; ...

lock cmpxchg [rbx+5E8h], rdi ; RBX: nt!_EJOB

; +0x5e8 Storage : Ptr64 _PSP_STORAGE

; RDI: Address of the storage

Then, it sets flags into the JobFlags field.

lock or [rbx+5F8h], edx ; RBX: nt!_EJOB

; +0x5f8 JobFlags : Uint4B

; EDX = 0x40000000

Actually, the code snippet above only sets the Silo flag to 1 (+0x5f8 Silo: Pos 30, 1 Bit). The other flags are not related to Silos but to Job Objects.
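
As a quick check of the arithmetic, the mask 0x40000000 loaded in EDX is exactly bit 30. The portable sketch below models the flag update and the matching test on a plain 32-bit integer; the macro and function names are hypothetical, and the real Kernel code performs the OR atomically (lock or), which this model does not attempt to reproduce.

```c
#include <assert.h>
#include <stdint.h>

/* Position of the Silo bit inside JobFlags, as reported by the article. */
#define JOB_FLAG_SILO_BIT 30u
#define JOB_FLAG_SILO (UINT32_C(1) << JOB_FLAG_SILO_BIT)

/* Bit 30 must match the 0x40000000 mask seen in the disassembly. */
static_assert(JOB_FLAG_SILO == UINT32_C(0x40000000),
              "Silo flag mask mismatch");

/* Return `job_flags` with the Silo bit set, other bits untouched. */
uint32_t job_flags_mark_silo(uint32_t job_flags)
{
    return job_flags | JOB_FLAG_SILO;
}

/* Return 1 if the Silo bit is set in `job_flags`, 0 otherwise. */
int job_flags_is_silo(uint32_t job_flags)
{
    return (job_flags & JOB_FLAG_SILO) != 0;
}
```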

The PspConvertSiloToServerSilo Kernel function takes care of converting a Silo into a Server Silo.

PspConvertSiloToServerSilo allocates memory in order to create an _ESERVERSILO_GLOBALS structure, which holds global information about the Server Silo.

Several fields are initialized at this time with the following values:

* SiloRootDirectoryName: "\Silos\<SILO_ID>"

* ExitStatus = 0x103

* IsDownlevelContainer = 0x1

* State = 0

At the end, this structure is stored inside the ServerSiloGlobals field of the Job Object.

mov [rsi+5C8h], rdi ; RSI: nt!_EJOB

; +0x5c8 ServerSiloGlobals : Ptr64 _ESERVERSILO_GLOBALS

; RDI: Address of the serversilo_globals

Here are the fields of the _ESERVERSILO_GLOBALS structure and the _SERVERSILO_STATE enumeration, retrieved using WinDBG on a Windows 11 23H2 22631.3155 machine:

dt nt!_ESERVERSILO_GLOBALS

+0x000 ObSiloState : _OBP_SILODRIVERSTATE

+0x2e0 SeSiloState : _SEP_SILOSTATE

+0x310 SeRmSiloState : _SEP_RM_LSA_CONNECTION_STATE

+0x360 EtwSiloState : Ptr64 _ETW_SILODRIVERSTATE

+0x368 MiSessionLeaderProcess : Ptr64 _EPROCESS

+0x370 ExpDefaultErrorPortProcess : Ptr64 _EPROCESS

+0x378 ExpDefaultErrorPort : Ptr64 Void

+0x380 HardErrorState : Uint4B

+0x388 ExpLicenseState : Ptr64 _EXP_LICENSE_STATE

+0x390 WnfSiloState : _WNF_SILODRIVERSTATE

+0x3c8 DbgkSiloState : _DBGK_SILOSTATE

+0x3e8 PsProtectedCurrentDirectory : _UNICODE_STRING

+0x3f8 PsProtectedEnvironment : _UNICODE_STRING

+0x408 ApiSetSection : Ptr64 Void

+0x410 ApiSetSchema : Ptr64 Void

+0x418 OneCoreForwardersEnabled : UChar

+0x420 NlsState : Ptr64 _NLS_STATE

+0x428 RtlNlsState : _RTL_NLS_STATE

+0x4e0 ImgFileExecOptions : Ptr64 Void

+0x4e8 ExTimeZoneState : Ptr64 _EX_TIMEZONE_STATE

+0x4f0 NtSystemRoot : _UNICODE_STRING

+0x500 SiloRootDirectoryName : _UNICODE_STRING

+0x510 Storage : Ptr64 _PSP_STORAGE

+0x518 State : _SERVERSILO_STATE

+0x51c ExitStatus : Int4B

+0x520 DeleteEvent : Ptr64 _KEVENT

+0x528 UserSharedData : Ptr64 _SILO_USER_SHARED_DATA

+0x530 UserSharedSection : Ptr64 Void

+0x538 TerminateWorkItem : _WORK_QUEUE_ITEM

+0x558 IsDownlevelContainer : UChar

dt nt!_SERVERSILO_STATE

SERVERSILO_INITING = 0n0

SERVERSILO_STARTED = 0n1

SERVERSILO_SHUTTING_DOWN = 0n2

SERVERSILO_TERMINATING = 0n3

SERVERSILO_TERMINATED = 0n4
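
To tie the enumeration back to the initial values mentioned earlier (State = 0, exit status 0x103, IsDownlevelContainer = 0x1), here is a reduced portable model. The structure silo_globals_model is a hypothetical stand-in that keeps only four fields; it does not reflect the real layout. Note that 0x103 is also the numeric value of STATUS_PENDING; reading the exit status that way is our interpretation rather than a statement from the article.

```c
#include <assert.h>
#include <stdint.h>
#include <stdio.h>
#include <string.h>

/* Mirrors the nt!_SERVERSILO_STATE values dumped above. */
enum serversilo_state {
    SERVERSILO_INITING = 0,
    SERVERSILO_STARTED = 1,
    SERVERSILO_SHUTTING_DOWN = 2,
    SERVERSILO_TERMINATING = 3,
    SERVERSILO_TERMINATED = 4
};

/* Hypothetical, heavily reduced stand-in for _ESERVERSILO_GLOBALS. */
struct silo_globals_model {
    char silo_root_directory_name[64];
    enum serversilo_state state;
    int32_t exit_status;
    uint8_t is_downlevel_container;
};

/* Fill `g` with the initial values listed in the article.
 * Returns 0 on success, -1 if the root directory name does not fit. */
int silo_globals_model_init(struct silo_globals_model *g, const char *silo_id)
{
    assert(g != NULL && silo_id != NULL);
    memset(g, 0, sizeof *g);
    g->state = SERVERSILO_INITING;   /* State = 0 */
    g->exit_status = 0x103;          /* same value as STATUS_PENDING */
    g->is_downlevel_container = 1;   /* IsDownlevelContainer = 0x1 */
    int n = snprintf(g->silo_root_directory_name,
                     sizeof g->silo_root_directory_name,
                     "\\Silos\\%s", silo_id);
    return (n >= 0 && (size_t)n < sizeof g->silo_root_directory_name) ? 0 : -1;
}

/* Map a state value to the name shown by WinDBG. */
const char *serversilo_state_name(enum serversilo_state state)
{
    switch (state) {
    case SERVERSILO_INITING:       return "SERVERSILO_INITING";
    case SERVERSILO_STARTED:       return "SERVERSILO_STARTED";
    case SERVERSILO_SHUTTING_DOWN: return "SERVERSILO_SHUTTING_DOWN";
    case SERVERSILO_TERMINATING:   return "SERVERSILO_TERMINATING";
    case SERVERSILO_TERMINATED:    return "SERVERSILO_TERMINATED";
    }
    return "UNKNOWN";
}
```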

Detecting Server Silo

How does Windows prevent or limit the execution of syscalls when the request comes from a Silo? In his article: What I Learned from Reverse Engineering Windows Containers, Daniel Prizmant explains it by taking the NtLoadDriver function as an example. However, he does not present in detail the functions that can be used to check whether a Silo is using a syscall and that is what we are going to do here.

In fact, there are a few functions that can be used by the Kernel to detect if the execution comes from inside a Silo.

* PsIsProcessInSilo

* PsIsThreadInSilo

* PsIsCurrentThreadInServerSilo

It is also possible to check if a Silo is inside another Silo/Server Silo.

* PspIsSiloInSilo

* PspIsSiloInServerSilo

Because a Server Silo is a special kind of Silo, the Kernel can check whether the execution takes place inside a Silo with PsIsThreadInSilo or PsIsProcessInSilo. PsIsCurrentThreadInServerSilo, however, checks whether the current thread is inside a Server Silo and not just a Silo.

So, let's take a closer look at the PsIsCurrentThreadInServerSilo function.

PsIsCurrentThreadInServerSilo actually calls PsGetCurrentServerSilo which tells the system if the thread is linked to a Server Silo or not.

More specifically, PsGetCurrentServerSilo is able to find the Job Object associated with the current thread thanks to its _KTHREAD object.

mov rax, qword [gs:0x188] ; _KTHREAD

mov rcx, qword [rax+0x658] ; +0x658 seems to be the _EJOB

With the Job Object at hand, it can use the PsIsServerSilo function to find out whether it is a Server Silo or not. This verification is performed on the current Job Object but also on its parents to determine whether one of them is a Server Silo.

mov rcx, qword [rcx + 0x500] ; +0x500 ParentJob : Ptr64 _EJOB

Finally, PsIsServerSilo simply checks if the ServerSiloGlobals field of the _EJOB object is different from 0. As a reminder, we saw earlier that the PspConvertSiloToServerSilo function allocates an _ESERVERSILO_GLOBALS structure and stores it in this field. Therefore, if ServerSiloGlobals is different from 0, the Job Object is a Server Silo.

cmp qword [rcx+0x5c8], 0x0 ; +0x5c8 ServerSiloGlobals : Ptr64 _ESERVERSILO_GLOBALS

setne al
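
The lookup described in this section can be summarized with a small model in portable C. The structure ejob_model is a hypothetical stand-in that keeps only the two fields involved (ParentJob and ServerSiloGlobals), and the function names are ours; this is a sketch of the logic as described above, not the Kernel's actual code.

```c
#include <assert.h>
#include <stddef.h>

/* Hypothetical reduced model of nt!_EJOB. */
struct ejob_model {
    struct ejob_model *parent_job;  /* models ParentJob (+0x500) */
    void *server_silo_globals;      /* models ServerSiloGlobals (+0x5c8) */
};

/* Models PsIsServerSilo: non-NULL globals means Server Silo. */
int is_server_silo(const struct ejob_model *job)
{
    assert(job != NULL);
    return job->server_silo_globals != NULL;
}

/* Models the walk attributed to PsGetCurrentServerSilo: starting from
 * the job of the current thread, climb the parent chain until a
 * Server Silo is found. Returns NULL if there is none. */
const struct ejob_model *get_server_silo(const struct ejob_model *job)
{
    for (; job != NULL; job = job->parent_job)
        if (is_server_silo(job))
            return job;
    return NULL;
}

/* Models PsIsCurrentThreadInServerSilo for a thread attached to `job`
 * (NULL when the thread belongs to no job). */
int is_in_server_silo(const struct ejob_model *job)
{
    return get_server_silo(job) != NULL;
}
```

A thread whose job is nested inside a Server Silo is reported as being in that Server Silo even though its own job has no globals, which is why the parent chain matters.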

PsIsCurrentThreadInServerSilo is used by various functions in the Kernel, such as:

* IopLoadDriverImage

* NtImpersonateAnonymousToken

* PspCreateSilo

* etc.

This summarizes how the Kernel knows whether a process is inside a Server Silo and thus authorizes it or not to execute a syscall.

What is interesting here is that there does not seem to be any general mechanism for restricting access to syscalls globally, which means that the attack surface can be quite large. Indeed, even though the Kernel can tell whether the execution comes from inside a Silo, each syscall must implement its own checks (or not). In addition, some syscalls do not block access from Silos outright, but rather implement different logic depending on whether they run in a host or a Silo context.
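
The two patterns mentioned here, refusing the call or branching on the context, can be illustrated with a purely hypothetical model in portable C. None of the handlers or status values below exist in Windows; they only show why every syscall has to carry its own check.

```c
#include <assert.h>
#include <stddef.h>

/* Hypothetical status values used only by this model. */
#define MODEL_STATUS_SUCCESS 0
#define MODEL_STATUS_DENIED  (-1)

/* Pattern 1: a handler that refuses to run from a Server Silo. */
int model_privileged_syscall(int caller_in_server_silo)
{
    if (caller_in_server_silo)
        return MODEL_STATUS_DENIED;
    return MODEL_STATUS_SUCCESS;
}

/* Pattern 2: a handler that stays available but resolves names from a
 * Silo-specific root instead of the host root. */
const char *model_namespace_root(int caller_in_server_silo,
                                 const char *silo_root)
{
    assert(silo_root != NULL);
    return caller_in_server_silo ? silo_root : "\\";
}

/* Pattern 3: a handler with no check at all behaves identically in
 * both contexts, which is where the attack surface comes from. */
int model_unchecked_syscall(int caller_in_server_silo)
{
    (void)caller_in_server_silo;  /* context is ignored on purpose */
    return MODEL_STATUS_SUCCESS;
}
```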

Side Note about NtSetInformationJobObject

As we have seen so far, the NtSetInformationJobObject function plays an important role in the specialization of a Job Object into a Silo or Server Silo. Some of the information classes accepted by this function are not documented. The following table is a summary.

| JOBOBJECTINFOCLASS | DOCUMENTED | USED FOR |
|---|---|---|
| JobObjectAssociateCompletionPortInformation (0x7) | ✔ | Associates Completion Port with a Job Object |
| JobObjectExtendedLimitInformation (0x9) | ✔ | Sets limits for a Job Object |
| 0x10 | ✘ | Sets the CompletionFilter |
| 0x23 | ✘ | Transforms Job into Silo |
| 0x25 | ✘ | Creates root directory in Silo |
| 0x28 | ✘ | Transforms Silo into Server Silo |
| 0x2A | ✘ | Sets up disk attribution |
| 0x2C | ✘ | Sets the ContainerTelemetryId |
| 0x2D | ✘ | Defines the system root path |
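
When reading traces of NtSetInformationJobObject calls, it can help to have this table available as a lookup. The portable sketch below encodes the rows of the table as data; the structure and function names are hypothetical, and the descriptions are copied from the table rather than taken from any official header.

```c
#include <assert.h>
#include <stddef.h>

/* One row of the summary table. */
struct job_info_class_entry {
    unsigned value;       /* numeric JOBOBJECTINFOCLASS */
    int documented;       /* 1 if documented, 0 otherwise */
    const char *purpose;  /* description taken from the table */
};

static const struct job_info_class_entry JOB_INFO_CLASSES[] = {
    { 0x07, 1, "Associates Completion Port with a Job Object" },
    { 0x09, 1, "Sets limits for a Job Object" },
    { 0x10, 0, "Sets the CompletionFilter" },
    { 0x23, 0, "Transforms Job into Silo" },
    { 0x25, 0, "Creates root directory in Silo" },
    { 0x28, 0, "Transforms Silo into Server Silo" },
    { 0x2A, 0, "Sets up disk attribution" },
    { 0x2C, 0, "Sets the ContainerTelemetryId" },
    { 0x2D, 0, "Defines the system root path" },
};

#define JOB_INFO_CLASS_COUNT \
    (sizeof JOB_INFO_CLASSES / sizeof JOB_INFO_CLASSES[0])

/* The table above has nine rows. */
static_assert(JOB_INFO_CLASS_COUNT == 9, "unexpected number of rows");

/* Return the entry for `value`, or NULL if it is not in the table. */
const struct job_info_class_entry *job_info_class_lookup(unsigned value)
{
    for (size_t i = 0; i < JOB_INFO_CLASS_COUNT; i++)
        if (JOB_INFO_CLASSES[i].value == value)
            return &JOB_INFO_CLASSES[i];
    return NULL;
}
```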

Conclusion And Acknowledgement

These two articles provide an overview of how an application such as Docker is able to create Windows Containers.

In a nutshell, this is done by using RPC to communicate with the Host Compute Service in order to ask it to set up a new container.

From Windows' point of view, this kind of container is basically a Job Object specialized into a Silo and then converted into a Server Silo. Moreover, several elements are added to this Job Object, such as a vhdx disk for the file system, a registry hive for the registry and a virtual network adapter.

In this article, we saw the final steps to convert a Silo into a Server Silo, as well as the way HCS handles the termination of the container and the set-up of the main RPC server used to communicate with the container.

Also, we saw that a Server Silo is a Silo with a _ESERVERSILO_GLOBALS structure which stores information about the container and that a Silo is a Job Object with the Silo flag set in the JobFlags field. Of course, in addition to the Job Object, a Server Silo also has a virtual disk, a registry hive, a virtual network adapter, and so on.

Finally, we took a quick look at some Kernel functions. In particular, we analyzed a few functions that determine whether a thread is inside a Silo/Server Silo. These functions can be used in order to limit access to syscalls.

There is still a lot of exploring to do, both about Server Silo internals and vulnerability research. Stay tuned!

I would like to thank Gwaby for her proofreading and advice and also my colleagues at Quarkslab for their feedback and reviewing this article.

If you would like to learn more about our security audits and explore how we can help you, get in touch with us!
