2024-3-29 02:11:52 Author: securityboulevard.com

Cybersecurity is much like a relentless game of “whac-a-mole”–the advent of new technologies invariably draws out threats that security teams must quickly address.

Take, for example, the internet boom in the late 20th century. Businesses flocked to embrace newfound tech capabilities, revolutionizing how we conduct business, communicate globally, and share information. But with the tech advancements came new cyber threats, our “moles,” including malware, the infamous Morris Worm, the ILOVEYOU virus, email phishing, and many other malicious ploys. The counterstrikes—antivirus software, security awareness training for employees, the roll-out of new laws and regulations, and the launch of various security platforms—represented our collective effort to “whac” these threats and protect our organizations.

Now, fast forward to today.

Since OpenAI unveiled ChatGPT in November 2022, generative AI has exploded. With the new tech, we’re also experiencing a whole new crop of moles appearing faster than organizations can whac. How serious is this issue, and what does it take to stay ahead in a game that is quickly sprawling out of control?

The Growth of AI Apps

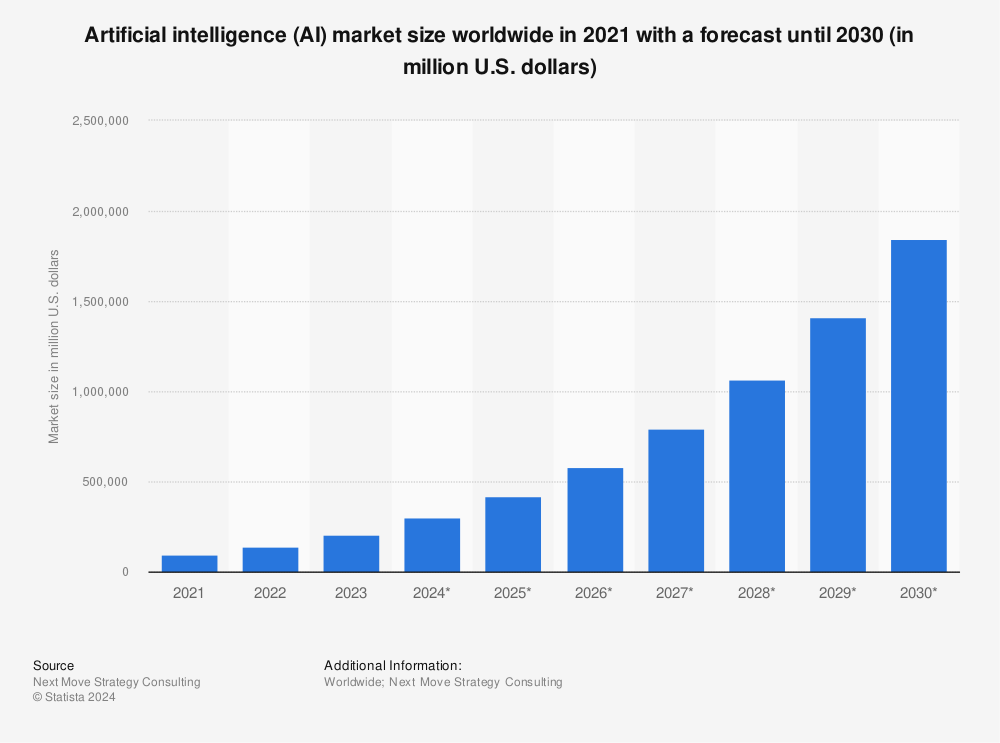

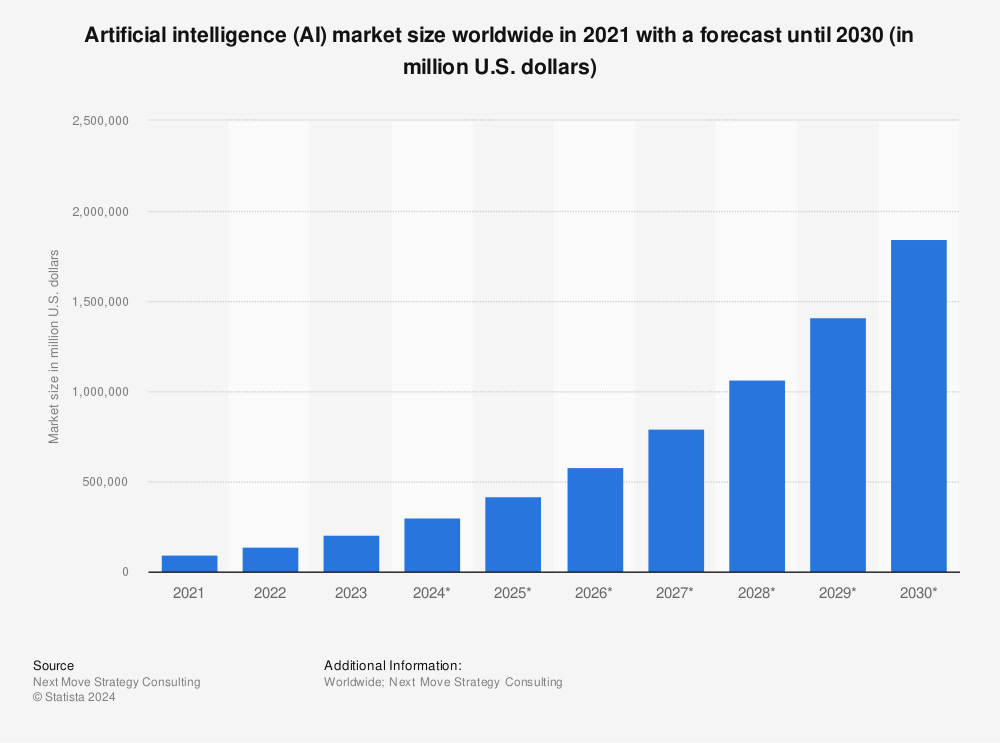

To say that AI technologies are growing in popularity is an understatement. Valued at $200 billion in 2023, the AI market is projected to reach $1.8 trillion by 2030.

Which AI app dominates the AI space? According to traffic reports by SEMRush, ChatGPT holds 60% of the market share, which isn’t surprising. The popular chatbot is reported to have attracted 1 million users in the first five days after launch and surged to 100 million monthly active users just two months after launch, setting a record as the fastest-growing app in history.

Without question, ChatGPT set our world on fire, and as a result, new AI apps, features, and communities are rolling out at an unprecedented rate.

AI Communities

AI communities, from online forums and social media groups to academic collectives and professional organizations, serve as hubs for collaboration, learning, and sharing of AI creations. One popular Discord community, touted as the “largest AI community used by over 20 million humans,” promotes almost 13,000 AI tools to help with nearly 17,000 tasks and 5,000 jobs, growing at a rate of 1,000/month. To get an AI app featured in the community, developers upload their AI creations and pay a nominal listing fee. But security teams beware: few app creation guidelines exist, and security controls for the apps are unknown.

The ChatGPT Store

OpenAI has also launched the GPT Store, a digital marketplace where developers can share their GPT innovations and GPT users can easily download and install the plugins. The store is estimated to have 1,000 plugins, created by everyone from recognized software brands to individual developers. For AI users and enthusiasts, it’s like being a kid in a candy store: there are many options, and all are enticing.

All this is to say that AI apps are now mainstream, reshaping the business landscape as dramatically as the advent of the internet once did. Your employees are also taking advantage of the new options to improve their productivity and business outcomes. At the same time, with the surge in generative AI and AI user adoption, new security threats are surfacing faster than security teams can assess them. Let’s explore some of the risks.

AI Security Risks

With AI apps so readily available and easy to access, employees have many options at their fingertips and are seizing the opportunities. A survey conducted by The Conference Board in September 2023 revealed that 56% of US employees are using gen AI apps to accomplish work-related tasks. Further, 38% say their organizations are either partially or not at all aware of their usage. Hence, your first risk and mole to whac: employees using AI tools without the knowledge of SecOps or IT, AKA “Shadow AI.”

Shadow AI

As with the rise of shadow SaaS, we can anticipate a similar, if not more rapid, increase in shadow AI. Indeed, AI adoption stats suggest that shadow AI could expand at an accelerated pace. What’s causing this lack of visibility for IT and security teams?

Fear of Tool Denial: Employees may worry that using AI tools could be disallowed or banned. In a focused UK study, Deloitte found that just 23% of employees thought their employers would approve of them using AI apps for work purposes.

We polled our LinkedIn community of security and risk professionals, and the response provided a different perspective. Half responded that their organizations allow the use of GenAI apps, while the other half expressed more caution: 8% responded with a definitive “no,” and 42% said it depends on the pending review of the app, how it will be used, and the security (and legal) implications.

Employee Unawareness: Another rationale is that employees don’t see the need to inform security or IT teams, particularly if the AI functionality is a recent update to an already approved application.

This scenario is more common than you might think. Apps that have gone through your established security protocols and been sanctioned for usage are now rolling out new AI capabilities. Apps like Grammarly, Canva, Microsoft, Notion, and likely every other app you use announce new AI-fueled features, and the notices promoting the new functionalities are sent directly to users. The users unknowingly begin using the features, assuming that because the app had previously been sanctioned, any updates are sanctioned as well.

Unclear AI Policies: When the rules for SaaS adoption—including AI tools—aren’t explicitly stated, employees will act as their own CIO to make decisions. Most employees do not realize all that goes on in your (security) world, the threats you “whac” on a daily basis, and the risks their AI choices impose. It’s essential to define your company’s policies and protocols surrounding AI app usage—and then communicate them across your enterprise.

The Conference Board’s study shows that organizations are progressing, but more work is still needed.

- 34% of employees surveyed said their organization does not have an AI policy

- 26% said their organization does have an AI policy

- 23% said an AI policy is under development

- 17% don’t know

Having a strong AI policy can also help manage the next AI-associated risk: data and privacy compliance.

Data and Privacy Compliance

AI models are often trained on public datasets. When new data is submitted to AI tools like ChatGPT, the information is collected and used to refine the model and improve the answers given to other users. ChatGPT does have the capability to turn off chat history, so your data won’t be used to train the model, but how many users know this and enable the feature?

With AI tools quickly entering the market, it’s hard to know if any security controls were factored into the app development. While ChatGPT has some data protection standards, there’s no guarantee the GPT spin-offs or apps downloaded from AI communities have the same safeguards.

Beyond the app’s security controls, it’s essential to know how the app will be used and integrated with your systems:

- Does the tool push or process data? If so, what are the app’s data retention policies?

- Who will be using the AI app, and in what capacity? Will the app have access to files, content, or code?

- If the AI app is breached, what is your risk radius?

- Are there compliance concerns with the app?
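The questions above can be captured as a simple intake record so that every AI app is reviewed against the same checklist. The following is a minimal sketch, not a standard or a vendor feature: the `AIAppAssessment` fields, the `review_flags` helper, and the 50-user threshold are all hypothetical and only illustrate one way to turn the answers into follow-up items.

```python
from dataclasses import dataclass
from typing import List


@dataclass
class AIAppAssessment:
    """Answers to the intake questions for one AI app (all fields hypothetical)."""
    name: str
    processes_company_data: bool    # does the tool push or process data?
    retention_policy_known: bool    # is the app's data retention policy documented?
    accesses_files_or_code: bool    # will it have access to files, content, or code?
    user_count: int                 # how many employees use it (a rough risk radius)
    has_compliance_concerns: bool   # are there compliance concerns with the app?


def review_flags(a: AIAppAssessment) -> List[str]:
    """Return the open concerns a reviewer should follow up on."""
    flags = []
    if a.processes_company_data and not a.retention_policy_known:
        flags.append("data retention policy unknown")
    if a.accesses_files_or_code:
        flags.append("has access to files, content, or code")
    if a.user_count >= 50:  # illustrative threshold, not a standard
        flags.append("wide risk radius if breached")
    if a.has_compliance_concerns:
        flags.append("compliance review required")
    return flags


example = AIAppAssessment(
    name="example-gpt-plugin",
    processes_company_data=True,
    retention_policy_known=False,
    accesses_files_or_code=True,
    user_count=12,
    has_compliance_concerns=False,
)
print(review_flags(example))
```

An app that raises no flags is not automatically safe; the record only makes sure the same questions get asked each time.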


A lesson to learn from: Samsung employees accidentally exposed confidential information through ChatGPT, such as software details to troubleshoot a coding issue and internal meeting notes to compile the minutes. It’s important to monitor how your employees utilize AI tools, not only to protect your company’s proprietary data but also to ensure adherence to compliance standards.

AI Risk Management

We began this article with an analogy likening cybersecurity to playing whac-a-mole. By now, you should have a clearer insight into the risks posed by the rapid adoption of AI and just how quickly these “moles” are emerging.

Defeating or “whacking” the moles with confidence requires a programmatic game strategy, one that’s centered on proven SaaS identity risk management (SIRM) principles. Grip SaaS Security Control Plane (SSCP) is uniquely positioned to solve the challenges of identifying and managing shadow AI and new AI features added to existing apps in your portfolio.

How it works:

Gen AI App Discovery: enables identity-based tracking of Gen AI SaaS utilization, linking app use directly to individual users and identifying the business owner.

Gen AI Risk Lifecycle Management: applies and enforces policies for adopting Gen AI apps, including pinpointing and revoking access for non-compliant users.

Gen AI Access Control: introduces secure access measures like SAML-less Single Sign-On (SSO), Multi-Factor Authentication (MFA), and Robotic Process Automation (RPA) that automatically rotates passwords, providing a layer of access control that works for unmanaged devices and newly discovered SaaS apps.

Gen AI Risk Measurement: evaluates and identifies existing apps in your SaaS portfolio that are Gen AI-enabled, triggering a review of the app’s risk and compliance status.
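To make the discovery and measurement ideas concrete, here is a minimal sketch of the general approach: group observed app usage by individual user, then separate unsanctioned Gen AI apps from sanctioned apps that have gained AI features. This is not Grip's implementation. The event data, the `SANCTIONED` and `GEN_AI_ENABLED` sets, and both function names are assumptions made purely for illustration.

```python
from collections import defaultdict
from typing import Dict, List, Set, Tuple

# Hypothetical inputs: (user, app) pairs observed in sign-up or login events,
# the apps that already passed review, and the known apps with Gen AI features.
EVENTS: List[Tuple[str, str]] = [
    ("ana@example.com", "chatgpt"),
    ("ben@example.com", "chatgpt"),
    ("ana@example.com", "notion"),
    ("cy@example.com", "some-ai-notetaker"),
]
SANCTIONED: Set[str] = {"notion", "grammarly"}
GEN_AI_ENABLED: Set[str] = {"chatgpt", "notion", "some-ai-notetaker"}


def users_by_app(events: List[Tuple[str, str]]) -> Dict[str, Set[str]]:
    """Group observed usage by app so each app maps to its individual users."""
    usage = defaultdict(set)
    for user, app in events:
        usage[app].add(user)
    return dict(usage)


def classify(usage: Dict[str, Set[str]],
             sanctioned: Set[str],
             gen_ai_enabled: Set[str]) -> Dict[str, str]:
    """Label each Gen AI app in use as shadow AI or as due for re-review."""
    report = {}
    for app in sorted(usage):
        if app not in gen_ai_enabled:
            continue  # not a Gen AI app, so out of scope for this report
        if app in sanctioned:
            report[app] = "sanctioned, re-review AI features"
        else:
            report[app] = "shadow AI"
    return report


usage = users_by_app(EVENTS)
report = classify(usage, SANCTIONED, GEN_AI_ENABLED)
for app, status in report.items():
    print(app, status, sorted(usage[app]))
```

Keeping the per-app user sets alongside the status is what lets a policy decision, such as revoking access, target specific people rather than the whole organization.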

AI apps are intended to improve our work, not create more work (or stress) for you. The risks associated with generative AI are real: the technology is evolving so quickly that it’s difficult for security and IT teams to get ahead of the new AI apps being added and the changes to existing, already-sanctioned SaaS apps. But you can win our metaphorical game of whac-a-mole. To learn more about how Grip’s SaaS Security Control Plane (SSCP) addresses gen AI risks, download our solutions brief, Modern SaaS Security for Managing Generative AI Risk. To see the SSCP live and how Grip solves the challenges of modern-day SaaS usage, book time with our team.
