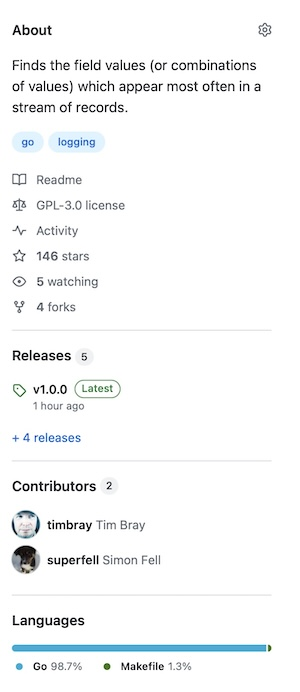

Back in 2021-22, I wrote a series of blog posts about a program called “topfew” (tf from your shell command-line). It finds the field values (or combinations of values) which appear most often in a stream of records. I built it to explore large-scale data crunching in Go, and to investigate how performance compared to Rust. There was plentiful input, both ideas and code, from Dirkjan Ochtman and Simon Fell. Anyhow, I thought I was finished with it but then I noticed I was using the tf command more days than not, and I have pretty mainstream command-line needs. Plus I got a couple of random pings about whether it was still live. So I turned my attention back to it on April 12th and on May 2nd pushed v1.0.0.

I added one feature: You can provide a regex field separator to override the default space-separation that defines the fields in your records. Which will cost you a little performance, but you’re unlikely to notice.

Its test coverage is much improved and, expectedly, there are fewer bugs. Also, better docs.

Plan · I think it’s pretty much done; honestly, I can’t think of any useful new features. At some point I’ll look into Homebrew recipes and suchlike, if I get the feeling they might be used.

Obviously, please send issues or PRs if you see the opportunity.

Who needs this? · It’s mostly for log files, I think. Whenever I’m poking around in one of those I find myself asking questions like “which API call was hit most often?” or “which endpoint?” or “which user agent?” or “which subnet?”

The conventional hammer to drive this nail has always been something along the lines of:

awk '{print $7}' | sort | uniq -c | sort -nr | head

Which has the advantage of Just Working on any Unix-descended computer. But it can be slow when the input is big, and its cost grows worse than linearly, too.

Anyhow, tf is like that, only faster. In some cases, orders of magnitude faster. Plus, it has useful options that take care of the grep and sed idioms that often appear upstream in the pipe.

Topfew’s got a decent README, so I’m not going to invest any more words here in explaining it.

But it’s worth pointing out that it’s a single self-contained binary compiled from standalone Go source code with zero dependencies.

Performance · This subject is a bit vexed. After I wrote the first version, Dirkjan implemented it in Rust and it was way faster, which annoyed me because it ought to be I/O-bound. So I stole his best ideas and then Simon chipped in other good ones and we optimized more, and eventually it was at least as fast as the Rust version. Which is to say, plenty fast, and probably faster than what you’re using now.

But you only get the big payoff from all this work when you’re processing a file, as opposed to a stream; then tf feels shockingly fast, because it divides the file up into segments and scans them in parallel. Works remarkably well.

Unfortunately that doesn’t happen too often. Normally, you’re grepping for something or teeing off another stream or whatever. In which case, performance is totally limited by reading the stream; I’ve profiled the hell out of this and the actual tf code doesn’t show up in any of the graphs, just the I/O-related buffer wrangling and garbage collection. Maybe I’m missing something. But I’m pretty sure tf will keep up with any stream you can throw at it.

Tooling · Over the years I’ve become an adequate user of GitHub CI. It’s good to watch that ecosystem become richer and slicker; the things you need seem to be there and for an OSS hobbyist like me, are generally free. Still, it bothers me that Everything Is On GitHub. I need to become aware of the alternatives.

I still live in JetBrains-land, in this case specifically Goland, albeit unfashionably in Light mode. It scratches my itches.

Anyhow, everything is easier if you have no dependencies. And our whole profession needs to be more thoughtful about its dependencies.

Dirty secret · I’ve always wanted to ship a two-letter shell command that someone might use. Now I have. And I do think tf will earn a home in a few folks’ toolboxes.
