JAW is an open-source prototype implementation of property graphs for JavaScript, based on the esprima parser and the ESTree SpiderMonkey spec. JAW can be used for analyzing the client side of web applications and JavaScript-based programs.

This project is licensed under GNU AFFERO GENERAL PUBLIC LICENSE V3.0. See here for more information.

JAW has a GitHub Pages website available at https://soheilkhodayari.github.io/JAW/.

Release Notes:

- Oct 2023, JAW-v3 (Sheriff): JAW updated to detect client-side request hijacking vulnerabilities.

- July 2022, JAW-v2 (TheThing): JAW updated to its next major release with the ability to detect DOM Clobbering vulnerabilities. See the JAW-V2 branch.

- Dec 2020, JAW-v1: first prototype version. See the JAW-V1 branch.

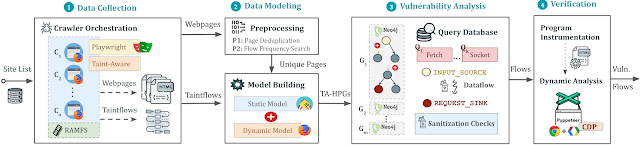

The architecture of JAW is shown below.

Test Inputs

JAW can be used in two distinct ways:

- Arbitrary JavaScript Analysis: Utilize JAW for modeling and analyzing any JavaScript program by specifying the program's file system path.

- Web Application Analysis: Analyze a web application by providing a single seed URL.

Data Collection

- JAW features several JavaScript-enabled web crawlers for collecting web resources at scale.

HPG Construction

- Use the collected web resources to create a Hybrid Program Graph (HPG), which will be imported into a Neo4j database.

- Optionally, supply the HPG construction module with a mapping of semantic types to custom JavaScript language tokens, facilitating the categorization of JavaScript functions based on their purpose (e.g., HTTP request functions).
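The idea of a semantic-type mapping can be sketched in a few lines of Python. This is an illustration only: the `REQ` type name, the token lists, and the `semantic_types_for` helper are assumptions made for the example, not the format that the HPG construction module actually consumes.

```python
# Hypothetical mapping of semantic types to JavaScript tokens.
# Type names and token lists are illustrative assumptions, not JAW's own set.
SEMANTIC_TYPES = {
    "REQ": ["fetch", "XMLHttpRequest", "$.ajax"],
    "WIN.LOC": ["window.location", "location.href"],
}


def semantic_types_for(code, mapping=SEMANTIC_TYPES):
    """Return the sorted semantic types whose tokens occur in a code snippet."""
    return sorted(
        sem_type
        for sem_type, tokens in mapping.items()
        if any(token in code for token in tokens)
    )


print(semantic_types_for("fetch(window.location.hash.slice(1))"))
# ['REQ', 'WIN.LOC']
```

A plain substring match is used here for brevity; a real categorization would work on syntax tree nodes rather than raw text.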

Analysis and Outputs

- Query the constructed Neo4j graph database for various analyses. JAW offers utility traversals for data flow analysis, control flow analysis, reachability analysis, and pattern matching. These traversals can be used to develop custom security analyses.

- JAW also includes built-in traversals for detecting client-side CSRF, DOM Clobbering and request hijacking vulnerabilities.

- The outputs will be stored in the same folder as that of input.
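To give a feel for what a reachability traversal answers, here is a minimal in-memory sketch in Python. It does not use Neo4j or JAW's utility traversals; the edge list and the `reachable` helper are hypothetical and only mimic the question "can a value read from one node flow to another node?".

```python
from collections import deque


def reachable(edges, source, target):
    """Breadth-first search over directed (src, dst) edges."""
    adjacency = {}
    for src, dst in edges:
        adjacency.setdefault(src, []).append(dst)
    seen, queue = {source}, deque([source])
    while queue:
        node = queue.popleft()
        if node == target:
            return True
        for nxt in adjacency.get(node, []):
            if nxt not in seen:
                seen.add(nxt)
                queue.append(nxt)
    return False


# Toy flow edges (assumed for illustration): location read -> variable -> request call.
edges = [("window.location.href", "ajaxloc"), ("ajaxloc", "$.ajax")]
print(reachable(edges, "window.location.href", "$.ajax"))  # True
```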

The installation script relies on the following prerequisites:

- Latest version of npm package manager (Node.js)

- Any stable version of python 3.x

- Python pip package manager

Afterwards, install the necessary dependencies via:

$ ./install.sh

For detailed installation instructions, please see here.

Running the Pipeline

You can run an instance of the pipeline in a background screen via:

$ python3 -m run_pipeline --conf=config.yaml

The CLI provides the following options:

$ python3 -m run_pipeline -h

usage: run_pipeline.py [-h] [--conf FILE] [--site SITE] [--list LIST] [--from FROM] [--to TO]

This script runs the tool pipeline.

optional arguments:

-h, --help show this help message and exit

--conf FILE, -C FILE pipeline configuration file. (default: config.yaml)

--site SITE, -S SITE website to test; overrides config file (default: None)

--list LIST, -L LIST site list to test; overrides config file (default: None)

--from FROM, -F FROM the first entry to consider when a site list is provided; overrides config file (default: -1)

--to TO, -T TO the last entry to consider when a site list is provided; overrides config file (default: -1)
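For readers who want to script around these options, the following Python sketch declares an `argparse` parser with the same flags and defaults as the help text above. It is a reconstruction from that help output, not the source of `run_pipeline.py`; the `dest` names `from_entry` and `to_entry` and the `type=int` conversions are assumptions.

```python
import argparse


def build_parser():
    """Declare a parser mirroring the documented run_pipeline options."""
    parser = argparse.ArgumentParser(
        prog="run_pipeline.py",
        description="This script runs the tool pipeline.",
        formatter_class=argparse.ArgumentDefaultsHelpFormatter,
    )
    parser.add_argument("--conf", "-C", metavar="FILE", default="config.yaml",
                        help="pipeline configuration file.")
    parser.add_argument("--site", "-S", default=None,
                        help="website to test; overrides config file")
    parser.add_argument("--list", "-L", default=None,
                        help="site list to test; overrides config file")
    # `from` is a Python keyword, so an explicit dest is used (assumed name).
    parser.add_argument("--from", "-F", dest="from_entry", type=int, default=-1,
                        help="the first entry to consider when a site list is provided")
    parser.add_argument("--to", "-T", dest="to_entry", type=int, default=-1,
                        help="the last entry to consider when a site list is provided")
    return parser


args = build_parser().parse_args(["--site", "https://example.com", "--from", "0", "--to", "10"])
print(args.conf, args.site, args.from_entry, args.to_entry)
```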

Input Config: JAW expects a .yaml config file as input. See config.yaml for an example.

Hint. The config file specifies different passes (e.g., crawling and static analysis), which can be enabled or disabled for each vulnerability class. This allows running the tool's building blocks individually, or in a different order (e.g., crawl all webapps first, then conduct security analysis).

Quick Example

For running a quick example demonstrating how to build a property graph and run Cypher queries over it, do:

$ python3 -m analyses.example.example_analysis --input=$(pwd)/data/test_program/test.js

Crawling and Data Collection

This module collects the data (i.e., JavaScript code and state values of web pages) needed for testing. If you want to test a specific JavaScript file that you already have on your file system, you can skip this step.

JAW has crawlers based on Selenium (JAW-v1), Puppeteer (JAW-v2, v3) and Playwright (JAW-v3). For the most up-to-date features, it is recommended to use the Puppeteer- or Playwright-based versions.

Playwright CLI with Foxhound

This web crawler employs foxhound, an instrumented version of Firefox, to perform dynamic taint tracking as it navigates through webpages. To start the crawler, do:

$ cd crawler

$ node crawler-taint.js --seedurl=https://google.com --maxurls=100 --headless=true --foxhoundpath=<optional-foxhound-executable-path>

The foxhoundpath is by default set to the following directory: crawler/foxhound/firefox which contains a binary named firefox.

Note: you need a build of foxhound to use this version. An Ubuntu build is included in the JAW-v3 release.

Puppeteer CLI

To start the crawler, do:

$ cd crawler

$ node crawler.js --seedurl=https://google.com --maxurls=100 --browser=chrome --headless=true

See here for more information.

Selenium CLI

To start the crawler, do:

$ cd crawler/hpg_crawler

$ vim docker-compose.yaml # set the websites you want to crawl here and save

$ docker-compose build

$ docker-compose up -d

Please refer to the documentation of the hpg_crawler here for more information.

Graph Construction

HPG Construction CLI

To generate an HPG for a given (set of) JavaScript file(s), do:

$ node engine/cli.js --lang=js --graphid=graph1 --input=/in/file1.js --input=/in/file2.js --output=$(pwd)/data/out/ --mode=csv

optional arguments:

--lang: language of the input program

--graphid: an identifier for the generated HPG

--input: path of the input program(s)

--output: path of the output HPG, must be i

--mode: determines the output format (csv or graphML)

HPG Import CLI

To import an HPG inside a neo4j graph database (docker instance), do:

$ python3 -m hpg_neo4j.hpg_import --rpath=<path-to-the-folder-of-the-csv-files> --id=<xyz> --nodes=<nodes.csv> --edges=<rels.csv>

$ python3 -m hpg_neo4j.hpg_import -h

usage: hpg_import.py [-h] [--rpath P] [--id I] [--nodes N] [--edges E]

This script imports a CSV of a property graph into a neo4j docker database.

optional arguments:

-h, --help show this help message and exit

--rpath P relative path to the folder containing the graph CSV files inside the `data` directory

--id I an identifier for the graph or docker container

--nodes N the name of the nodes csv file (default: nodes.csv)

--edges E the name of the relations csv file (default: rels.csv)

HPG Construction and Import CLI (v1)

In order to create a hybrid property graph for the output of the hpg_crawler and import it inside a local neo4j instance, you can also do:

$ python3 -m engine.api <path> --js=<program.js> --import=<bool> --hybrid=<bool> --reqs=<requests.out> --evts=<events.out> --cookies=<cookies.pkl> --html=<html_snapshot.html>

Specification of Parameters:

- <path>: absolute path to the folder containing the program files for analysis (must be under the engine/outputs folder).

- --js=<program.js>: name of the JavaScript program for analysis (default: js_program.js).

- --import=<bool>: whether the constructed property graph should be imported to an active neo4j database (default: true).

- --hybrid=<bool>: whether the hybrid mode is enabled (default: false). This implies that the tester wants to enrich the property graph by inputting files for any of the HTML snapshot, fired events, HTTP requests and cookies, as collected by the JAW crawler.

- --reqs=<requests.out>: for hybrid mode only, name of the file containing the sequence of observed network requests; pass the string false to exclude (default: request_logs_short.out).

- --evts=<events.out>: for hybrid mode only, name of the file containing the sequence of fired events; pass the string false to exclude (default: events.out).

- --cookies=<cookies.pkl>: for hybrid mode only, name of the file containing the cookies; pass the string false to exclude (default: cookies.pkl).

- --html=<html_snapshot.html>: for hybrid mode only, name of the file containing the DOM tree snapshot; pass the string false to exclude (default: html_rendered.html).
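When the v1 API is driven from a script, the parameters above can be assembled programmatically. The Python sketch below only builds the argument list; the helper name `engine_api_command`, its keyword names, and the example path are hypothetical, while the flag names and defaults are taken from the parameter specification.

```python
def engine_api_command(path, js="js_program.js", do_import=True, hybrid=False,
                       reqs="request_logs_short.out", evts="events.out",
                       cookies="cookies.pkl", html="html_rendered.html"):
    """Assemble the argument list for `python3 -m engine.api`."""
    cmd = [
        "python3", "-m", "engine.api", path,
        f"--js={js}",
        f"--import={str(do_import).lower()}",
        f"--hybrid={str(hybrid).lower()}",
    ]
    if hybrid:
        # Hybrid-only inputs; passing None excludes a file via the string "false".
        inputs = {"reqs": reqs, "evts": evts, "cookies": cookies, "html": html}
        for flag, value in inputs.items():
            cmd.append(f"--{flag}={value if value else 'false'}")
    return cmd


# Example path is a placeholder; the folder must live under engine/outputs.
print(" ".join(engine_api_command("/abs/path/engine/outputs/site1", hybrid=True, cookies=None)))
```

The resulting list can be passed to `subprocess.run` without a shell, which avoids quoting issues in file names.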

For more information, you can use the help CLI provided with the graph construction API:

$ python3 -m engine.api -h

Security Analysis

The constructed HPG can then be queried using Cypher or the NeoModel ORM.

Running Custom Graph traversals

You should place and run your queries in analyses/<ANALYSIS_NAME>.

Option 1: Using the NeoModel ORM (Deprecated)

You can use the NeoModel ORM to query the HPG. To write a query:

- (1) Check out the HPG data model and syntax tree.

- (2) Check out the ORM model for HPGs

- (3) See the example query file provided: example_query_orm.py in the analyses/example folder.

$ python3 -m analyses.example.example_query_orm

For more information, please see here.

Option 2: Using Cypher Queries

You can use Cypher to write custom queries. For this:

- (1) Check out the HPG data model and syntax tree.

- (2) See the example query file provided: example_query_cypher.py in the analyses/example folder.

$ python3 -m analyses.example.example_query_cypher

For more information, please see here.

Vulnerability Detection

This section describes how to configure and use JAW for vulnerability detection, and how to interpret the output. JAW contains, among others, self-contained queries for detecting client-side CSRF and DOM Clobbering.

Step 1. Enable the analysis component for the vulnerability class in the input config.yaml file:

request_hijacking:
  enabled: true
  # [...]
#
domclobbering:
  enabled: false
  # [...]
cs_csrf:
  enabled: false
  # [...]
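As a quick sanity check before launching the pipeline, one can list which vulnerability classes a config enables. The Python sketch below works on an already-parsed config written inline as a dict, so it needs no YAML library; the `enabled_classes` helper is hypothetical, and only the three keys and the `enabled` field come from the fragment in Step 1.

```python
# Parsed form of the Step 1 fragment, written inline for the sake of the example.
config = {
    "request_hijacking": {"enabled": True},
    "domclobbering": {"enabled": False},
    "cs_csrf": {"enabled": False},
}


def enabled_classes(cfg):
    """Return the names of top-level sections whose `enabled` flag is true."""
    return [
        name
        for name, section in cfg.items()
        if isinstance(section, dict) and section.get("enabled")
    ]


print(enabled_classes(config))  # ['request_hijacking']
```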

Step 2. Run an instance of the pipeline with:

$ python3 -m run_pipeline --conf=config.yaml

Hint. You can run multiple instances of the pipeline under different screens:

$ screen -dmS s1 bash -c 'python3 -m run_pipeline --conf=conf1.yaml; exec sh'

$ screen -dmS s2 bash -c 'python3 -m run_pipeline --conf=conf2.yaml; exec sh'

$ # [...]

To generate parallel configuration files automatically, you may use the generate_config.py script.
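If the configuration files already exist, the matching `screen` invocations can also be produced with a few lines of Python. This sketch only formats command strings in the style of the hint above; it is not a description of what generate_config.py does, and the `screen_commands` helper and its `prefix` parameter are assumptions.

```python
import shlex


def screen_commands(config_files, prefix="s"):
    """Build one detached `screen` command per pipeline config file."""
    commands = []
    for index, conf in enumerate(config_files, start=1):
        inner = f"python3 -m run_pipeline --conf={shlex.quote(conf)}; exec sh"
        commands.append(f"screen -dmS {prefix}{index} bash -c {shlex.quote(inner)}")
    return commands


for command in screen_commands(["conf1.yaml", "conf2.yaml"]):
    print(command)
```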

How to Interpret the Output of the Analysis?

The outputs will be stored in a file called sink.flows.out in the same folder as that of the input. For client-side CSRF, for example, JAW outputs one entry for each HTTP request detected, marking the set of semantic types (a.k.a. semantic tags or labels) associated with the elements constructing the request (i.e., the program slices). For example, an HTTP request marked with the semantic type ['WIN.LOC'] is forgeable through the window.location injection point. However, a request marked with ['NON-REACH'] is not forgeable.

An example output entry is shown below:

[*] Tags: ['WIN.LOC']

[*] NodeId: {'TopExpression': '86', 'CallExpression': '87', 'Argument': '94'}

[*] Location: 29

[*] Function: ajax

[*] Template: ajaxloc + "/bearer1234/"

[*] Top Expression: $.ajax({ xhrFields: { withCredentials: "true" }, url: ajaxloc + "/bearer1234/" })

1:['WIN.LOC'] variable=ajaxloc

0 (loc:6)- var ajaxloc = window.location.href

This entry shows that on line 29, there is a $.ajax call expression, and this call expression triggers an ajax request with the url template value of ajaxloc + "/bearer1234/", where the parameter ajaxloc is a program slice reading its value at line 6 from window.location.href, and is thus forgeable through ['WIN.LOC'].
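Entries of this shape are easy to post-process. The Python sketch below parses the `[*] Key: value` header lines of one entry and applies the rule stated above for ['NON-REACH']. It assumes each field sits on its own line with the `[*] ` prefix; the helpers `parse_entry` and `is_forgeable` are hypothetical, and treating every tag other than NON-REACH as forgeable is a simplification made for the example.

```python
import ast


def parse_entry(text):
    """Collect the `[*] Key: value` lines of one entry into a dict."""
    entry = {}
    for line in text.splitlines():
        line = line.strip()
        if line.startswith("[*] ") and ": " in line:
            key, value = line[4:].split(": ", 1)
            entry[key] = value
    # Tags is printed as a Python-style list literal, e.g. ['WIN.LOC'].
    entry["Tags"] = ast.literal_eval(entry.get("Tags", "[]"))
    return entry


def is_forgeable(entry):
    """Assumed rule: some tag is present and none of them is NON-REACH."""
    return bool(entry["Tags"]) and "NON-REACH" not in entry["Tags"]


sample = """\
[*] Tags: ['WIN.LOC']
[*] NodeId: {'TopExpression': '86', 'CallExpression': '87', 'Argument': '94'}
[*] Location: 29
[*] Function: ajax
[*] Template: ajaxloc + "/bearer1234/"
"""

entry = parse_entry(sample)
print(entry["Function"], entry["Location"], entry["Tags"], is_forgeable(entry))
```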

Test Web Application

To streamline the testing process for JAW and ensure that your setup is accurate, we provide a simple Node.js web application that you can test JAW with.

First, install the dependencies via:

$ cd tests/test-webapp

$ npm install

Then, run the application in a new screen:

$ screen -dmS jawwebapp bash -c 'PORT=6789 npm run devstart; exec sh'

For more information, visit our wiki page here. Below is a table of contents for quick access.

- The Web Crawler of JAW

- Data Model of Hybrid Property Graphs (HPGs)

- Graph Construction

- Graph Traversals

Pull requests are always welcome. This project is intended to be a safe, welcoming space, and contributors are expected to adhere to the contributor code of conduct.

Academic Publication

If you use JAW for academic research, we encourage you to cite the following paper:

@inproceedings{JAW,

title = {JAW: Studying Client-side CSRF with Hybrid Property Graphs and Declarative Traversals},

author= {Soheil Khodayari and Giancarlo Pellegrino},

booktitle = {30th {USENIX} Security Symposium ({USENIX} Security 21)},

year = {2021},

address = {Vancouver, B.C.},

publisher = {{USENIX} Association},

}

Acknowledgements

JAW has come a long way and we want to give our contributors a well-deserved shoutout here!