2024-6-20 08:42:33 Author: hackernoon.com

Authors:

(1) Xiaohan Ding, Department of Computer Science, Virginia Tech, (e-mail: [email protected]);

(2) Mike Horning, Department of Communication, Virginia Tech, (e-mail: [email protected]);

(3) Eugenia H. Rho, Department of Computer Science, Virginia Tech, (e-mail: [email protected]).

Table of Links

Study 1: Evolution of Semantic Polarity in Broadcast Media Language (2010-2020)

Study 2: Words that Characterize Semantic Polarity between Fox News & CNN in 2020

Discussion and Ethics Statement

NLP researchers studying diachronic semantic shifts have used word embeddings from language models to track how the meaning of a given word changes over time (Kutuzov et al. 2018). In Study 1, we adapt methodological intuitions from this prior work to measure how semantic polarization (the semantic distance between how two entities use an identical word in context) evolves over time. While scholars have examined online polarization to understand partisan framing and public discourse around current events, our work is the first to quantify and capture the evolution of semantic polarization in broadcast media language.

Dataset

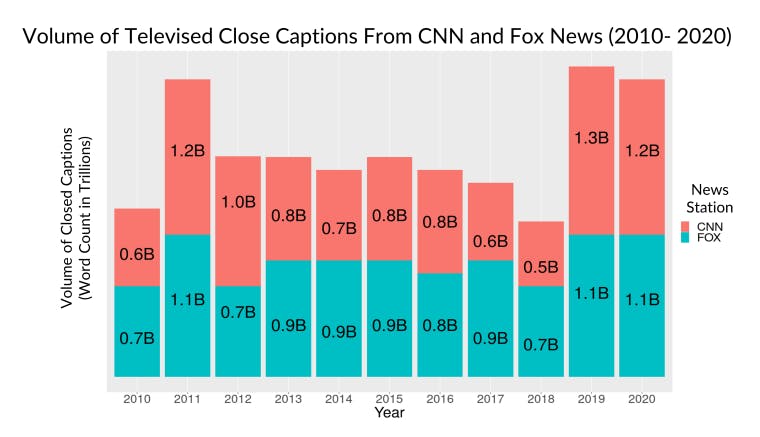

Our data consists of transcribed closed captions from news shows broadcast 24/7 by CNN and Fox News from January 1, 2010 to December 31, 2020, provided by the Internet Archive and the Stanford Cable TV News Analyzer. We computationally extracted the closed captions for each televised segment from a total of 181K SRT (SubRip Subtitle) files, comprising 23.8 million speaker turns or 297 million words spoken across the decade (Figure 1). To focus our analysis on how socially important yet politically controversial topics were discussed by the two major news networks, we narrowed our sample to speaker turns containing keywords associated with six politically contentious topics: racism, Black Lives Matter, police, immigration, climate change, and health care (Table 1). We extracted all sentences containing one of the nine topical keywords, yielding a final corpus of 2.1 million speaker turns or 19.4 million words. All closed captions pertaining to commercials were removed using a matching search algorithm (Tuba, Akashe, and Joshi 2020).
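
The caption extraction and keyword filtering described above can be sketched in Python as follows. This is a minimal, hypothetical sketch rather than the authors' pipeline: the SRT cue layout (index line, timecode line, caption text) is the standard SubRip format, but splitting speaker turns on the `>>` marker (a common U.S. closed-captioning convention) and the case-insensitive, whole-word keyword matching are assumptions. The function names `parse_srt`, `speaker_turns`, `matching_keywords`, and `filter_by_keywords` are illustrative, and commercial removal is not shown.

```python
import re

# The nine topical keywords listed in the Results. Case-insensitive,
# whole-word matching is an assumption, not the authors' documented rule.
KEYWORDS = [
    "racist", "racism", "police", "blacklivesmatter", "immigrant",
    "immigration", "climate change", "global warming", "health care",
]

# One regex per keyword; \s+ lets multi-word keywords span extra spaces
# or a line break inside the caption text.
KEYWORD_PATTERNS = {
    kw: re.compile(r"\b" + r"\s+".join(map(re.escape, kw.split())) + r"\b",
                   re.IGNORECASE)
    for kw in KEYWORDS
}

# Standard SubRip timecode line, e.g. "00:00:01,000 --> 00:00:03,000".
TIMECODE = re.compile(r"\d{2}:\d{2}:\d{2},\d{3}\s*-->\s*\d{2}:\d{2}:\d{2},\d{3}")


def parse_srt(srt_text: str) -> list[str]:
    """Return the caption text of each SRT cue, one string per cue."""
    cues = []
    for block in re.split(r"\n\s*\n", srt_text.strip()):
        lines = [ln.strip() for ln in block.splitlines() if ln.strip()]
        # Drop the leading cue index and the timecode line; keep the text.
        if lines and lines[0].isdigit():
            lines = lines[1:]
        if lines and TIMECODE.search(lines[0]):
            lines = lines[1:]
        if lines:
            cues.append(" ".join(lines))
    return cues


def speaker_turns(cues: list[str]) -> list[str]:
    """Approximate speaker turns by splitting on the ">>" speaker-change
    marker (an assumed captioning convention, not confirmed by the source)."""
    joined = " ".join(cues)
    return [turn.strip() for turn in joined.split(">>") if turn.strip()]


def matching_keywords(text: str) -> list[str]:
    """Return the topical keywords that occur in a piece of caption text."""
    return [kw for kw, pattern in KEYWORD_PATTERNS.items() if pattern.search(text)]


def filter_by_keywords(turns: list[str]) -> list[str]:
    """Keep only the speaker turns that mention at least one keyword."""
    return [turn for turn in turns if matching_keywords(turn)]
```

Note that the single-token keyword `blacklivesmatter` only matches that exact spelling; a real pipeline would likely also need to normalize the spoken phrase "black lives matter", which the source does not describe.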

Method and Analysis

With recent advances in deep learning, researchers have used the contextualized embeddings generated by transformer-based language models to detect semantic change in words over time (Kutuzov et al. 2018). Similarly, we use contextual word representations from BERT (Bidirectional Encoder Representations from Transformers) (Devlin et al. 2019) to calculate the semantic polarity in how CNN and Fox News use topical keywords over time.

First, we use BERT to extract a set of contextual embeddings for each keyword from the model's hidden layers for every year from 2010 to 2020, in order to quantify how much the embeddings of a given topical keyword from CNN differ semantically from those from Fox News over the 11-year period. We extracted all contextual word representations from our corpus of 2.1 million speaker turns. Then, for every occurrence of the nine topical keywords in the data, we calculated the cosine distance between each contextual word representation from CNN and each from Fox News. Cosine similarity between BERT embeddings is a standard measure in machine translation and other NLP tasks for comparing how tokens are used in context (Zhang et al. 2020). We calculate the semantic polarization between how CNN and Fox News use a topical keyword in a given year as follows.
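
One way to turn BERT's hidden layers into a single vector per keyword occurrence is sketched below. The source does not say which layers were used or how sub-word pieces and multi-word keywords such as "health care" were handled, so averaging the last four layers and then averaging over the keyword's token span are assumptions. The input `hidden_states[l][t]` is assumed to be nested Python lists for a single sentence (for example, the per-layer outputs a Hugging Face BERT model returns with `output_hidden_states=True`, with the batch dimension removed); the function names are hypothetical.

```python
from typing import Sequence

Vector = list[float]


def pool_layers(hidden_states: Sequence[Sequence[Vector]],
                layers: Sequence[int] = (-4, -3, -2, -1)) -> list[Vector]:
    """Average each token's vector over the selected hidden layers.

    hidden_states[l][t] is the vector for token t at layer l. Averaging the
    last four layers is an assumption; the source only says "hidden layers".
    """
    chosen = [hidden_states[l] for l in layers]
    n_tokens, dim = len(chosen[0]), len(chosen[0][0])
    return [
        [sum(layer[t][d] for layer in chosen) / len(chosen) for d in range(dim)]
        for t in range(n_tokens)
    ]


def keyword_vectors(tokens: Sequence[str], token_vectors: Sequence[Vector],
                    keyword_tokens: Sequence[str]) -> list[Vector]:
    """Return one vector per occurrence of keyword_tokens in tokens.

    Sub-word pieces (e.g. "##" continuations) and multi-word keywords span
    several tokens; their vectors are averaged into one (assumed strategy).
    """
    k = len(keyword_tokens)
    if k == 0:
        raise ValueError("keyword_tokens must not be empty")
    occurrences = []
    for i in range(len(tokens) - k + 1):
        if list(tokens[i:i + k]) == list(keyword_tokens):
            span = token_vectors[i:i + k]
            dim = len(span[0])
            occurrences.append([sum(v[d] for v in span) / k for d in range(dim)])
    return occurrences
```

Each returned vector then plays the role of one 768-dimensional keyword embedding for a given network and year.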

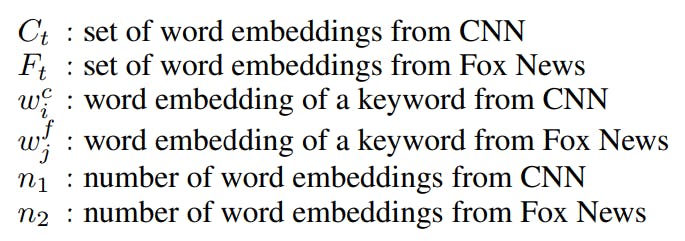

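Given the set of word embeddings for a keyword in a given year t from CNN:

$$C_t = \{\mathbf{c}_1, \mathbf{c}_2, \ldots, \mathbf{c}_m\} \tag{1}$$

and the set of word embeddings for the same keyword in year t from Fox News:

$$F_t = \{\mathbf{f}_1, \mathbf{f}_2, \ldots, \mathbf{f}_n\} \tag{2}$$

we define the semantic polarity (SP) between CNN and Fox News as:

$$SP_t = \frac{1}{mn} \sum_{i=1}^{m} \sum_{j=1}^{n} d(\mathbf{c}_i, \mathbf{f}_j) \tag{3}$$

where m and n are the numbers of occurrences of the keyword in the CNN and Fox News captions for year t, and d is the cosine distance:

$$d(\mathbf{c}_i, \mathbf{f}_j) = 1 - \frac{\mathbf{c}_i \cdot \mathbf{f}_j}{\lVert \mathbf{c}_i \rVert \, \lVert \mathbf{f}_j \rVert}$$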

In Figure 2, we visualize our methodological framework to illustrate how we derive the semantic polarization (SP) score for how CNN and Fox News used the keyword “racist” in 2010. First, we extract all contextual word embeddings (each a 768×1 tensor) associated with every occurrence of the word “racist” in our corpus of speaker turns from Fox News and CNN in 2010. Next, we calculate the cosine distance between the tensors associated with “racist” in the CNN corpus and those from the Fox News corpus. Finally, we use Equation 3 to average all cosine distance values, obtaining the semantic polarization score, i.e., the semantic distance between how Fox News and CNN used the word “racist” in 2010.
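
The pairwise averaging described in this walkthrough can be sketched in plain Python as below. It assumes each embedding is a list of floats and each input record is a hypothetical `(network, year, vector)` tuple with network labels `"CNN"` and `"FOX"`; it illustrates the computation and is not the authors' code.

```python
import math
from collections import defaultdict


def cosine_distance(u, v):
    """1 minus the cosine similarity of two equal-length, non-zero vectors."""
    dot = sum(a * b for a, b in zip(u, v))
    norm_u = math.sqrt(sum(a * a for a in u))
    norm_v = math.sqrt(sum(b * b for b in v))
    return 1.0 - dot / (norm_u * norm_v)


def semantic_polarity(cnn_vectors, fox_vectors):
    """Average cosine distance over every (CNN, Fox News) pair of embeddings."""
    if not cnn_vectors or not fox_vectors:
        raise ValueError("need at least one embedding from each network")
    total = sum(cosine_distance(c, f) for c in cnn_vectors for f in fox_vectors)
    return total / (len(cnn_vectors) * len(fox_vectors))


def sp_by_year(records):
    """Group (network, year, vector) records for one keyword by year and
    return {year: SP}, skipping years that lack either network."""
    grouped = defaultdict(lambda: {"CNN": [], "FOX": []})
    for network, year, vector in records:
        grouped[year][network].append(vector)
    return {
        year: semantic_polarity(g["CNN"], g["FOX"])
        for year, g in sorted(grouped.items())
        if g["CNN"] and g["FOX"]
    }
```

Because the dot product is bilinear, the same score equals 1 minus the dot product of the mean unit-normalized CNN vector and the mean unit-normalized Fox News vector, which avoids the m × n pairwise loop for frequent keywords such as "police".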

Results

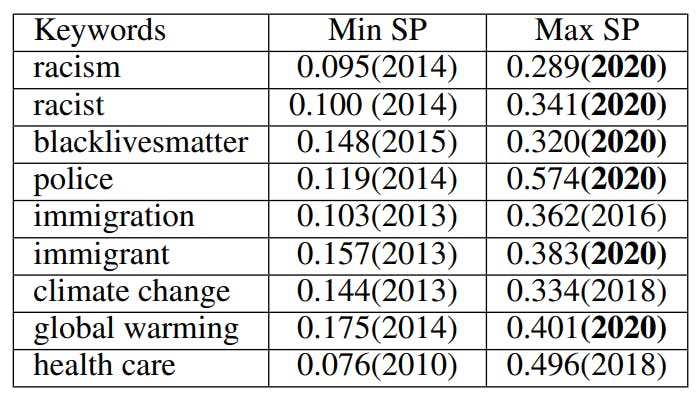

Between 2010 and 2020, semantic polarity between America’s two largest TV news networks increased, with a significant upward trend beginning in 2015 and 2016 (Figure 3). Over the past decade, CNN and Fox News became increasingly semantically divergent in how they used the topical keywords “racist”, “racism”, “police”, “blacklivesmatter”, “immigrant”, “immigration”, “climate change”, “global warming”, and “health care” in their broadcast language. Although semantic polarity generally dips around 2013-14 for all keywords except “health care”, starting in 2016 it rises sharply, increasing by an average of 112% per year across all keywords. These results corroborate findings from a recent study of the airtime given to partisan actors on CNN and Fox News, which shows that the two networks became more polarized in terms of visibility bias, particularly following the 2016 election (Kim, Lelkes, and McCrain 2022).
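
The "112% per year on average" figure can be read in more than one way. One plausible reading, sketched here, is the mean of year-over-year percent changes in SP, pooled across keywords, counting only changes whose base year is 2016 or later. This reading and the function name `mean_yoy_percent_change` are assumptions, and any values passed to it in examples are toy numbers, not results from the study.

```python
def mean_yoy_percent_change(sp_by_keyword: dict[str, dict[int, float]],
                            from_year: int) -> float:
    """Mean year-over-year % change in SP, pooled across keywords.

    Only changes between consecutive years whose base year is >= from_year
    are counted (an assumed reading of "starting in 2016").
    """
    changes = []
    for series in sp_by_keyword.values():
        years = sorted(series)
        for prev, year in zip(years, years[1:]):
            if prev >= from_year and year == prev + 1:
                changes.append(100.0 * (series[year] - series[prev]) / series[prev])
    if not changes:
        raise ValueError("no year-over-year changes in range")
    return sum(changes) / len(changes)
```

For example, a toy series that goes 1.0, 2.0, 3.0 from 2016 to 2018 yields changes of 100% and 50%, averaging 75%.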

Further, the highest peaks generally occur in 2020 for the terms “racism”, “racist”, “blacklivesmatter”, “police”, “immigrant”, and “global warming” (Table 2). Specifically, semantic polarity is highest for the keyword “police” in 2020, indicating that, across the nine keywords, CNN and Fox News were most polarized in how they used the word “police” in their news programs in 2020.
