2024-06-29 20:00:19 Author: hackernoon.com

Author:

(1) Mohammad AL-Smad, Qatar University, Qatar (e-mail: [email protected]).

5. Summary

The integration of generative AI models in education offers a wide range of potential benefits, including improved productivity, enhanced creativity, personalized learning, timely feedback and support, and task automation (Farrokhnia et al., 2023; Cotton et al., 2023). The advantages of using generative AI models in education are summarised in Table 1. However, the adoption of generative AI models in education raises challenges that must be tackled to ensure the safe and responsible use of such technologies (Su & Yang, 2023). These challenges are summarised in Table 2.

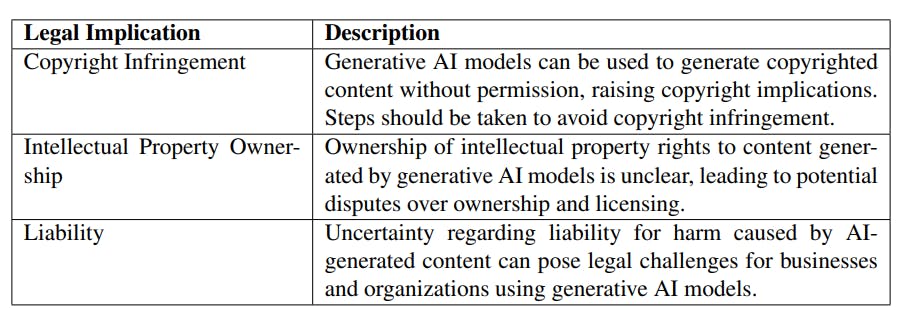

The findings of this survey underscore the need for further research, regulatory policies, and pedagogical guidance to navigate issues related to academic integrity and equity in the era of AI-enhanced learning (Chaudhry et al., 2023; Yan, 2023; Cotton et al., 2023). The irresponsible implementation of generative AI models in education has ethical and legal consequences (Chaudhry et al., 2023). Table 3 discusses potential ethical considerations for the adoption of generative AI models in education, whereas Table 4 focuses on the legal implications.

Governments around the world are developing regulations to address the ethical and legal challenges posed by generative AI models. For example, the "European Union Artificial Intelligence Act (EU AI Act)" was proposed to promote the development and use of trustworthy AI while also mitigating the risks associated with AI (Madiega, 2021). The Act does this by establishing a risk-based approach to AI regulation, in which AI systems are classified into four risk categories: "unacceptable risk, high risk, limited risk, and minimal risk". The EU AI Act includes the following provisions: (a) Risk Assessment: the Act mandates the assessment of risks associated with AI systems, ensuring that potential harms are identified and mitigated; (b) Transparency: transparency requirements are specified to enhance the explainability of AI systems and make their decision-making processes more understandable, and the EU AI Act specifies the following three transparency requirements for adopting generative AI technology:

• Disclosure of AI Generation: Generative AI systems must disclose that content was produced by artificial intelligence rather than by a human. Such disclosure makes the content creation process clear to users, fostering understanding and trust.

• Mitigation of Illicit Content Generation: Generative AI models must be designed and implemented with measures that prevent them from generating illegal content. These measures, which include content moderation and safety mechanisms that curb the production of harmful, offensive, or unlawful material, are integral to ethical and legal compliance.

• Publication of Summaries of Copyrighted Data Used in Training: Training AI models on copyrighted data raises intellectual property concerns. To address these concerns and demonstrate transparency, developers are expected to publish summaries or descriptions of the copyrighted data sources used during training. This step demonstrates compliance with copyright law and makes the model's training data more transparent.

and (c) Human Oversight: the AI Act emphasizes the importance of human oversight in AI systems to ensure responsible and ethical use. However, the current regulatory landscape has some limitations (Schuett, 2023). For example, the EU AI Act does not explicitly address the hallucination of generative AI models. Additionally, the EU AI Act focuses on the obligations of AI developers and users rather than on the rights of individuals who may be affected by AI systems (Enqvist, 2023).

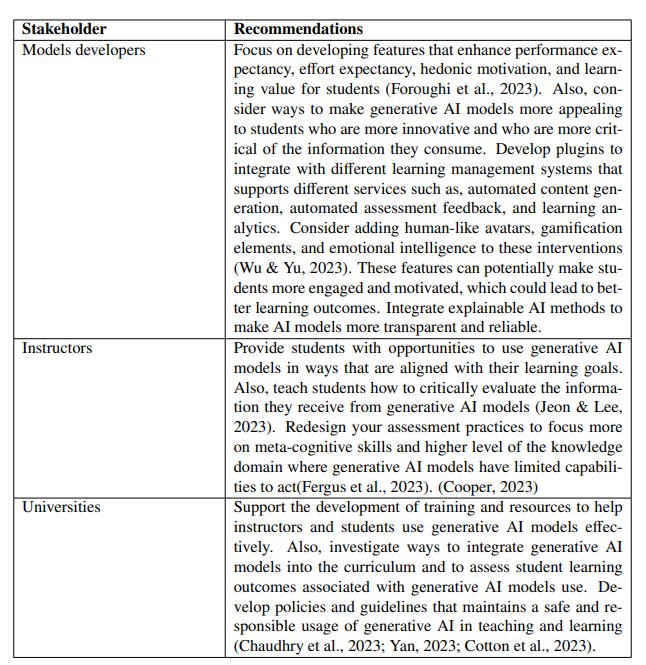

Findings from the literature also provide valuable insights into the determinants of the intention to use generative AI models for educational purposes. These findings have important implications for model developers, instructors, and universities as they work to accelerate the adoption of these models in educational contexts. Table 5 presents a set of recommendations to help the different stakeholders adopt such technologies safely and responsibly in education.

The adoption of generative AI models in education requires a plan for building trust (Choudhury & Shamszare, 2023). In addition to addressing the aforementioned challenges (see Table 2), several steps can be taken to build trust in generative AI: (1) Transparency: developers should be transparent about the limitations, biases, data sources, and potential risks of these models. (2) Explainability: developers should employ explainable AI methods to show how their models generate outputs (Došilović et al., 2018). (3) Human-in-the-loop validation: human experts should be involved in evaluating AI-generated content to identify and rectify errors, which also helps address other problems such as bias and hallucination. (4) Accountability: developers and users of generative AI should be held accountable for the consequences of their actions.
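The human-in-the-loop validation step can be pictured as a review gate: AI-generated content is held until a human expert approves, corrects, or rejects it, and only reviewed content reaches learners. The Python below is a minimal sketch under that assumption. The names `GeneratedItem`, `Status`, `review`, and `releasable` are hypothetical illustrations, not part of the surveyed work or of any particular platform.

```python
from dataclasses import dataclass
from enum import Enum
from typing import List, Optional


class Status(Enum):
    PENDING = "pending"      # not yet seen by a human reviewer
    APPROVED = "approved"    # released unchanged
    CORRECTED = "corrected"  # released after a human fixed an error
    REJECTED = "rejected"    # withheld from learners


@dataclass
class GeneratedItem:
    """Hypothetical record of one AI-generated answer awaiting review."""
    prompt: str
    ai_output: str
    status: Status = Status.PENDING
    final_text: Optional[str] = None
    reviewer_note: str = ""


def review(item: GeneratedItem, approve: bool,
           correction: Optional[str] = None, note: str = "") -> GeneratedItem:
    """Record a human reviewer's decision; a correction takes precedence."""
    if correction is not None:
        # The reviewer rectified an error (e.g. a hallucinated fact).
        item.status = Status.CORRECTED
        item.final_text = correction
    elif approve:
        item.status = Status.APPROVED
        item.final_text = item.ai_output
    else:
        item.status = Status.REJECTED
        item.final_text = None
    item.reviewer_note = note
    return item


def releasable(items: List[GeneratedItem]) -> List[str]:
    """Return only content that a human has approved or corrected."""
    return [i.final_text for i in items
            if i.status in (Status.APPROVED, Status.CORRECTED)]


if __name__ == "__main__":
    items = [
        GeneratedItem("Define photosynthesis",
                      "Photosynthesis converts light energy into chemical energy."),
        GeneratedItem("Who wrote Hamlet?",
                      "Hamlet was written by Christopher Marlowe."),
        GeneratedItem("Summarise chapter 3", "(off-topic output)"),
    ]
    review(items[0], approve=True)
    review(items[1], approve=False,
           correction="Hamlet was written by William Shakespeare.",
           note="hallucinated author")
    review(items[2], approve=False, note="irrelevant")
    print(releasable(items))
```

Holding every item in a `PENDING` state by default is a deliberate choice in this sketch: nothing is released unless a reviewer acts, and the `reviewer_note` field leaves a trail that could support the accountability step.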

如有侵权请联系:admin#unsafe.sh