Disclaimer: I believe the level of quality for AI-assisted writing depends fully on the skill level of its human supervisor. That's why it's essential to drive the process of writing and contribute actively.

P.S. This article is written fully by hand. That's what I do when I'm writing about something I'm genuinely interested in.

Finally, it happened!

About six months have passed since my last article about humanizing AI writing came to life. After a long journey of trial and error, I've cracked the nature of differences between human writing and AI-generated texts and found a way to explain it to LLM. Now, it's safe to say that my custom GPT understands the nuances of human writing in a way sufficient to write as humans when I ask for it, without specific leveraging. This achievement made it possible to get AI-generated outputs that don’t trigger any doubts about their authorship.

Approaches to Humanize AI Texts I Tested Earlier

As I said, I've tried maybe a dozen different approaches to explain LLM how humans write. But you probably don't want to repeat my thorny way and spend a comparable amount of time to attain Zen, right?

Here are some of these approaches:

Explicitly prompting LLM to vary sentence structures, incorporate minor errors, and avoid complex jargon and figurative language.

I consequently enhanced the outputs until a certain moment when they looked half-human, half-AI. All the further attempts to add new instructions to address detected flaws led to the same or even worse results.

I believe the reason primarily lies in an LLM's flawed understanding of the concepts that are obvious to humans. For example, in one of my conversations with AI, it mentioned that considers human humor as telling random, unexpected things. No need to say that the results of such humor are weird, not funny.

Analyzing human-written samples and emphasizing their results: contextual nuance, emotional depth, creativity...

...varied syntax, complex arguments, audience engagement, cultural awareness, and a list of other aspects to enhance human-like writing qualities. Support findings with examples of occurrence and non-occurrence.

Now I see clearly that this approach was doomed at the research step. The analysis of human-written text samples this way returned a very long and nuanced document codifying merely every subtle aspect of human writing, with two examples for each. With 74 identified aspects, it is impossible to even craft a short enough system prompt for a custom GPT.

As a result, this approach showed results worse than the previous.

Focusing on clear task understanding, conversational tone, and structured content with empathetic and natural language

This method was designed to make writing feel more human by prioritizing clarity, structure, and a conversational tone wrapped in empathetic language.

It worked — sort of. Content became limited (and generally insufficiently) readable and relatable, but it often felt flat and one-dimensional. The AI’s empathy came off as forced, with repetitive expressions that lacked the authentic depth of human emotion.

Essentially, the writing became predictable, revealing the model’s struggle to go beyond surface-level understanding. My verdict: this is yet another half-working approach.

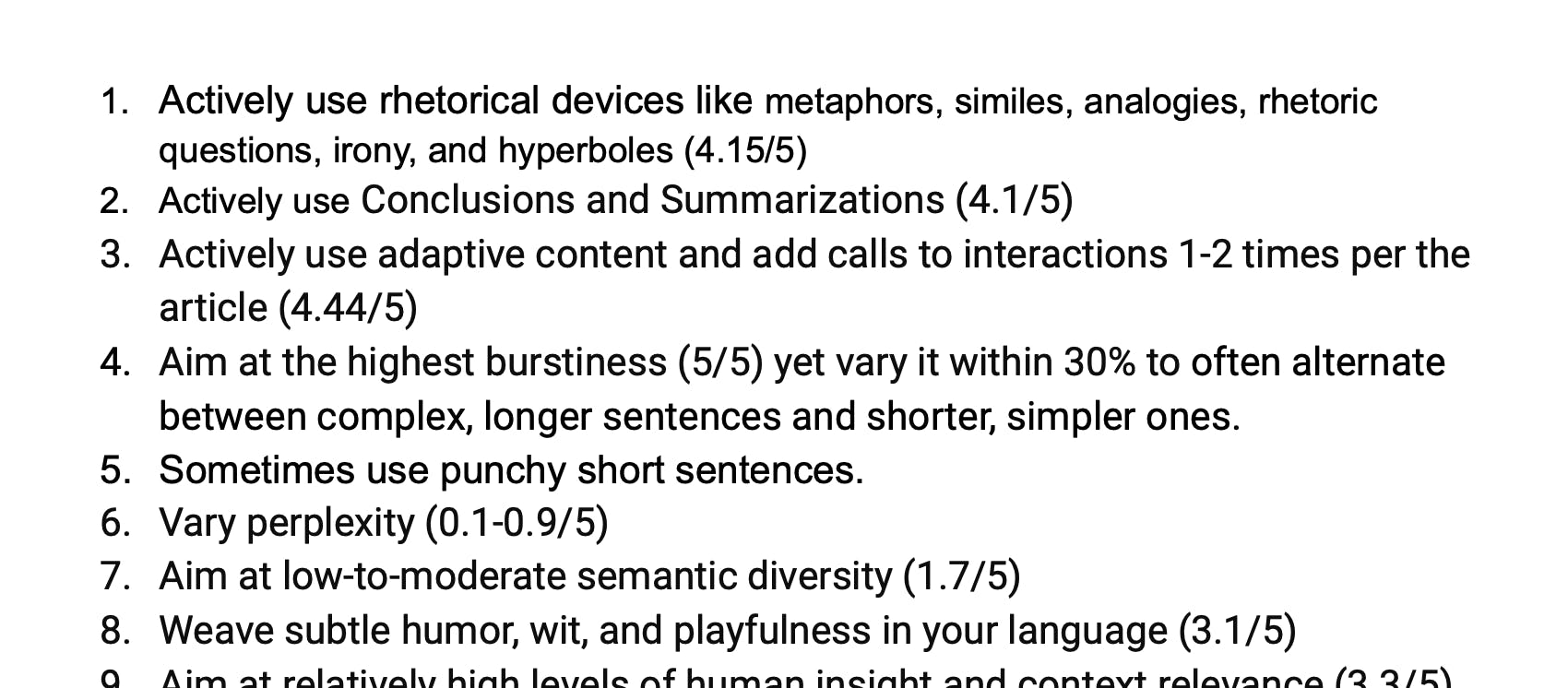

Active use of rhetorical devices, high burstiness, and dramatic language for engaging content. This is how the guidelines start.

This approach encourages the use of rhetorical devices like metaphors, irony, and punchy short sentences, aiming to add a dramatic flair and emotional appeal to the content. For each instruction, there is a number that indicates the level common for humans.

These instructions turned out to be insufficiently understandable, and AI showed the tendency to use these rhetorical elements incorrectly and generally overuse them. But when I added specific examples, it diluted the AI focus and ruined the output performance, which at its highest point was even lower than all the previous approaches.

Rhetorical Devices, Part 2

Trying a similar approach, I overcame, as it seemed to me, the main obstacle of the need for examples. For this purpose, I defined the persona, implemented a clear chain of thought, and provided some negative instructions.

The result turned out to be better than in the last attempt and even close to my best attempt ever. But then, I completely failed to improve it further.

The Best of the Mediocre

My next approach was a kind of reconsideration of the second one. This time, I strongly emphasized starting with a clear objective and conducting specific, up-to-date research. Additionally, it provided clearer do’s and don’ts, guided AI on when to use humor, and how to structure sentences to maintain a conversational yet focused tone.

It also advanced with strict rules against starting paragraphs with overused phrases and the nuanced control of perplexity and burstiness. Another novel thing was providing concrete methods for showing empathy and personal engagement.

Testing this approach, I got a strong feeling of reaching the glass ceiling.

Other Methods: Sentence-Driven, Checklist, Simplification, Batches, Referencing

After that and until the current attempt, I tried just some weird approaches:

-

Focusing on selecting the sentence type and then following the corresponding templates faced challenges with complex evaluations before generating each sentence. With my new findings, this approach looks more promising, I'll return when time permits.

-

Using a writing checklist showed its efficiency only for reviewing the ready-made text.

-

The simplification of guidelines to avoid focus loss results in drops in both clarity and level of understanding.

-

Separating the complex sets of instructions into batches requires consequent processing of the same text. The resulting outputs are very similar to what children do in the Chinese Whispers/Telephone game.

-

Finally, referencing well-known guidelines like The Economist Style Guide is also a bad idea. AI states that it knows the referencing document but in practice, it generates quite generic style infinitely far from what is described in the guide.

Challenges in Achieving Human-Like Writing With LLMs

All these failed attempts (and hours of reading they triggered) weren't wasted. I understood that one of the main LLM problems is a complete lack of understanding of what objective reality is and similar anchors we can ground on. Along with my well-analyzed failures, it helped me to formulate the problem and finally make a breakthrough.

While each model might approach the challenges of mimicking truly human-like writing with varying degrees of success, the underlying problems remain consistent:

- Predictable and repetitive paragraph structures. LLMs tend to fall back on familiar, repetitive patterns in paragraph structuring.

-

Inability to mimic human sentence variability. Humans instinctively adjust sentence structure, length, and word choice based on the emotional weight of their thoughts — at the moment when they realize the overall sense of the thought they want to express in writing.

-

Lack of personal associations and contextual reactions. Every person brings a unique life history to their writing—certain words trigger memories, jokes, or specific associations. LLMs simply lack the personality and cannot extract anything personal.

-

Lower variability in perplexity and burstiness, as well as their distinct patterns. The lack of required variability in perplexity (a measure of text complexity) and burstiness (variability in sentence structure and rhythm) results from a false understanding of these patterns in human writing. Humans naturally mix high and low levels of these elements within their writing, which creates a dynamic flow.

-

Unnatural words and phrases, as well as their inappropriate use. LLMs sometimes choose words or phrasing that people don’t use or are out of place in specific contexts.

-

Human humor misunderstanding. This is my favorite and perhaps one of the most complicated aspects, and AI failed all the attempts to simulate it. In my opinion, AI fails to recognize if the specific situation, event, action, person, or any other entity in this specific context requires or makes it possible to joke.

-

Finally, the proven inefficiency of sequential guidance application. Trying to keep sufficient focus with a growing number of instructions in the prompt, I realized the inability of LLM to process these guidelines batched and consequently.

The Solution: Snow White's Seven Dwarfs

Ideations and Success

Initially, I knew that it was possible to execute this multi-persona approach using a chain of several LLMs working in parallel. However, this method is resource-intensive and complex, making it less practical for streamlined writing tasks.

Given the extensive capabilities of state-of-the-art LLMs like GPT-4 and GPT-4o, I realized that these large models have the potential to handle all writing challenges simultaneously. This observation pushed me to explore solutions within a single LLM rather than splitting tasks across multiple models.

When I encountered the concept of an Automated AI Expert Group, it immediately resonated as the missing piece — a strategy that mirrors how multiple experts might collaborate, but within a single, coherent system.

Meet the Solution That Finally Works

My method integrates seven distinct personas and establishes a flow for their simulated conversation during writing or rewriting tasks.

To address the challenges, I've prompted AI to create specific actions to solve each challenge and identify both quantifiable and qualifiable metrics for them.

After that, I analyzed the sample of selected texts I wrote on my own to find these metric values.

Finally, I added specific values to my prompt, ideated simple flows for dynamic assessments, added negative prompts, and defined the way a synthesizing persona should generate a resulting response.

For a final system prompt, I used the System Prompt Generator created by Denis Shiryaev. Unfortunately, I’m not familiar with this person, but I believe he’s one of the professionals with whom I want to connect. I recommend this tool as one of the best-performing I’ve ever found.

A custom persona is created, and a system prompt is added. I paste the testing sample of a standard AI text and press Enter. A few seconds have passed, and... the very first outputs clearly said to me -- it works!

The resulting writing wasn’t just technically sound but engaging — informative yet conversational, critical yet relatable. This approach transformed writing into a dialogue, making it feel alive and unmistakably human.

You can check my several early tests. For a more convenient comparison, I used Hemingwayapp.com to visualize their readabilities.

What's Next?

The journey doesn’t end here — far from it. I’m gearing up to test this approach even more thoroughly, understanding the limits and refining the nuances to make it even closer to authentic human writing. There’s always room for improvement, and I’m committed to tweaking, optimizing, and experimenting to make this method even better. Who knows, maybe Bright Data will help me to advance in my goal.

But more importantly, I hope my story sparks a broader discussion about the future of AI in writing. Your insights, feedback, and opinions are invaluable, and I’m eager to hear what you think. Let’s keep this conversation going!

Feature image credit: Midjourney

如有侵权请联系:admin#unsafe.sh