2024-10-14 21:00:17 Author: securityboulevard.com


Tiexin Guo, OS Developer @Ubuntu, CNCF ambassador

As Kubernetes (K8s) becomes a mainstream choice for containerized workloads, handling deployments in K8s becomes increasingly important. There are many ways to deploy applications in K8s, for example:

- K8s resource definition in YAML files

- Kustomize: a K8s-native and template-free way to customize apps

- Helm charts: a package manager for K8s

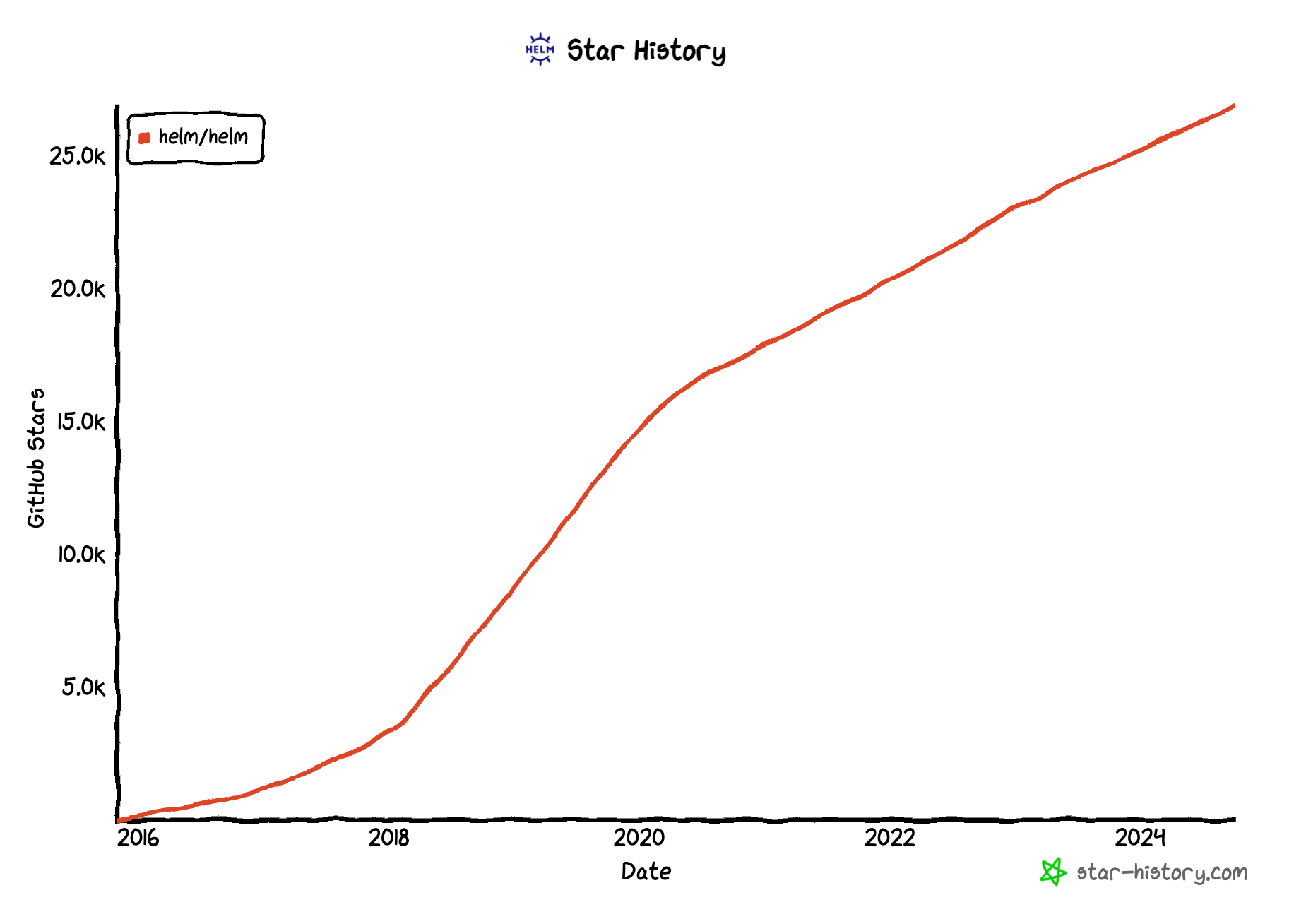

Helm is one of the most popular choices (if not the most popular) in the list because Helm templates are highly flexible and customizable. Helm also provides a simple package format and an easy-to-use CLI tool.

Although the Helm CLI is simple and straightforward, in the modern (read: automated) software development life cycle (SDLC), with DevOps and DevSecOps practices in mind, we don’t want to run Helm CLI commands manually to deploy apps; we want automation.

So, in this blog, let’s examine a state-of-the-art, secure, and production-ready integration between Helm and continuous deployment (CD) tools.

Before we proceed, let’s examine an example of how you might deploy stuff with Helm.

1 – Using Helm with Continuous Integration (CI): A Real-World Example

Not long ago (2022/2023), I was working on the SRE team of an established startup, managing dozens of microservices. Back then, the CI/CD tool of choice was GitHub Actions (which was good), and deployments were managed using Helm (which was also good). Although the setup looked great (at least from a tooling standpoint), the deployment process was less so. Allow me to expand:

- The CI workflow needed access to the K8s clusters where the deployments would happen. To solve this, custom runners pre-configured with access to K8s were used specifically for deployment-related jobs.

- The CI workflow was essentially a script running Helm commands, except it wasn’t a script but a workflow defined in YAML.

- This meant the deployment logic was baked into the CI workflows, which triggered Helm commands with command-line parameters whose values (like secrets and versions) were fetched by other steps.

- To fetch secrets securely, a custom script was required to read from a secret manager before deployment and set the values on the fly.

- To avoid copy-pasting the workflow across multiple microservices, a central workflow was defined with parameters so that each microservice’s workflow could dispatch it (if you are familiar with GitHub Actions, you probably know what I am talking about, and yes, you are right: `workflow_dispatch`).

You might not be using GitHub Actions in your team, but the above setup probably looks familiar to basically everybody, because no matter which CI tool you use (be it Jenkins, GitHub Actions, or whatever takes your fancy), the problems that need to be solved are essentially the same: handling access to clusters, running custom Helm commands with values to deploy multiple microservices, and handling secrets and versions securely and effectively, all in a human-readable, don’t-repeat-yourself, maintainable way.

Next, let’s look at an alternative way that is much more maintainable.

2 – A Very Short Introduction to GitOps

GitHub Actions (like many other CI tools) is a great CI tool that excels at interacting with code repositories and running tests (most likely in an ephemeral environment). However, even great CI tools aren’t designed with Helm deployments in mind. To deploy apps with Helm, the workflow needs to access production K8s clusters, fetch secrets/configurations/versions, and run customized deployment commands, none of which are CI tools’ strongest suit.

To make deployments with Helm great again, we need the right tool for the job, and GitOps comes to the rescue.


In short, GitOps is the practice of using Git repos as the single source of truth to declaratively manage apps and deployments, which are triggered automatically by events happening in the repos.

3 – Argo CD: GitOps for K8s

One of the most popular GitOps tools out there is Argo CD, a declarative, GitOps continuous delivery tool for Kubernetes.

Fun fact: Argo is the name of a ship in Greek mythology. Since Kubernetes is the Greek word for “helmsman” and Argo CD does continuous deployment to Kubernetes clusters, the nautical naming theme fits quite well. Clever name.

With Argo CD, you can define an app with YAML containing information on the code repo and Helm chart, and from there, Argo CD takes over and handles the deployment and lifecycle of the app automatically.

3.1 Prepare a K8s Cluster

To demonstrate the power of Argo CD, let’s create a test environment. I’m using minikube to create a local K8s cluster; after installation, a single command, `minikube start`, creates the cluster (for macOS users, the easiest way to install minikube is with Homebrew: `brew install minikube`).

You can use other K8s clusters for this demo; for example, if you have access to an AWS account, the easiest way to create an AWS EKS cluster is with eksctl: `eksctl create cluster`.

3.2 Install Argo CD

Then, let’s install Argo CD using its official install manifest:

```shell
kubectl create namespace argocd
kubectl apply -n argocd -f https://raw.githubusercontent.com/argoproj/argo-cd/stable/manifests/install.yaml
```

To use Argo CD more effectively, we can also install its CLI tool. For macOS users, simply run `brew install argocd`.

For other operating systems, you can download the Argo CD CLI from the latest release page. For more details, please refer to the official doc here.

Get the Argo CD admin’s initial password by running:

```shell
argocd admin initial-password -n argocd
```

Run the following command in another terminal tab to forward Argo CD server’s port to localhost:8080:

```shell
kubectl port-forward svc/argocd-server -n argocd 8080:443
```

Then open localhost:8080 in your browser, and you should be able to log in with the `admin` user and the initial password.

Alternatively, we can also log in using the CLI:

```shell
argocd login localhost:8080
```

3.3 The Sample App

For demo purposes, I have created a simple hello world app (see the source code here) and built some Docker images (see the tag list here). I have also created a simple Helm chart for it here, which contains a Deployment and a Service.

As you can see from the Chart.yaml file:

```yaml
apiVersion: v2
name: go-hello-http
description: A Helm chart for Kubernetes
type: application
version: 0.1.0
appVersion: 0.0.2
```

The `appVersion` is set to 0.0.2, which is the image tag we will deploy. Since the philosophy of GitOps is to use Git as the single source of truth, you can simply look at this file to know which version is deployed. To deploy another version, create a new commit changing this field; simple as that.
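How does editing `appVersion` change the running image? That depends on the chart’s Deployment template, which isn’t reproduced here. Assuming it follows the `helm create` scaffold, where the image is rendered as `{{ .Values.image.repository }}:{{ .Values.image.tag | default .Chart.AppVersion }}`, the lookup can be sketched in Python as below. The `resolve_image` helper and the values shown are hypothetical; the real resolution happens inside Helm’s Go templates.

```python
def resolve_image(chart: dict, values: dict) -> str:
    """Resolve the container image the way the `helm create` scaffold does:
    a missing or empty image.tag falls back to the chart's appVersion."""
    image = values.get("image", {})
    repository = image["repository"]
    # Sprig's `default` treats "" and a missing key alike; `or` mirrors that here.
    tag = image.get("tag") or chart["appVersion"]
    return f"{repository}:{tag}"


# Hypothetical Chart.yaml and values.yaml contents (the chart's real values are not shown).
chart = {"name": "go-hello-http", "version": "0.1.0", "appVersion": "0.0.2"}
values = {"image": {"repository": "ironcore864/go-hello-http", "tag": ""}}

print(resolve_image(chart, values))  # ironcore864/go-hello-http:0.0.2
```

Leaving `image.tag` empty is what lets a one-line `appVersion` commit drive the deployed version.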

3.4 Deploy the Sample App

To deploy the app, simply create an Argo CD application, letting Argo CD know where the single source of truth is and where to deploy it. This can be done in several ways, for example, in the web UI, with the Argo CD CLI, or by defining it in a YAML manifest. Here we use the last method since it’s the most declarative. Run:

```shell
cat <<EOF | kubectl apply -f -
apiVersion: argoproj.io/v1alpha1
kind: Application
metadata:
  name: hello-world
  namespace: argocd
spec:
  project: default
  source:
    repoURL: https://github.com/ironcore864/gitops.git
    targetRevision: hello-world
    path: go-hello-http
  destination:
    server: https://kubernetes.default.svc
    namespace: default
  syncPolicy:
    automated:
      prune: true
      selfHeal: true
EOF
```

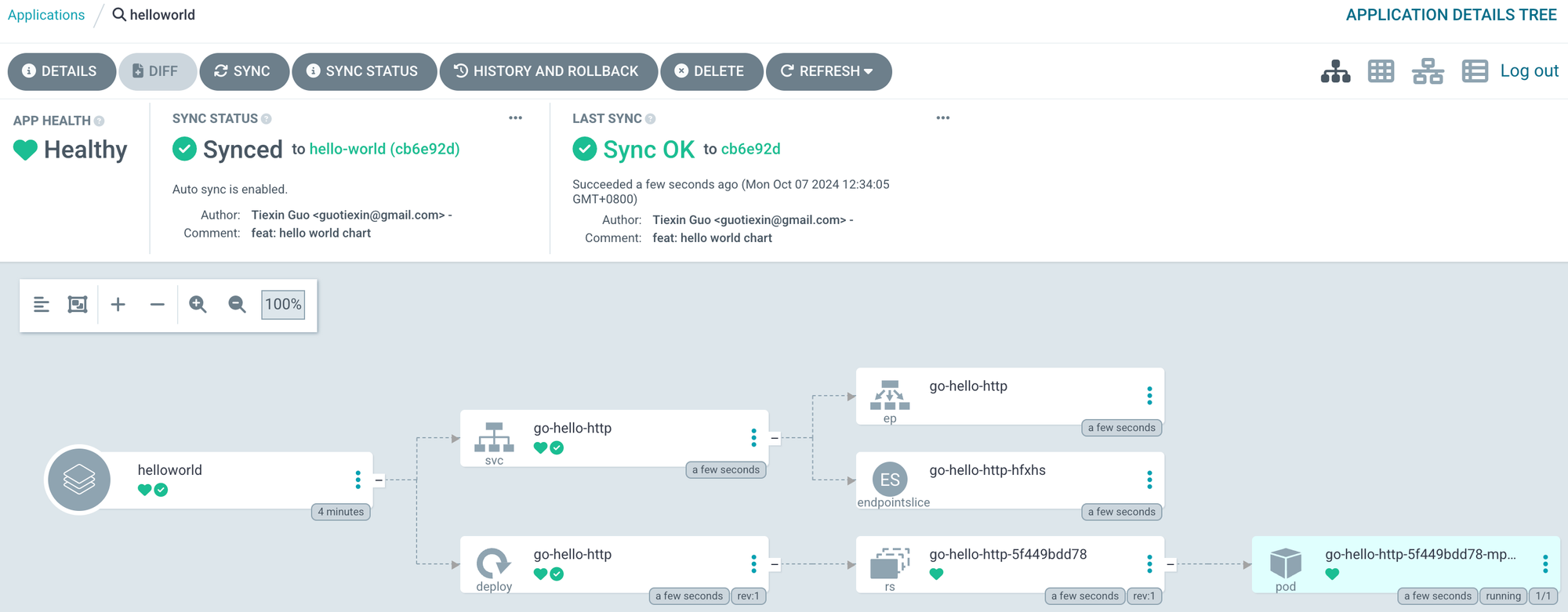

Then, Argo CD will synchronize the app with the Git repo defined in the YAML above and deploy it to the `destination` cluster and namespace.

3.5 Sanity Check

You can verify the progress so far in the Argo CD server UI.

Alternatively, we can port-forward the deployed app and try to access it. Run the following command in another terminal tab:

```shell
kubectl port-forward svc/go-hello-http -n default 8000:80
```

Then open localhost:8000, and you should see a response like `Hello, world! Version 2`.

Under the hood, Argo CD watches the Git repo (the single source of truth), finds the Helm chart, and synchronizes it to the K8s cluster. The chart, the `appVersion`, and everything else are defined in the Git repo, which is the essence of GitOps.
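The sync behavior described above can be pictured as a diff between the desired state rendered from Git and the live state in the cluster. The following is a deliberately simplified Python model, not Argo CD’s actual reconciliation code: resources are keyed by a hypothetical `Kind/name` string with flat specs, and the `prune` and `self_heal` flags loosely mirror `syncPolicy.automated.prune` and `selfHeal` in the Application above.

```python
Resources = dict[str, dict[str, str]]  # "Kind/name" -> simplified spec


def plan_sync(desired: Resources, live: Resources, *, new_commit: bool,
              prune: bool, self_heal: bool) -> list[tuple[str, str]]:
    """Return the (action, resource) pairs an automated sync would perform.

    A simplified model of an automated sync policy, not Argo CD's real logic.
    """
    out_of_sync = sorted(n for n in desired if live.get(n) != desired[n])
    orphans = sorted(n for n in live if n not in desired)
    if not out_of_sync and not orphans:
        return []  # Synced: the cluster already matches Git.
    # Without selfHeal, manual changes in the cluster do not trigger a sync;
    # only a new commit in Git does.
    if not new_commit and not self_heal:
        return []
    actions = [("create" if n not in live else "update", n) for n in out_of_sync]
    # Without prune, resources removed from Git are left running in the cluster.
    if prune:
        actions += [("delete", n) for n in orphans]
    return actions


desired = {
    "Deployment/go-hello-http": {"image": "ironcore864/go-hello-http:0.0.2"},
    "Service/go-hello-http": {"port": "80"},
}
live = {
    "Deployment/go-hello-http": {"image": "ironcore864/go-hello-http:0.0.1"},
    "ConfigMap/leftover": {"key": "value"},
}
print(plan_sync(desired, live, new_commit=True, prune=True, self_heal=True))
```

In this model, `prune` decides whether resources deleted from Git are removed from the cluster, and `self_heal` decides whether manual drift is reverted even when nothing new has been committed.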

4 – Handle Helm Secrets Securely with GitOps/CD

Now that we’ve successfully deployed an app with GitOps and without any CI workflow whatsoever, using only a single declarative YAML file, let’s handle the most important stuff: security/secrets in Helm charts.

In the example mentioned in Section 1, handling secrets is tricky because:

- Secrets and sensitive information can’t be part of the Helm chart.

- The CI workflow needs access to a secret manager.

- The CI workflow needs to read secret values and pass them to Helm to override default/empty values, using either the `--values` flag with a file or the `--set` flag on the command line. Neither is ideal, because we’d have to template the commands in the CI workflow.

With GitOps, all of a sudden, everything is solved:

- The GitOps tool runs in a K8s cluster, which can be configured to access a secret manager securely using the runtime platform’s identity.

- ExternalSecrets can be used as part of the Helm chart because they don’t contain sensitive information; they only refer to a path in a secret manager. And since we are doing GitOps, the external secret will be deployed automatically.

- There is no need to maintain any script or pipeline definition code to achieve any of the above; Git is the single source of truth, again, because we are using GitOps.

In a nutshell, the process looks like this:

- The External Secrets Operator (a K8s operator that integrates external secret managers with K8s) automatically retrieves secrets from secret managers like AWS Secrets Manager, HashiCorp Vault, Google Secret Manager, Azure Key Vault, etc., using their external APIs, and injects them into Kubernetes as Secrets.

- The definition of those external secrets is declaratively defined in YAML, which is part of the Helm chart.

- The apps/Deployments consume K8s Secrets as environment variables, following the 12-factor app methodology.

- GitOps handles the rest.
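The flow above can be modeled in a few lines of Python to show why the ExternalSecret is safe to commit: it carries only references, while the values live in the secret manager. The field names `target.name`, `data[].secretKey`, and `data[].remoteRef.key` follow the External Secrets Operator’s `ExternalSecret` resource. Everything else (the in-memory `secret_store` standing in for a real provider, the key paths, the `render_secret` helper) is a hypothetical sketch, not the operator’s implementation.

```python
import base64


def render_secret(external_secret: dict, secret_store: dict[str, str]) -> dict:
    """Build the Kubernetes Secret an operator would create from an ExternalSecret.

    The ExternalSecret carries only references (remoteRef.key); the actual
    values come from `secret_store`, a stand-in for AWS Secrets Manager, Vault, etc.
    """
    spec = external_secret["spec"]
    data = {}
    for item in spec["data"]:
        value = secret_store[item["remoteRef"]["key"]]
        # Kubernetes stores Secret `data` values base64-encoded.
        data[item["secretKey"]] = base64.b64encode(value.encode()).decode()
    return {
        "apiVersion": "v1",
        "kind": "Secret",
        "metadata": {"name": spec["target"]["name"]},
        "data": data,
    }


# What lives in Git (safe to commit): names and paths only. Paths are hypothetical.
external_secret = {
    "kind": "ExternalSecret",
    "spec": {
        "target": {"name": "go-hello-http"},
        "data": [{"secretKey": "DB_PASSWORD",
                  "remoteRef": {"key": "prod/go-hello-http/db-password"}}],
    },
}
# What lives only in the secret manager.
secret_store = {"prod/go-hello-http/db-password": "s3cr3t"}

print(render_secret(external_secret, secret_store)["data"])
```

The Deployment can then expose the resulting Secret’s keys as environment variables, for example through `envFrom` with a `secretRef`, so the app only ever reads `DB_PASSWORD` from its environment.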

To learn more about handling secrets in Helm, read my blog, How to Handle Secrets in Helm. Specifically, the section on External Secrets Operator mentions how it works and how to install it.

For a more production-ready integration between the external secrets operator and secret managers, see my other blog, specifically, the section on Read Secrets from a Secret Manager.

5 – Handle Pod Auto-Restart with GitOps/CD

Now that secrets are handled securely, let’s bring the whole setup one step closer to production-ready: restarting Pods when config changes.

This might seem trivial for a demo or a tutorial, but in production, automatically restarting Pods when secrets and configs change is a common requirement. Although there is a hacky way to do so (you probably know it and have used it: adding a Pod template annotation holding a timestamp or a checksum of the config YAML file), it isn’t simple, hence not ideal.
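For reference, the checksum variant of that hack (described in Helm’s documentation as piping the rendered ConfigMap template through `sha256sum` into a Pod template annotation) works roughly like the Python sketch below. It is an approximation: it hashes a canonical JSON encoding rather than rendered YAML, and the `checksum/config` annotation name is just the conventional example.

```python
import hashlib
import json


def config_checksum(config: dict) -> str:
    """SHA-256 of a canonical JSON encoding, so equal configs hash equally."""
    canonical = json.dumps(config, sort_keys=True, separators=(",", ":"))
    return hashlib.sha256(canonical.encode()).hexdigest()


def pod_template_annotations(config: dict) -> dict:
    # Changing this annotation changes the Pod template, and any Pod template
    # change makes the Deployment roll out new Pods.
    return {"checksum/config": config_checksum(config)}


before = pod_template_annotations({"LOG_LEVEL": "info"})
after = pod_template_annotations({"LOG_LEVEL": "debug"})
print(before != after)  # True: the edit would trigger a rolling update
```

This also shows why the hack falls short here: the checksum only covers what the chart renders. With ExternalSecrets, the chart renders a reference, so when a value changes in the secret manager, the checksum stays the same and no rollout happens.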

A good solution is to use Reloader, a K8s controller that watches for changes in ConfigMaps and Secrets and performs rolling upgrades on Pods via their associated Deployments, StatefulSets, and DaemonSets.

Reloader can automatically discover Deployments where specific ConfigMaps or Secrets are used. To do this, add the reloader.stakater.com/auto annotation to the metadata of our Deployment as part of the Helm chart template:

```yaml
kind: Deployment
metadata:
  annotations:
    reloader.stakater.com/auto: "true"
spec:
  template:
    metadata:
      [...]
```

And since this is also part of the Helm chart, it will be handled automatically by GitOps; no other operations are needed.
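Conceptually, the auto-discovery step boils down to this: when a Secret changes, find the workloads that opted in with `reloader.stakater.com/auto: "true"` and reference that Secret, then trigger a rolling upgrade on them. The Python sketch below models only the selection step for Secrets referenced via `env`, `envFrom`, or volumes. It is not Reloader’s code, it leaves out ConfigMaps, other workload kinds, and how the rollout is actually triggered, and the `deployment` helper builds hypothetical minimal manifests.

```python
def referenced_secrets(workload: dict) -> set[str]:
    """Names of Secrets a workload's Pod template uses via env, envFrom, or volumes."""
    pod_spec = workload["spec"]["template"]["spec"]
    names = set()
    for container in pod_spec.get("containers", []):
        for env in container.get("env", []):
            ref = env.get("valueFrom", {}).get("secretKeyRef")
            if ref:
                names.add(ref["name"])
        for source in container.get("envFrom", []):
            if "secretRef" in source:
                names.add(source["secretRef"]["name"])
    for volume in pod_spec.get("volumes", []):
        if "secret" in volume:
            names.add(volume["secret"]["secretName"])
    return names


def workloads_to_restart(workloads: list[dict], changed_secret: str) -> list[str]:
    """Workloads opted in with reloader.stakater.com/auto that use the changed Secret."""
    return [
        w["metadata"]["name"]
        for w in workloads
        if w["metadata"].get("annotations", {}).get("reloader.stakater.com/auto") == "true"
        and changed_secret in referenced_secrets(w)
    ]


def deployment(name: str, annotations: dict, secret: str) -> dict:
    # Hypothetical minimal Deployment that loads every key of `secret` as env vars.
    container = {"name": name, "envFrom": [{"secretRef": {"name": secret}}]}
    return {
        "kind": "Deployment",
        "metadata": {"name": name, "annotations": annotations},
        "spec": {"template": {"spec": {"containers": [container]}}},
    }


workloads = [
    deployment("go-hello-http", {"reloader.stakater.com/auto": "true"}, "go-hello-http"),
    deployment("legacy-app", {}, "go-hello-http"),  # not opted in
]
print(workloads_to_restart(workloads, "go-hello-http"))  # ['go-hello-http']
```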

To learn more about how to install Reloader, how it works, and how to configure it, read the How to Automatically Restart Pods When Secrets Are Updated section in my blog How to Handle Secrets in Helm.

For more technical details, use cases, and features of Reloader, refer to the official documentation here.

6 – Handle Version Propagation with GitOps/CD

The last step in making our setup fully production-ready is handling versions, that is, solving the version propagation issue.

In real-world scenarios, we have more than one environment. For example, we would have a production environment, a test environment, and maybe a staging environment as well, where changes are verified before going to production.

Since GitOps uses Git as the single source of truth to decide which version to deploy, setting a single `appVersion` value in the Chart.yaml file (as demonstrated in Section 3) means the same version will be deployed everywhere, which doesn’t fit our needs.

For example, we might normally have version 1 running in test, staging, and production at the same time. Then we develop a new version 2 and want to deploy it to the test environment first, while staging and production stay on version 1. After testing is done, we deploy version 2 to staging, but not to production yet. After thorough testing in staging, we finally move version 2 to production. A new version propagates from one environment to the next, hence the “version propagation” issue.
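The rule behind version propagation (a version may only enter an environment after it has run in the previous one) can be written down as a small Python sketch. The `PIPELINE` order and the `promote` helper are hypothetical. In the setup described in the rest of this section, the same policy is enforced through per-environment Argo CD Applications and version constraints rather than code.

```python
PIPELINE = ["test", "staging", "production"]  # hypothetical promotion order


def promote(deployed: dict[str, str], version: str, target: str) -> dict[str, str]:
    """Return a new env -> version map with `version` deployed to `target`.

    Refuses to skip a stage: the previous environment must already run `version`.
    """
    stage = PIPELINE.index(target)
    if stage > 0:
        previous = PIPELINE[stage - 1]
        if deployed[previous] != version:
            raise ValueError(f"{version} must reach {previous} before {target}")
    return {**deployed, target: version}


state = {"test": "1", "staging": "1", "production": "1"}
state = promote(state, "2", "test")      # test: 2, staging: 1, production: 1
state = promote(state, "2", "staging")   # test: 2, staging: 2, production: 1
state = promote(state, "2", "production")
print(state)  # {'test': '2', 'staging': '2', 'production': '2'}
```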

To solve this issue, we must be able to restrict which versions can be deployed to which environment. One intuitive way to do so is to create different branches for different environments, but this adds significant operational and maintenance overhead (if it’s maintainable at all).

6.1 A Very Short Introduction to Argo CD Image Updater

To properly address the version propagation and version constraints issue, we can create multiple Argo CD applications, each deploying to a different environment (be it a different K8s cluster or a different namespace in the same cluster), and then we set version constraints on them.

Luckily, there is another tool, Argo CD Image Updater, which works seamlessly with Argo CD (surprise, they are both Argo products) and can enforce version constraints. It automatically updates the container images of K8s workloads that are managed by Argo CD.

Under the hood, Argo CD Image Updater integrates with Argo CD. It watches for new tags of a specific image and compares them with user-defined constraints. If a tag matches, the new version is used and (with the Git write-back method used in this demo) committed back to the repo so that the repo stays the single source of truth; Argo CD then synchronizes the app to deploy the new version.

Since Image Updater needs to write changes back to the repo, we need to grant Argo CD access to the repo. For this demo, I used my own private key:

```shell
argocd repo add git@github.com:IronCore864/gitops.git --ssh-private-key-path ~/.ssh/id_rsa
```

Replace the repo/private key path accordingly.

For production usage, we can create a GitHub App for Argo CD write access.

6.2 Install Argo CD Image Updater

Argo CD Image Updater is a separate project from Argo CD, although the two are designed to be used together, so we have to install it separately. The easiest way is to install it in the same namespace as Argo CD, which saves us the extra configuration otherwise needed to integrate the two. Run the following command to install it:

```shell
kubectl apply -n argocd -f https://raw.githubusercontent.com/argoproj-labs/argocd-image-updater/stable/manifests/install.yaml
```

6.3 Version Constraints and Version Propagation

Create a new Argo CD app to simulate a new deployment in a different environment:

```shell
cat <<EOF | kubectl apply -f -
apiVersion: argoproj.io/v1alpha1
kind: Application
metadata:
  name: hello-world-test
  namespace: argocd
  annotations:
    argocd-image-updater.argoproj.io/image-list: ironcore864/go-hello-http:0.0.X
    argocd-image-updater.argoproj.io/write-back-method: git
spec:
  project: default
  source:
    repoURL: git@github.com:IronCore864/gitops.git
    path: go-hello-http
  destination:
    server: https://kubernetes.default.svc
    namespace: default
  syncPolicy:
    automated:
      prune: true
      selfHeal: true
    syncOptions:
      - CreateNamespace=true
EOF
```

The annotation `argocd-image-updater.argoproj.io/image-list` indicates which image to watch and what the constraint is (0.0.X in this case), and the annotation `argocd-image-updater.argoproj.io/write-back-method: git` means that Image Updater will write the newly discovered image version back to Git.
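To make the `0.0.X` constraint concrete: `X` acts as a wildcard for that version component, and Image Updater’s default `semver` update strategy picks the highest allowed version. The Python sketch below handles only plain `MAJOR.MINOR.PATCH` tags with `x`/`X`/`*` wildcards. It is not Image Updater’s code (which relies on a full semver library supporting ranges, pre-releases, and more), and the tag list is made up.

```python
import re
from typing import Optional

SEMVER_TAG = re.compile(r"^v?(\d+)\.(\d+)\.(\d+)$")
WILDCARDS = {"x", "X", "*"}


def matches(tag: str, constraint: str) -> bool:
    """True if a plain MAJOR.MINOR.PATCH tag satisfies a constraint such as 0.0.X."""
    m = SEMVER_TAG.match(tag)
    if m is None:
        return False  # skip non-semver tags such as "latest"
    wanted = constraint.split(".")
    if len(wanted) != 3:
        raise ValueError(f"expected MAJOR.MINOR.PATCH, got {constraint!r}")
    # Each component must either be a wildcard or equal the tag's number.
    return all(w in WILDCARDS or int(w) == int(a) for a, w in zip(m.groups(), wanted))


def version_key(tag: str) -> tuple[int, int, int]:
    major, minor, patch = SEMVER_TAG.match(tag).groups()
    return int(major), int(minor), int(patch)


def pick_update(tags: list[str], constraint: str) -> Optional[str]:
    """Highest tag allowed by the constraint (compared numerically), or None."""
    allowed = [t for t in tags if matches(t, constraint)]
    return max(allowed, key=version_key) if allowed else None


tags = ["0.0.1", "0.0.2", "0.0.3", "0.1.0", "latest"]  # hypothetical registry tags
print(pick_update(tags, "0.0.X"))  # 0.0.3
print(pick_update(tags, "0.1.X"))  # 0.1.0
```

Comparing numerically rather than as strings matters: `0.0.10` must beat `0.0.9`. The two calls also preview the per-environment idea that follows, where the same tag list resolves to a different version for each constraint.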

If we watch the logs from the image updater pod, we can see that it discovers a new image version that matches the constraint, does a commit to the repo, then Argo CD synchronizes the app.

In this way, we can define different Argo CD applications and `image-list` annotations for different environments (like 0.0.X for production and 0.1.X for staging), so that we control which version gets deployed to which environment. After thorough testing of 0.1.X, when it’s stable enough to be propagated from staging to production, we open a PR to update the annotation on the production Argo CD Application: version propagation issue solved.

Summary

Traditional CI tools, as their names suggest, are good at continuous integration, but their features are mostly repo- and test-centric, meaning CI tools are not super easy to configure and use in a continuous deployment context with Helm. Major challenges include:

- accessing K8s clusters

- running Helm commands with parameters

- handling secrets

- handling app versions

And more.

Continuous deployment tools with GitOps are designed specifically to solve challenges related to deployments in Kubernetes with Helm, hence making deployments great again. Git repositories are used as the single source of truth; everything, including versions, is defined declaratively in the repo without any need for helper scripts or complicated workflow definitions. It’s worth mentioning that although this blog focuses on Argo CD, there are other popular GitOps tools, and the methodologies described here apply no matter which tool you use.

To read a more lively story with more illustrative diagrams about the whole Helm/GitOps setup, read my other blog here. To watch the final result of this blog in a video, see this YouTube video.

I hope you enjoyed reading this article and can introduce the methodology to your organization, upgrading your DevSecOps to another level. Thanks, and see you in the next piece!

*** This is a Security Bloggers Network syndicated blog from GitGuardian Blog - Code Security for the DevOps generation authored by Guest Expert. Read the original post at: https://blog.gitguardian.com/how-to-use-helm-with-continuous-deployment-cd/
