I was recently involved in a study comparing the performance of three LLM-based AIs in providing empathetic, compassionate, and helpful goal-oriented support to 40 graduate and undergraduate students. The students were simply tasked with having daily conversations about topics of emotional importance with their assigned AI.

After each conversation, they were asked to complete a modified Working Alliance Inventory (WAI). The WAI is a widely used psychological assessment tool developed by Adam Horvath to measure the therapeutic alliance between a therapist and a client. At the end of the study, they completed a more extensive 12-question Barrett-Lennard-based inventory.

Given the prevalence of articles speaking to the empathetic and helpful capabilities of ChatGPT, and the fact that many people do use its raw version for empathetic guidance, the goal of the study was to assess whether prompted and fine-tuned LLMs were better and more acceptable than ChatGPT to users seeking support.

The goal of this article is not to cover the results, other than to say they were positive with respect to both the WAI and Barrett-Lennard. However, after the study, I realized that a number of efforts have applied Barrett-Lennard to other disciplines, and I decided to compare the performance of Emy, the top-performing AI in the study, with cross-discipline norms.

Note, Emy is a non-commercial AI designed to support research into empathy. Emy is not optimized for managing goals, and her scores show that. In this article, she is used as a proxy for potential discipline-specific commercial AIs. If Emy is capable of close to or above average professional performance for most roles in a discipline, then well-funded teams should certainly do well. After all, Emy has received no third-party funding, and hands-on development was all done by one person.

First, some caveats:

- The students were tasked with seeking general emotional support. Although full transcripts were not available for privacy reasons, topic summaries showed that conversations ranged across relationship support (romance, friends, family), school and work stressors, and mental or physical health care issues.

- The comparisons I make to discipline norms are not apples-to-apples. When Barrett-Lennard was applied to each discipline, it was in the context of conversations specific to that discipline, not basic emotional support.

The above being said, all of the disciplines require empathy, compassion, and goal-oriented support. Across the majority of dimensions for many roles, Emy was on par with or exceeding average human performance. I think the findings illustrate that further discipline-oriented investigation is worthwhile.

I think there is promise in the use of LLMs to augment the activity of many positions outside mental and physical healthcare. In fact, I think these other positions deserve far more attention because of the lower risks and the paucity of formal empathy, compassion, communication, and goal management training provided to many practitioners.

The modified Barrett-Lennard survey used at the end of the study had these 12 questions:

- As a result of working with the AI with which I interacted, I was clearer as to how I might be able to change.

- What I was doing with the AI with which I interacted gave me new ways of looking at my problem.

- I believe the AI with which I interacted likes me.

- I and the AI with which I interacted collaborated on setting goals.

- I and the AI with which I interacted respected each other.

- I and the AI with which I interacted were working towards mutually agreed upon goals.

- I felt that the AI with which I interacted appreciated me.

- I and the AI with which I interacted agreed on what was important for me to work on.

- I felt the AI with which I interacted cared about me even when I did things that he/she did not approve of.

- I felt that the things I did with the AI with which I interacted would help me to accomplish the changes that I wanted.

- I and the AI with which I interacted had established a good understanding of the kind of changes that would be good for me.

- I believe the way we were working with my problem was correct.

For the most part, I let the charts speak for themselves.

Mental Therapy

This is the sole chart where I compare Emy to ChatGPT, mainly because ChatGPT seems to be the de facto go-to for those not using custom AI mental health apps. Overall, Emy pretty much splits the difference between a professionally trained therapist and raw ChatGPT. This indicates to me that the approach several companies, like BambuAI, are taking in positioning AIs as companions that augment therapy could be a good one.

However, I have lost track of the number of companies pursuing AI therapy head-on through Cognitive Behavioral Therapy (CBT) based applications. I have tested a lot of these and have been left underwhelmed or even concerned about safety.

Health Care

HippocraticAI is the big AI name in healthcare, and they clearly have a focus on both clinical and interpersonal issues. The big tech players are also all in this space but tend to focus on clinical rather than interpersonal AIs. And, of course, EPIC is enhancing its system in a variety of ways.

Education

Given the current political environment and the likelihood of substantial cuts in both available direct funding and tuition support, the opportunity for AI to offset likely staff reductions or reduced staff training is certainly worth exploring. Furthermore, the student population as a whole deals with a more complex web of interconnected relationships and pressures than the typical population and could benefit from student information systems, learning management systems, and housing management systems with some built-in empathy!

The above being said, the space is already crowded by start-ups taking the “easy” route, i.e., tutoring. I think holistic student care will be the big “win”, but it will probably take big dollars. And, through either acquisition or in-house development, the space will probably be dominated by Workday, Jenzabar, Canvas, or the like.

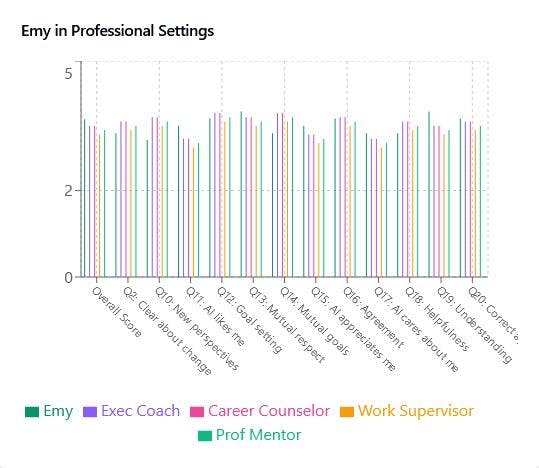

Professional Space

In general, Emy outperforms work supervisors and professional mentors, so there would seem to be a chance for AIs to either lift the performance of these roles or provide better support to the rank and file.

The professional space is the area that is least regulated and most performance-driven; hence, the opportunity for AI in roles that support people in performing should be high. It is also a highly fragmented space with a lot of opportunities driven by client sizes and industries. The shift over the last 5 years to video-conference conversations, which an AI advisor can easily piggy-back on, suggests this area could evolve rapidly.

A company trying to take a leadership position in this space is Parsley. And, if they can avoid the innovator’s dilemma, large entities providing DISC, Myers-Briggs, and other trainings could also make a big impact here by leveraging their existing distribution and relationships.
