Anything is a Nail When Your Exploit’s a Hammer

Previously…

In previous blogs we’ve discussed HOW to exploit vulnerable configurations and develop basic exploits for vulnerable model protocols. Now it’s time to focus all of this information – protocols, models and Hugging Face itself – into a viable attack Proof-of-Concept against various libraries.

“Free Hugs” – What to be Wary of in Hugging Face – Part 2

“Free Hugs” – What To Be Wary of in Hugging Face – Part 1

Hugging Face Hub

huggingface-hub (HFH) is the Python hub client library for Hugging Face. It’s handy for uploading and downloading models from the hub and has some functionality for loading models.

Pulling and Loading a Malicious TF Model from HFH

Once a malicious model is created it can be uploaded to Hugging Face and then loaded by unsuspecting users.

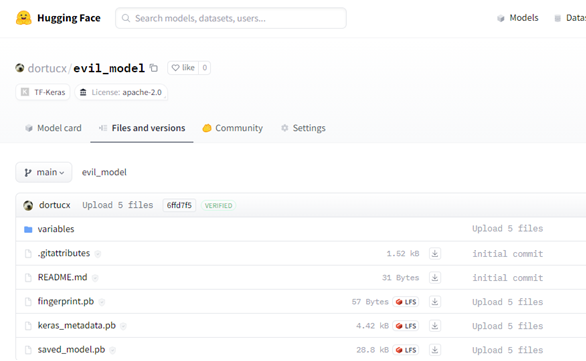

This model was uploaded to Hugging Face:

Figure 1 – TF1 File Structure

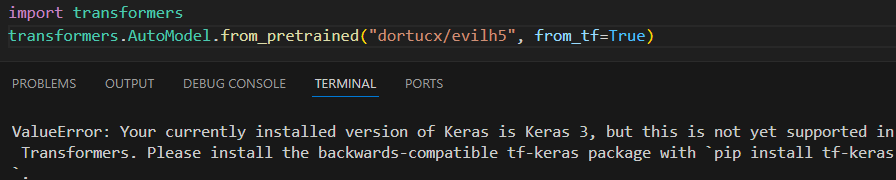

You might remember from our previous blog post on TensorFlow that an older version could generate an exploit that even newer versions would consume. tf-keras also allows the use of legacy Keras formats within the Hugging Face ecosystem. It is even recommended when trying to open the legacy formats:

Might want a more stern security warning for that there, buddy

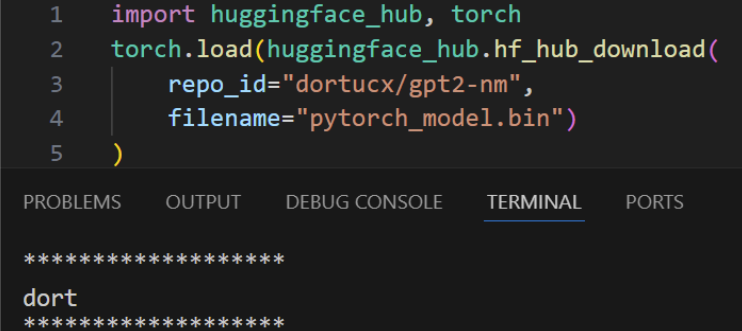

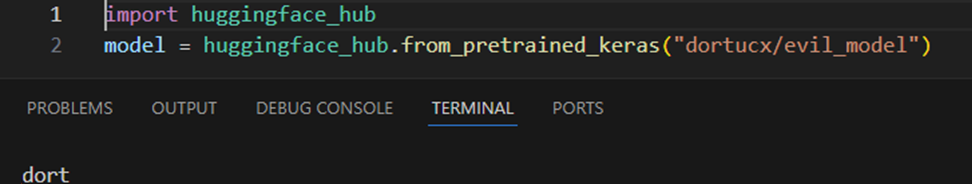

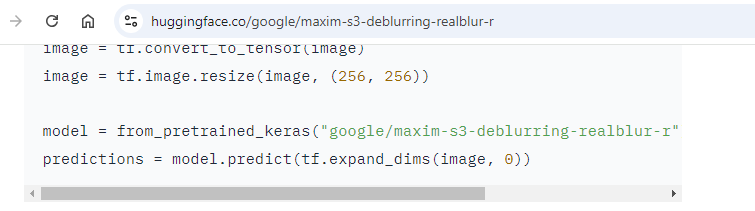

This TensorFlow model can be retrieved by the vulnerable methods:

- huggingface_hub.from_pretrained_keras

- huggingface_hub.KerasModelHubMixin.from_pretrained

Loading an older TF1 malicious model with these methods will result in code execution:

You’d be forgiven for asking, “Yeah, sure, but odds are I won’t be using these methods at all, right?” Well, remember that this is a trust ecosystem, and model-loading code appears in ReadMe guides.

For example – here’s that vulnerable method from earlier, used by a Google model.

This does have a caveat: the tf-keras dependency from the Keras team (which is used in TensorFlow) is required; otherwise a ValueError is raised.

The issue was reported to Hugging Face on 14/08/2024 so that these methods could be fixed, removed or at least flagged as dangerous. The response arrived only in early September, and read as follows:

“Hi Dor Tumarkin and team,

Thanks for reaching out and taking the time to report. We have a security scanner in place – users should not load models from repositories they don’t know or trust. Please note that from_pretrained_keras is a deprecated method only compatible with keras 2, with very low usage for very old models. New models and users will default to the keras 3 integration implemented in the keras library. Thanks again for taking the time to send us this.”

At the time of writing, these methods are not officially deprecated in documentation or code, nor is there a reference to their vulnerable nature. Make of this what you will.

The newer TensorFlow formats do not serialize lambdas, and at the time of writing no newer exploits exist. However, the failure to reject legacy models outright (or at least to block them behind some flag) still leaves Hugging Face code potentially vulnerable, so validating the version of the model can offer some degree of protection.
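As a rough illustration of what "validating the version of the model" could look like before anything is loaded, here is a minimal sketch that guesses the on-disk format of a local model artifact. The function names (`keras_artifact_format`, `require_keras_v3`) are hypothetical and not part of any library. The checks rely only on well-known markers: a SavedModel directory contains `saved_model.pb`, a legacy `.h5` file starts with the HDF5 signature, and a Keras v3 `.keras` file is a zip archive holding `config.json` and `metadata.json`. It is a best-effort heuristic, not a security boundary.

```python
import zipfile
from pathlib import Path

HDF5_MAGIC = b"\x89HDF\r\n\x1a\n"  # signature at the start of an HDF5 file


def keras_artifact_format(path):
    """Best-effort guess at the on-disk format of a Keras/TensorFlow model."""
    p = Path(path)
    if p.is_dir():
        # A TF SavedModel is a directory that holds saved_model.pb (or .pbtxt).
        if (p / "saved_model.pb").exists() or (p / "saved_model.pbtxt").exists():
            return "savedmodel"
        return "unknown"
    with open(p, "rb") as fh:
        if fh.read(8) == HDF5_MAGIC:
            return "legacy_h5"
    if zipfile.is_zipfile(p):
        with zipfile.ZipFile(p) as zf:
            names = set(zf.namelist())
        # Keras v3 archives carry config.json and metadata.json at the top level.
        if {"config.json", "metadata.json"} <= names:
            return "keras_v3"
    return "unknown"


def require_keras_v3(path):
    """Raise instead of loading when the artifact is not in the Keras v3 format."""
    fmt = keras_artifact_format(path)
    if fmt != "keras_v3":
        raise ValueError(f"refusing to load {path!r}: detected format is {fmt}")
    return fmt
```

A caller would run `require_keras_v3(local_path)` on the downloaded artifact and only hand the path to a loader if no exception is raised. Rejecting everything that is not recognized is a deliberate design choice here: an allow-list of formats fails closed when a new or odd layout shows up.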

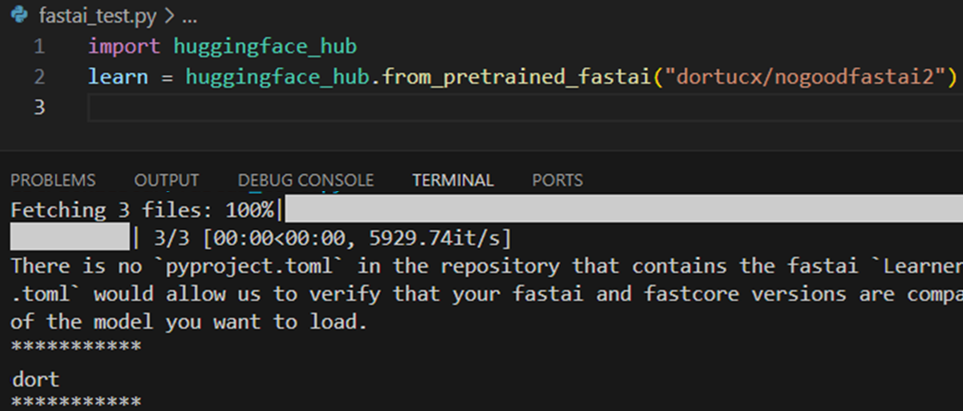

Pulling and Loading a Malicious FastAI Pickle from HFH

On second thought maybe don’t pip install huggingface_hub[fastai] and use it with some random model

We will discuss FastAI soon, as its own thing amongst the many integrated libraries HF supports.

Integrated Libraries Lightning Round Bonanza!

Going beyond HFH, Hugging Face has relevant documentation and library support for many other ML frameworks. These “Integrated Libraries” each offer some interaction with HF.

There wasn’t enough time (or interest – this was getting painfully repetitive at some point) to explore all of them. What did become clear was that an over-reliance on the Torch format is still very much alive and well, and that the use of known-vulnerable Torch calls is both a common practice and an open secret. This, of course, implies that they are vulnerable.

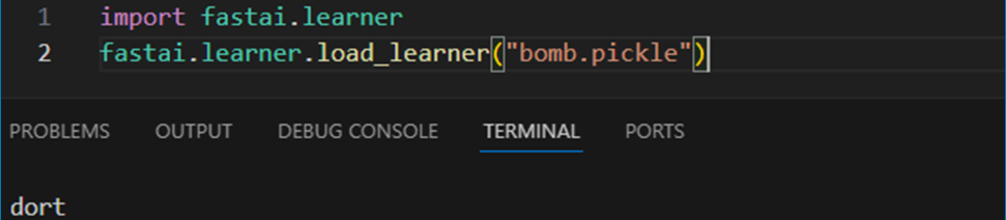

FastAI

fastai is a deep learning library. Its load_learner method is how FastAI objects are read. Unfortunately, it’s just a wrapper around torch.load without security flags, so it will unpickle even a plain malicious payload.

As mentioned previously, FastAI learners can also be invoked from Hugging Face using the huggingface_hub.from_pretrained_fastai method (requires the huggingface_hub[fastai] extra).
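To make "security flags" concrete: torch.load accepts a `weights_only` argument that restricts what the unpickler may import. The sketch below shows the same idea using only the standard library, following the "restricting globals" pattern from the Python pickle documentation. It is not how torch or fastai implement anything; `AllowListUnpickler`, `restricted_loads` and the contents of `ALLOWED_GLOBALS` are illustrative assumptions.

```python
import io
import pickle

# Hypothetical allow-list: the only (module, name) pairs a pickle may import.
ALLOWED_GLOBALS = {
    ("collections", "OrderedDict"),
}


class AllowListUnpickler(pickle.Unpickler):
    """Unpickler that refuses to import anything outside ALLOWED_GLOBALS."""

    def find_class(self, module, name):
        if (module, name) in ALLOWED_GLOBALS:
            return super().find_class(module, name)
        raise pickle.UnpicklingError(f"blocked global: {module}.{name}")


def restricted_loads(data):
    """Like pickle.loads, but with the allow-list enforced."""
    return AllowListUnpickler(io.BytesIO(data)).load()
```

Plain containers and allow-listed classes load as usual, while a pickle whose `__reduce__` points at any other callable fails with `UnpicklingError` before that callable is ever invoked. A loader that wraps the unrestricted unpickler gives up exactly this check.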

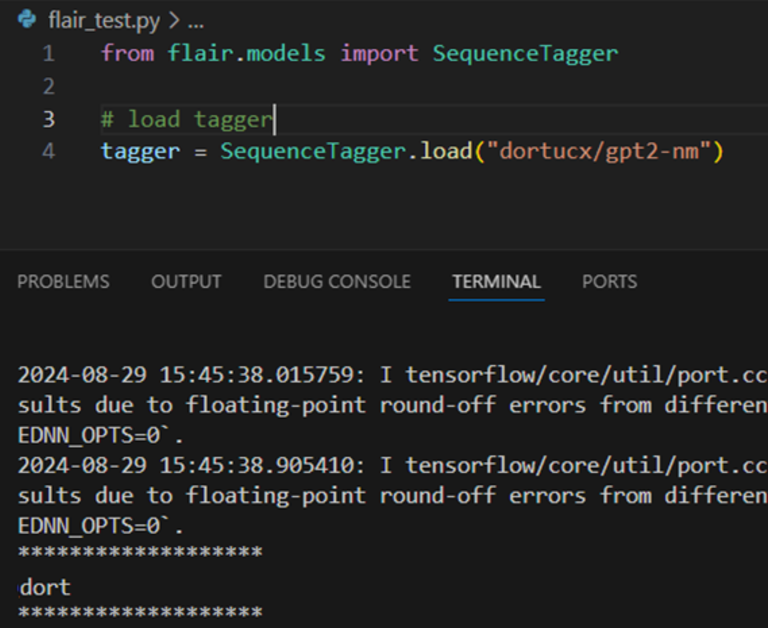

Flair

Flair is an NLP framework. Just like FastAI, Flair simply calls torch.load, which is vulnerable to code execution, and it can download files directly from the Hugging Face repository: https://github.com/flairNLP/flair/blob/master/flair/file_utils.py#L384

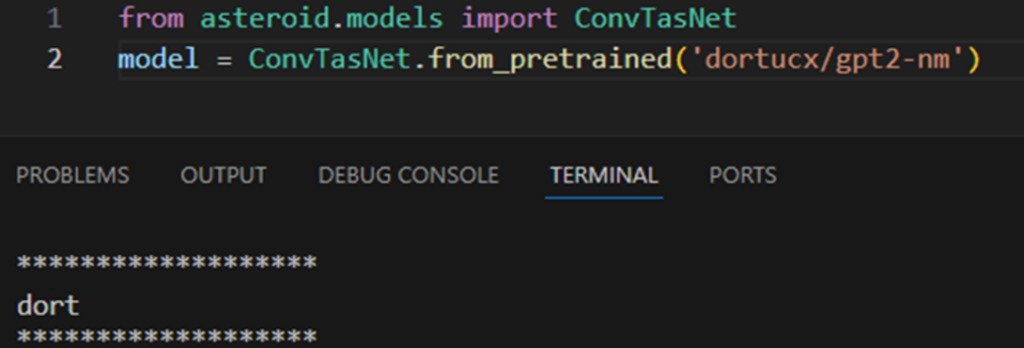

Asteroid

Asteroid is a PyTorch-based framework for audio. Being PyTorch-based usually means a predictable, hidden call to torch.load:

https://github.com/asteroid-team/asteroid/blob/master/asteroid/models/base_models.py#L114

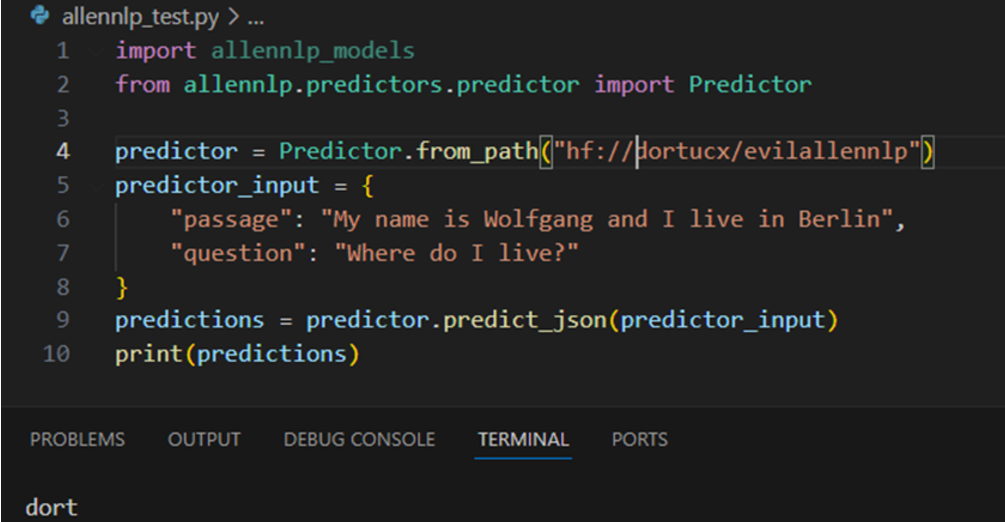

AllenNLP

AllenNLP was an NLP library for linguistic tasks. It reached EOL in December 2022, but it still appears in Hugging Face documentation without reference to its obsolescence. It is also based on PyTorch.

Due to its EOL state, it is both vulnerable – and unlikely to ever be fixed.

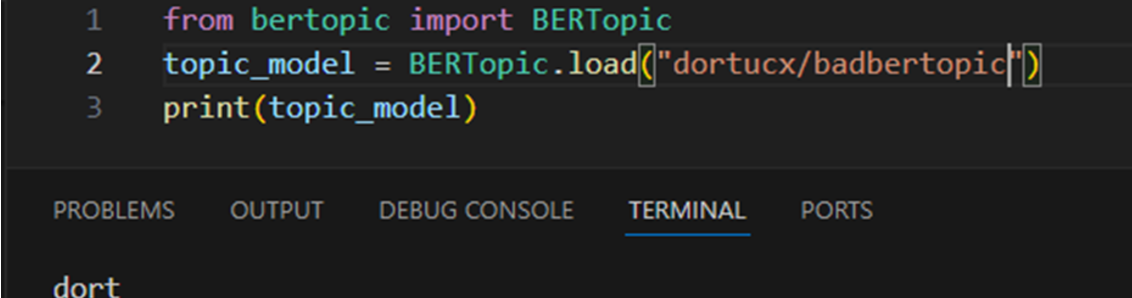

BERTopic

BERTopic is a topic modeling framework, meaning that it labels topics in text it receives.

Brew Your Own!

There are probably many, many more exploitation methods and libraries in the Hugging Face Integrated Libraries list. And given just how popular PyTorch is, it probably goes way beyond that.

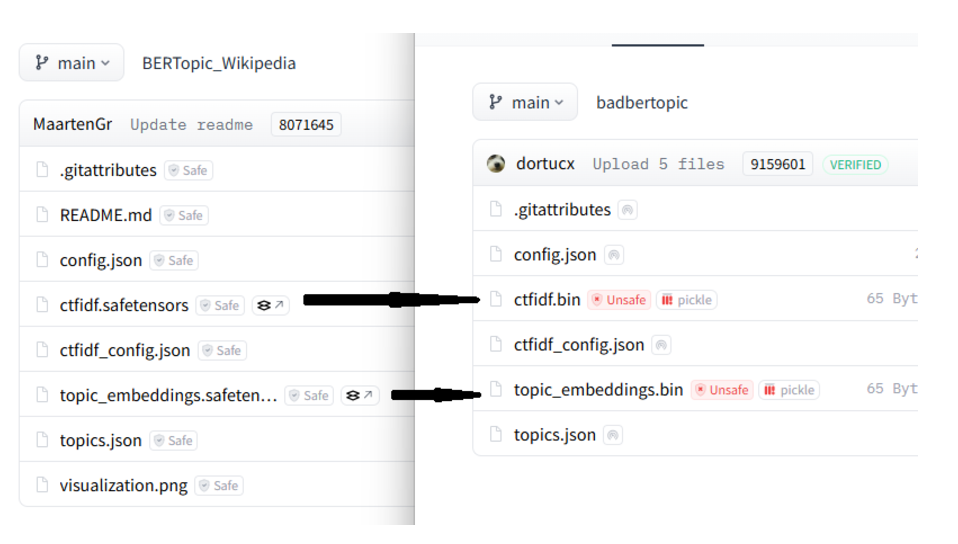

The methodology for developing basic exploits for all of these is very simple:

- Download the model

- Replace the model files with Torch/TF payloads

- If the model has SafeTensors files, create Torch files with the same file names and delete the SafeTensors files

- Upload
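Seen from the defender's side, the swap described in the steps above is visible in a repository's file listing before anything is downloaded. The sketch below is a minimal, heuristic check over a list of file names. `audit_listing` and the suffix sets are assumptions made for illustration, and the listing itself is assumed to come from something like `huggingface_hub.list_repo_files`.

```python
from pathlib import PurePosixPath

# Heuristic suffix sets (assumptions): extensions commonly used for
# pickle-based checkpoints versus SafeTensors files.
PICKLE_SUFFIXES = {".bin", ".pt", ".pth", ".pkl", ".pickle", ".ckpt"}
SAFE_SUFFIXES = {".safetensors"}


def audit_listing(filenames):
    """Summarize which weight formats appear in a repository file listing."""
    pickled = sorted(
        f for f in filenames if PurePosixPath(f).suffix.lower() in PICKLE_SUFFIXES
    )
    safe = sorted(
        f for f in filenames if PurePosixPath(f).suffix.lower() in SAFE_SUFFIXES
    )
    return {
        "pickle_based": pickled,
        "safetensors": safe,
        # True when weights exist only in a pickle-based format.
        "pickle_only": bool(pickled) and not safe,
    }
```

A repository that reports `pickle_only` as true for a library that normally ships SafeTensors deserves a closer look. An extension check says nothing about file contents, so this can only be a first filter.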

Exploiting an automagic failover from SafeTensors to Torch in BERTopic

While this doesn’t cover all these libraries, the point is made – there are a lot of things to look out for when using the various integrated libraries. We’ve reached out to the maintainers of these libraries, but unfortunately it seems torch.load pickle code execution is just part and parcel for this technology.
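One way to look before loading is to list what a pickle would import without ever unpickling it. The sketch below walks the opcode stream with the standard library's `pickletools` and collects the `(module, name)` pairs behind `GLOBAL` and `STACK_GLOBAL` opcodes. The function names are hypothetical, the `STACK_GLOBAL` handling is an approximation (it assumes the two most recent string pushes are the module and the name), and the zip branch assumes the checkpoint is an archive with `.pkl` members, as produced by recent versions of torch.save.

```python
import io
import pickletools
import zipfile

# Opcodes that push a string onto the pickle stack.
STRING_OPCODES = {
    "SHORT_BINUNICODE", "BINUNICODE", "BINUNICODE8", "UNICODE",
    "SHORT_BINSTRING", "BINSTRING", "STRING",
}


def imported_globals(pickle_bytes):
    """List (module, name) pairs a pickle would import, without executing it."""
    found = []
    recent_strings = []
    for opcode, arg, _pos in pickletools.genops(pickle_bytes):
        if opcode.name in STRING_OPCODES:
            recent_strings.append(arg)
        elif opcode.name == "GLOBAL":
            # pickletools reports the argument as "module name".
            module, name = arg.split(" ", 1)
            found.append((module, name))
        elif opcode.name == "STACK_GLOBAL":
            # Approximation: module and name are the last two strings pushed.
            if len(recent_strings) >= 2:
                found.append((recent_strings[-2], recent_strings[-1]))
            else:
                found.append(("?", "?"))
    return found


def scan_checkpoint(data):
    """Scan a raw pickle, or every .pkl member of a zip-based checkpoint."""
    buffer = io.BytesIO(data)
    if zipfile.is_zipfile(buffer):
        results = {}
        with zipfile.ZipFile(buffer) as zf:
            for member in zf.namelist():
                if member.endswith(".pkl"):
                    results[member] = imported_globals(zf.read(member))
        return results
    return {"<raw pickle>": imported_globals(data)}
```

Legitimate Torch checkpoints import globals too, so the output is something to review against expectations rather than a verdict. Anything pointing at process, shell or evaluation helpers is a reason not to load the file at all.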

Conclusion

There are still many, many, many potential avenues for Torch exploitation in the HF ecosystem.

Even if SafeTensors is a viable option, many of these libraries support various formats, which is again the same issue as we had with TF-Keras – legacy support being available means legacy vulnerabilities being exploitable.

In the Next Blog…

So we’ve discussed the problems – vulnerable frameworks, dangerous configurations, malicious models – but what are some of the solutions? How good are they? Can they be bypassed?

Spoiler alert: yes.