2024-12-4 05:10:59 Author: hackernoon.com(查看原文) 阅读量:1 收藏

Table of Links

- Abstract and Introduction

- Related Work

- Problem Definition

- Method

- Experiments

- Conclusion and References

A. Appendix

A.1. Full Prompts and A.2 ICPL Details

A.6 Human-in-the-Loop Preference

A.3 BASELINE DETAILS

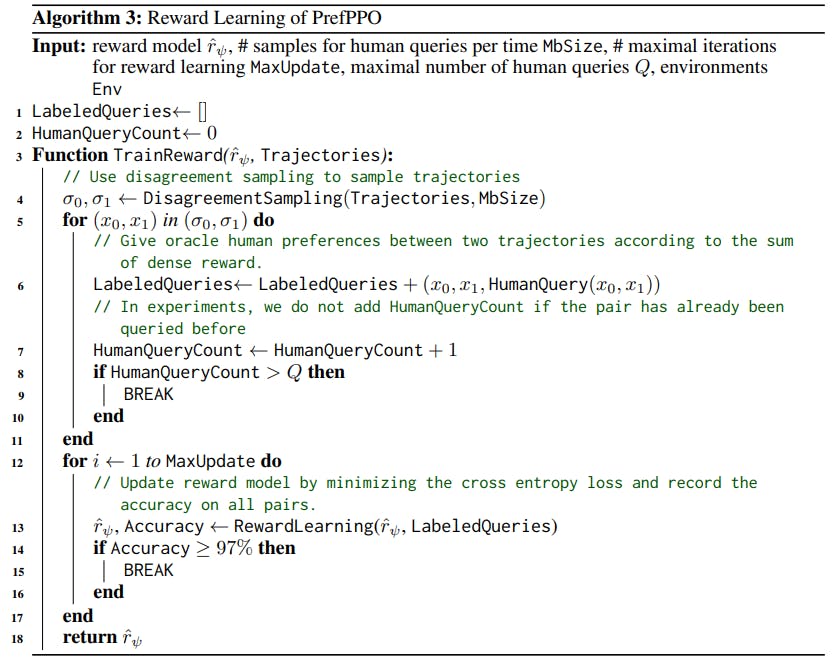

To sample trajectories for reward learning, we employ the disagreement sampling scheme from (Lee et al., 2021b) to enhance the training process. This scheme first generates a larger batch of trajectory pairs uniformly at random and then selects a smaller batch with high variance across an ensemble of preference predictors. The selected pairs are used to update the reward model.

For a fair comparison, we recorded the number of times PrefPPO queried the oracle human simulator to compare two trajectories and obtain labels during the reward learning process, using this as a measure of the human effort involved. In the proxy human experiment, we set the maximum number of human queries Q to 49, 150, 1.5k, and 15k. Once this limit is reached, the reward model ceases to update, and only the policy model is updated via PPO. Algo. 3 illustrates the pseudocode for reward learning.

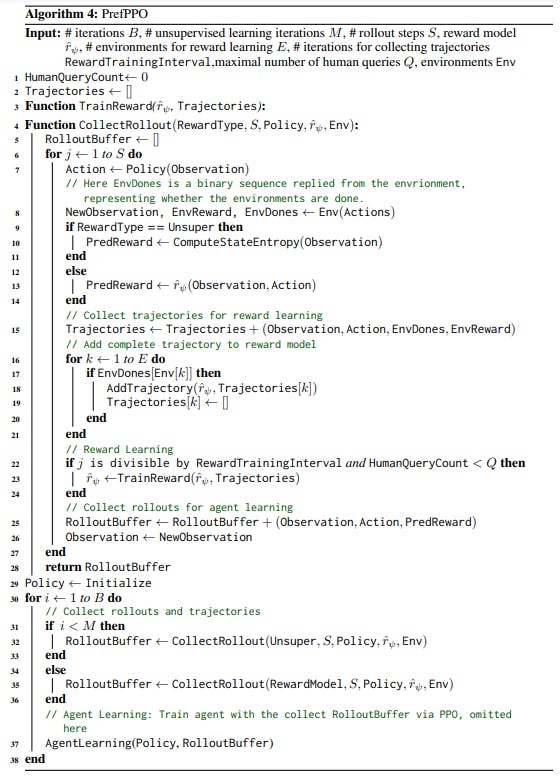

Algo. 4 illustrates the pseudocode for PrefPPO.

Authors:

(1) Chao Yu, Tsinghua University;

(2) Hong Lu, Tsinghua University;

(3) Jiaxuan Gao, Tsinghua University;

(4) Qixin Tan, Tsinghua University;

(5) Xinting Yang, Tsinghua University;

(6) Yu Wang, with equal advising from Tsinghua University;

(7) Yi Wu, with equal advising from Tsinghua University and the Shanghai Qi Zhi Institute;

(8) Eugene Vinitsky, with equal advising from New York University ([email protected]).

如有侵权请联系:admin#unsafe.sh