2024-12-04 05:09:48 Author: hackernoon.com

Table of Links

- Abstract and Introduction

- Related Work

- Problem Definition

- Method

- Experiments

- Conclusion and References

A. Appendix

A.1. Full Prompts and A.2 ICPL Details

A.6 Human-in-the-Loop Preference

4 METHOD

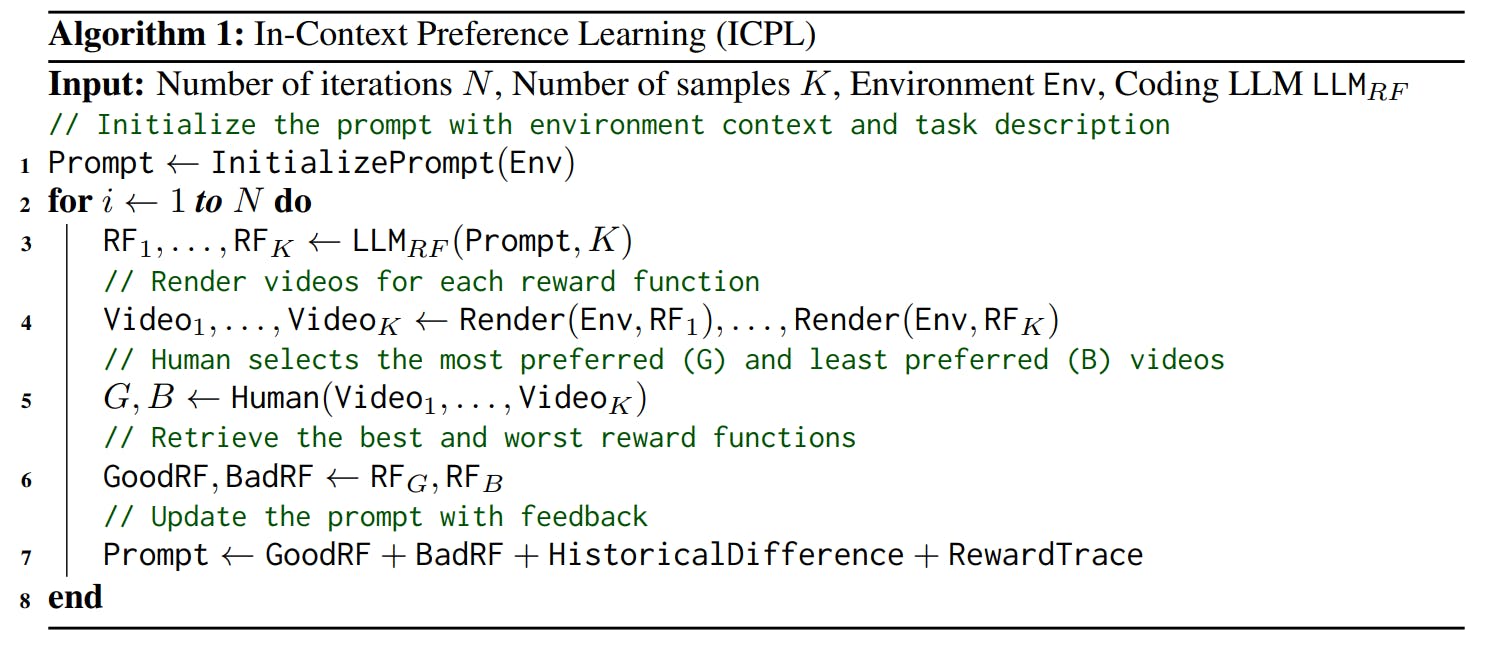

Our proposed method, In-Context Preference Learning (ICPL), integrates LLMs with human preferences to synthesize reward functions. The LLM receives environmental context and a task description to generate an initial set of K executable reward functions. ICPL then iteratively refines these functions. In each iteration, the LLM-generated reward functions are used to train agents within the environment, producing a set of agents; we use these agents to generate videos of their behavior. A ranking is formed over the videos, from which we retrieve the best and worst reward functions corresponding to the top and bottom videos in the ranking. These selections serve as examples of positive and negative preferences. The preferences, along with additional contextual information, such as reward traces and differences from previous good reward functions, are provided as feedback prompts to the LLM. The LLM takes in this context and is asked to generate a new set of rewards. Algo. 1 presents the pseudocode, and Fig. 1 illustrates the overall process of ICPL.
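The loop described above can be sketched as follows. This is a minimal illustration under stated assumptions, not the authors' Algo. 1: the callables `propose`, `train_and_render`, `rank_videos`, and `build_feedback` are hypothetical stand-ins for the LLM call, agent training plus video rendering, the human ranking step, and feedback-prompt construction.

```python
from typing import Callable, List, Sequence


def icpl_search(
    propose: Callable[[str, int], List[str]],
    train_and_render: Callable[[str], str],
    rank_videos: Callable[[Sequence[str]], List[int]],
    build_feedback: Callable[[str, str], str],
    task_prompt: str,
    k: int = 6,
    n_iters: int = 5,
) -> str:
    """Return the reward function preferred in the final iteration.

    All four callables are hypothetical placeholders supplied by the caller.
    """
    prompt = task_prompt
    best = ""
    for _ in range(n_iters):
        # Ask the LLM for k candidate reward functions (as source strings).
        rewards = propose(prompt, k)
        # Train one agent per reward function and render a video of it.
        videos = [train_and_render(r) for r in rewards]
        # Ranking is a list of indices into `videos`, most preferred first.
        order = rank_videos(videos)
        best, worst = rewards[order[0]], rewards[order[-1]]
        # The next prompt carries the positive and negative examples.
        prompt = task_prompt + "\n" + build_feedback(best, worst)
    return best
```

Passing the components in as callables keeps the sketch independent of any particular LLM client or simulator.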

4.1 REWARD FUNCTION INITIALIZATION

To enable the LLM to synthesize effective reward functions, we provide task-specific information with two components: a description of the environment, including its observation and action spaces, and a description of the task objectives. At each iteration, ICPL resamples from the LLM until K executable reward functions have been generated.
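The resampling step can be illustrated with a short sketch. The source does not say how executability is tested, so the `compiles` check below (a syntax-only check using Python's built-in `compile`) is an assumption and only a proxy; `sample_one` and `max_attempts` are likewise hypothetical.

```python
from typing import Callable, List


def compiles(source: str) -> bool:
    """Syntax-only proxy for executability (an assumption, not the paper's test)."""
    try:
        compile(source, "<reward>", "exec")
        return True
    except (SyntaxError, ValueError):
        return False


def sample_executable(
    sample_one: Callable[[], str],
    is_executable: Callable[[str], bool],
    k: int,
    max_attempts: int = 100,
) -> List[str]:
    """Keep sampling candidates until k of them pass the executability check."""
    kept: List[str] = []
    for _ in range(max_attempts):
        if len(kept) == k:
            break
        candidate = sample_one()
        if is_executable(candidate):
            kept.append(candidate)
    if len(kept) < k:
        raise RuntimeError(f"only {len(kept)} of {k} candidates were executable")
    return kept
```

The attempt cap is a design choice added here so that a persistently failing sampler raises an error instead of looping forever.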

4.2 SEARCH REWARD FUNCTIONS BY HUMAN PREFERENCES

For tasks without reward functions, the traditional preference-based approach constructs a reward model, which often demands substantial human feedback. ICPL aims to improve efficiency by using an LLM to search directly for well-performing reward functions, without learning a reward model. Specifically, we generate K = 6 executable reward functions per iteration across N = 5 iterations. Each reward function is used in a PPO (Schulman et al., 2017) training loop, and videos of the final trained agents are rendered. In each iteration, humans select the most preferred and least preferred videos, which identifies one good reward function and one bad one. These serve as context from which the LLM synthesizes a new set of K reward functions.
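As a small illustration of the selection step, the sketch below maps a human's two picks back to the reward functions that produced the videos. It assumes that video i was rendered from reward function i; the `Preference` record and the function name are hypothetical.

```python
from dataclasses import dataclass
from typing import Sequence


@dataclass(frozen=True)
class Preference:
    """One round of human feedback: a positive and a negative example."""
    good_reward: str
    bad_reward: str


def preference_from_choice(
    rewards: Sequence[str], most_preferred: int, least_preferred: int
) -> Preference:
    """Translate the indices of the chosen videos into reward functions."""
    if most_preferred == least_preferred:
        raise ValueError("most and least preferred videos must differ")
    for idx in (most_preferred, least_preferred):
        if not 0 <= idx < len(rewards):
            raise IndexError(f"video index {idx} is out of range")
    return Preference(rewards[most_preferred], rewards[least_preferred])
```

With K = 6 and N = 5, this amounts to 30 trained reward functions in total and five rounds in which a human makes two picks.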

4.3 AUTOMATIC FEEDBACK

In each iteration, the LLM not only incorporates human preferences but also receives automatically synthesized feedback. This feedback is composed of three elements: the evaluation of selected reward functions, the differences between historical good reward functions, and the reward trace of these historical reward functions.

Evaluation of reward functions: The component values that make up the good and bad reward functions are obtained from the environment during training and provided to the LLM. This helps the LLM assess the usefulness of different parts of the reward function by comparing the two.

Differences between historical reward functions: The best reward function selected by humans in each iteration is collected, and the differences between each pair of consecutive good reward functions are analyzed by another LLM. These differences are supplied to the primary LLM to help it adjust the reward function.

Reward trace of historical reward functions: The reward trace, consisting of the values of the good reward functions during training from all prior iterations, is provided to the LLM. This reward trace enables the LLM to evaluate how well the agent is actually able to optimize those reward components.
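The three feedback elements could be assembled into a single text block along these lines. The section labels and number formatting are assumptions made for illustration; the prompts actually used are in the paper's Appendix A.1 and are not reproduced here.

```python
from typing import Dict, List, Sequence


def format_components(label: str, components: Dict[str, Sequence[float]]) -> str:
    """Render the per-component values logged during training."""
    lines = [f"{label} reward function components:"]
    for name, values in components.items():
        rendered = ", ".join(f"{v:.2f}" for v in values)
        lines.append(f"  {name}: [{rendered}]")
    return "\n".join(lines)


def build_feedback(
    good_components: Dict[str, Sequence[float]],
    bad_components: Dict[str, Sequence[float]],
    diffs: List[str],
    traces: List[Sequence[float]],
) -> str:
    """Combine evaluation, differences, and reward traces into one prompt."""
    sections = [
        format_components("Good", good_components),
        format_components("Bad", bad_components),
    ]
    if diffs:
        # diffs[i] describes the change from good reward i+1 to good reward i+2.
        body = "\n".join(
            f"  iter {i + 1} -> {i + 2}: {d}" for i, d in enumerate(diffs)
        )
        sections.append("Differences between consecutive good reward functions:\n" + body)
    if traces:
        # traces[i] holds the reward values of the good function from iteration i+1.
        body = "\n".join(
            f"  iter {i + 1}: [" + ", ".join(f"{v:.2f}" for v in t) + "]"
            for i, t in enumerate(traces)
        )
        sections.append("Reward traces of previous good reward functions:\n" + body)
    return "\n\n".join(sections)
```

In the first iteration there is no history yet, so `diffs` and `traces` would be empty and only the two evaluation sections appear.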

Authors:

(1) Chao Yu, Tsinghua University;

(2) Hong Lu, Tsinghua University;

(3) Jiaxuan Gao, Tsinghua University;

(4) Qixin Tan, Tsinghua University;

(5) Xinting Yang, Tsinghua University;

(6) Yu Wang, with equal advising from Tsinghua University;

(7) Yi Wu, with equal advising from Tsinghua University and the Shanghai Qi Zhi Institute;

(8) Eugene Vinitsky, with equal advising from New York University ([email protected]).
