2024-12-5 00:42:33 Author: hackernoon.com

There are plenty of ways to integrate an AI model into your code, but when you are just starting out, it can be really hard to pick one. That's why I decided to share my experience of going down this path. In this article we will:

- Get familiar with the Hugging Face platform

- Explore the basics of the Hugging Face Inference API

- Create a Spring Boot project

- Develop a simple Java client that connects to the Inference API

- Pick a model

- Develop a small REST API

- And finally, test it all with a REST client.

Quite a lot for a start, isn’t it? 😀

What is Hugging Face

The only way to advance a field as sophisticated as artificial intelligence is to share knowledge and resources by building the biggest community possible.

That is the key concept behind this platform, and it is also why the platform is so open and friendly to anyone who wants to share, explore and discover the possibilities of the AI world. It hosts an enormous number of models waiting for you to try them. You can read more about its capabilities in the Hugging Face Hub documentation.

Now, let’s take a closer look at the Inference API.

What is Inference API

When you need to prototype your application or experiment with the capabilities of a chosen model, you can do it without complicated infrastructure or setup. The Inference API offers access to most of the models available on Hugging Face.

Essentially, all you need is the model URL and an API key (an access token). Of course, since it is free, the Inference API has some limitations. At the time of writing, a signed-up user could send up to 50 requests per hour, which is enough for a quick start. You can learn much more about the Inference API in its documentation.
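To make "a URL and an access token" concrete, here is a minimal sketch that only builds (and does not send) such a request with the JDK's built-in java.net.http client, assuming Java 11+. The InferenceRequests class, its build method and the hand-written JSON body are illustrative assumptions; the client we write later in this article uses OkHttp and Jackson instead.

```java
import java.net.URI;
import java.net.http.HttpRequest;

// Hypothetical helper: builds (but does not send) an Inference API request.
class InferenceRequests {
    // Base URL shared by all hosted models (the same value used as API_URL later on)
    static final String API_URL = "https://api-inference.huggingface.co/models/";

    static HttpRequest build(String modelName, String accessToken, String jsonBody) {
        return HttpRequest.newBuilder()
                // The model URL is the base URL plus the model name
                .uri(URI.create(API_URL + modelName))
                // The access token is sent as a Bearer token
                .header("Authorization", "Bearer " + accessToken)
                .header("Content-Type", "application/json")
                .POST(HttpRequest.BodyPublishers.ofString(jsonBody))
                .build();
    }

    public static void main(String[] args) {
        HttpRequest request = build("google-t5/t5-small", "<your-access-token-here>",
                "{\"inputs\": \"I love coffee\"}");
        System.out.println(request.method() + " " + request.uri());
    }
}
```

To actually send it, you would pass the request to HttpClient.newHttpClient().send(request, HttpResponse.BodyHandlers.ofString()).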

If you need many more requests, take a look at Inference Endpoints, although you will need a credit card for that. It is also worth knowing about Spring AI, which is under active development, but we won't need it for this article.

Are you ready to start? 😀

Create Spring Boot Project

This can be done with Spring Initializr or in IntelliJ, whichever you prefer. Either way, you need to:

- Create a new project

- Add the Spring Web dependency

- Add Lombok

With Spring Initializr

Visit Spring Initializr and create the project.

With IntelliJ

Alternatively, open IntelliJ, go to File ➞ New ➞ Project and pick Spring Initializr. Choose your desired settings and add Spring Web and Lombok.

Add more dependencies

In the build.gradle file, we need to add a few more dependencies for our client: OkHttp for sending HTTP requests and Jackson for building the JSON payload.

dependencies {
    implementation 'com.squareup.okhttp3:okhttp:4.12.0'
    implementation 'com.fasterxml.jackson.core:jackson-databind:2.17.2'
}

Writing a simple client for Inference API

To begin, we will develop the simplest possible client. I also picked an easy-to-grasp group of models: those that generate text from a prompt.

If you want to extend the client, you can find many more details in the API specification.

Create a client

Create a new package named client with a HuggingFaceModelClient class in it. This client will send requests to the Hugging Face platform, and we will construct it with the Builder pattern. Let's add some Lombok annotations to reduce boilerplate, plus the necessary fields:

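// Imports for the complete class, including the call() method added below
import com.fasterxml.jackson.databind.ObjectMapper;
import lombok.AllArgsConstructor;
import lombok.Builder;
import lombok.Getter;
import okhttp3.MediaType;
import okhttp3.OkHttpClient;
import okhttp3.Request;
import okhttp3.RequestBody;
import okhttp3.Response;

import java.io.IOException;
import java.util.HashMap;
import java.util.Map;

@Getter
@Builder
@AllArgsConstructor
public class HuggingFaceModelClient {

    private static final String API_URL = "https://api-inference.huggingface.co/models/";

    private final String modelName;
    private final String accessToken;
    private final int maxLength;
    private final Double temperature;
    private final int maxRetries;
    private final long retryDelay;

    // Used as the default when the builder does not set a client explicitly
    @Builder.Default
    private final OkHttpClient client = new OkHttpClient();
}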

Let me explain the fields of our client:

- API_URL is the common part of all model URLs on Hugging Face,

- modelName is the rest of the URL, identifying the exact model (e.g. google-t5/t5-small),

- accessToken is the token used for authentication, which you can create in your Hugging Face account settings,

- maxLength and temperature are model parameters,

- maxRetries is the maximum number of retries after an unsuccessful response,

- retryDelay is the pause between attempts, in milliseconds,

- @Builder.Default makes Lombok use the field's initializer as the default value whenever the builder does not set that field,

- OkHttpClient will help us send requests to the Hugging Face API.
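If @Builder.Default feels like magic, the hand-written sketch below shows roughly what it gives us: the builder falls back to the field's default value only when the caller does not set that field. It is a simplified illustration, not Lombok's actual generated code (which follows a similar idea); the ClientSettings class is hypothetical, and a String stands in for the OkHttpClient so the example has no dependencies.

```java
// Hypothetical, simplified illustration of what @Builder + @Builder.Default give us.
// A String stands in for OkHttpClient so the sketch has no dependencies.
final class ClientSettings {
    private final String modelName;
    private final String httpClient;

    private ClientSettings(String modelName, String httpClient) {
        this.modelName = modelName;
        this.httpClient = httpClient;
    }

    String getModelName() { return modelName; }

    String getHttpClient() { return httpClient; }

    // Plays the role of the field initializer (`= new OkHttpClient()` in our client)
    private static String defaultHttpClient() { return "default-client"; }

    static Builder builder() { return new Builder(); }

    static final class Builder {
        private String modelName;
        private String httpClient;
        private boolean httpClientSet; // remembers whether the caller overrode the default

        Builder modelName(String modelName) {
            this.modelName = modelName;
            return this;
        }

        Builder httpClient(String httpClient) {
            this.httpClient = httpClient;
            this.httpClientSet = true;
            return this;
        }

        ClientSettings build() {
            // Use the default only when the field was not set on the builder
            String client = httpClientSet ? httpClient : defaultHttpClient();
            return new ClientSettings(modelName, client);
        }
    }
}
```

In our real client this means that omitting .client(...) in the builder chain gives us a fresh OkHttpClient, while a test could still pass in its own instance.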

Now, let's create the most important method of this client, call():

public String call(String inputs) throws IOException {
    return "";
}

Inside this method, we first check that the input is not empty:

if (inputs == null || inputs.isBlank()) {
    throw new IllegalArgumentException("Input string cannot be empty");
}

Then, let’s shape the payload:

// Create the JSON payload using Jackson
ObjectMapper objectMapper = new ObjectMapper();
Map<String, Object> payload = new HashMap<>();
payload.put("inputs", inputs);

Map<String, Object> parameters = new HashMap<>();
parameters.put("max_length", maxLength);
if (temperature != null) {
    parameters.put("temperature", temperature);
}
payload.put("parameters", parameters);

String requestParams = objectMapper.writeValueAsString(payload);

And the complete URL:

String url = API_URL + modelName;

And finally, the code that sends the request and retries on failure:

RequestBody requestBody = RequestBody.create(requestParams,
        MediaType.parse("application/json"));

Request request = new Request.Builder()
        .url(url)
        .addHeader("Authorization", "Bearer " + accessToken)
        .post(requestBody)
        .build();

int retries = 0;
while (true) {
    try (Response response = client.newCall(request).execute()) {
        if (response.isSuccessful()) {
            return response.body().string();
        } else if (retries < maxRetries) {
            // Unsuccessful response: wait and try again
            retries++;
            try {
                Thread.sleep(retryDelay);
            } catch (InterruptedException e) {
                // Restore the interrupt flag and stop retrying
                Thread.currentThread().interrupt();
                throw new IOException("Interrupted while waiting to retry", e);
            }
        } else {
            throw new IOException("Unexpected response code: " + response.code());
        }
    }
}
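The loop above is a fixed-delay retry. As a sketch, the same pattern can be pulled out into a small dependency-free helper; the Retry class, the Attempt interface and the use of an IOException to signal a failed attempt are illustrative assumptions, not part of the original client. As in call(), the operation runs once and is then retried at most maxRetries times.

```java
import java.io.IOException;

// Hypothetical helper extracting the fixed-delay retry loop used in call().
final class Retry {

    // One attempt at the operation; throws IOException when the attempt fails
    @FunctionalInterface
    interface Attempt<T> {
        T run() throws IOException;
    }

    static <T> T withFixedDelay(Attempt<T> attempt, int maxRetries, long retryDelayMillis)
            throws IOException {
        int retries = 0;
        while (true) {
            try {
                return attempt.run();
            } catch (IOException e) {
                if (retries >= maxRetries) {
                    throw e; // retries exhausted: report the last failure
                }
                retries++;
                try {
                    Thread.sleep(retryDelayMillis);
                } catch (InterruptedException ie) {
                    // Restore the interrupt flag and give up instead of retrying
                    Thread.currentThread().interrupt();
                    throw new IOException("Interrupted while waiting to retry", ie);
                }
            }
        }
    }
}
```

Signalling failure with an exception keeps the helper independent of OkHttp: the non-successful branch of call() would simply throw inside the attempt. A common refinement is to grow the delay after each attempt (exponential backoff), whereas our client keeps it fixed.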

Time for a short coffee break. ☕ Then let's dive into the world of AI models!

Writing a simple Controller

Before we start, let's clarify a few important points.

Choose a model

To start, go to the Models page. The number of models may seem overwhelming, but you can filter them by task. As a developer, you should first pay attention to the types of a model's inputs and outputs. Here are several types of tasks I find easier to start with:

- Text generation

- Text classification

- Text-to-image

Since we are using the Inference API, there is no need to worry about the size of a model. Size only matters if you plan to use the chosen model in production later.

In this project we are going to use google-t5/t5-small, a text-to-text generation model. Let's take a closer look at it.

You can find the model name near the top left corner of the page.

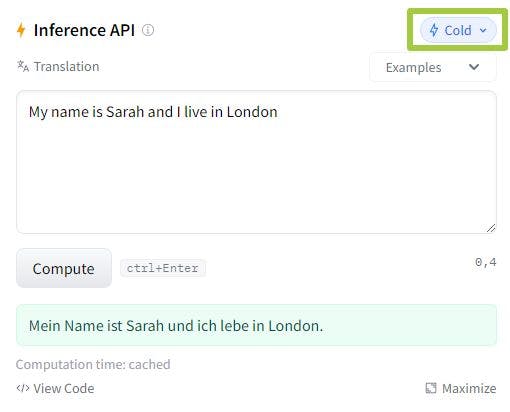

And you can play with it through the Inference API widget on the model page.

Pay attention to the word Cold: it means the model is not loaded at the moment. Once you start sending requests to the Inference API, it will soon become Warm. While the model is cold, your requests may fail with a 5xx server error, such as 500 Internal Server Error or 503 Service Unavailable.

Create an Access Token

First, you need to create an account on Hugging Face.

Then go to Access Tokens in your account settings and create a new token. We will need it to authenticate our application with Hugging Face services.

For our purposes, a read token is enough. It is recommended to use one token per app and to give it a name that reflects the app it belongs to. You can learn more about token roles and how to create tokens in the Hugging Face documentation.

Write Controller

In a controller package, create a ChatController class and add the necessary annotations:

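import java.io.IOException;
import java.util.Map;

import org.springframework.web.bind.annotation.GetMapping;
import org.springframework.web.bind.annotation.RequestMapping;
import org.springframework.web.bind.annotation.RequestParam;
import org.springframework.web.bind.annotation.RestController;
// Also import HuggingFaceModelClient from your client package

@RestController
@RequestMapping("/ai")
public class ChatController {

    private static final String ACCESS_TOKEN = "<your-access-token-here>";
}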

Access Token usage

While hard-coding the token is fine for a hello world app, in real development you must keep your access token out of the source code to prevent it from leaking. One way to do that is to store it in an environment variable (or pass it as a command-line argument when launching the application) and read it at startup.
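As a sketch of that approach, the helper below reads the token from an environment variable and fails fast when it is missing. The variable name HF_ACCESS_TOKEN and the AccessTokens class are hypothetical; to keep the logic testable, the environment is passed in as a Map, and System.getenv() supplies the real one.

```java
import java.util.Map;

// Hypothetical helper for loading the access token from outside the source code.
final class AccessTokens {
    // Assumed variable name; choose whatever fits your deployment
    static final String ENV_VAR = "HF_ACCESS_TOKEN";

    static String fromEnvironment(Map<String, String> env) {
        String token = env.get(ENV_VAR);
        if (token == null || token.isBlank()) {
            // Fail at startup instead of sending unauthenticated requests later
            throw new IllegalStateException("Environment variable " + ENV_VAR + " is not set");
        }
        return token.strip();
    }

    public static void main(String[] args) {
        // Real usage: read from the process environment
        String token = fromEnvironment(System.getenv());
        System.out.println("Token loaded (" + token.length() + " characters)");
    }
}
```

In a Spring application you could instead inject the value with @Value("${HF_ACCESS_TOKEN}"), since Spring resolves property placeholders against environment variables.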

Next, let's create a HuggingFaceModelClient in the ChatController constructor:

private final HuggingFaceModelClient client;

public ChatController() {
    this.client = HuggingFaceModelClient.builder()
            .modelName("google-t5/t5-small")
            .accessToken(ACCESS_TOKEN)
            .maxLength(100)
            .maxRetries(5)
            .retryDelay(1000)
            .build();
}

And implement the GET method:

@GetMapping("/generate")
public Map<String, String> generate(
        @RequestParam(value = "message", defaultValue = "I love coffee") String message) throws IOException {
    return Map.of("generation", client.call(message));
}

We are close to the end of our project. 😀

Tests

I think it's easier to use a REST client in our case, but it is always useful to have a JUnit test.

Add a test

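It's time to add a simple test to our project. Note that it goes through our controller to the real Inference API, so it needs a valid access token and a network connection: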

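import static org.assertj.core.api.Assertions.assertThat;

import org.junit.jupiter.api.Test;
import org.springframework.beans.factory.annotation.Autowired;
import org.springframework.boot.test.context.SpringBootTest;
import org.springframework.boot.test.web.client.TestRestTemplate;
import org.springframework.http.HttpStatus;
import org.springframework.http.ResponseEntity;

@SpringBootTest(webEnvironment = SpringBootTest.WebEnvironment.RANDOM_PORT)
class AiDemoApplicationTests {

    @Autowired
    TestRestTemplate restTemplate;

    @Test
    public void shouldReceiveResponseWithOKStatusCode() {
        ResponseEntity<String> response = restTemplate
                .getForEntity("/ai/generate?message=I%20love%20coffee", String.class);
        assertThat(response.getStatusCode()).isEqualTo(HttpStatus.OK);
    }
}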

Test it with a REST client

I used ARC (Advanced REST Client), but you can use any other. Let's construct a simple URL like this one:

http://localhost:8080/ai/generate?message=Everyone%20loves%20coffee
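If you build such URLs in code (in a test, for example), the message must be URL-encoded. Here is a minimal sketch, assuming the app runs on localhost:8080 as above; the RequestUrls class is hypothetical. URLEncoder applies form encoding, which turns spaces into "+", so the sketch swaps them for "%20" to match the URL above (a literal "+" in the message is already encoded as "%2B" and is unaffected).

```java
import java.net.URLEncoder;
import java.nio.charset.StandardCharsets;

// Hypothetical helper that builds the /ai/generate URL for a given message.
final class RequestUrls {
    static String generateUrl(String baseUrl, String message) {
        // URLEncoder uses form encoding (space -> "+"); replace with "%20" for a query value
        String encoded = URLEncoder.encode(message, StandardCharsets.UTF_8).replace("+", "%20");
        return baseUrl + "/ai/generate?message=" + encoded;
    }

    public static void main(String[] args) {
        System.out.println(generateUrl("http://localhost:8080", "Everyone loves coffee"));
        // prints http://localhost:8080/ai/generate?message=Everyone%20loves%20coffee
    }
}
```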

Run the application and open your favorite REST client. Create a GET request to your app's REST API and send it. You should get a response similar to this one.

As you can see, the model responded with Jeder liebt Kaffee ! (German for "Everyone loves coffee") 😀

Congratulations! 🎉

A few words about errors

If you get a 400 Bad Request error, something is probably wrong with the request, so review your code 🧐

If you get a 500 Internal Server Error, check whether your model is still cold and send a few more requests to wake it up 🙂

Of course, there is a better way to handle this error if you are ready to spend some time modifying our HuggingFaceModelClient: add one more parameter to the request, x-wait-for-model. You can find more information about it in the Inference API documentation.
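As a sketch of that modification, the request only needs one extra header. The snippet assumes the x-wait-for-model: true header described in the Inference API documentation, and it uses the JDK's java.net.http builder only so that it runs without dependencies; in HuggingFaceModelClient you would add the same header with one more .addHeader(...) call on the OkHttp Request.Builder. The WaitForModel class is hypothetical.

```java
import java.net.URI;
import java.net.http.HttpRequest;

// Hypothetical sketch: ask the Inference API to wait for a cold model instead of failing.
final class WaitForModel {
    static HttpRequest build(String url, String accessToken, String jsonBody) {
        return HttpRequest.newBuilder(URI.create(url))
                .header("Authorization", "Bearer " + accessToken)
                .header("Content-Type", "application/json")
                // Assumed header from the Inference API docs: wait until the model is loaded
                .header("x-wait-for-model", "true")
                .POST(HttpRequest.BodyPublishers.ofString(jsonBody))
                .build();
    }
}
```

Waiting makes the first request slower, so with OkHttp you may also want a longer read timeout than the default, for example via new OkHttpClient.Builder().readTimeout(...).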

Source code

All the code discussed here can be found on GitHub.

Conclusion

In this article we came a long way, integrating a Spring Boot REST API with Hugging Face. We've learned about:

- some basics of the Hugging Face platform,

- the process of choosing a model for your application,

- creating an access token,

- writing a client for interacting with the Inference API on Hugging Face,

- writing a simple controller and even a test.

That was a really big job 👏
