2025-1-29 20:15:17 Author: hackernoon.com

4. Experiments

This section covers the empirical details of model training and evaluation on the task of speech-to-speech translation with speaker style transfer. We consider Spanish-to-English (Es-En) and Hungarian-to-English (Hu-En) as representative of translation between similar and distant languages, respectively.

Data. We used in-house semantically aligned data consisting of 250k Spanish-English and 300k Hungarian-English speech pairs. A set of 1k samples was randomly held out from the training data as the validation set. Table 2 summarizes all data statistics.

Automatic evaluation metrics. Following existing studies of speech translation, we reuse the ASR-BLEU metric to measure semantic translation quality. Generated audio is first transcribed into text with an ASR tool; we use the medium-sized Whisper English ASR model[1] in this work. BLEU then evaluates the n-gram overlap between the transcripts and the ground-truth translations.
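The BLEU half of ASR-BLEU can be illustrated with a minimal sketch. It assumes the ASR transcripts are already available as strings, and it uses lowercasing and whitespace tokenization, which are simplifications chosen here for illustration. The function names are hypothetical; an actual evaluation would more likely rely on an established BLEU implementation such as SacreBLEU.

```python
import math
from collections import Counter


def ngrams(tokens, n):
    """Count all n-grams of order n in a token list."""
    return Counter(tuple(tokens[i:i + n]) for i in range(len(tokens) - n + 1))


def corpus_bleu(hypotheses, references, max_n=4):
    """Corpus-level BLEU (0-100), uniform n-gram weights, one reference each."""
    matches = [0] * max_n
    totals = [0] * max_n
    hyp_len = ref_len = 0
    for hyp, ref in zip(hypotheses, references):
        # Assumption: lowercase + whitespace split stands in for real tokenization.
        h, r = hyp.lower().split(), ref.lower().split()
        hyp_len += len(h)
        ref_len += len(r)
        for n in range(1, max_n + 1):
            h_counts, r_counts = ngrams(h, n), ngrams(r, n)
            # Clipped matches: an n-gram counts at most as often as in the reference.
            matches[n - 1] += sum(min(c, r_counts[g]) for g, c in h_counts.items())
            totals[n - 1] += sum(h_counts.values())
    if hyp_len == 0 or min(matches) == 0:
        return 0.0
    log_precision = sum(math.log(m / t) for m, t in zip(matches, totals)) / max_n
    # Brevity penalty punishes transcripts shorter than the references.
    brevity = 1.0 if hyp_len > ref_len else math.exp(1 - ref_len / hyp_len)
    return 100 * brevity * math.exp(log_precision)


transcripts = ["the cat sat on the mat today"]   # stand-in for ASR output
translations = ["the cat sat on the mat"]        # ground-truth translation
print(round(corpus_bleu(transcripts, translations), 1))
```

Because the score is computed on ASR transcripts, it reflects recognition errors as well as translation errors, which is why the same ASR model is applied to every system being compared.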

Another important metric is vocal style similarity (VSim), which quantifies how similar the generated audio sounds to the source speech in terms of vocal style. Following (Barrault et al., 2023), we extract vocal style embeddings of the generated and source speech with a pretrained WavLM encoder (Chen et al., 2022) and compute the cosine similarity of the two embeddings. Higher embedding similarity indicates better vocal style transfer. Furthermore, we report model size, measured as the number of parameters, to reflect model efficiency.
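The similarity step of VSim reduces to a cosine between two embedding vectors. The sketch below assumes the embeddings have already been extracted (the WavLM encoder is not invoked here), and the toy vectors and function name are purely illustrative.

```python
import math


def cosine_similarity(a, b):
    """Cosine similarity between two equal-length embedding vectors."""
    if len(a) != len(b):
        raise ValueError("embeddings must have the same dimension")
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    if norm_a == 0 or norm_b == 0:
        raise ValueError("cosine similarity is undefined for a zero vector")
    return dot / (norm_a * norm_b)


# Hypothetical low-dimensional embeddings standing in for encoder outputs.
source_embedding = [0.2, 0.7, -0.1]
generated_embedding = [0.25, 0.6, -0.05]
print(cosine_similarity(source_embedding, generated_embedding))
```

Cosine similarity ignores vector magnitude, so only the direction of the style embedding matters for the score.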

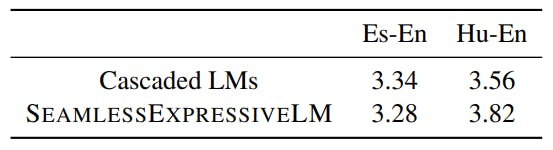

Subjective metrics. Besides automatic metrics, we include Mean Opinion Score (MOS) as a subjective metric of speech quality, with scores ranging from 1 to 5 (a higher score indicates better quality). We draw 50 samples from the model outputs in each test set and have two annotators evaluate their quality independently. We report the score averaged over annotators and test samples.
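The averaging described above can be sketched as follows, assuming each annotator rates the same set of samples. The ratings shown are made-up placeholders, not results from the study, and the function name is hypothetical.

```python
from statistics import mean


def mean_opinion_score(ratings_per_annotator):
    """Average 1-5 ratings over all annotators and all samples."""
    lengths = {len(r) for r in ratings_per_annotator}
    if len(lengths) != 1:
        raise ValueError("every annotator must rate the same number of samples")
    flat = [score for ratings in ratings_per_annotator for score in ratings]
    if any(not 1 <= score <= 5 for score in flat):
        raise ValueError("MOS ratings must lie between 1 and 5")
    return mean(flat)


# Placeholder ratings: two annotators, three samples each.
ratings = [[4, 5, 3], [4, 4, 3]]
print(round(mean_opinion_score(ratings), 2))
```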

Models. For a fair comparison with SEAMLESSEXPRESSIVELM, we included recent S2ST models that are trained only on semantically aligned speech data.

• Enc-Dec S2ST. We included a textless translation model with encoder-decoder architecture as a strong baseline for semantic translation (Duquenne et al., 2023). The source speech is encoded by convolutional and Transformer encoder layers, and its decoder predicts the target semantic units. A separately trained vocoder synthesizes target speech from semantic units.

• Cascaded LMs[2]. PolyVoice (Dong et al., 2023) tackles S2ST with a cascade of three LMs for (1) semantic unit translation, (2) generation of the first acoustic stream, and (3) generation of the remaining acoustic streams. LM-1 is a decoder-only model that translates source semantic units into target semantic units. LM-2 and LM-3 follow VALL-E for acoustic generation and are trained on target speech, i.e., English audio.

SEAMLESSEXPRESSIVELM consists of 12 AR decoder layers and 12 NAR layers. The token embedding dimension is 512. Each layer is a Transformer decoder layer with 16 attention heads, a layer dimension of 1024 and a feedforward dimension of 4096. The model is trained with a dropout of 0.1 at a learning rate of 0.0002. The cascaded model consists of three LMs, each of which has 12 Transformer decoder layers, with the other hyperparameters the same as in SEAMLESSEXPRESSIVELM. As for Enc-Dec S2ST, we use 2 convolutional layers, 6 encoder layers and 6 decoder layers, with layer and feedforward dimensions of 1024 and 4096, so that its parameter size matches that of the semantic LM in the cascaded model.
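As a rough sanity check on these hyperparameters, the layer stacks can be sized with a back-of-the-envelope count. The sketch assumes each decoder layer holds only self-attention (four square projections) and a two-matrix feedforward block, and it ignores biases, normalization, embeddings and output projections. These simplifications are ours, not details given by the authors.

```python
def transformer_layer_params(d_model, d_ff):
    """Approximate weight count of one decoder layer (weights only)."""
    attention = 4 * d_model * d_model   # Q, K, V and output projections
    feedforward = 2 * d_model * d_ff    # up- and down-projection
    return attention + feedforward


def stack_params(num_layers, d_model=1024, d_ff=4096):
    """Approximate weight count of a stack of identical layers."""
    return num_layers * transformer_layer_params(d_model, d_ff)


seamless = stack_params(12 + 12)   # 12 AR + 12 NAR layers
cascaded = stack_params(3 * 12)    # three LMs with 12 layers each

print(f"{seamless / 1e6:.1f}M")    # 302.0M
print(f"{cascaded / 1e6:.1f}M")    # 453.0M
```

Under these assumptions the estimates come out near 302M and 453M, slightly below the 312M and 469M reported in the results. The remainder would plausibly come from the components the estimate leaves out.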

Training. For SEAMLESSEXPRESSIVELM in Spanish-to-English translation, the training acoustic prompt ratio is uniformly sampled between 0.25 and 0.3, and the inference prompt ratio is 0.3. For Hungarian-to-English translation, the training ratio is sampled between 0.20 and 0.25, and the inference ratio is 0.2. Section 5.2 discusses how the training and inference ratios are selected for Spanish-to-English. For cascaded LMs, the acoustic prompt is fixed at 3 seconds, as in (Wang et al., 2023).
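The ratio sampling can be sketched as below. The sketch assumes that the ratio is the fraction of a target sequence used as the acoustic prompt and that the prompt is taken from the start of the sequence. Both points are illustrative assumptions rather than details confirmed in this section, and the function names are hypothetical.

```python
import random


def sample_prompt_ratio(low, high, rng=random):
    """Draw a training prompt ratio uniformly from [low, high]."""
    return rng.uniform(low, high)


def split_acoustic_prompt(target_frames, ratio):
    """Split a sequence into (prompt, remainder) by the given ratio.

    Assumption: the prompt is the leading portion of the target sequence.
    """
    cut = int(len(target_frames) * ratio)
    return target_frames[:cut], target_frames[cut:]


rng = random.Random(0)                         # seeded for reproducibility
ratio = sample_prompt_ratio(0.25, 0.3, rng)    # Es-En training range
frames = list(range(200))                      # stand-in for acoustic frames
prompt, remainder = split_acoustic_prompt(frames, ratio)
print(len(prompt), len(remainder))
```

At inference the ratio is fixed instead of sampled, for example `split_acoustic_prompt(frames, 0.3)` for Spanish-to-English.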

Inference. We set the beam size to 10 for all models during inference. SEAMLESSEXPRESSIVELM samples acoustic units with a temperature of 0.9. For cascaded LMs, semantic-to-acoustic generation uses a temperature of 0.8 following (Wang et al., 2023). For LM-based S2ST models, target speech is synthesized from acoustic units by the EnCodec decoder. Since Enc-Dec predicts semantic units, a pretrained unit-based HiFi-GAN vocoder is applied to synthesize speech following (Lee et al., 2022).
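Temperature sampling of a single acoustic unit can be sketched as follows. The logits are toy values and the function names are hypothetical. The sketch shows only the general technique of dividing logits by a temperature before the softmax, not the authors' decoding code.

```python
import math
import random


def softmax_with_temperature(logits, temperature):
    """Turn logits into probabilities after scaling by 1 / temperature."""
    if temperature <= 0:
        raise ValueError("temperature must be positive")
    scaled = [x / temperature for x in logits]
    peak = max(scaled)                           # subtract max for stability
    exps = [math.exp(s - peak) for s in scaled]
    total = sum(exps)
    return [e / total for e in exps]


def sample_unit(logits, temperature=0.9, rng=random):
    """Sample one unit index from temperature-scaled probabilities."""
    probs = softmax_with_temperature(logits, temperature)
    return rng.choices(range(len(logits)), weights=probs, k=1)[0]


toy_logits = [2.0, 1.0, 0.5, -1.0]               # hypothetical unit scores
rng = random.Random(0)
print(sample_unit(toy_logits, temperature=0.9, rng=rng))
```

A temperature below 1 sharpens the distribution toward the highest-scoring units, so 0.8 yields somewhat less varied samples than 0.9.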

4.1 Semantic Prompt

Results. Table 3 reports the model size, ASR-BLEU and VSim of different S2ST models for Es-En and Hu-En. The Enc-Dec model serves as the upper bound of semantic translation quality, but it does not preserve the speaker's vocal style. SEAMLESSEXPRESSIVELM outperforms Cascaded LMs with +10.7% and +7.2% similarity in the two directions. It performs on par with Cascaded LMs in semantic translation, as reflected by comparable ASR-BLEU scores. In Table 4, SEAMLESSEXPRESSIVELM shows good MOS in comparison with Cascaded LMs. We note that SEAMLESSEXPRESSIVELM is also more efficient, with 312M parameters against 469M for Cascaded LMs.

[1] https://huggingface.co/openai/whisper-medium.en

[2] We use the architecture of PolyVoice and replace SoundStream with EnCodec for the acoustic units.
