2021-01-23 02:00:00 Author: www.synopsys.com

Failure to address security early in the software development life cycle can increase business risks. Learn how a proactive, holistic approach helps achieve more-secure software.

Sometimes it’s hard to convince people that security needs to be part of every software development process. “We passed all our tests,” they might tell you. “Isn’t that good enough?”

The problem is that functional testing usually focuses on the happy path—a place where users act rationally, systems behave well, and nobody is attacking your application. The job of the testing team is often defined too narrowly as ensuring that the software has the required functionality.

The real world is a messy and chaotic place. As soon as your software is deployed, it will encounter a wide range of unexpected and badly formed input coming from systems and humans that are accident-prone, like Mr. Magoo—or actively malicious, like Lex Luthor.

To make this absolutely clear, let’s take a look at an extremely simple piece of software. It passes functional tests but is alarmingly easy to break.

Requirements and design

Somebody in your company has a great idea for a software product. “Give it your name,” gushes your product manager, “and it says ‘hello’ back to you. Everybody’s gonna love this thing!” They decide to call it faceplant.

The product has a single functional requirement:

- Given an input string x, the product will produce the output “Hello x.”

Without any security review of the design, the requirements are done and get handed off to the development team.

Implementation

In the absence of any consideration for security, the development team has latitude to choose programming languages, frameworks, and open source software components to meet the functional requirements.

For whatever reason, your developers use C to implement this software. Here’s what they write:

```c
#include <stdio.h>
#include <string.h>

int main(int argc, char** argv) {
    char message[32];
    strcpy(message, "Hello ");
    strcat(message, argv[1]);
    strcat(message, ".\n");
    printf(message);
    return 0;
}
```

The developers build the software and do a little local testing to make sure it works:

```
$ ./faceplant Fleur
Hello Fleur.
$ ./faceplant Viktor
Hello Viktor.
$
```

Everything looks good, so they tell the testing group that it’s ready for testing.

Testing

The test group designs tests to make sure the functional requirements are met. They might do these manually, or they could automate them. Regardless, they work up a series of test cases that will ensure the software works as intended.

They might start with the same test cases the developers used, and then expand them.

If they’re really going the extra mile, they might throw in some test cases to support non-English-speaking customers, even though there’s no functional requirement for this.

```
$ ./faceplant Sørina
Hello Sørina.
$ ./faceplant Étienne
Hello Étienne.
$
```

The software passes all the tests and gets handed off to the deployment and release team.

Wait … what?

The product team is just about finished with champagne toasts when things start falling apart.

Bug reports start flooding in. When faceplant is deployed as a network service, servers begin suffering denial-of-service attacks, and some may even be compromised.

What happened? All the tests passed, which means the software is perfect, right? Right?

Nope.

Meanwhile, in a secret hacker lair

The fundamental problem here was trusting user input. Both the developers and the testers focused on functionality, assuming that the software would be handed input that makes sense.

An attacker looking to exploit faceplant would utterly disregard any explicit or implied rules about input. The first thing a hacker might try is supplying no input at all:

```
$ ./faceplant
Segmentation fault (core dumped)
$
```

Whoops, that produced a segmentation fault. Next, an attacker might try supplying some long input:

```
$ ./faceplant 1234567890123456789012345678901234567890
Hello 1234567890123456789012345678901234567890.
Segmentation fault (core dumped)
$
```

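That gave another segmentation fault. This one looks promising to the attacker: the 40-character name overflowed the 32-byte message buffer, and a stack buffer overflow like this can sometimes be turned into a remote code execution exploit.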
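To see why the long name crashes the program, count the bytes that the `strcpy`/`strcat` sequence writes into `message`. The two helpers below are hypothetical names used only for this arithmetic; they are not part of faceplant.

```c
#include <string.h>

/* Hypothetical helper: bytes that faceplant's strcpy/strcat sequence writes
 * into message[]: "Hello " + name + ".\n" + the terminating NUL. */
size_t greeting_bytes_needed(const char *name)
{
    return strlen("Hello ") + strlen(name) + strlen(".\n") + 1;
}

/* Hypothetical helper: longest name (in bytes) that fits in a buffer of
 * buffer_size bytes; returns 0 when there is no room for even one name byte. */
size_t max_name_bytes(size_t buffer_size)
{
    size_t fixed = strlen("Hello ") + strlen(".\n") + 1;   /* 9 bytes */
    return buffer_size > fixed ? buffer_size - fixed : 0;
}
```

For the 40-character name, `greeting_bytes_needed` returns 49, so the final string runs 17 bytes past the end of the 32-byte buffer, while `max_name_bytes(32)` is only 23. Writing past the end of a local array is undefined behavior; on typical platforms it overwrites neighboring stack data such as saved return addresses, which is what makes this class of bug attractive to attackers. Note also that C counts bytes, not characters: in UTF-8 the six-letter name Sørina takes seven bytes.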

The attacker might also try supplying format strings:

```
$ ./faceplant "%p %p %p %p"
Hello 0x7ffd1acd62ec 0x11 0xffffffffffffffed 0x7ff5d8d41d80.
$
```

Now the attacker can see the contents of memory, which could help in crafting an exploit or reveal sensitive data, much as the Heartbleed vulnerability leaked memory from affected servers.
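The root cause is that `printf(message)` uses attacker-controlled text as the format string, so each `%p` in the name makes `printf` read an argument that was never passed (in practice, whatever happens to be in registers or on the stack). A minimal sketch of the fix, using a hypothetical function name, passes the text as data behind a fixed `"%s"` format:

```c
#include <stdio.h>

/* Hypothetical replacement for faceplant's printf(message): the format is the
 * fixed literal "%s", so directives inside message (such as "%p" or "%n") are
 * printed as ordinary characters instead of being interpreted by printf. */
int print_message(FILE *stream, const char *message)
{
    return fprintf(stream, "%s", message);   /* returns characters written */
}
```

With the attacker's input, `print_message(stdout, "Hello %p %p %p %p.\n")` prints the text literally and returns 19, the number of characters written. `fputs(message, stdout)` is an equally safe choice. GCC and Clang can also warn about the original pattern through `-Wformat-security`.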

Yes, it’s a toy

Of course, faceplant is not a real piece of software. But it highlights how easy it is to introduce bugs into even a trivial implementation.

Admittedly, our development team did a truly awful job with this one. But that’s not really the point. Developers will make mistakes—they’re human, after all—so the process that surrounds them must help drive down risk in the software they’re creating.

If this can happen in 10 lines of code, what about an application with 10,000 lines? 100,000? A million?

Make sure it doesn’t fail

Functional testing is important. You use it to make sure that the software works when used as intended.

But it’s equally important to make sure that the software doesn’t fail when weird stuff happens. If somebody runs the software without specifying a name, it shouldn’t produce a segmentation fault. Instead, it should either not respond at all, respond with a polite error message, or write information to a log file somewhere. If somebody calls the software with a very long name, the software should handle it gracefully, for example by rejecting the name rather than overflowing a buffer.
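One way to meet both expectations is sketched below, under assumptions of our own: the helper name `build_greeting`, its return convention (0 for success, -1 for rejected input), and the choice to reject rather than truncate an over-long name are all hypothetical. The function counts every byte before writing any, so an oversized name never touches the buffer, and it passes the name to `snprintf` as an argument rather than as the format string.

```c
#include <stdio.h>
#include <string.h>

/* Hypothetical helper for a hardened faceplant: writes "Hello <name>.\n"
 * into out. Returns 0 on success, or -1 (leaving out untouched) when the
 * name is missing or the greeting would not fit in out_size bytes. */
int build_greeting(char *out, size_t out_size, const char *name)
{
    if (out == NULL || name == NULL) {
        return -1;                      /* no name supplied */
    }
    /* Count every byte before writing any: prefix, name, suffix, NUL. */
    size_t needed = strlen("Hello ") + strlen(name) + strlen(".\n") + 1;
    if (needed > out_size) {
        return -1;                      /* reject instead of overflowing */
    }
    /* The name is an argument, never the format string. */
    snprintf(out, out_size, "Hello %s.\n", name);
    return 0;
}
```

In `main`, the C standard guarantees that `argv[argc]` is a null pointer, so when no name is given `argv[1]` is `NULL` and can be passed straight through. If `build_greeting(message, sizeof message, argv[1])` returns -1, the program can print a short usage message to `stderr` and exit with a nonzero status; otherwise it prints the greeting with `fputs(message, stdout)` instead of `printf(message)`.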

Battle-hardened developers know these things and write more-robust, more-secure code. Battle-hardened test teams know these things as well, and make test cases that supply unexpected or unusual inputs to software to see what happens.

Proactive security is inseparable from software development

Relying on battle-hardened developers and battle-hardened testers isn’t a viable strategy, though. For one thing, that kind of talent is hard to find. More importantly, even if you have the best engineers, they still miss things.

Baking security into every phase of software development, and taking advantage of automation, is the best way to achieve more-secure software and drive down business risk.

- Design. When an application or feature is envisioned, designers and architects need to adopt an attacker mindset to see how the application could be compromised. Threat modeling and architectural risk assessments are useful at this phase and help improve the overall structure and resiliency of the application.

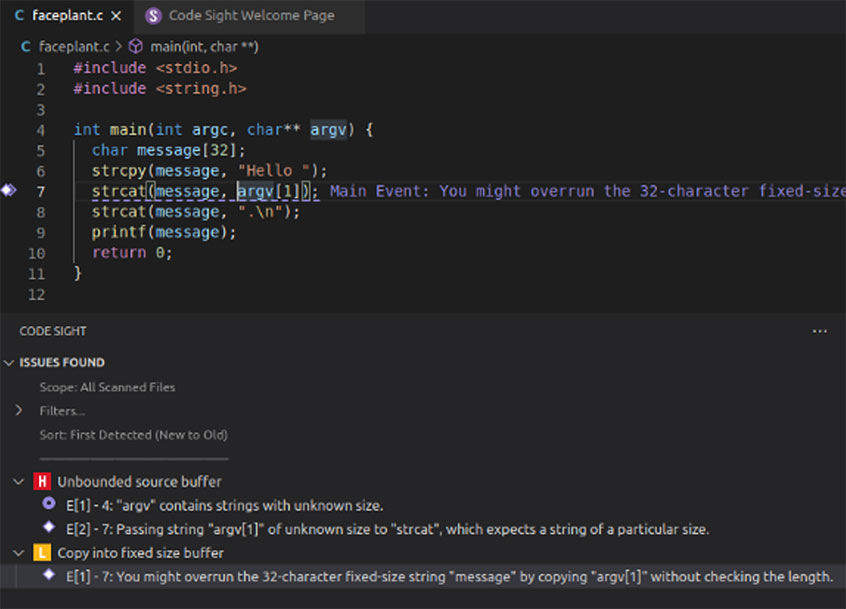

- Implementation. Tools such as the Code Sight™ IDE plugin help developers identify and remediate security issues as they’re writing code. Code Sight is like a senior engineer peeking over your shoulder as you write code, pointing out problems, suggesting solutions, and explaining concepts you don’t understand.

- Testing. Security testing should be incorporated into the test cycle just like functional testing. Automated security testing (static application security testing [SAST], software composition analysis [SCA], fuzzing, interactive application security testing [IAST], etc.) should be run automatically, with results feeding into the issue tracker and other systems that the development team is already using.

- Deployment. Policies and processes should mandate safe and secure deployments. SCA can also be advantageous here to examine open source components present in a deployment container or system.

Aside from the individual steps of the development cycle, continuous efforts are also helpful.

- Education. Ongoing education about software security topics helps the entire organization make better choices and reduce risk. Topics can range from general software security to specific coverage of programming languages and frameworks.

A proactive, holistic approach to security is the best way to reduce business risk.

Nip bugs in the bud

For example, as our developer was writing that horrible code, the Synopsys Code Sight IDE plugin could have pointed out some of its problems.

This type of nearly immediate feedback is immensely helpful. It’s orders of magnitude less expensive to fix bugs early in the development cycle; the more bugs you can find earlier, the better.

Use SCA to manage third-party components

Nearly all applications use third-party, often open source, components as building blocks. Keeping track of those components, their known vulnerabilities, and their licenses is a crucial part of building software. It even extends to the maintenance phase, when teams must respond appropriately to newly discovered vulnerabilities in components their software already uses.

Our faceplant example is small enough that it doesn’t use any third-party components, but many applications have hundreds or thousands of components.

Automation helps here, too. Synopsys Black Duck® scans source code to find third-party components and provides comprehensive reports on the vulnerabilities and licenses associated with those components, along with workflows and much more.
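Roughly speaking, part of what an SCA tool does is match each discovered component version against vulnerability advisories. The sketch below illustrates only that comparison step; the function names are hypothetical, it assumes plain `MAJOR.MINOR.PATCH` version strings, and it says nothing about how Black Duck works internally. One detail it does show: versions must be compared numerically, because as strings "1.10.0" sorts before "1.9.3".

```c
#include <stdio.h>

/* Parses "MAJOR.MINOR.PATCH" into v[0..2]; returns 1 on success, 0 otherwise.
 * Assumption: real tools handle many more version formats than this. */
static int parse_version(const char *s, int v[3])
{
    return sscanf(s, "%d.%d.%d", &v[0], &v[1], &v[2]) == 3;
}

/* Hypothetical advisory check: is the installed version older than the first
 * version that contains the fix? Returns 1 if vulnerable, 0 if not,
 * and -1 if either version string is malformed. */
int is_vulnerable(const char *installed, const char *fixed_in)
{
    int a[3], b[3];
    if (!parse_version(installed, a) || !parse_version(fixed_in, b)) {
        return -1;
    }
    for (int i = 0; i < 3; i++) {
        if (a[i] != b[i]) {
            return a[i] < b[i];         /* numeric, not lexical, comparison */
        }
    }
    return 0;                           /* same version: already fixed */
}
```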

Complement functional testing with fuzzing

Fuzzing is a technique for delivering unexpected and malformed inputs to an application. It’s a perfect complement to functional testing.

Functional testing helps verify that an application works. Fuzzing helps verify that an application doesn’t fail.
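Nothing below reflects how Defensics works internally. As a tool-agnostic illustration of the idea, here is a tiny random fuzzer in C that throws arbitrary byte strings at a greeting function and places canary bytes after the 32-byte buffer to notice writes past its end, a crude stand-in for memory-error detectors such as AddressSanitizer. Both targets are hypothetical: one is bounded by `snprintf`, the other has a deliberate off-by-one in its length check, the kind of bug a handful of happy-path tests is unlikely to hit.

```c
#include <stdio.h>
#include <stdlib.h>
#include <string.h>

#define BUF_SIZE 32   /* same size as faceplant's message buffer */
#define GUARD 8       /* canary bytes placed just past the buffer */
#define CANARY 0xA5

/* Signature shared by the hypothetical greeting functions under test. */
typedef int (*greet_fn)(char *out, size_t out_size, const char *name);

/* Hypothetical target 1: bounded by snprintf, never writes past out_size. */
int greet_bounded(char *out, size_t out_size, const char *name)
{
    int n = snprintf(out, out_size, "Hello %s.\n", name);
    return (n >= 0 && (size_t)n < out_size) ? 0 : -1;
}

/* Hypothetical target 2: the length check forgets the NUL terminator, so a
 * name of exactly out_size - 8 bytes makes it write one byte too many. */
int greet_off_by_one(char *out, size_t out_size, const char *name)
{
    size_t len = strlen(name);
    if (6 + len + 2 > out_size) {       /* bug: should also count the NUL */
        return -1;
    }
    memcpy(out, "Hello ", 6);
    memcpy(out + 6, name, len);
    memcpy(out + 6 + len, ".\n", 2);
    out[6 + len + 2] = '\0';            /* lands on out[out_size] when tight */
    return 0;
}

/* Feeds `iterations` random names to `target` and returns how many runs
 * changed a canary byte, i.e. wrote past the end of the buffer. */
int fuzz_greet(greet_fn target, unsigned seed, int iterations)
{
    unsigned char name[64];
    unsigned char area[BUF_SIZE + GUARD];
    int failures = 0;

    srand(seed);                        /* fixed seed => reproducible run */
    for (int i = 0; i < iterations; i++) {
        size_t len = (size_t)(rand() % (int)sizeof name);   /* 0..63 bytes */
        for (size_t j = 0; j < len; j++) {
            name[j] = (unsigned char)(1 + rand() % 255);    /* any non-NUL byte */
        }
        name[len] = '\0';

        memset(area, CANARY, sizeof area);
        target((char *)area, BUF_SIZE, (const char *)name);

        for (size_t k = BUF_SIZE; k < sizeof area; k++) {
            if (area[k] != CANARY) {
                failures++;
                break;
            }
        }
    }
    return failures;
}
```

Running `fuzz_greet(greet_bounded, 1u, 2000)` reports no corrupted canaries, while the off-by-one target is caught whenever a random name happens to be exactly 24 bytes long. Because the seed is fixed, the same run can be repeated exactly.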

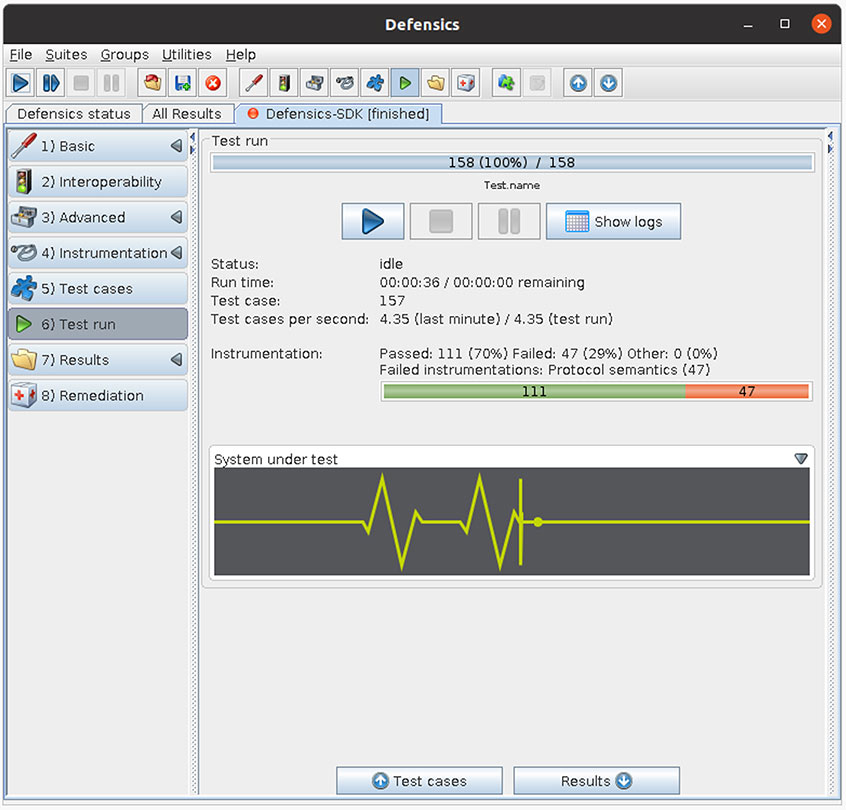

Synopsys Defensics® is a comprehensive solution for network protocol and file format fuzzing. We can use a fraction of its power to test our simple application. This is kind of like killing a mosquito by dropping 50 grand pianos on it, but it makes the point.

Based on a simple data model consisting of the name string, Defensics generates 158 test cases and can automatically deliver them to our target software. For “real” testing on network protocols or file formats, Defensics generates hundreds of thousands, or millions, of test cases.

Of the 158 test cases in this example, 47 produce failures in our application. Defensics keeps meticulous results and is able to provide detailed feedback about which test cases caused failures and how the target application responded. Developers can use Defensics to deliver the exact same test cases to reproduce the failures, which makes fixing bugs straightforward.
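Defensics's own generation logic is not shown here. The general idea of building test cases from a data model, and why it makes failures easy to reproduce, can be sketched for faceplant's single string field: a fixed, ordered table of anomalous values, so test case number N is always the same input. The table entries and function names below are illustrative assumptions, not Defensics's actual test cases.

```c
#include <stddef.h>
#include <string.h>

/* One anomalous value for a string field: either a literal, or `fill`
 * repeated `repeat` times (used for length boundaries). */
struct anomaly {
    const char *literal;   /* used when repeat == 0 */
    char fill;             /* used when repeat > 0 */
    size_t repeat;
};

/* Illustrative anomalies for faceplant's name field. The order is fixed,
 * so case N is the same input on every run. */
static const struct anomaly NAME_ANOMALIES[] = {
    { "", 0, 0 },                 /* empty string */
    { NULL, 'A', 31 },            /* lengths around the 32-byte buffer */
    { NULL, 'A', 32 },
    { NULL, 'A', 33 },
    { NULL, 'A', 1024 },          /* far too long */
    { "%p %p %p %p", 0, 0 },      /* format directives */
    { "%s%s%s%s%s", 0, 0 },
    { "\xff\xfe", 0, 0 },         /* bytes that never appear in valid UTF-8 */
    { "S\xc3\xb8rina", 0, 0 },    /* valid multibyte UTF-8 ("Sørina") */
};

size_t name_case_count(void)
{
    return sizeof NAME_ANOMALIES / sizeof NAME_ANOMALIES[0];
}

/* Writes test case `index` into out as a NUL-terminated string.
 * Returns 1 on success, 0 if index is out of range or out is too small. */
int make_name_case(size_t index, char *out, size_t out_size)
{
    if (index >= name_case_count()) {
        return 0;
    }
    const struct anomaly *a = &NAME_ANOMALIES[index];
    size_t len = a->repeat > 0 ? a->repeat : strlen(a->literal);
    if (len + 1 > out_size) {
        return 0;
    }
    if (a->repeat > 0) {
        memset(out, a->fill, len);
    } else {
        memcpy(out, a->literal, len);
    }
    out[len] = '\0';
    return 1;
}
```

A runner would loop `index` from 0 to `name_case_count() - 1`, feed each value to the target, and log the index of any case that fails; rerunning that single index reproduces the failure exactly, which is the replay workflow described above.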

Automate and integrate your security tools

A holistic approach embeds security in every part of software development. Security tools, such as static analysis, SCA, fuzzing, and more, help locate weaknesses. Tools should be automated so that they run at appropriate times during development, and results should be integrated into the issue tracker and other systems that the development team is already using.

When security is done right, it doesn’t feel like something extra. By automating and integrating security testing, development teams can address security issues just like they address any other issues. The end result is a stronger, more secure application that minimizes business risk for the vendor and for customers.
