2021-07-10 00:39:02 Author: blog.avast.com

New research from Avast and Czech Technical University applies automated feature extraction to machine learning to automate data processing pipelines

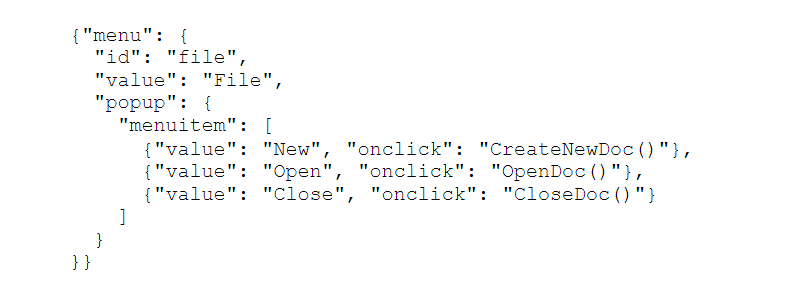

This post was written by the Avast researchers listed below.

One of the biggest unaddressed challenges in machine learning (ML) for security is how to process large-scale and dynamically created machine data. Machine data, meaning data generated by machines for machine processing, gets less attention in ML research than video, sound and text, yet it is as prevalent in our digital world, and as important, as dark matter is in the universe. In security, machine data is the primary source of information about attacks and other anomalous behavior on the internet. Even so, it’s notoriously hard to learn from it automatically, to discover unknown patterns, and to adapt the learning process to the scale, complexity, and ever-changing nature of machine data. In this post, the Avast AI Research Lab reports on our solution to the problem.

As indicated above, much of the effort in machine learning to date has centered around processing data related to human perception: speech, vision and text. These are closely tied to the ways that humans interact with computers and systems. But there is another, much bigger class of data that has the potential to revolutionize AI and ML products even further: machine data.

Machine data analytics defines a big part of the current cybersecurity problem. With the ever-growing deployment of AI-based automation by attackers, the volume of relevant machine data is growing so quickly that modern machine learning techniques are the only way we can provide cybersecurity on the internet today.

What is machine data? It includes things like log files, databases, internet messages and protocols, disassemblies, and experimental device outputs. We call it machine data because it is not primarily meant for humans to hear, see, or read.
Machine data shares some similarities with traditional data: speech is logged as a digital time series, vision is built around a sequence of matrices, and text follows the syntax and grammar of a language in the same way that machine data follows a protocol and grammar. There are, however, important differences. Machine data tends to evolve more rapidly than human-produced data because, for its intended use, it’s not bound by the constraints of human perception. The format and content of machine data can change as a consequence of any change in the computing environment, particularly due to automated changes in systems (software updates, connection of new devices, protocol changes due to network load-balancing, or even component malfunctions). Crucially, machine data can be arbitrarily complex and large, making it hard for humans to work with.

One of today’s most common forms of machine data used by the web and apps is JavaScript Object Notation (JSON). JSON records a hierarchy of nested objects in text form, in which each object is a set of “key”:value pairs. A value can be a “string”, a number, a literal (true, false, or null), an object, or an array (i.e. an ordered collection of values). JSON is meant to be easily interpretable (assuming expert knowledge of the particular data source), yet it is structured in the sense that it follows rules which allow a computer to parse messages and make use of their content.

So why is ML on machine data an important issue? Why do we at Avast care about it? Every month, our team handles more than 45 million new unique files, 25% of which are usually malicious. In September 2020, we blocked 1.7 billion Windows attacks, protecting close to 50 million users. To understand the growth of the attack landscape, consider Fig. 3: our team was handling closer to 200,000 files per day eight years ago; at the time of our IPO in 2018, it was around 500,000; and we’re now approaching 1.6 million new unique files per day. According to research by Akamai, 69% of web API traffic in 2018 was JSON. This is traffic that is frequently misused as a carrier of widespread attacks. We face an accelerating influx of new files, technologies, device types, and services, all of which can potentially be misused by adversaries to attack our customers and society as a whole. Data transferred over the internet either has JSON form or can be stored as JSON. File execution environments can even store logs as JSON. Thus, JSON data is an important source of information usable in threat detection.

The problem is that there’s a discrepancy between the pace of data volume growth and the capacity of human experts and engineered automated solutions to analyze incoming data for suspected malicious behavior. Our most precious research commodity in security is time, and more specifically, human time. We need tools that can process all sorts of machine data describing anything potentially related to attacks or other malicious behaviors that our users can encounter. We need tools that discover unknown suspicious patterns in such data and make predictions about the meaning of such patterns. Scalable, data-driven analytics is at the core of every cybersecurity vendor’s interest. Nevertheless, there are few (if any) ML techniques devoted to properly analyzing this type of data.

Fig. 3. New unique files handled by AVAST infrastructure daily

Of the two common approaches, treating JSON as text and manually defining feature extractors, the former can’t directly utilize either the inherent structure of JSON samples or the many kinds of information that have an unambiguous meaning in JSON (URLs, or the distinction between string and numeric values, e.g. a string containing the word ‘null’ versus a key with a missing value).
The latter approach depends on specialist human work for each individual problem addressed. This may be wasteful when the machine data schema evolves quickly. More importantly, it can lead to suboptimal results if the human specialist misses an opportunity to extract all the information that a neural model could utilize. This is not uncommon because, in many problem areas, it can be unclear how to transform the JSON into vector form.

These traditional ML approaches are insufficient in a world where attack vectors change so quickly, often with the intention of evading specific detectors and classifiers. To withstand sophisticated large-scale attack campaigns that are often fully automated, we need to minimize the dependence of the defense on human experts, who simply cannot scale enough to combat AI-assisted attacks. Automated feature extraction is the proper answer to this challenge, and it lets expert security analysts focus on the most sophisticated attacks, those created by human attackers.

We achieve automation for learning from machine data with an arbitrary schema through a four-step process that is itself automated: schema inference, data transformation, construction of a neural network, and model training.

First, we either start from a known schema or deduce a schema from available data. The JSON format does not imply types of variables, hence we need to estimate them together with the schema itself. We implemented a set of heuristics to distinguish categorical variables from integer variables, integers from real numbers, and generic strings from known specialized strings like paths or URLs. For robustness, we also handle undefined cases when, for example, the same key is observed to have an inconsistent type across multiple samples.

For each scalar (non-container) value type, we implement feature extractors that convert the value into a vector, the size of which then corresponds to the number of neurons in a dedicated neural layer.
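
To make the schema inference step more concrete, here is a minimal Python sketch of type-guessing heuristics of the kind described above. It is an illustration under our own assumptions, not the framework's actual code: the function names (`guess_leaf_type`, `infer_schema`), the type labels, the `max_categories` threshold and the URL/path tests are all hypothetical.

```python
import re
from urllib.parse import urlparse

# Hypothetical pattern: Windows drive paths, absolute and relative POSIX paths.
PATH_RE = re.compile(r"^(?:[A-Za-z]:[\\/]|/|\.{1,2}/)")


def _looks_like_url(text):
    try:
        parts = urlparse(text)
    except ValueError:
        return False
    return parts.scheme in {"http", "https", "ftp"} and bool(parts.netloc)


def _few_repeating(values, max_categories):
    # Few distinct values that actually repeat suggest an enum-like variable.
    distinct = len(set(values))
    return distinct <= max_categories and distinct < len(values)


def guess_leaf_type(values, max_categories=10):
    """Guess a coarse type for all values observed under one key."""
    present = [v for v in values if v is not None]
    if not present:
        return "unknown"
    kinds = {type(v) for v in present}
    if kinds == {bool}:
        return "categorical"
    if kinds <= {int, float}:
        if any(isinstance(v, float) and not v.is_integer() for v in present):
            return "real"
        return "categorical" if _few_repeating(present, max_categories) else "integer"
    if kinds == {str}:
        if all(_looks_like_url(v) for v in present):
            return "url"
        if all(PATH_RE.match(v) for v in present):
            return "path"
        return "categorical" if _few_repeating(present, max_categories) else "string"
    return "mixed"  # the same key was seen with inconsistent types


def infer_schema(samples):
    """Recursively merge decoded JSON samples into one nested schema."""
    present = [s for s in samples if s is not None]
    if present and all(isinstance(s, dict) for s in present):
        keys = sorted({k for s in present for k in s})
        return {"object": {k: infer_schema([s[k] for s in present if k in s])
                           for k in keys}}
    if present and all(isinstance(s, list) for s in present):
        return {"array": infer_schema([item for s in present for item in s])}
    if any(isinstance(s, (dict, list)) for s in present):
        return "mixed"
    return guess_leaf_type(present)
```

Calling `infer_schema` on a list of decoded samples (for instance, the output of `json.loads` on each log record) returns a nested dictionary that mirrors the JSON tree, with a type label at every leaf. Keys observed with inconsistent types are labeled `"mixed"` rather than rejected, in the spirit of the robustness handling mentioned above.
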
We have a set of default extractors, which can be overridden by more specific extractors whenever such become available. Mapping numerical and categorical values to vectors is trivial. Generic strings get encoded one-hot or as n-grams. Specific strings, like paths or URLs, first get decomposed into individual terms. The remaining problem is the encoding of arrays and objects.

We devise the architecture of our neural model automatically based on the JSON schema. Fig. 2 illustrates that we effectively mirror the schema in the neural architecture. Individual key:value pairs in the schema get mapped to neural layers implied by the default feature extractor suitable for the estimated value type. For objects and arrays, the solution is to add product and pooling layers to the neural network.

Fig. 2. Transforming JSON structure into neural architecture

A product layer simply concatenates the vectors representing each of the object’s members (objects, arrays, or values). A pooling layer extracts information from arbitrarily large arrays. Pooling can be done through a single function or a collection of functions that extract information from the series of objects in an array. The overall properties of the neural model largely depend on how well the pooling function succeeds in capturing the properties of individual array members. At this point, there is a trade-off: more complete information (e.g. a complex approximation of the distribution, sequential information, or entropy) leads to slower learning, yet simpler pooling may lead to underutilization of the structural information contained in the array. Interestingly, on most security machine data, our models perform well with a trivial pair of pooling functions that extract only the average and maximum of values in the array. We can even formally prove that, in theory, more complex pooling functions do not increase the expressivity of the model.

In Fig. 2 this is illustrated as follows: an arbitrary number of vectors C enter the pooling layer, wherein multiple pooling functions (func1 ~ averaging of values, func2 ~ maximum of values) collect information from them. The results of the pooling functions are then concatenated to form vector B. Vectors A and B are eventually concatenated to form a neural layer representing the root of the JSON sample.

In its default form, our framework does not implement any explicit optimization of the neural network depth or width with respect to expressivity on concrete data; i.e., the numbers of neurons are set to default values in each feature extractor and in each pooling and product layer. We obtained the defaults from testing on an extensive body of JSON machine data of various types in a security context. For default use, we identified no benefit from adding more hidden layers. Layer sizes can be adjusted if needed, but we obtained good results with the default and relatively modest sizing of just dozens of neurons per represented tree node. Note that in the default form, the neural network depth is proportional to the depth of the estimated schema (up to a factor of three due to pooling and product layers).

The neural network that we obtain takes the form of a Multiple Instance Learning model, in which a JSON is a hierarchical bag with multiple instance layers. Such a hierarchical extension is referred to as Hierarchical Multiple Instance Learning (HMIL). In the described default form, our neural model treats collections of instances as sets and learns only basic statistics over the sets. To better capture the structural information in collections of instances, the model can be extended by implementing stronger pooling layers.

The patent application covering this technology (“Automated Malware Classification with Human Readable Explanation”) was filed in the United States on January 27, 2021, under application number 17/159,909.
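
The way product and pooling layers mirror a schema can be sketched in a few lines of plain Python. The sketch below is only a structural illustration under our own assumptions: it omits the trainable neural layers that the real model places at each tree node, and the schema format, the names `embed`, `width` and `embed_string`, and the `NGRAM_BUCKETS` size are hypothetical. Strings are encoded as a hashed character n-gram histogram, which is one simple stand-in for the n-gram extractor mentioned earlier.

```python
import zlib

NGRAM_BUCKETS = 8  # hypothetical size of the hashed n-gram histogram


def embed_string(text, n=3):
    """Hashed histogram of character n-grams (stand-in string extractor)."""
    vec = [0.0] * NGRAM_BUCKETS
    padded = f"^{text}$"
    for i in range(max(1, len(padded) - n + 1)):
        gram = padded[i:i + n]
        vec[zlib.crc32(gram.encode("utf-8")) % NGRAM_BUCKETS] += 1.0
    return vec


def width(schema):
    """Size of the vector produced for a schema node."""
    if schema == "number":
        return 1
    if schema == "string":
        return NGRAM_BUCKETS
    if "array" in schema:
        return 2 * width(schema["array"])  # mean and max, concatenated
    return sum(width(sub) for sub in schema["object"].values())


def embed(value, schema):
    """Map a decoded JSON value to a fixed-size vector by following the schema."""
    if schema == "number":
        return [float(value)] if isinstance(value, (int, float)) else [0.0]
    if schema == "string":
        return embed_string(value) if isinstance(value, str) else [0.0] * NGRAM_BUCKETS
    if "array" in schema:
        # Pooling layer: any number of member vectors in, one vector out.
        inner = schema["array"]
        items = [embed(v, inner) for v in value] if isinstance(value, list) else []
        if not items:
            return [0.0] * width(schema)
        columns = list(zip(*items))
        mean = [sum(col) / len(col) for col in columns]
        peak = [max(col) for col in columns]
        return mean + peak
    # Product layer: concatenate the vectors of the object's members.
    members = value if isinstance(value, dict) else {}
    out = []
    for key, sub in schema["object"].items():
        out.extend(embed(members.get(key), sub))
    return out
```

Because mean and max ignore the order and number of array members, two samples whose arrays hold the same values in a different order map to the same vector, and missing keys or empty arrays simply yield zeros of the expected width. This is the "collections as sets" behavior described above, and it is also where a stronger pooling function would be plugged in.
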

The constructed neural network can be trained in a standard way using backpropagation and stochastic gradient descent. We have shown that, in this case, a variant of the universal approximation theorem holds even with the simplest pooling layers. Note that we have described the automation for JSON data, but the same can be done for other analogous formats, including XML and Protobuf.

We built a system for learning from (nearly) arbitrary JSON data that straightforwardly yields good baseline prediction performance with the default set of existing feature extractors. Any improvement on top of this baseline that comes from adding more specific extractors to the system then becomes effective across different learning tasks. The system provides robustness against changes in data format, structure, and content, which always happen over time: sandboxes get upgrades, new logging technology gets employed, and with the increasing volume of analyzed samples, new value types get observed in logs. Our learning framework accommodates all this without the explicit need for a codebase upgrade.

We use the system to process sandbox logs (from various sandboxes), IoT and network telemetry, behavioral logs, static file metadata, and file disassembly, using the same default codebase in multiple use cases, with malware detection being the primary one. Whenever we identify underrepresented information when applying the system to one of the datasets, we improve the extraction logic, which then benefits all use cases.

The presented approach has formed a basis for AI explainability in our automated pipelines, which is very important for man-machine interaction in highly complex use cases that require subject matter expertise. Cybersecurity is undoubtedly one such use case. Stay tuned for a separate post in which we’ll cover the problem of explainability in detail.
The specific tools and scripts we use to learn from JSON files have been open sourced on GitHub in cooperation with Czech Technical University. A preprint of a more technical description of the framework has been published on arXiv. It is our hope that these tools can help practitioners across industries, and that any improvements coming from potential users, especially in the areas of specific feature extractors and pooling functions, will increase the generality of the toolset for all.

Petr Somol, Avast Director AI Research

Tomáš Pevný, Avast Principal AI Scientist

Viliam Lisý, Avast Principal AI Scientist

Branislav Bošanský, Avast Principal AI Scientist

Andrew B. Gardner, Avast VP Research & AI

Michal Pěchouček, Avast CTO

Image credit: Weygaert, R. et al. (2011). Alpha, Betti and the Megaparsec Universe: On the Topology of the Cosmic Web. Transactions on Computational Science, 14.

Fig. 1. A simple JSON example of machine data encoding a menu structure of an application

That being said, common machine learning techniques can be applied to machine data — a JSON can be treated as text and modeled using text models (such as RNNs, Transformers, etc.). Alternatively, explicit transformation into vector form can be done by manual definition of feature extractors on the level of individual key:value entities, or on the level of JSON tree branches. Once an expert defines feature extractors for the given problem, any standard ML technique can be applied.
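
As a rough illustration of the manual route, the following Python sketch hand-codes a feature extractor for one imagined sandbox-log schema. The field names (`exit_code`, `files_written`, `dns_queries`) and the chosen features are purely hypothetical and do not come from any real Avast data source.

```python
def extract_features(record):
    """Hand-written extractor for one hypothetical sandbox-log schema."""
    files = record.get("files_written", [])
    domains = record.get("dns_queries", [])
    return [
        float(record.get("exit_code", 0)),                            # process exit code
        float(len(files)),                                            # number of files written
        float(sum(1 for f in files if f.lower().endswith(".exe"))),   # executables dropped
        float(len(domains)),                                          # number of DNS lookups
        float(max((len(d) for d in domains), default=0)),             # longest queried domain
    ]
```

The resulting fixed-length vector can be fed to any standard model. The weakness is visible in the code itself: if a logging upgrade renames `files_written`, the extractor silently returns zeros for those features until an expert notices and rewrites it, and any information outside the five chosen features is never seen by the model.
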

Schema inference

Data transformation

Construct a neural network

Train the model

Appendix
