DPReview just published *Apple still hasn’t made a truly “Pro” M1 Mac – so what’s the holdup?* Following on the good performance and awesome power efficiency of the Apple M1, there’s a hungry background rumble in Mac-land along the lines of “Since the M1 is an entry-level chip, the next CPU is gonna blow everyone’s mind!” But it’s been eight months since the M1 shipped and we haven’t heard from Apple. I have a good guess at what’s going on: It’s proving really hard to make a CPU (or SoC) that’s perceptibly faster than the M1. Here’s why.

Attribution: Henriok, CC0, via Wikimedia Commons

But first, does it matter? Obviously, people who (like me) spend a lot of time in compute-intensive programs like Lightroom Classic want those apps to be faster. To make it concrete: I’d cheerfully upgrade my 2019 16" MBP if there were an M1 version that was noticeably better. But there isn’t.

But let’s be clear: The M1 is plenty fast enough for the vast majority of what people do with computers: Email, video-watching, document-writing, slideshow-authoring, music-playing, and so on. And it’s quiet and doesn’t use much juice. Yay. But…

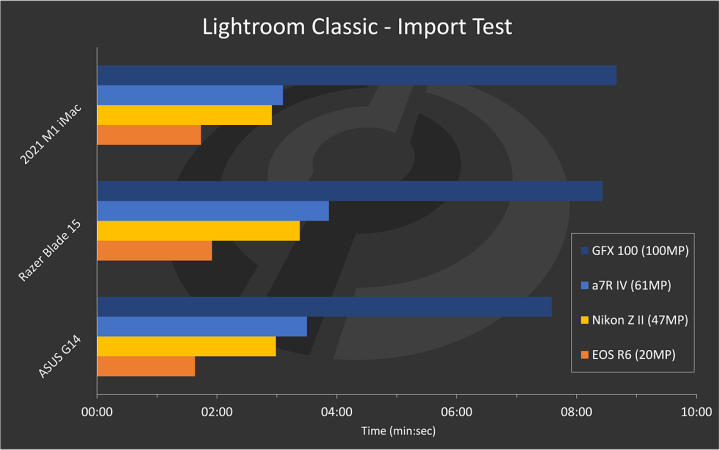

The M1 is already fast! · Check out this benchmark in the DPReview piece.

If you’re interested in this stuff at all, you should really go read the article. There are lots more good graphs; also, the config and (especially) prices of the systems they benchmarked against are interesting.

I sorely miss a benchmark I saw in some other publication but can’t find now, which measured interactive performance when you load a series of photos on-screen. These import & export measurements are useful, but frankly, when I do that kind of thing I go read email or get a coffee while it’s happening, so it doesn’t really hold me up as such.

To date, I haven’t heard anyone saying Lightroom is significantly snappier on an M1 than on a recent Intel MBP. I’d be happy to be corrected.

Anyhow, this graph shows the M1 holding its own well against some pretty elite Intel and AMD silicon. (On top of which, it’ll be burning way fewer watts.) (But I don’t care that much when I’m at my desktop, which I usually am when doing media work.) So, right away, it looks like the M1 already sets a pretty high bar; a significant improvement won’t be cheap or easy.

If you look a little closer, the M1’s clock speed maxes out at 3.2GHz, which is respectable but nothing special. In the benchmark above, the Intel CPU is specced to run at up to 5.1GHz and the AMD at up to 4.6GHz. It’s interesting that Apple is getting competitive performance with fewer (specced) compute cycles.
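Here’s the back-of-envelope arithmetic behind “fewer cycles,” under a deliberately crude model: performance is roughly work-per-cycle (IPC) times clock rate, the chips deliver about the same benchmark result, and each runs at its specced max clock. That last assumption isn’t a given, and the model ignores core and thread counts entirely. `required_ipc_ratio` is a hypothetical helper name.

```python
def required_ipc_ratio(rival_ghz: float, m1_ghz: float) -> float:
    """How much more work per cycle the M1 must do to match a rival.

    Crude model (an assumption): performance ~= IPC * clock rate,
    equal benchmark results, and both chips sustain their specced max clocks.
    """
    return rival_ghz / m1_ghz


M1_GHZ = 3.2  # specced max clock from the benchmark above
for name, ghz in [("Intel", 5.1), ("AMD", 4.6)]:
    ratio = required_ipc_ratio(ghz, M1_GHZ)
    print(f"{name}: M1 needs ~{ratio:.2f}x the work per cycle")
```

By this rough measure the M1 would be doing something like 1.4 to 1.6 times as much per cycle, which is exactly why raising its clock looks tempting.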

But there’s plenty more digging to do there; all these clock rates are marked “Turbo” or “Boost” and thus mean “The speed the chip is guaranteed to never go faster than”. The actual number of delivered cycles you get when wrangling a big RAW camera image is what matters. It’s not crazy to assume that’s at least related to the specced max clock, but also not obviously true.

So, one obvious path Apple can take toward a snappier-feeling experience is upping the clock rate. Which it’s fair to assume they’re working on. But that’s a steep hill to climb; it’s cost Intel and AMD billions upon billions of investment to get those clock rates up.

Obviously, the M1 is evidence that Apple has an elite silicon design team. They’ve proved they can squeeze more compute out of fewer cycles while burning fewer watts. This does not imply that they’ll be able to squeeze more cycles out of today’s silicon processes. I’m not saying they can’t. But it’s not surprising that, eight months post-M1, they haven’t announced anything.

But threads! · It’s been a long time since Moore’s law meant faster cycle times; most of the transistors Moore gives you go into more cores per chip and more threads per core. Also, memory controllers and I/O.

In the benchmark above, the M1 has something like half the effective threads offered by the Intel & AMD competition. So, is it surprising that the M1 still competes so well?

Nope. Here’s the dirty secret: Making computer programs run faster by spreading the work around multiple compute units is hard. In fact, the article you are reading will be the seventy-sixth on this blog tagged Technology/Concurrency. It’s a subject I’ve put a lot of work into, because it’s hard in interesting ways.

I guarantee that the Lightroom engineers at Adobe have worked their asses off trying to use the threads on modern CPUs to make the program feel faster. I can personally testify that over the years I’ve spent with Lightroom, the speedups have been, um, modest, while the slowdowns due to camera files getting bigger and photo-processing tools getting more sophisticated have been, um, not modest.

A lot of times when you’re waiting, irritated, for a computer to do something, you’re blocked on a single thread’s progress. So GHz really can matter.

Here’s another fact that matters. As programmers try to spread work around multiple cores, the return you get from each one added tends to fall off. Discouragingly steeply. So, I have no trouble believing that, at the moment, the fact that the M1 doesn’t have as many threads just doesn’t matter for interactive media-wrangling software.
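One textbook model of that falloff is Amdahl’s law: if only a fraction p of a job can be spread across n cores, the best-case speedup is 1 / ((1 - p) + p/n). A minimal sketch follows; the 75% parallel fraction is made up for illustration, not measured from Lightroom or anything else.

```python
def amdahl_speedup(parallel_fraction: float, cores: int) -> float:
    """Amdahl's law: best-case overall speedup when only part of the work parallelizes.

    parallel_fraction is the share of runtime (0..1) that can be spread across
    cores; the remainder runs on a single thread no matter how many cores exist.
    """
    if not 0.0 <= parallel_fraction <= 1.0:
        raise ValueError("parallel_fraction must be between 0 and 1")
    if cores < 1:
        raise ValueError("cores must be at least 1")
    serial = 1.0 - parallel_fraction
    return 1.0 / (serial + parallel_fraction / cores)


# Hypothetical workload: 75% parallelizes, 25% is stuck on one thread.
for n in (1, 2, 4, 8, 16, 32):
    print(f"{n:2d} cores -> {amdahl_speedup(0.75, n):.2f}x")
```

In this made-up case, doubling from 16 to 32 cores buys less than 9% more speed, and no number of cores gets past 4x, because the serial quarter never shrinks. That serial part is the single thread you’re sitting there waiting on.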

Which means that an M2 distinguished by having lots more threads probably wouldn’t make people very happy.

But memory! · Yep, one problem with the M1 is that it supports a mere 16G of RAM; the competitors in the benchmark both had 32. So when the M2 comes along and supports 64G, it’ll wipe the floor with those pussies, right?

Um, not really. Let’s just pop up the performance monitor on my 16" MBP here, currently running Signal, Element, Chrome, Safari, Microsoft Edge, GoLand, IntelliJ, Emacs, and Word. Look at that, only 20 of my 32G are being used. But wait, Lightroom isn’t running! I can fix that, hold on a second. Now it’s up to 21.5G.

The fact that I have 10G+ of RAM showing free means I’m under zero memory pressure. If this were a 16G box, some of those programs I’m not using just now would get squeezed out of memory and Lightroom would get what it needs.

OK, yes, I can max out this machine’s memory, and have. But for most people, the returns on memory past 16G just aren’t gonna be that dramatic in general, and specifically, probably won’t make your media operations feel faster. I speculate that there are 4K video tasks like color grading where you might notice the effect.

I’m totally sure that if supporting 32G would take Apple Silicon to the next level, they’d have shipped their next chip by now. But it wouldn’t, so they haven’t.

Before we leave the subject of memory behind, there’s the issue of memory controllers and caching architectures and so on. Having lots of memory doesn’t help if you can’t feed its contents to your CPU fast enough. Since CPUs run a lot faster than memory — really a lot faster — this is a significant challenge. If Apple could use their silicon talents to build a memory-access subsystem with better throughput and latency than the competition, I’m pretty sure you’d notice the effects and it wouldn’t be subtle. Maybe they can. But it’s not surprising that they haven’t yet.
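A standard way to see why caching matters so much is the average memory access time (AMAT) formula for one cache level: AMAT = hit time + miss rate × miss penalty. The sketch below uses illustrative numbers only (1 ns for a cache hit, 100 ns for a trip to DRAM), not measurements of the M1 or any other chip; the function name is hypothetical.

```python
def avg_memory_access_ns(hit_ns: float, miss_rate: float, miss_penalty_ns: float) -> float:
    """Average memory access time for a single cache level.

    AMAT = hit time + miss rate * miss penalty
    """
    return hit_ns + miss_rate * miss_penalty_ns


# Illustrative numbers only: 1 ns cache hit, 100 ns to go out to DRAM.
for miss_rate in (0.01, 0.02, 0.05):
    amat = avg_memory_access_ns(1.0, miss_rate, 100.0)
    print(f"miss rate {miss_rate:.0%}: {amat:.1f} ns average")
```

In this toy model, going from a 1% to a 2% miss rate makes the average memory access 50% more expensive. That’s one way to see why a memory subsystem with better latency and hit rates could produce a speedup that isn’t subtle.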

But I/O! · Where does the stuff in memory come from? From your disks, which these days are totally not going to be anything that spins; they’re going to be memory, only slower. It feels to me like storage performance has progressed faster than CPU or memory performance in recent years. This matters. Once again, if Apple could figure out how to give the path to and from storage significantly lower latency and higher throughput, you’d notice all right.

And to combine themes, using multiple cores to access storage in parallel can be a fruitful source of performance improvements. But, once again, it’s hard. And in the specific case of media wrangling, it’s probably more Adobe’s problem than Apple’s.
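For a taste of the easy end of parallel storage access, here’s a minimal Python sketch that reads a batch of files with a thread pool so several reads are in flight at once. It assumes a folder of image files; SHA-256 hashing stands in for real media processing, and the function names are hypothetical. It is not how Lightroom or macOS actually do it.

```python
import hashlib
from concurrent.futures import ThreadPoolExecutor
from pathlib import Path


def digest_file(path: Path) -> tuple[str, str]:
    """Read one file in 1 MiB chunks; return (path, SHA-256 hex digest)."""
    h = hashlib.sha256()
    with path.open("rb") as f:
        for chunk in iter(lambda: f.read(1 << 20), b""):
            h.update(chunk)
    return str(path), h.hexdigest()


def digest_all(paths: list[Path], workers: int = 8) -> dict[str, str]:
    """Read many files concurrently, keeping several storage requests in flight.

    Threads work here because blocking file reads release Python's GIL
    while they wait on the disk.
    """
    with ThreadPoolExecutor(max_workers=workers) as pool:
        return dict(pool.map(digest_file, paths))
```

Called as `digest_all(sorted(Path("photos").glob("*.dng")))` (the folder name is hypothetical), it returns a path-to-digest dict. Whether eight workers beat one depends on the drive and the OS; fast SSDs often deliver more throughput with several requests queued, but measure before believing it. And this is the easy case: the files are independent, which real photo pipelines mostly aren’t.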

GPUs · Everybody knows that GPUs are faster than CPUs for massively parallel number-crunching. So wouldn’t better GPUs be a good way to make media apps faster?

The idea isn’t crazy. The last few releases of Lightroom have claimed to make more use of the GPU, but I haven’t really felt the speedup. Perhaps that’s because the GPU on this Mac is a (yawn) 8GB AMD Radeon Pro 5500M?

Anyhow, it’d be really surprising if Apple managed to get ahead of GPU makers like Nvidia. Now, at this point, based on the M1, we should expect surprises from Apple. But I’m not even sure that’d be their best silicon bet.

Summarizing · If Apple wanted to build the M2 of my dreams, a faster clock rate might help. A better memory subsystem almost certainly would. Seriously better I/O, too. And a breakthrough in concurrent-software tech. Things that probably wouldn’t help: More threads, more memory, better GPU.

Will there be an awesome M2? · Where by “awesome” I mean “Tim thinks Lightroom Classic feels a lot faster.” Honestly: I don’t know. I suspect there are a whole lot of Mac geeks out there who just assume that this is going to happen based on how great the M1 is. If you’ve read this far you’ll know that I’m less optimistic.

But, who knows? Maybe Apple can find the right combination of clock speedup and memory magic and concurrency voodoo to make it happen. Best of luck to ’em.

They’ll need it.
