Thanks to Mikko Laakso and Risto Wichman, researchers at the Department of Signal Processing and Acoustics at Aalto University, Finland, for submitting news that their recent paper, titled "Near-field localization using machine learning: an empirical study", is available on IEEE Xplore. (To access the paper you need an IEEE subscription, but we see no harm in letting individuals know that they can search for the DOI on sci-hub to get it for free.)

The work described in the paper uses 7 RTL-SDR dongles with their clocks connected together. Combined with noise source calibration, this results in a coherent SDR. The authors then train a deep neural network (DNN) to perform near-field localization using an antenna array. If you are interested, we have our own 5-channel coherent SDR called "KrakenSDR", which will soon be released for crowdfunding. The abstract reads:

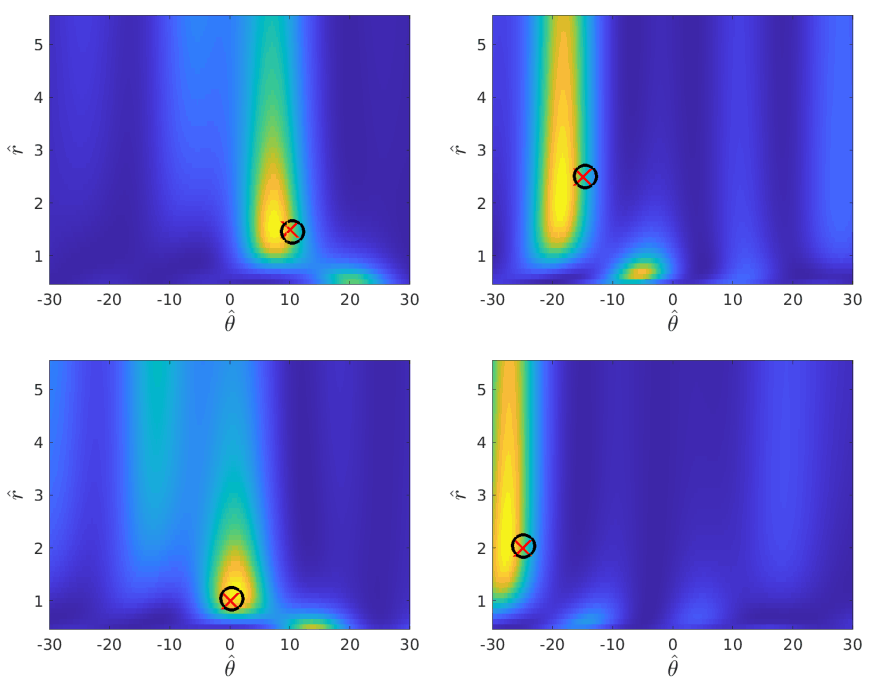

Estimation methods for passive near-field localization have been studied to an appreciable extent in signal processing research. Such localization methods find use in various applications, for instance in medical imaging. However, methods based on the standard near-field signal model can be inaccurate in real-world applications, due to deficiencies of the model itself and hardware imperfections. It is expected that deep neural network (DNN) based estimation methods trained on the nonideal sensor array signals could outperform the model-driven alternatives. In this work, a DNN based estimator is trained and validated on a set of real world measured data. The series of measurements was conducted with an inexpensive custom built multichannel software-defined radio (SDR) receiver, which makes the nonidealities more prominent. The results show that a DNN based localization estimator clearly outperforms the compared model-driven method.

The paper notes that the code used in the experiments is open source and available on GitHub.
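
For readers unfamiliar with noise source calibration, the basic idea can be sketched roughly as follows. This is our own minimal illustration in Python with NumPy on synthetic data, not the code from the paper. The function names `estimate_phase_offsets` and `apply_calibration` are hypothetical, and the sketch assumes the channels are already aligned in time, so only a constant phase offset per channel needs correcting. Real multi-dongle setups typically also need sample-level delay alignment, which is left out here.

```python
import numpy as np

def estimate_phase_offsets(noise_iq):
    """Estimate each channel's phase offset relative to channel 0.

    noise_iq: complex array of shape (channels, samples), recorded while
    every channel is fed from the same noise source.
    """
    ref = noise_iq[0]
    # Zero-lag cross-correlation of every channel with the reference channel.
    xcorr = noise_iq @ np.conj(ref)
    return np.angle(xcorr)

def apply_calibration(iq, offsets):
    """Rotate each channel by the negative of its estimated phase offset."""
    return iq * np.exp(-1j * offsets)[:, None]

# Synthetic demo: 7 channels see the same noise with unknown phase offsets.
rng = np.random.default_rng(0)
n_channels, n_samples = 7, 4096
noise = rng.standard_normal(n_samples) + 1j * rng.standard_normal(n_samples)
true_offsets = rng.uniform(-np.pi, np.pi, n_channels)
true_offsets[0] = 0.0  # channel 0 is the phase reference
received = noise[None, :] * np.exp(1j * true_offsets)[:, None]
# Small independent receiver noise on each channel (assumed level).
received += 0.05 * (rng.standard_normal(received.shape)
                    + 1j * rng.standard_normal(received.shape))

est = estimate_phase_offsets(received)
calibrated = apply_calibration(received, est)
residual = estimate_phase_offsets(calibrated)
print("max residual phase (rad):", np.max(np.abs(residual)))
```

Once the offsets are estimated, the noise source is switched off and the same correction is applied to the signals received from the antennas, so the channels behave as one phase-coherent receiver.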

If you're interested, we also posted about Laakso's previous work on beamforming with a phase-coherent 21-channel RTL-SDR array back in February.