With this approach, we've introduced an additional layer of threat analysis explainability, which helps increase the protection that we provide to our users.

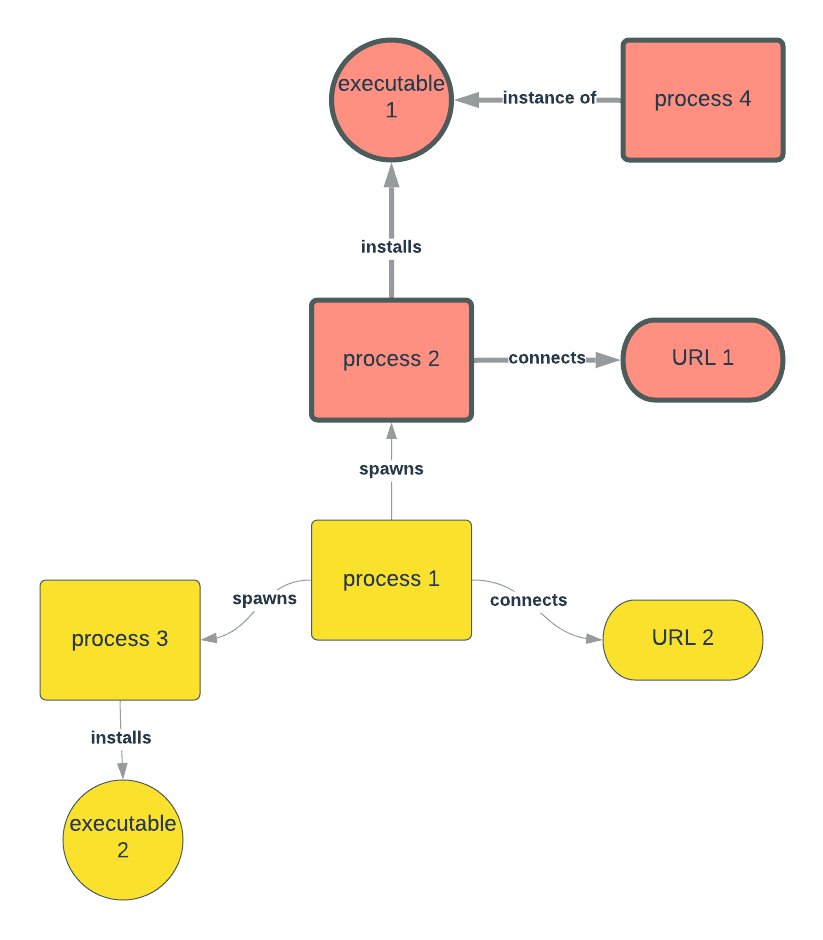

As defenders, one of our main challenges in cybersecurity detection is determining whether a multitude of observations (network communications, setting changes, website downloads, and so on) represent malicious artifacts leading to fraud, ransomware, and other attacks impacting our customers. Bad actors continuously work on methods to hide those artifacts, which are also known as the tactics, techniques, and procedures (TTPs) used while attacking our customers. If they succeed in hiding their TTPs, they are more likely to achieve their objective. The result is an arms race of sorts, in which bad actors develop ever more sophisticated techniques to hide and defenders look for new ways to detect them.

At Avast, we continuously invest in new ways to detect malicious activities, even when they employ hidden techniques. One such technique is generally known as behavioral threat analysis, and this post outlines some of the key aspects of how Avast performs it. Behavioral threat analytics enables the detection of threats that would otherwise fall under the radar of techniques focused on static analysis of individual elements such as processes, network connections, or executables.

A key element underpinning the behavioral approach is a graph-based representation of the dynamics unfolding on the client (such as a PC or mobile phone). Each event, such as the execution of a file or a network communication, is represented in the graph as a node, and edges represent the relationships between events. For example, an executed file creates a process, which can then download some data from a particular IP or hostname; that data is subsequently executed, creating another process, and so on, as illustrated by the figure below. The graph thus represents a snapshot of the behavior observable during a particular period of time.

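
To make the graph representation concrete, here is a minimal Python sketch of the download-and-execute chain described above. It is an illustration under stated assumptions, not Avast's actual data model: the `BehaviorGraph` class, the relation names (`executed_as`, `connects_to`, `downloads`), and the file and host names are all hypothetical.

```python
from dataclasses import dataclass, field


@dataclass
class BehaviorGraph:
    """Snapshot of client activity: nodes are events, edges are relationships."""
    nodes: dict = field(default_factory=dict)  # node id -> attribute dict
    edges: list = field(default_factory=list)  # (source id, relation, target id)

    def add_node(self, node_id, kind, **attrs):
        self.nodes[node_id] = {"kind": kind, **attrs}

    def add_edge(self, src, relation, dst):
        if src not in self.nodes or dst not in self.nodes:
            raise KeyError("both endpoints must be added before the edge")
        self.edges.append((src, relation, dst))

    def successors(self, node_id):
        """Return (relation, target) pairs for edges leaving node_id."""
        return [(rel, dst) for src, rel, dst in self.edges if src == node_id]


def build_example_graph():
    """Executed file -> process -> download from a host -> second process."""
    g = BehaviorGraph()
    # All names below are made up for illustration.
    g.add_node("file_1", "file", name="dropper.exe")
    g.add_node("proc_1", "process", name="dropper.exe")
    g.add_node("host_1", "network", hostname="example.test")
    g.add_node("file_2", "file", name="payload.exe")
    g.add_node("proc_2", "process", name="payload.exe")
    g.add_edge("file_1", "executed_as", "proc_1")
    g.add_edge("proc_1", "connects_to", "host_1")
    g.add_edge("proc_1", "downloads", "file_2")
    g.add_edge("file_2", "executed_as", "proc_2")
    return g
```

Calling `build_example_graph().successors("proc_1")` lists what the first process did next. A production graph would carry far more attributes per node and timestamps on the edges.
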
Malware authors often employ so-called “living off the land” strategies, masking the attack behind otherwise benign tools (such as cmd.exe, Windows’ default command-line interpreter). Analyzing the tools themselves would therefore yield no detection; we need to understand how the tools are used during the phase of the attack in which the captured behavioral events form a threatening sequence. That’s because each individual event may, in isolation, appear harmless even though together they pose a threat.

This is exactly why the Avast AI Research Lab invests in developing and deploying novel techniques for representing, analyzing, and detecting malicious behavior. Thanks to Avast’s gigascale sensor network, we have the right data to calibrate machine learning models that can identify the behavioral fingerprints of malware. A fingerprint is a relatively small graph pattern (typically up to 10 nodes) that captures the malicious activity without the noise of benign behavior that typically dominates the data. Since the data consists of millions of graphs per day, each with thousands of nodes (events) and edges (relationships), this task can resemble the search for a needle in a haystack. Fortunately, we can leverage our threat intelligence systems to filter, enrich, and label the data into a shape suitable for training graph neural networks (GNNs), which help us extract these fingerprints in an efficient and scalable way.

Once a fingerprint of malicious behavior is generated, we can monitor programs on protected users' machines for behavior that matches the fingerprint either exactly or approximately. If the behavior matches a fingerprint exactly, we can stop it before it causes any harm; if it matches approximately, we can decide whether to stop it according to the match rate.
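
The exact-versus-approximate decision can be sketched as follows. This is a deliberately simplified illustration, not the matching procedure used in production: it treats a fingerprint as a set of typed edges and measures the fraction found in the observed behavior, whereas real graph matching would also have to respect how the edges connect. The edge triples, the `threshold` value, and the function names are assumptions.

```python
def match_rate(fingerprint_edges, observed_edges):
    """Fraction of fingerprint edges that also appear in the observed behavior."""
    fingerprint = set(fingerprint_edges)
    if not fingerprint:
        raise ValueError("fingerprint must contain at least one edge")
    return len(fingerprint & set(observed_edges)) / len(fingerprint)


def decide(fingerprint_edges, observed_edges, threshold=0.75):
    """Return (action, rate); the threshold is a hypothetical tuning knob."""
    rate = match_rate(fingerprint_edges, observed_edges)
    if rate == 1.0:
        return "block_exact", rate
    if rate >= threshold:
        return "block_approximate", rate
    return "allow", rate


# Hypothetical fingerprint: (source kind, relation, target kind) triples.
FINGERPRINT = [
    ("process", "downloads", "executable"),
    ("executable", "executed_as", "process"),
    ("process", "connects_to", "url"),
    ("process", "modifies", "registry"),
]
```

With this fingerprint, an observation containing three of the four edges yields a rate of 0.75 and is blocked as an approximate match at the default threshold, while one containing a single edge is allowed.
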
In both cases, the newly detected behaviors can be submitted back to our cloud to improve our insight into malicious behaviors and to improve the fingerprints.

GNNs have recently gained increasing attention in research as well as within the cybersecurity industry, and they’ve proven useful in many areas, from social network analysis to cheminformatics. The key benefit of graph neural networks is that they can recursively process nodes by leveraging the relationships between them, thus naturally capturing both node attributes and graph structure. In our case, each node is characterized by hundreds of features, such as registry value modifications, a string representation of the process name, modification of memory allocated by other processes, or connections established to certain services on the internet. Similarly, edges are labelled by the type of relationship they represent, for example the spawning of a process or a network connection.

During training, the model learns a low-rank representation (i.e., an embedding) of the nodes that is useful for predicting various properties of the behavior represented by the graph. In other words, we cast the problem as multi-task classification and train the model end-to-end, from the graphs to the multi-head outputs representing the threat intelligence we aim to capture. A multi-head output is necessary to maximize the value obtained by the system and to properly represent the information that our GNN extracts from the hundreds of millions of behaviors it observes. Some of the heads include:

In addition, we research novel techniques for explaining these complex models in order to better understand the strategies employed by different malware strains and, in turn, to distill robust decision rules that can be easily used to protect our clients while respecting their privacy.

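
To illustrate how a GNN combines node attributes with graph structure and feeds several output heads, here is a minimal pure-Python sketch. It assumes a mean-aggregation message-passing layer with a ReLU, a mean-pooling readout, and sigmoid heads. These are common choices, not a description of Avast's model. The head names (`head_a`, `head_b`) are placeholders, and the weights would normally be learned rather than supplied by hand.

```python
import math


def matvec(matrix, vector):
    """Multiply a matrix (list of rows) by a vector."""
    return [sum(w * x for w, x in zip(row, vector)) for row in matrix]


def message_passing(features, edges, weight_self, weight_neigh):
    """One round: combine each node's features with the mean of its in-neighbors'."""
    neighbors = {node: [] for node in features}
    for src, dst in edges:
        neighbors[dst].append(src)  # messages flow along the edge direction
    updated = {}
    for node, h in features.items():
        incoming = [features[n] for n in neighbors[node]]
        if incoming:
            mean = [sum(vals) / len(incoming) for vals in zip(*incoming)]
        else:
            mean = [0.0] * len(h)
        combined = [a + b for a, b in
                    zip(matvec(weight_self, h), matvec(weight_neigh, mean))]
        updated[node] = [max(0.0, v) for v in combined]  # ReLU
    return updated


def readout(features):
    """Mean-pool node embeddings into a single graph embedding."""
    vectors = list(features.values())
    return [sum(vals) / len(vectors) for vals in zip(*vectors)]


def predict(features, edges, params, rounds=2):
    """Run message passing, pool, and score every head with a sigmoid."""
    h = features
    for _ in range(rounds):
        h = message_passing(h, edges, params["self"], params["neigh"])
    graph_embedding = readout(h)
    return {
        name: 1.0 / (1.0 + math.exp(-sum(w * x for w, x in zip(weights, graph_embedding))))
        for name, weights in params["heads"].items()
    }
```

Each extra round lets information travel one more hop, so after two rounds a node's embedding reflects events up to two relationships away. All heads share the same graph embedding, which is what makes the setup multi-task.
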
Continuing with the example, the explanation process allows us to filter out irrelevant behavior and identify only the fingerprint of the malicious activity, as emphasized by the bold text and red color in the following figure. We can see that the essence of the malicious activity is that process 2 downloads executable 1 from URL 1 and executes it, which results in process 4. The rest of the activity appears to be irrelevant and thus does not need to be included in the fingerprint, which helps us dramatically reduce the resources needed for detection and recovery. Such analysis and optimization have provided very valuable insights at Avast, including improved protection against previously unknown bootloaders used by the MyKings malware.

Graph-based threat analysis is an integral part of Avast threat protection that leverages recent advances in GNNs. With this novel approach, we have introduced an additional layer of threat analysis explainability using deep neural networks, which helps increase the protection that we provide to our more than 435 million users.
